Getting Started

Thank you for your interest in contributing to Rust! There are many ways to contribute, and we appreciate all of them.

If this is your first time contributing, the walkthrough chapter can give you a good example of how a typical contribution would go.

This documentation is not intended to be comprehensive; it is meant to be a quick guide for the most useful things. For more information, see this chapter on how to build and run the compiler.

Asking Questions

If you have questions, please make a post on the Rust Zulip server or

internals.rust-lang.org. If you are contributing to Rustup, be aware they are not on

Zulip - you can ask questions in #wg-rustup on Discord.

See the list of teams and working groups and the Community page on the

official website for more resources.

As a reminder, all contributors are expected to follow our Code of Conduct.

The compiler team (or t-compiler) usually hangs out in Zulip in this

"stream"; it will be easiest to get questions answered there.

Please ask questions! A lot of people report feeling that they are "wasting

expert time", but nobody on t-compiler feels this way. Contributors are

important to us.

Also, if you feel comfortable, prefer public topics, as this means others can see the questions and answers, and perhaps even integrate them back into this guide :)

Experts

Not all t-compiler members are experts on all parts of rustc; it's a

pretty large project. To find out who could have some expertise on

different parts of the compiler, consult triagebot assign groups.

These are the sections that start with [assign* in the triagebot.toml file.

But also, feel free to ask questions even if you can't figure out who to ping.

Another way to find experts for a given part of the compiler is to see who has made recent commits.

For example, to find people who have recently worked on name resolution since the 1.68.2 release,

you could run git shortlog -n 1.68.2.. compiler/rustc_resolve/. Ignore any commits starting with

"Rollup merge" or commits by @bors (see CI contribution procedures for

more information about these commits).
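As a rough sketch of the approach above, the same `git shortlog` invocation can be wrapped in a small shell function. The name `recent_authors` and its two arguments are hypothetical and exist only for this illustration. The `-s` and `--no-merges` flags are standard `git shortlog` options; skipping merge commits should drop most "Rollup merge" entries, but it is still worth skimming the output for @bors.

```shell
# Hypothetical helper: list authors of non-merge commits that touched a
# path since a given tag or commit, most active first.
recent_authors() {
    since="$1"   # e.g. 1.68.2
    path="$2"    # e.g. compiler/rustc_resolve/
    # -n sorts by commit count, -s prints only counts and names,
    # --no-merges skips merge commits.
    git shortlog -n -s --no-merges "$since.." -- "$path"
}

# Usage, from the root of a rust-lang/rust checkout:
#   recent_authors 1.68.2 compiler/rustc_resolve/
```

The output is only a starting point: a high commit count does not necessarily mean the person is the best one to ask.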

Etiquette

We do ask that you be mindful to include as much useful information as you can in your question, but we recognize this can be hard if you are unfamiliar with contributing to Rust.

Just pinging someone without providing any context can be a bit annoying and

just create noise, so we ask that you be mindful of the fact that the

t-compiler folks get a lot of pings in a day.

What should I work on?

The Rust project is quite large and it can be difficult to know which parts of the project need help, or are a good starting place for beginners. Here are some suggested starting places.

Easy or mentored issues

If you're looking for somewhere to start, check out the following issue search. See the Triage page for an explanation of these labels. You can also try filtering the search to areas you're interested in. For example:

- repo:rust-lang/rust-clippy will only show clippy issues

- label:T-compiler will only show issues related to the compiler

- label:A-diagnostics will only show diagnostic issues

Not all important or beginner work has issue labels. See below for how to find work that isn't labelled.

Recurring work

Some work is too large to be done by a single person. In this case, it's common to have "Tracking issues" to co-ordinate the work between contributors. Here are some example tracking issues where it's easy to pick up work without a large time commitment:

- Rustdoc Askama Migration

- Diagnostic Translation

- Move UI tests to subdirectories

- Port run-make tests from Make to Rust

If you find more recurring work, please feel free to add it here!

Clippy issues

The Clippy project has spent a long time making its contribution process as friendly to newcomers as possible. Consider working on it first to get familiar with the process and the compiler internals.

See the Clippy contribution guide for instructions on getting started.

Diagnostic issues

Many diagnostic issues are self-contained and don't need detailed background knowledge of the compiler. You can see a list of diagnostic issues here.

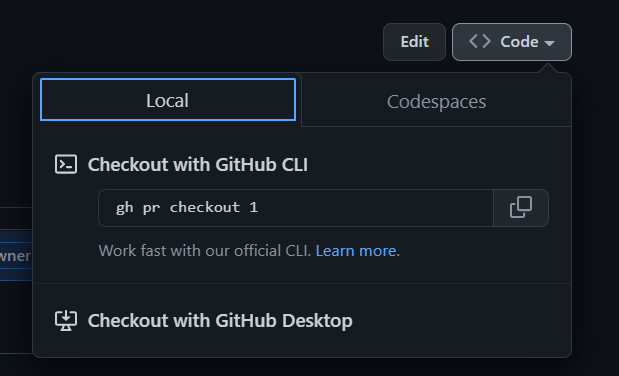

Picking up abandoned pull requests

Sometimes, contributors send a pull request, but later find out that they don't have enough

time to work on it, or they simply are not interested in it anymore. Such PRs are often

eventually closed and they receive the S-inactive label. You could try to examine some of

these PRs and pick up the work. You can find the list of such PRs here.

If the PR has been implemented in some other way in the meantime, the S-inactive label

should be removed from it. If not, and it seems that there is still interest in the change,

you can try to rebase the pull request on top of the latest master branch and send a new

pull request, continuing the work on the feature.
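One possible way to get an abandoned PR's commits locally is sketched below. It relies on GitHub exposing each pull request under the ref `pull/<number>/head`. The function name `fetch_pr`, the remote name `upstream`, and the PR number `12345` are placeholders chosen for this example, not values from this guide.

```shell
# Hypothetical helper: fetch the head of pull request NUMBER from REMOTE
# into a new local branch named pr-NUMBER.
fetch_pr() {
    remote="$1"
    number="$2"
    git fetch "$remote" "pull/$number/head:pr-$number"
}

# Usage, assuming a remote named "upstream" that points at rust-lang/rust
# and a placeholder PR number 12345:
#   fetch_pr upstream 12345
#   git checkout pr-12345
#   git fetch upstream master
#   git rebase upstream/master
```

Fetching into a local branch keeps the original author's commits intact, so the new pull request can build on them.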

Contributing to std (standard library)

See std-dev-guide.

Contributing code to other Rust projects

There are a bunch of other projects that you can contribute to outside of the

rust-lang/rust repo, including cargo, miri, rustup, and many others.

These repos might have their own contributing guidelines and procedures. Many of them are owned by working groups. For more info, see the documentation in those repos' READMEs.

Other ways to contribute

There are a bunch of other ways you can contribute, especially if you don't

feel comfortable jumping straight into the large rust-lang/rust codebase.

The following tasks are doable without much background knowledge but are incredibly helpful:

- Cleanup crew: find minimal reproductions of ICEs, bisect regressions, etc. This is a way of helping that saves a ton of time for others to fix an error later.

- Writing documentation: if you are feeling a bit more intrepid, you could try to read a part of the code and write doc comments for it. This will help you to learn some part of the compiler while also producing a useful artifact!

- Triaging issues: categorizing, replicating, and minimizing issues is very helpful to the Rust maintainers.

- Working groups: there are a bunch of working groups on a wide variety of Rust-related things.

- Answer questions in the Get Help! channels on the Rust Discord server, on users.rust-lang.org, or on Stack Overflow.

- Participate in the RFC process.

- Find a requested community library, build it, and publish it to Crates.io. Easier said than done, but very, very valuable!

Cloning and Building

See "How to build and run the compiler".

Contributor Procedures

This section has moved to the "Contribution Procedures" chapter.

Other Resources

This section has moved to the "About this guide" chapter.

About this guide

This guide is meant to help document how rustc – the Rust compiler – works, as well as to help new contributors get involved in rustc development.

There are seven parts to this guide:

- Building rustc: Contains information that should be useful no matter how you are contributing, about building, debugging, profiling, etc.

- Contributing to rustc: Contains information that should be useful no matter how you are contributing, about procedures for contribution, using git and GitHub, stabilizing features, etc.

- High-Level Compiler Architecture: Discusses the high-level architecture of the compiler and stages of the compile process.

- Source Code Representation: Describes the process of taking raw source code from the user and transforming it into various forms that the compiler can work with easily.

- Analysis: discusses the analyses that the compiler uses to check various properties of the code and inform later stages of the compile process (e.g., type checking).

- From MIR to Binaries: How linked executable machine code is generated.

- Appendices at the end with useful reference information. There are a few of these with different information, including a glossary.

Constant change

Keep in mind that rustc is a real production-quality product,

being worked upon continuously by a sizeable set of contributors.

As such, it has its fair share of codebase churn and technical debt.

In addition, many of the ideas discussed throughout this guide are idealized designs

that are not fully realized yet.

All this makes keeping this guide completely up to date on everything very hard!

The Guide itself is of course open-source as well, and the sources can be found at the GitHub repository. If you find any mistakes in the guide, please file an issue about it. Even better, open a PR with a correction!

If you do contribute to the guide, please see the corresponding subsection on writing documentation in this guide.

“‘All conditioned things are impermanent’ — when one sees this with wisdom, one turns away from suffering.” The Dhammapada, verse 277

Other places to find information

You might also find the following sites useful:

- This guide contains information about how various parts of the compiler work and how to contribute to the compiler.

- rustc API docs -- rustdoc documentation for the compiler, devtools, and internal tools

- Forge -- contains documentation about Rust infrastructure, team procedures, and more

- compiler-team -- the home-base for the Rust compiler team, with description of the team procedures, active working groups, and the team calendar.

- std-dev-guide -- a similar guide for developing the standard library.

- The t-compiler Zulip

- #contribute and #wg-rustup on Discord.

- The Rust Internals forum, a place to ask questions and discuss Rust's internals

- The Rust reference, even though it doesn't specifically talk about Rust's internals, is a great resource nonetheless

- Although out of date, Tom Lee's great blog article is very helpful

- rustaceans.org is helpful, but mostly dedicated to IRC

- The Rust Compiler Testing Docs

- For @bors, this cheat sheet is helpful

- Google is always helpful when programming. You can search all Rust documentation (the standard library, the compiler, the books, the references, and the guides) to quickly find information about the language and compiler.

- You can also use Rustdoc's built-in search feature to find documentation on types and functions within the crates you're looking at. You can also search by type signature! For example, searching for * -> vec should find all functions that return a Vec<T>. Hint: Find more tips and keyboard shortcuts by typing ? on any Rustdoc page!

How to build and run the compiler

- Quick Start

- Get the source code

- What is x.py?

- Create a config.toml

- Common x commands

- Creating a rustup toolchain

- Building targets for cross-compilation

- Other x commands

- Remarks on disk space

The compiler is built using a tool called x.py. You will need to

have Python installed to run it.

Quick Start

For a less in-depth quick-start of getting the compiler running, see quickstart.

Get the source code

The main repository is rust-lang/rust. This contains the compiler,

the standard library (including core, alloc, test, proc_macro, etc),

and a bunch of tools (e.g. rustdoc, the bootstrapping infrastructure, etc).

The very first step to work on rustc is to clone the repository:

git clone https://github.com/rust-lang/rust.git

cd rust

Partial clone the repository

Due to the size of the repository, cloning on a slower internet connection can take a long time, and requires disk space to store the full history of every file and directory. Instead, it is possible to tell git to perform a partial clone, which will only fully retrieve the current file contents, but will automatically retrieve further file contents when you, e.g., jump back in the history. All git commands will continue to work as usual, at the price of requiring an internet connection to visit not-yet-loaded points in history.

git clone --filter='blob:none' https://github.com/rust-lang/rust.git

cd rust

NOTE: This link describes this type of checkout in more detail, and also compares it to other modes, such as shallow cloning.

Shallow clone the repository

An older alternative to partial clones is to shallow clone the repository instead.

To do so, you can use the --depth N option with the git clone command.

This instructs git to perform a "shallow clone", cloning the repository but truncating it to

the last N commits.

Passing --depth 1 tells git to clone the repository but truncate the history to the latest

commit that is on the master branch, which is usually fine for browsing the source code or

building the compiler.

git clone --depth 1 https://github.com/rust-lang/rust.git

cd rust

NOTE: A shallow clone limits which git commands can be run. If you intend to work on and contribute to the compiler, it is generally recommended to fully clone the repository as shown above, or to perform a partial clone instead.

For example, git bisect and git blame require access to the commit history, so they don't work if the repository was cloned with --depth 1.
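If you are not sure whether an existing checkout is shallow, git can tell you. The sketch below wraps that check in a function; the name `is_shallow_clone` is hypothetical. It assumes git 2.15 or later, which is when `git rev-parse --is-shallow-repository` became available.

```shell
# Hypothetical helper: succeed if the checkout in the current directory
# is a shallow clone, fail otherwise.
is_shallow_clone() {
    # git prints "true" or "false" (git 2.15 and later).
    [ "$(git rev-parse --is-shallow-repository)" = "true" ]
}

# Usage, inside a checkout:
#   if is_shallow_clone; then
#       git fetch --unshallow   # download the rest of the history
#   fi
```

`git fetch --unshallow` turns a shallow clone into a full one in place, which is usually cheaper than cloning again from scratch.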

What is x.py?

x.py is the build tool for the rust repository. It can build docs, run tests, and compile the

compiler and standard library.

This chapter focuses on the basics to be productive, but

if you want to learn more about x.py, read this chapter.

Also, using x rather than x.py is recommended as:

./x is the most likely to work on every system (on Unix it runs the shell script that does python version detection, on Windows it will probably run the powershell script - certainly less likely to break than ./x.py which often just opens the file in an editor). [1]

(You can find the platform-specific scripts next to x.py, such as x.ps1.)

Notice that this is not absolute. For instance, using Nushell in VSCode on Win10,

typing x or ./x still opens x.py in an editor rather than invoking the program. :)

In the rest of this guide, we use x rather than x.py directly. The following

command:

./x check

could be replaced by:

./x.py check

Running x.py

The x.py command can be run directly on most Unix systems in the following format:

./x <subcommand> [flags]

This is how the documentation and examples assume you are running x.py.

Some alternative ways are:

# On a Unix shell if you don't have the necessary `python3` command

./x <subcommand> [flags]

# In Windows Powershell (if powershell is configured to run scripts)

./x <subcommand> [flags]

./x.ps1 <subcommand> [flags]

# On the Windows Command Prompt (if .py files are configured to run Python)

x.py <subcommand> [flags]

# You can also run Python yourself, e.g.:

python x.py <subcommand> [flags]

On Windows, the Powershell commands may give you an error that looks like this:

PS C:\Users\vboxuser\rust> ./x

./x : File C:\Users\vboxuser\rust\x.ps1 cannot be loaded because running scripts is disabled on this system. For more

information, see about_Execution_Policies at https:/go.microsoft.com/fwlink/?LinkID=135170.

At line:1 char:1

+ ./x

+ ~~~

+ CategoryInfo : SecurityError: (:) [], PSSecurityException

+ FullyQualifiedErrorId : UnauthorizedAccess

You can avoid this error by allowing powershell to run local scripts:

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

Running x.py slightly more conveniently

There is a binary that wraps x.py called x in src/tools/x. All it does is

run x.py, but it can be installed system-wide and run from any subdirectory

of a checkout. It also looks up the appropriate version of python to use.

You can install it with cargo install --path src/tools/x.

To clarify, this is a separate, globally installed binary. It is similar to the ./x script described in the section What is x.py?, but it runs x.py as an independent process rather than calling the shell to run the platform-specific scripts.

Create a config.toml

To start, run ./x setup and select the compiler defaults. This will do some initialization

and create a config.toml for you with reasonable defaults. If you use a different default (which

you'll likely want to do if you want to contribute to an area of rust other than the compiler, such

as rustdoc), make sure to read information about that default (located in src/bootstrap/defaults)

as the build process may be different for other defaults.

Alternatively, you can write config.toml by hand. See config.example.toml for all the available

settings and explanations of them. See src/bootstrap/defaults for common settings to change.

If you have already built rustc and you change settings related to LLVM, then you may have to

execute rm -rf build for subsequent configuration changes to take effect. Note that ./x clean will not cause a rebuild of LLVM.

Common x commands

Here are the basic invocations of the x commands most commonly used when

working on rustc, std, rustdoc, and other tools.

| Command | When to use it |
|---|---|
| ./x check | Quick check to see if most things compile; rust-analyzer can run this automatically for you |
| ./x build | Builds rustc, std, and rustdoc |
| ./x test | Runs all tests |
| ./x fmt | Formats all code |

As written, these commands are reasonable starting points. However, there are

additional options and arguments for each of them that are worth learning for

serious development work. In particular, ./x build and ./x test

provide many ways to compile or test a subset of the code, which can save a lot

of time.

Also, note that x supports all kinds of path suffixes for compiler, library,

and src/tools directories. So, you can simply run x test tidy instead of

x test src/tools/tidy. Or, x build std instead of x build library/std.

See the chapters on testing and rustdoc for more details.

Building the compiler

Note that building will require a relatively large amount of storage space. You may want to have upwards of 10 or 15 gigabytes available to build the compiler.

Once you've created a config.toml, you are now ready to run

x. There are a lot of options here, but let's start with what is

probably the best "go to" command for building a local compiler:

./x build library

This may look like it only builds the standard library, but that is not the case. What this command does is the following:

- Build std using the stage0 compiler

- Build rustc using the stage0 compiler

  - This produces the stage1 compiler

- Build std using the stage1 compiler

This final product (stage1 compiler + libs built using that compiler)

is what you need to build other Rust programs (unless you use #![no_std] or

#![no_core]).

You will probably find that building the stage1 std is a bottleneck for you,

but fear not, there is a (hacky) workaround...

see the section on avoiding rebuilds for std.

Sometimes you don't need a full build. When doing some kind of

"type-based refactoring", like renaming a method, or changing the

signature of some function, you can use ./x check instead for a much faster build.

Note that this whole command just gives you a subset of the full rustc

build. The full rustc build (what you get with ./x build --stage 2 compiler/rustc) has quite a few more steps:

- Build rustc with the stage1 compiler.

  - The resulting compiler here is called the "stage2" compiler.

- Build std with stage2 compiler.

- Build librustdoc and a bunch of other things with the stage2 compiler.

You almost never need to do this.

Build specific components

If you are working on the standard library, you probably don't need to build the compiler unless you are planning to use a recently added nightly feature. Instead, you can just build using the bootstrap compiler.

./x build --stage 0 library

If you choose the library profile when running x setup, you can omit --stage 0 (it's the

default).

Creating a rustup toolchain

Once you have successfully built rustc, you will have created a bunch

of files in your build directory. In order to actually run the

resulting rustc, we recommend creating rustup toolchains. The first

one will run the stage1 compiler (which we built above). The second

will execute the stage2 compiler (which we did not build, but which

you will likely need to build at some point; for example, if you want

to run the entire test suite).

rustup toolchain link stage0 build/host/stage0-sysroot # beta compiler + stage0 std

rustup toolchain link stage1 build/host/stage1

rustup toolchain link stage2 build/host/stage2

Now you can run the rustc you built. If you run with -vV, you

should see a version number ending in -dev, indicating a build from

your local environment:

$ rustc +stage1 -vV

rustc 1.48.0-dev

binary: rustc

commit-hash: unknown

commit-date: unknown

host: x86_64-unknown-linux-gnu

release: 1.48.0-dev

LLVM version: 11.0

The rustup toolchain points to the specified toolchain compiled in your build directory,

so the rustup toolchain will be updated whenever x build or x test are run for

that toolchain/stage.

Note: the toolchain we've built does not include cargo. In this case, rustup will

fall back to using cargo from the installed nightly, beta, or stable toolchain

(in that order). If you need to use unstable cargo flags, be sure to run

rustup install nightly if you haven't already. See the

rustup documentation on custom toolchains.

Note: rust-analyzer and IntelliJ Rust plugin use a component called

rust-analyzer-proc-macro-srv to work with proc macros. If you intend to use a

custom toolchain for a project (e.g. via rustup override set stage1) you may

want to build this component:

./x build proc-macro-srv-cli

Building targets for cross-compilation

To produce a compiler that can cross-compile for other targets,

pass any number of target flags to x build.

For example, if your host platform is x86_64-unknown-linux-gnu

and your cross-compilation target is wasm32-wasip1, you can build with:

./x build --target x86_64-unknown-linux-gnu,wasm32-wasip1

Note that if you want the resulting compiler to be able to build crates that

involve proc macros or build scripts, you must be sure to explicitly build target support for the

host platform (in this case, x86_64-unknown-linux-gnu).

If you want to always build for other targets without needing to pass flags to x build,

you can configure this in the [build] section of your config.toml like so:

[build]

target = ["x86_64-unknown-linux-gnu", "wasm32-wasip1"]

Note that building for some targets requires having external dependencies installed

(e.g. building musl targets requires a local copy of musl).

Any target-specific configuration (e.g. the path to a local copy of musl)

will need to be provided by your config.toml.

Please see config.example.toml for information on target-specific configuration keys.

For examples of the complete configuration necessary to build a target, please visit the rustc book, select any target under the "Platform Support" heading on the left, and see the section related to building a compiler for that target. For targets without a corresponding page in the rustc book, it may be useful to inspect the Dockerfiles that the Rust infrastructure itself uses to set up and configure cross-compilation.

If you have followed the directions from the prior section on creating a rustup toolchain, then once you have built your compiler you will be able to use it to cross-compile like so:

cargo +stage1 build --target wasm32-wasip1

Other x commands

Here are a few other useful x commands. We'll cover some of them in detail

in other sections:

- Building things:

  - ./x build - builds everything using the stage 1 compiler, not just up to std

  - ./x build --stage 2 - builds everything with the stage 2 compiler including rustdoc

- Running tests (see the section on running tests for more details):

  - ./x test library/std - runs the unit tests and integration tests from std

  - ./x test tests/ui - runs the ui test suite

  - ./x test tests/ui/const-generics - runs all the tests in the const-generics/ subdirectory of the ui test suite

  - ./x test tests/ui/const-generics/const-types.rs - runs the single test const-types.rs from the ui test suite

Cleaning out build directories

Sometimes you need to start fresh, but this is normally not the case. If you need to run this then bootstrap is most likely not acting right and you should file a bug as to what is going wrong. If you do need to clean everything up then you only need to run one command!

./x clean

rm -rf build works too, but then you have to rebuild LLVM, which can take

a long time even on fast computers.

Remarks on disk space

Building the compiler (especially if beyond stage 1) can require significant amounts of free disk

space, possibly around 100GB. This is compounded if you have a separate build directory for

rust-analyzer (e.g. build-rust-analyzer). This is easy to hit with dev-desktops which have a set

disk quota for each user, but this also applies to local development as well. Occasionally, you may need to:

- Remove build/ directory.

- Remove build-rust-analyzer/ directory (if you have a separate rust-analyzer build directory).

- Uninstall unnecessary toolchains if you use cargo-bisect-rustc. You can check which toolchains are installed with rustup toolchain list.
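Before removing anything, it can help to see how much space each build directory actually takes. The following is a minimal sketch using the standard `du` utility. The function name `build_dir_usage` is hypothetical, and the directory names are the ones mentioned above; adjust them if you chose a different name for the rust-analyzer build directory.

```shell
# Hypothetical helper: print the size of each build directory that exists
# under the current directory.
build_dir_usage() {
    for dir in build build-rust-analyzer; do
        if [ -d "$dir" ]; then
            # -s: one total per directory, -h: human-readable sizes
            du -sh "$dir"
        fi
    done
}

# Usage, from the root of the checkout:
#   build_dir_usage
#   rustup toolchain list
```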

[1] issue #1707

Quickstart

This is a quickstart guide about getting the compiler running. For more information on the individual steps, see the other pages in this chapter.

First, clone the repository:

git clone https://github.com/rust-lang/rust.git

cd rust

When building the compiler, we don't use cargo directly, instead we use a

wrapper called "x". It is invoked with ./x.

We need to create a configuration for the build. Use ./x setup to create a

good default.

./x setup

Then, we can build the compiler. Use ./x build to build the compiler, standard

library and a few tools. You can also ./x check to just check it. All these

commands can take specific components/paths as arguments, for example ./x check compiler to just check the compiler.

./x build

When doing a change to the compiler that does not affect the way it compiles the standard library (so for example, a change to an error message), use --keep-stage-std 1 to avoid recompiling it.

After building the compiler and standard library, you now have a working compiler toolchain. You can use it with rustup by linking it.

rustup toolchain link stage1 build/host/stage1

Now you have a toolchain called stage1 linked to your build. You can use it to

test the compiler.

rustc +stage1 testfile.rs

After doing a change, you can run the compiler test suite with ./x test.

./x test runs the full test suite, which is slow and rarely what you want.

Usually, ./x test tests/ui is what you want after a compiler change, testing

all UI tests that invoke the compiler on a specific test file

and check the output.

./x test tests/ui

Use --bless if you've made a change and want to update the .stderr files

with the new output.

./x suggest can also be helpful for suggesting which tests to run after a change.

Congrats, you are now ready to make a change to the compiler! If you have more questions, the full chapter might contain the answers, and if it doesn't, feel free to ask for help on Zulip.

If you use VSCode, Vim, Emacs or Helix, ./x setup will ask you if you want to

set up the editor config. For more information, check out suggested

workflows.

Prerequisites

Dependencies

See the rust-lang/rust INSTALL.

Hardware

You will need an internet connection to build. The bootstrapping process involves updating git submodules and downloading a beta compiler. It doesn't need to be super fast, but that can help.

There are no strict hardware requirements, but building the compiler is computationally expensive, so a beefier machine will help, and I wouldn't recommend trying to build on a Raspberry Pi! We recommend the following.

- 30GB+ of free disk space. Otherwise, you will have to keep clearing incremental caches. More space is better, the compiler is a bit of a hog; it's a problem we are aware of.

- 8GB+ RAM

- 2+ cores. Having more cores really helps. 10 or 20 or more is not too many!

Beefier machines will lead to much faster builds. If your machine is not very

powerful, a common strategy is to only use ./x check on your local machine

and let the CI build test your changes when you push to a PR branch.

Building the compiler takes more than half an hour on my moderately powerful laptop. We suggest downloading LLVM from CI so you don't have to build it from source (see here).

Like cargo, the build system will use as many cores as possible. Sometimes

this can cause you to run low on memory. You can use -j to adjust the number

of concurrent jobs. If a full build takes more than ~45 minutes to an hour, you

are probably spending most of the time swapping memory in and out; try using

-j1.
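Rather than going straight to -j1, you could pick a job count that fits your memory. The sketch below is only an illustration: the function name `pick_jobs` is hypothetical, and the budget of roughly 2 GB of RAM per job is an assumption made for this example, not a measured requirement of the build.

```shell
# Hypothetical helper: suggest a value for -j from the core count and
# the amount of RAM in GB. The "2 GB per job" budget is an assumption
# for illustration only.
pick_jobs() {
    cores="$1"
    mem_gb="$2"
    by_mem=$(( mem_gb / 2 ))
    # Never go below one job.
    if [ "$by_mem" -lt 1 ]; then
        by_mem=1
    fi
    # Use whichever limit is smaller.
    if [ "$by_mem" -lt "$cores" ]; then
        echo "$by_mem"
    else
        echo "$cores"
    fi
}

# Usage, on a machine with 8 cores and 8 GB of RAM:
#   ./x build -j "$(pick_jobs 8 8)"    # runs ./x build -j 4
```

If the build still swaps heavily with the suggested value, lower it further.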

If you don't have too much free disk space, you may want to turn off incremental compilation (see here). This will make compilation take longer (especially after a rebase), but will save a ton of space from the incremental caches.

Suggested Workflows

The full bootstrapping process takes quite a while. Here are some suggestions to make your life easier.

- Installing a pre-push hook

- Configuring rust-analyzer for rustc

- Check, check, and check again

- x suggest

- Configuring rustup to use nightly

- Faster builds with --keep-stage.

- Using incremental compilation

- Fine-tuning optimizations

- Working on multiple branches at the same time

- Using nix-shell

- Shell Completions

Installing a pre-push hook

CI will automatically fail your build if it doesn't pass tidy, our internal

tool for ensuring code quality. If you'd like, you can install a Git

hook that will

automatically run ./x test tidy on each push, to ensure your code is up to

par. If the hook fails then run ./x test tidy --bless and commit the changes.

If you decide later that the pre-push behavior is undesirable, you can delete

the pre-push file in .git/hooks.

A prebuilt git hook lives at src/etc/pre-push.sh. It can be copied into

your .git/hooks folder as pre-push (without the .sh extension!).

You can also install the hook as a step of running ./x setup!
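If you prefer to copy the hook by hand, the manual steps might look like the sketch below. The function name `install_pre_push_hook` is hypothetical. It assumes a regular checkout where `.git` is a directory; in a git worktree the hooks directory lives elsewhere.

```shell
# Hypothetical helper: copy the prebuilt hook into place.
# Takes the root of the checkout as its only argument.
install_pre_push_hook() {
    root="$1"
    # The hook must be named "pre-push", without the .sh extension.
    cp "$root/src/etc/pre-push.sh" "$root/.git/hooks/pre-push" || return 1
    # Git only runs hooks that are executable.
    chmod +x "$root/.git/hooks/pre-push"
}

# Usage, from the root of the checkout:
#   install_pre_push_hook .
```

To undo this later, delete `.git/hooks/pre-push`.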

Configuring rust-analyzer for rustc

Project-local rust-analyzer setup

rust-analyzer can help you check and format your code whenever you save a

file. By default, rust-analyzer runs the cargo check and rustfmt commands,

but you can override these commands to use more adapted versions of these tools

when hacking on rustc. With custom setup, rust-analyzer can use ./x check

to check the sources, and the stage 0 rustfmt to format them.

The default rust-analyzer.check.overrideCommand command line will check all

the crates and tools in the repository. If you are working on a specific part,

you can override the command to only check the part you are working on to save

checking time. For example, if you are working on the compiler, you can override

the command to x check compiler --json-output to only check the compiler part.

You can run x check --help --verbose to see the available parts.

Running ./x setup editor will prompt you to create a project-local LSP config

file for one of the supported editors. You can also create the config file as a

step of running ./x setup.

Using a separate build directory for rust-analyzer

By default, when rust-analyzer runs a check or format command, it will share the same build directory as manual command-line builds. This can be inconvenient for two reasons:

- Each build will lock the build directory and force the other to wait, so it becomes impossible to run command-line builds while rust-analyzer is running commands in the background.

- There is an increased risk of one of the builds deleting previously-built artifacts due to conflicting compiler flags or other settings, forcing additional rebuilds in some cases.

To avoid these problems:

- Add --build-dir=build-rust-analyzer to all of the custom x commands in your editor's rust-analyzer configuration. (Feel free to choose a different directory name if desired.)

- Modify the rust-analyzer.procMacro.server setting so that it points to the copy of rust-analyzer-proc-macro-srv in that other build directory.

Using separate build directories for command-line builds and rust-analyzer

requires extra disk space, and also means that running ./x clean on the

command-line will not clean out the separate build directory. To clean the

separate build directory, run ./x clean --build-dir=build-rust-analyzer

instead.
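As a rough sketch, the commands for the separate build directory can be wrapped in small shell helpers so that the directory name is spelled only once. The helper names ra_check and ra_clean and the RA_BUILD_DIR variable are made up for this example; only the x subcommands and the --build-dir flag come from the text above.

```shell
# Hypothetical helpers; run them from the root of the rust checkout.
RA_BUILD_DIR=build-rust-analyzer

# The same kind of check rust-analyzer would run, in the separate build directory.
ra_check() {
    ./x check compiler --json-output --build-dir="$RA_BUILD_DIR" "$@"
}

# ./x clean alone leaves this directory untouched, so clean it explicitly.
ra_clean() {
    ./x clean --build-dir="$RA_BUILD_DIR"
}
```

Keeping the directory name in one variable makes it harder for the editor configuration and the clean-up command to drift apart.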

Visual Studio Code

Selecting vscode in ./x setup editor will prompt you to create a

.vscode/settings.json file which will configure Visual Studio Code. The

recommended rust-analyzer settings live at

src/etc/rust_analyzer_settings.json.

If running ./x check on save is inconvenient, in VS Code you can use a Build

Task instead:

// .vscode/tasks.json

{

"version": "2.0.0",

"tasks": [

{

"label": "./x check",

"command": "./x check",

"type": "shell",

"problemMatcher": "$rustc",

"presentation": { "clear": true },

"group": { "kind": "build", "isDefault": true }

}

]

}

Neovim

For Neovim users there are several options for configuring for rustc. The easiest way is by using neoconf.nvim, which allows for project-local configuration files with the native LSP. The steps for how to use it are below. Note that they require rust-analyzer to already be configured with Neovim. Steps for this can be found here.

- First install the plugin. This can be done by following the steps in the README.

- Run ./x setup editor, and select vscode to create a .vscode/settings.json file. neoconf is able to read and update rust-analyzer settings automatically when the project is opened when this file is detected.

If you're using coc.nvim, you can run ./x setup editor and select vim to

create a .vim/coc-settings.json. The settings can be edited with

:CocLocalConfig. The recommended settings live at

src/etc/rust_analyzer_settings.json.

Another way is to skip the plugin and write your own logic in your configuration. To do this you must translate the JSON to Lua yourself. The translation is 1:1 and fairly straightforward. It must be put in the ["rust-analyzer"] key of the setup table, which is shown here.

If you would like to use the build task that is described above, you may either make your own command in your config, or you can install a plugin such as overseer.nvim that can read VSCode's tasks.json files, and follow the same instructions as above.

Emacs

Emacs provides support for rust-analyzer with project-local configuration

through Eglot.

Steps for setting up Eglot with rust-analyzer can be found

here.

Having set up Emacs & Eglot for Rust development in general, you can run

./x setup editor and select emacs, which will prompt you to create

.dir-locals.el with the recommended configuration for Eglot.

The recommended settings live at src/etc/rust_analyzer_eglot.el.

For more information on project-specific Eglot configuration, consult the

manual.

Helix

Helix comes with built-in LSP and rust-analyzer support.

It can be configured through languages.toml, as described

here.

You can run ./x setup editor and select helix, which will prompt you to

create languages.toml with the recommended configuration for Helix. The

recommended settings live at src/etc/rust_analyzer_helix.toml.

Check, check, and check again

When doing simple refactoring, it can be useful to run ./x check

continuously. If you set up rust-analyzer as described above, this will be

done for you every time you save a file. Here you are just checking that the

compiler can build, but often that is all you need (e.g., when renaming a

method). You can then run ./x build when you actually need to run tests.

In fact, it is sometimes useful to put off tests even when you are not 100% sure

the code will work. You can then keep building up refactoring commits and only

run the tests at some later time. You can then use git bisect to track down

precisely which commit caused the problem. A nice side-effect of this style

is that you are left with a fairly fine-grained set of commits at the end, all

of which build and pass tests. This often helps reviewing.
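The bisect step might look like the sketch below. The helper name bisect_since is hypothetical, and it assumes that HEAD is known to fail and that you pass a known-good revision plus the check you deferred (for example ./x test tests/ui).

```shell
# Hypothetical helper: find the first commit after a known-good revision
# for which a check command fails. HEAD is assumed to be bad.
bisect_since() {
    good_rev=$1
    shift
    git bisect start HEAD "$good_rev" >&2 || return 1
    # git bisect run treats exit status 0 as good and 1-127 (except 125) as bad.
    git bisect run "$@" >&2
    first_bad=$(git rev-parse --verify refs/bisect/bad)
    git bisect reset >&2
    printf '%s\n' "$first_bad"
}

# Example, from the root of the checkout:
#   bisect_since <good-rev> ./x test tests/ui
```

Progress output goes to stderr so that only the hash of the first bad commit is printed on stdout.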

x suggest

The x suggest subcommand suggests (and runs) a subset of the extensive

rust-lang/rust tests based on files you have changed. This is especially

useful for new contributors who have not mastered the arcane x flags yet and

more experienced contributors as a shorthand for reducing mental effort. In all

cases it is useful not to run the full tests (which can take on the order of

tens of minutes) and just run a subset which are relevant to your changes. For

example, running tidy and linkchecker is useful when editing Markdown files,

whereas UI tests are much less likely to be helpful. While x suggest is a

useful tool, it does not guarantee perfect coverage (just as PR CI isn't a

substitute for bors). See the dedicated chapter for

more information and contribution instructions.

Please note that x suggest is in a beta state currently and the tests that it

will suggest are limited.

Configuring rustup to use nightly

Some parts of the bootstrap process use pinned, nightly versions of tools like

rustfmt. To make things like cargo fmt work correctly in your repo, run

cd <path to rustc repo>

rustup override set nightly

after installing a nightly toolchain with rustup. Don't forget to do this

for all directories you have set up a worktree for. You may need to use the

pinned nightly version from src/stage0, but often the normal nightly channel

will work.

Note: See the section on vscode for how to configure it with the real rustfmt that x uses, and the section on rustup for how to set up a rustup toolchain for your bootstrapped compiler.

Note: This does not allow you to build rustc with cargo directly. You still have to use x to work on the compiler or standard library; this just lets you use cargo fmt.
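If you keep several worktrees, applying the override to each of them could be scripted roughly as follows. The function names are made up for this example, and the sketch assumes that rustup and a nightly toolchain are already installed.

```shell
# Print the path of every working tree of the current repository, one per line.
worktree_dirs() {
    git worktree list --porcelain | sed -n 's/^worktree //p'
}

# Hypothetical helper: apply the nightly override to each working tree.
override_all_worktrees() {
    worktree_dirs | while IFS= read -r dir; do
        rustup override set nightly --path "$dir"
    done
}
```

Run override_all_worktrees from inside any of the worktrees; git reports all of them regardless of which one you are in.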

Faster builds with --keep-stage

Sometimes just checking whether the compiler builds is not enough. A common

example is that you need to add a debug! statement to inspect the value of

some state or better understand the problem. In that case, you don't really need

a full build. By bypassing bootstrap's cache invalidation, you can often get

these builds to complete very fast (e.g., around 30 seconds). The only catch is

this requires a bit of fudging and may produce compilers that don't work (but

that is easily detected and fixed).

The sequence of commands you want is as follows:

- Initial build: ./x build library

  - As documented previously, this will build a functional stage1 compiler as part of running all stage0 commands (which include building a std compatible with the stage1 compiler) as well as the first few steps of the "stage 1 actions" up to "stage1 (sysroot stage1) builds std".

- Subsequent builds: ./x build library --keep-stage 1

  - Note that we added the --keep-stage 1 flag here

As mentioned, the effect of --keep-stage 1 is that we just assume that the

old standard library can be re-used. If you are editing the compiler, this is

almost always true: you haven't changed the standard library, after all. But

sometimes, it's not true: for example, if you are editing the "metadata" part of

the compiler, which controls how the compiler encodes types and other states

into the rlib files, or if you are editing things that wind up in the metadata

(such as the definition of the MIR).

The TL;DR is that you might get weird behavior from a compile when using

--keep-stage 1 -- for example, strange ICEs

or other panics. In that case, you should simply remove the --keep-stage 1

from the command and rebuild. That ought to fix the problem.

You can also use --keep-stage 1 when running tests. Something like this:

- Initial test run: ./x test tests/ui

- Subsequent test run: ./x test tests/ui --keep-stage 1

Using incremental compilation

You can further enable the --incremental flag to save additional time in

subsequent rebuilds:

./x test tests/ui --incremental --test-args issue-1234

If you don't want to include the flag with every command, you can enable it in

the config.toml:

[rust]

incremental = true

Note that incremental compilation will use more disk space than usual. If disk

space is a concern for you, you might want to check the size of the build

directory from time to time.

Fine-tuning optimizations

Setting optimize = false makes the compiler too slow for tests. However, to

improve the test cycle, you can disable optimizations selectively only for the

crates you'll have to rebuild

(source).

For example, when working on rustc_mir_build, the rustc_mir_build and

rustc_driver crates take the most time to incrementally rebuild. You could

therefore set the following in the root Cargo.toml:

[profile.release.package.rustc_mir_build]

opt-level = 0

[profile.release.package.rustc_driver]

opt-level = 0

Working on multiple branches at the same time

Working on multiple branches in parallel can be a little annoying, since building the compiler on one branch will cause the old build and the incremental compilation cache to be overwritten. One solution would be to have multiple clones of the repository, but that would mean storing the Git metadata multiple times, and having to update each clone individually.

Fortunately, Git has a better solution called worktrees. This lets you create multiple "working trees", which all share the same Git database. Moreover, because all of the worktrees share the same object database, if you update a branch (e.g. master) in any of them, you can use the new commits from any of the worktrees. One caveat, though, is that submodules do not get shared. They will still be cloned multiple times.

Given you are inside the root directory for your Rust repository, you can create a "linked working tree" in a new "rust2" directory by running the following command:

git worktree add ../rust2

Creating a new worktree for a new branch based on master looks like:

git worktree add -b my-feature ../rust2 master

You can then use that rust2 folder as a separate workspace for modifying and

building rustc!
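Once a branch is finished, the linked worktree can be removed again. The sketch below uses a hypothetical helper name, remove_worktree. Git refuses to remove working trees that are unclean or contain submodules unless --force is given, so in a rust checkout you will likely need to pass that flag through.

```shell
# Hypothetical helper: remove a linked working tree once its branch is done.
# The branch itself is kept; only the directory and its bookkeeping go away.
# Extra arguments (such as --force) are passed on to git.
remove_worktree() {
    git worktree remove "$@" && git worktree prune
}

# Example, run from the main checkout:
#   git worktree list                  # shows the main tree and ../rust2
#   remove_worktree --force ../rust2
```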

Using nix-shell

If you're using nix, you can use the following nix-shell to work on Rust:

{ pkgs ? import <nixpkgs> {} }:

pkgs.mkShell {

name = "rustc";

nativeBuildInputs = with pkgs; [

binutils cmake ninja pkg-config python3 git curl cacert patchelf nix

];

buildInputs = with pkgs; [

openssl glibc.out glibc.static

];

# Avoid creating text files for ICEs.

RUSTC_ICE = "0";

# Provide `libstdc++.so.6` for the self-contained lld.

LD_LIBRARY_PATH = "${with pkgs; lib.makeLibraryPath [

stdenv.cc.cc.lib

]}";

}

Note that when using nix on a non-NixOS distribution, it may be necessary to set

patch-binaries-for-nix = true in config.toml. Bootstrap tries to detect

whether it's running in nix and enable patching automatically, but this

detection can have false negatives.

You can also use your nix shell to manage config.toml:

let

config = pkgs.writeText "rustc-config" ''

# Your config.toml content goes here

'';

in

pkgs.mkShell {

/* ... */

# This environment variable tells bootstrap where our config.toml is.

RUST_BOOTSTRAP_CONFIG = config;

}

Shell Completions

If you use Bash, Fish or PowerShell, you can find automatically-generated shell

completion scripts for x.py in

src/etc/completions.

Zsh support will also be included once issues with

clap_complete have been resolved.

You can use source ./src/etc/completions/x.py.<extension> to load completions

for your shell of choice, or & .\src\etc\completions\x.py.ps1 for PowerShell.

Adding this to your shell's startup script (e.g. .bashrc) will automatically

load this completion.
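A startup-script entry for Bash might look like the following sketch. RUST_CHECKOUT is a hypothetical location for your clone, and x.py.sh is assumed to be the name of the Bash script in src/etc/completions; check the directory for the actual file names.

```shell
# In ~/.bashrc. RUST_CHECKOUT is a placeholder for wherever your clone lives.
RUST_CHECKOUT="$HOME/rust"
x_completions="$RUST_CHECKOUT/src/etc/completions/x.py.sh"

# Only source the file if it exists, so the shell still starts cleanly
# after the checkout is moved or deleted.
if [ -f "$x_completions" ]; then
    . "$x_completions"
fi
```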

Build distribution artifacts

You might want to build and package up the compiler for distribution. You’ll want to run this command to do it:

./x dist

Install from source

You might want to prefer installing Rust (and tools configured in your configuration) by building from source. If so, you want to run this command:

./x install

Note: If you are testing out a modification to a compiler, you might

want to build the compiler (with ./x build) then create a toolchain as

discussed here.

For example, if the toolchain you created is called "foo", you would then

invoke it with rustc +foo ... (where ... represents the rest of the arguments).
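Registering such a toolchain could be sketched as below. The helper name link_local_toolchain is made up, and the sketch assumes the default build layout in which the stage N compiler lives in build/host/stageN.

```shell
# Hypothetical helper: register a locally built compiler with rustup.
# Usage: link_local_toolchain <name> [stage]   (stage defaults to 1)
link_local_toolchain() {
    name=$1
    stage=${2:-1}
    rustup toolchain link "$name" "build/host/stage$stage"
}

# Example, from the root of the checkout after ./x build:
#   link_local_toolchain foo
#   rustc +foo --version
```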

Instead of installing Rust (and tools in your config file) globally, you can set the DESTDIR

environment variable to change the installation path. If you want to set installation paths

more dynamically, you should prefer install options in your config file to achieve that.

Building documentation

This chapter describes how to build documentation of toolchain components, like the standard library (std) or the compiler (rustc).

- Document everything

  This uses rustdoc from the beta toolchain, so will produce (slightly) different output to stage 1 rustdoc, as rustdoc is under active development:

  ./x doc

  If you want to be sure the documentation looks the same as on CI:

  ./x doc --stage 1

  This ensures that (current) rustdoc gets built, then that is used to document the components.

- Much like running individual tests or building specific components, you can build just the documentation you want:

  ./x doc src/doc/book

  ./x doc src/doc/nomicon

  ./x doc compiler library

  See the nightly docs index page for a full list of books.

- Document internal rustc items

  Compiler documentation is not built by default. To create it by default with x doc, modify config.toml:

  [build]

  compiler-docs = true

  Note that when enabled, documentation for internal compiler items will also be built.

NOTE: The documentation for the compiler is found at this link.

Rustdoc overview

rustdoc lives in-tree with the

compiler and standard library. This chapter is about how it works.

For information about Rustdoc's features and how to use them, see

the Rustdoc book.

For more details about how rustdoc works, see the

"Rustdoc internals" chapter.

rustdoc uses rustc internals (and, of course, the standard library), so you

will have to build the compiler and std once before you can build rustdoc.

Rustdoc is implemented entirely within the crate librustdoc. It runs

the compiler up to the point where we have an internal representation of a

crate (HIR) and the ability to run some queries about the types of items. HIR

and queries are discussed in the linked chapters.

librustdoc performs two major steps after that to render a set of

documentation:

- "Clean" the AST into a form that's more suited to creating documentation (and slightly more resistant to churn in the compiler).

- Use this cleaned AST to render a crate's documentation, one page at a time.

Naturally, there's more than just this, and those descriptions simplify out lots of details, but that's the high-level overview.

(Side note: librustdoc is a library crate! The rustdoc binary is created

using the project in src/tools/rustdoc. Note that literally all that

does is call the main() that's in this crate's lib.rs, though.)

Cheat sheet

- Run ./x setup tools before getting started. This will configure x with nice settings for developing rustdoc and other tools, including downloading a copy of rustc rather than building it.

- Use ./x check rustdoc to quickly check for compile errors.

- Use ./x build library rustdoc to make a usable rustdoc you can run on other projects.

  - Add library/test to be able to use rustdoc --test.

  - Run rustup toolchain link stage2 build/host/stage2 to add a custom toolchain called stage2 to your rustup environment. After running that, cargo +stage2 doc in any directory will build with your locally-compiled rustdoc.

- Use ./x doc library to use this rustdoc to generate the standard library docs.

  - The completed docs will be available in build/host/doc (under core, alloc, and std).

  - If you want to copy those docs to a webserver, copy all of build/host/doc, since that's where the CSS, JS, fonts, and landing page are.

- Use ./x test tests/rustdoc* to run the tests using a stage1 rustdoc.

  - See Rustdoc internals for more information about tests.

Code structure

- All paths in this section are relative to src/librustdoc in the rust-lang/rust repository.

- Most of the HTML printing code is in html/format.rs and html/render/mod.rs. It's in a bunch of fmt::Display implementations and supplementary functions.

- The types that got Display impls above are defined in clean/mod.rs, right next to the custom Clean trait used to process them out of the rustc HIR.

- The bits specific to using rustdoc as a test harness are in doctest.rs.

- The Markdown renderer is loaded up in html/markdown.rs, including functions for extracting doctests from a given block of Markdown.

- The tests on the structure of rustdoc HTML output are located in tests/rustdoc, where they're handled by the test runner of bootstrap and the supplementary script src/etc/htmldocck.py.

Tests

- All paths in this section are relative to tests in the rust-lang/rust repository.

- Tests on search engine and index are located in rustdoc-js and rustdoc-js-std. The format is specified in the search guide.

- Tests on the "UI" of rustdoc (the terminal output it produces when run) are in rustdoc-ui.

- Tests on the "GUI" of rustdoc (the HTML, JS, and CSS as rendered in a browser) are in rustdoc-gui. These use a NodeJS tool called browser-UI-test that uses puppeteer to run tests in a headless browser and check rendering and interactivity.

Constraints

We try to make rustdoc work reasonably well with JavaScript disabled, and when browsing local files. We support these browsers.

Supporting local files (file:/// URLs) brings some surprising restrictions.

Certain browser features that require secure origins, like localStorage and

Service Workers, don't work reliably. We can still use such features but we

should make sure pages are still usable without them.

Multiple runs, same output directory

Rustdoc can be run multiple times for varying inputs, with its output set to the same directory. That's how cargo produces documentation for dependencies of the current crate. It can also be done manually if a user wants a big documentation bundle with all of the docs they care about.

HTML is generated independently for each crate, but there is some cross-crate information that we update as we add crates to the output directory:

- crates<SUFFIX>.js holds a list of all crates in the output directory.

- search-index<SUFFIX>.js holds a list of all searchable items.

- For each trait, there is a file under implementors/.../trait.TraitName.js containing a list of implementors of that trait. The implementors may be in different crates than the trait, and the JS file is updated as we discover new ones.
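Running rustdoc by hand against a shared output directory might look like the sketch below. The helper name doc_into and the input file names are hypothetical; -o is rustdoc's output directory option.

```shell
# Hypothetical helper: document several single-file crates into one directory.
# Usage: doc_into <out-dir> <crate.rs>...
doc_into() {
    out_dir=$1
    shift
    for src in "$@"; do
        # Each run writes its own crate's HTML and updates the shared
        # cross-crate files in the same directory.
        rustdoc "$src" -o "$out_dir" || return 1
    done
}

# Example:
#   doc_into doc first.rs second.rs
```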

Use cases

There are a few major use cases for rustdoc that you should keep in mind when working on it:

Standard library docs

These are published at https://doc.rust-lang.org/std as part of the Rust release process. Stable releases are also uploaded to specific versioned URLs like https://doc.rust-lang.org/1.57.0/std/. Beta and nightly docs are published to https://doc.rust-lang.org/beta/std/ and https://doc.rust-lang.org/nightly/std/. The docs are uploaded with the promote-release tool and served from S3 with CloudFront.

The standard library docs contain five crates: alloc, core, proc_macro, std, and test.

docs.rs

When crates are published to crates.io, docs.rs automatically builds and publishes their documentation, for instance at https://docs.rs/serde/latest/serde/. It always builds with the current nightly rustdoc, so any changes you land in rustdoc are "insta-stable" in that they will have an immediate public effect on docs.rs. Old documentation is not rebuilt, so you will see some variation in UI when browsing old releases in docs.rs. Crate authors can request rebuilds, which will be run with the latest rustdoc.

Docs.rs performs some transformations on rustdoc's output in order to save

storage and display a navigation bar at the top. In particular, certain static

files, like main.js and rustdoc.css, may be shared across multiple invocations

of the same version of rustdoc. Others, like crates.js and sidebar-items.js, are

different for different invocations. Still others, like fonts, will never

change. These categories are distinguished using the SharedResource enum in

src/librustdoc/html/render/write_shared.rs.

Documentation on docs.rs is always generated for a single crate at a time, so the search and sidebar functionality don't include dependencies of the current crate.

Locally generated docs

Crate authors can run cargo doc --open in crates they have checked

out locally to see the docs. This is useful to check that the docs they

are writing are useful and display correctly. It can also be useful for

people to view documentation on crates they aren't authors of, but want to

use. In both cases, people may use the --document-private-items Cargo flag to

see private methods, fields, and so on, which are normally not displayed.

By default cargo doc will generate documentation for a crate and all of its

dependencies. That can result in a very large documentation bundle, with a large

(and slow) search corpus. The Cargo flag --no-deps inhibits that behavior and

generates docs for just the crate.

Self-hosted project docs

Some projects like to host their own documentation. For example:

https://docs.serde.rs/. This is easy to do by locally generating docs, and

simply copying them to a web server. Rustdoc's HTML output can be extensively

customized by flags. Users can add a theme, set the default theme, and inject

arbitrary HTML. See rustdoc --help for details.
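A minimal sketch of that workflow follows. The helper name build_site_docs, the header.html snippet, the "ayu" theme choice, and the destination directory are placeholders; the flags are passed to rustdoc through Cargo's RUSTDOCFLAGS environment variable, and rustdoc --help remains the reference for what your version accepts.

```shell
# Hypothetical helper: build docs for the current crate only, customize them,
# and copy the result to a directory served by a web server.
# Usage: build_site_docs <destination-dir>
build_site_docs() {
    dest=$1
    # Assumes header.html sits in the current directory and that the path
    # contains no spaces, since RUSTDOCFLAGS is split on whitespace.
    RUSTDOCFLAGS="--default-theme ayu --html-in-header $PWD/header.html" \
        cargo doc --no-deps || return 1
    mkdir -p "$dest" && cp -R target/doc/. "$dest"
}
```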

Adding a new target

These are a set of steps to add support for a new target. There are numerous end states and paths to get there, so not all sections may be relevant to your desired goal.

- Specifying a new LLVM

- Creating a target specification

- Patching crates

- Cross-compiling

- Promoting a target from tier 2 (target) to tier 2 (host)

Specifying a new LLVM

For very new targets, you may need to use a different fork of LLVM

than what is currently shipped with Rust. In that case, navigate to

the src/llvm-project git submodule (you might need to run ./x check at least once so the submodule is updated), check out the

appropriate commit for your fork, then commit that new submodule

reference in the main Rust repository.

An example would be:

cd src/llvm-project

git remote add my-target-llvm some-llvm-repository

git checkout my-target-llvm/my-branch

cd ..

git add llvm-project

git commit -m 'Use my custom LLVM'

Using pre-built LLVM

If you have a local LLVM checkout that is already built, you may be able to configure Rust to treat your build as the system LLVM to avoid redundant builds.

You can tell Rust to use a pre-built version of LLVM using the target section

of config.toml:

[target.x86_64-unknown-linux-gnu]

llvm-config = "/path/to/llvm/llvm-7.0.1/bin/llvm-config"

If you are attempting to use a system LLVM, we have observed the following paths before, though they may be different from your system:

- /usr/bin/llvm-config-8

- /usr/lib/llvm-8/bin/llvm-config

Note that you need to have the LLVM FileCheck tool installed, which is used

for codegen tests. This tool is normally built with LLVM, but if you use your

own preinstalled LLVM, you will need to provide FileCheck in some other way.

On Debian-based systems, you can install the llvm-N-tools package (where N

is the LLVM version number, e.g. llvm-8-tools). Alternately, you can specify

the path to FileCheck with the llvm-filecheck config item in config.toml

or you can disable codegen tests with the codegen-tests item in config.toml.
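To see whether a given LLVM installation already ships FileCheck, a check along these lines may help. The helper name find_filecheck is hypothetical; it relies on llvm-config --bindir, which prints the directory containing the LLVM executables.

```shell
# Hypothetical helper: report whether FileCheck sits next to a given llvm-config.
# Usage: find_filecheck /path/to/llvm-config
find_filecheck() {
    bindir=$("$1" --bindir) || return 1
    if [ -x "$bindir/FileCheck" ]; then
        printf '%s\n' "$bindir/FileCheck"
    else
        echo "FileCheck not found in $bindir" >&2
        return 1
    fi
}
```

If the helper prints a path, that path is a candidate value for the llvm-filecheck config item mentioned above.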

Creating a target specification

You should start with a target JSON file. You can see the specification

for an existing target using --print target-spec-json:

rustc -Z unstable-options --target=wasm32-unknown-unknown --print target-spec-json

Save that JSON to a file and modify it as appropriate for your target.
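Saving the specification could be scripted as in the sketch below. The helper name dump_target_spec and the output file name are made up; the rustc invocation is the one shown above and needs a nightly compiler because of -Z unstable-options.

```shell
# Hypothetical helper: write the built-in spec of an existing target to a
# file that can then be edited for the new target.
# Usage: dump_target_spec <existing-target> <output-file>
dump_target_spec() {
    base_target=$1
    out_file=$2
    rustc -Z unstable-options --target="$base_target" --print target-spec-json > "$out_file"
}

# Example:
#   rustc --print target-list | grep wasm32      # pick a similar existing target
#   dump_target_spec wasm32-unknown-unknown my-target.json
```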

Adding a target specification

Once you have filled out a JSON specification and been able to compile somewhat successfully, you can copy the specification into the compiler itself.

You will need to add a line to the big table inside of the

supported_targets macro in the rustc_target::spec module. You

will then add a corresponding file for your new target containing a

target function.

Look for existing targets to use as examples.

After adding your target to the rustc_target crate you may want to add

core, std, ... with support for your new target. In that case you will

probably need access to some target_* cfg. Unfortunately when building with

stage0 (the beta compiler), you'll get an error that the target cfg is

unexpected because stage0 doesn't know about the new target specification and

we pass --check-cfg in order to tell it to check.

To fix the errors you will need to manually add the unexpected value to the Cargo.toml of each affected crate (library/{std,alloc,core}/Cargo.toml). Here is an example for adding NEW_TARGET_ARCH as target_arch:

library/std/Cargo.toml:

[lints.rust.unexpected_cfgs]

level = "warn"

check-cfg = [

'cfg(bootstrap)',

- 'cfg(target_arch, values("xtensa"))',

+ # #[cfg(bootstrap)] NEW_TARGET_ARCH

+ 'cfg(target_arch, values("xtensa", "NEW_TARGET_ARCH"))',

To use this target in bootstrap, we need to explicitly add the target triple to the STAGE0_MISSING_TARGETS list in src/bootstrap/src/core/sanity.rs. This is necessary because the default compiler that bootstrap uses does not recognize the new target we just added. Therefore, it should be added to STAGE0_MISSING_TARGETS so that bootstrap is aware that this target is not yet supported by the stage0 compiler.

const STAGE0_MISSING_TARGETS: &[&str] = &[

+ "NEW_TARGET_TRIPLE"

];

Patching crates

You may need to make changes to crates that the compiler depends on,

such as libc or cc. If so, you can use Cargo's

[patch] ability. For example, if you want to use an

unreleased version of libc, you can add it to the top-level

Cargo.toml file:

diff --git a/Cargo.toml b/Cargo.toml

index 1e83f05e0ca..4d0172071c1 100644

--- a/Cargo.toml

+++ b/Cargo.toml

@@ -113,6 +113,8 @@ cargo-util = { path = "src/tools/cargo/crates/cargo-util" }

[patch.crates-io]

+libc = { git = "https://github.com/rust-lang/libc", rev = "0bf7ce340699dcbacabdf5f16a242d2219a49ee0" }

# See comments in `src/tools/rustc-workspace-hack/README.md` for what's going on

# here

rustc-workspace-hack = { path = 'src/tools/rustc-workspace-hack' }

After this, run cargo update -p libc to update the lockfiles.

Beware that if you patch to a local path dependency, this will enable

warnings for that dependency. Some dependencies are not warning-free, and due

to the deny-warnings setting in config.toml, the build may suddenly start

to fail.

To work around warnings, you may want to:

- Modify the dependency to remove the warnings

- Or for local development purposes, suppress the warnings by setting deny-warnings = false in config.toml.

# config.toml

[rust]

deny-warnings = false

Cross-compiling

Once you have a target specification in JSON and in the code, you can

cross-compile rustc:

DESTDIR=/path/to/install/in \

./x install -i --stage 1 --host aarch64-apple-darwin.json --target aarch64-apple-darwin \

compiler/rustc library/std

If your target specification is already available in the bootstrap compiler, you can use it instead of the JSON file for both arguments.

Promoting a target from tier 2 (target) to tier 2 (host)

There are two levels of tier 2 targets:

a) Targets that are only cross-compiled (rustup target add)

b) Targets that have a native toolchain (rustup toolchain install)

For an example of promoting a target from cross-compiled to native, see #75914.

Optimized build of the compiler

There are multiple additional build configuration options and techniques that can be used to compile a

build of rustc that is as optimized as possible (for example when building rustc for a Linux

distribution). The status of these configuration options for various Rust targets is tracked here.

This page describes how you can use these approaches when building rustc yourself.

Link-time optimization

Link-time optimization is a powerful compiler technique that can increase program performance. To

enable (Thin-)LTO when building rustc, set the rust.lto config option to "thin"

in config.toml:

[rust]

lto = "thin"

Note that LTO for rustc is currently supported and tested only for the x86_64-unknown-linux-gnu target. Other targets may work, but no guarantees are provided. Notably, LTO-optimized rustc currently produces miscompilations on Windows.

Enabling LTO on Linux has produced speed-ups by up to 10%.

Memory allocator

Using a different memory allocator for rustc can provide significant performance benefits. If you

want to enable the jemalloc allocator, you can set the rust.jemalloc option to true

in config.toml:

[rust]

jemalloc = true

Note that this option is currently only supported for Linux and macOS targets.

Codegen units

Reducing the number of codegen units per rustc crate can produce a faster build of the compiler.

You can modify the number of codegen units for rustc and libstd in config.toml with the

following options:

[rust]

codegen-units = 1

codegen-units-std = 1

Instruction set

By default, rustc is compiled for a generic (and conservative) instruction set architecture

(depending on the selected target), to make it support as many CPUs as possible. If you want to

compile rustc for a specific instruction set architecture, you can set the target_cpu compiler

option in RUSTFLAGS:

RUSTFLAGS="-C target_cpu=x86-64-v3" ./x build ...

If you also want to compile LLVM for a specific instruction set, you can set llvm flags

in config.toml:

[llvm]

cxxflags = "-march=x86-64-v3"

cflags = "-march=x86-64-v3"

Profile-guided optimization

Applying profile-guided optimizations (or more generally, feedback-directed optimizations) can

produce a large increase to rustc performance, by up to 15% (1, 2). However, these techniques

are not simply enabled by a configuration option, but rather they require a complex build workflow

that compiles rustc multiple times and profiles it on selected benchmarks.

There is a tool called opt-dist that is used to optimize rustc with PGO (profile-guided

optimizations) and BOLT (a post-link binary optimizer) for builds distributed to end users. You

can examine the tool, which is located in src/tools/opt-dist, and build a custom PGO build

workflow based on it, or try to use it directly. Note that the tool is currently quite hardcoded to

the way we use it in Rust's continuous integration workflows, and it might require some custom

changes to make it work in a different environment.

To use the tool, you will need to provide some external dependencies:

- A Python3 interpreter (for executing x.py).

- Compiled LLVM toolchain, with the llvm-profdata binary. Optionally, if you want to use BOLT, the llvm-bolt and merge-fdata binaries have to be available in the toolchain.

These dependencies are provided to opt-dist by an implementation of the Environment struct. It specifies the directories where the PGO/BOLT pipeline will take place, as well as external dependencies like Python or LLVM.

Here is an example of how opt-dist can be used locally (outside of CI):

- Build the tool with the following command:

  ./x build tools/opt-dist

- Run the tool with the local mode and provide necessary parameters:

  ./build/host/stage0-tools-bin/opt-dist local \

  --target-triple <target> \ # select target, e.g. "x86_64-unknown-linux-gnu"

  --checkout-dir <path> \ # path to rust checkout, e.g. "."

  --llvm-dir <path> \ # path to built LLVM toolchain, e.g. "/foo/bar/llvm/install"

  -- python3 x.py dist # pass the actual build command

  You can run --help to see further parameters that you can modify.

Note: if you want to run the actual CI pipeline, instead of running opt-dist locally, you can execute DEPLOY=1 src/ci/docker/run.sh dist-x86_64-linux.

Testing the compiler

The Rust project runs a wide variety of different tests, orchestrated by the

build system (./x test). This section gives a brief overview of the different

testing tools. Subsequent chapters dive into running tests and

adding new tests.

Kinds of tests

There are several kinds of tests to exercise things in the Rust distribution.

Almost all of them are driven by ./x test, with some exceptions noted below.

Compiletest

The main test harness for testing the compiler itself is a tool called compiletest.

compiletest supports running different styles of tests, organized into test

suites. A test mode may provide common presets/behavior for a set of test

suites. compiletest-supported tests are located in the tests directory.

The Compiletest chapter goes into detail on how to use this tool.

Example:

./x test tests/ui

Package tests

The standard library and many of the compiler packages include typical Rust

#[test] unit tests, integration tests, and documentation tests. You can pass a

path to ./x test for almost any package in the library/ or compiler/

directory, and x will essentially run cargo test on that package.

Examples:

| Command | Description |
|---|---|
| ./x test library/std | Runs tests on std only |
| ./x test library/core | Runs tests on core only |
| ./x test compiler/rustc_data_structures | Runs tests on rustc_data_structures |

The standard library relies very heavily on documentation tests to cover its functionality. However, unit tests and integration tests can also be used as needed. Almost all of the compiler packages have doctests disabled.

All standard library and compiler unit tests are placed in a separate tests file

(which is enforced in tidy). This ensures that when the test

file is changed, the crate does not need to be recompiled. For example:

#[cfg(test)]

mod tests;

If it wasn't done this way, and you were working on something like core, that

would require recompiling the entire standard library, and the entirety of

rustc.
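To make the layout concrete, here is a minimal sketch of a crate that follows this convention. The function clamp_to_byte and the file names are hypothetical, chosen only for illustration. In the real tree the implementation file would contain just the `#[cfg(test)] mod tests;` declaration and the module body would live in a sibling tests.rs file; the module is inlined here only so that the sketch fits in a single file.

```rust
// src/lib.rs of a hypothetical crate.

/// Clamps `value` into the range of a `u8`.
pub fn clamp_to_byte(value: i32) -> u8 {
    value.clamp(0, 255) as u8
}

// In the convention described above this would read `#[cfg(test)] mod tests;`
// and the body below would be stored in `src/tests.rs`. Editing that file then
// only affects builds where `cfg(test)` is set.
#[cfg(test)]
mod tests {
    use super::clamp_to_byte;

    #[test]
    fn clamps_out_of_range_values() {
        assert_eq!(clamp_to_byte(-5), 0);
        assert_eq!(clamp_to_byte(300), 255);
        assert_eq!(clamp_to_byte(42), 42);
    }
}
```

Because the whole module is gated on `cfg(test)`, a normal (non-test) build never compiles the test code.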

./x test includes some CLI options for controlling the behavior with these

package tests:

- --doc: Only runs documentation tests in the package.

- --no-doc: Run all tests except documentation tests.
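As an illustration of what these two flags distinguish, the sketch below shows one hypothetical function (add_one, in a hypothetical crate named my_crate) covered by both kinds of test. The example inside the doc comment is a documentation test, which --doc would select; the #[test] function is a unit test, which --no-doc would still run. The doc example uses a `~~~` fence, which rustdoc accepts as an alternative to backticks.

```rust
/// Returns `x + 1`.
///
/// The fenced example below is compiled and run as a documentation test.
///
/// ~~~
/// assert_eq!(my_crate::add_one(1), 2);
/// ~~~
pub fn add_one(x: u32) -> u32 {
    x + 1
}

// A unit test. It is not a documentation test, so `--no-doc` still runs it.
// (Inlined for brevity; std and compiler crates keep this in a separate file.)
#[cfg(test)]
mod tests {
    use super::add_one;

    #[test]
    fn adds_one() {
        assert_eq!(add_one(41), 42);
    }
}
```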

Tidy

Tidy is a custom tool used for validating source code style and formatting conventions, such as rejecting long lines. There is more information in the section on coding conventions.

Examples:

./x test tidy

Formatting

Rustfmt is integrated with the build system to enforce uniform style across the compiler. The formatting check is automatically run by the Tidy tool mentioned above.

Examples:

| Command | Description |
|---|---|
| ./x fmt --check | Checks formatting and exits with an error if formatting is needed. |
| ./x fmt | Runs rustfmt across the entire codebase. |
| ./x test tidy --bless | First runs rustfmt to format the codebase, then runs tidy checks. |

Book documentation tests

All of the books that are published have their own tests, primarily for

validating that the Rust code examples pass. Under the hood, these are

essentially using rustdoc --test on the markdown files. The tests can be run

by passing a path to a book to ./x test.

Example:

./x test src/doc/book

Documentation link checker

Links across all documentation are validated with a link checker tool.

Example:

./x test src/tools/linkchecker

Example:

./x test linkchecker

This requires building all of the documentation, which might take a while.

Dist check

distcheck verifies that the source distribution tarball created by the build

system will unpack, build, and run all tests.

Example:

./x test distcheck

Tool tests

Packages that are included with Rust have all of their tests run as well. This includes things such as cargo, clippy, rustfmt, miri, bootstrap (testing the Rust build system itself), etc.

Most of the tools are located in the src/tools directory. To run the tool's

tests, just pass its path to ./x test.

Example:

./x test src/tools/cargo

Usually these tools involve running cargo test within the tool's directory.

If you want to run only a specified set of tests, append --test-args FILTER_NAME to the command.

Example:

./x test src/tools/miri --test-args padding

In CI, some tools are allowed to fail. Failures send notifications to the corresponding teams, and are tracked on the toolstate website. More information can be found in the toolstate documentation.

Ecosystem testing

Rust tests integration with real-world code to catch regressions and make informed decisions about the evolution of the language. There are several kinds of ecosystem tests, including Crater. See the Ecosystem testing chapter for more details.

Performance testing

A separate infrastructure is used for testing and tracking performance of the compiler. See the Performance testing chapter for more details.

Further reading

The following blog posts may also be of interest:

- brson's classic "How Rust is tested"

Running tests

- Running a subset of the test suites

- Run unit tests on the compiler/library

- Running an individual test

- Passing arguments to rustc when running tests

- Editing and updating the reference files

- Configuring test running

- Passing --pass $mode

- Running tests with different "compare modes"

- Running tests manually

- Running run-make tests

- Running tests on a remote machine

- Testing on emulators

- Running rustc_codegen_gcc tests

You can run the entire test collection using x. But note that running the

entire test collection is almost never what you want to do during local

development because it takes a really long time. For local development, see the

subsection below on how to run a subset of tests.

You usually only want to run a subset of the test suites (or even a smaller set of tests than that) which you expect will exercise your changes. PR CI exercises a subset of test collections, and merge queue CI will exercise all of the test collection.

./x test

The test results are cached and previously successful tests are ignored during

testing. The stdout/stderr contents as well as a timestamp file for every test

can be found under build/<target-triple>/test/ for the given

<target-triple>. To force-rerun a test (e.g. in case the test runner fails to

notice a change) you can use the --force-rerun CLI option.
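The caching idea can be sketched in a few lines. This is only an illustration of a timestamp-based up-to-date check under simplifying assumptions, not the test runner's actual implementation; the function needs_rerun and its parameters are hypothetical.

```rust
use std::time::SystemTime;

/// Decides whether a test must run again.
///
/// `stamp` is the modification time of the test's timestamp file (`None` if no
/// successful run has been recorded), `inputs` are the modification times of
/// the files the test depends on, and `force` models a `--force-rerun`-style
/// override.
fn needs_rerun(stamp: Option<SystemTime>, inputs: &[SystemTime], force: bool) -> bool {
    if force {
        return true;
    }
    match stamp {
        // No stamp yet: there is no recorded success to reuse.
        None => true,
        // Rerun if any input changed after the recorded success.
        Some(stamp) => inputs.iter().any(|modified| *modified > stamp),
    }
}
```

A check of this kind can miss changes it does not track as inputs, which is the sort of situation where forcing a rerun is useful.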

Note on requirements of external dependencies

Some test suites may require external dependencies. This is especially true of debuginfo tests. Some debuginfo tests require a Python-enabled gdb. You can test if your gdb install supports Python by using the python command from within gdb. Once invoked you can type some Python code (e.g. print("hi")) followed by return and then CTRL+D to execute it. If you are building gdb from source, you will need to configure with --with-python=<path-to-python-binary>.

Running a subset of the test suites

When working on a specific PR, you will usually want to run a smaller set of

tests. For example, a good "smoke test" that can be used after modifying rustc

to see if things are generally working correctly would be to exercise the ui

test suite (tests/ui):

./x test tests/ui

This will run the ui test suite. Of course, the choice of test suites is

somewhat arbitrary, and may not suit the task you are doing. For example, if you

are hacking on debuginfo, you may be better off with the debuginfo test suite:

./x test tests/debuginfo

If you only need to test a specific subdirectory of tests for any given test

suite, you can pass that directory as a filter to ./x test:

./x test tests/ui/const-generics

Note for MSYS2

On MSYS2 the paths seem to be strange and ./x test recognizes neither tests/ui/const-generics nor tests\ui\const-generics. In that case, you can work around it by using e.g. ./x test ui --test-args="tests/ui/const-generics".

Likewise, you can test a single file by passing its path:

./x test tests/ui/const-generics/const-test.rs

x doesn't support running a single tool test by passing its path yet. You'll

have to use the --test-args argument as described

below.

./x test src/tools/miri --test-args tests/fail/uninit/padding-enum.rs

Run only the tidy script

./x test tidy

Run tests on the standard library

./x test --stage 0 library/std

Note that this only runs tests on std; if you want to test core or other

crates, you have to specify those explicitly.

Run the tidy script and tests on the standard library

./x test --stage 0 tidy library/std

Run tests on the standard library using a stage 1 compiler

./x test --stage 1 library/std

By listing which test suites you want to run you avoid having to run tests for components you did not change at all.

Run all tests using a stage 2 compiler

./x test --stage 2

Run unit tests on the compiler/library

You may want to run unit tests on a specific file with the following:

./x test compiler/rustc_data_structures/src/thin_vec/tests.rs

Unfortunately, this is not possible. Invoke the following instead:

./x test compiler/rustc_data_structures/ --test-args thin_vec

Running an individual test

Another common thing that people want to do is to run an individual test,

often the test they are trying to fix. As mentioned earlier, you may pass the

full file path to achieve this, or alternatively one may invoke x with the

--test-args option:

./x test tests/ui --test-args issue-1234

Under the hood, the test runner invokes the standard Rust test runner (the same

one you get with #[test]), so this command would wind up filtering for tests

that include "issue-1234" in the name. Thus, --test-args is a good way to run

a collection of related tests.
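The filtering behaviour described here amounts to a substring match on test names. The following is a minimal sketch of that idea, not the test runner's real code; select_tests and the test names used with it are hypothetical.

```rust
/// Returns the names that contain `filter` as a substring, in their original order.
fn select_tests<'a>(names: &[&'a str], filter: &str) -> Vec<&'a str> {
    names
        .iter()
        .copied()
        // Keep a test if the filter text appears anywhere in its name.
        .filter(|name| name.contains(filter))
        .collect()
}
```

Given the hypothetical names issues/issue-1234.rs, issues/issue-12345.rs and const-generics/const-test.rs, the filter issue-1234 selects the first two, because issue-12345 also contains the filter text. A filter is therefore not guaranteed to pick out exactly one test.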

Passing arguments to rustc when running tests

It can sometimes be useful to run some tests with specific compiler arguments,