Website Performance Monitoring: An Introduction

Website performance monitoring is the process of ensuring that end users enjoy a smooth and painless experience with websites, web applications and web services whenever they interact with them.

With more than 20 percent of all consumer spending in the United States occurring online, companies cannot afford to lose sales opportunities because of poorly performing or malfunctioning websites. Indeed, slow-loading websites cost retailers approximately $72.6 billion per year, one study found. Nine out of 10 U.S. shoppers say they would leave a site if it didn’t load in a reasonable amount of time, 57 percent would leave to make a purchase from another retailer, and 21 percent would never return, according to a recent survey.

Web performance monitoring tools help prevent such misfortunes with features that let network administrators view, gauge, manage and fine-tune the health of their online properties. Specifically, these tools let administrators track key performance indicators, such as website speed, content load speed, uptime, connection time and latency, so they can isolate and address issues before they affect customers.

There are two main types of performance monitoring technologies: synthetic monitoring, which proactively seeks out current or short-term website issues, and real user monitoring (RUM), a passive or reactive approach for gathering information to understand long-term trends.

Website performance monitoring is rapidly becoming an indispensable tool for improving online customer experience and keeping poorly performing sites from costing sales. This article offers an in-depth examination of how website performance monitoring works, how organizations can implement it and the best practices for getting started.

Website Performance Monitoring Basics

Website performance is all about speeds and feeds. How quickly do web pages load after a user clicks a link or types in a URL? How does page size affect the site’s usability and, by extension, user satisfaction? Do pages pop up faster than a user can blink, or do they appear glitchy, fail to load images or crash altogether? And how do they perform on different devices, such as desktop PCs and smartphones?

When evaluating how customers might experience a website, network administrators try to answer questions such as these. Many industry experts say a website should ideally load in under three seconds. That target likely reflects the finding that nearly half (47 percent) of U.S. consumers say three seconds is as long as they will wait before abandoning a slow-loading website.

It is difficult to know whether companies are meeting that target, because related studies reach widely varying conclusions. Some put average page load times slightly above three seconds; others put them at around 10 seconds for desktop PCs and as high as 22 seconds for mobile devices.

What is known is that most brands are still striving to improve those numbers, and many also try to track and influence how customers subjectively perceive the performance of their site. In many ways, how fast a website feels to a user can have an even greater impact on their brand experiences than the measurable reality. Some brands address perception gaps with design tricks, such as displaying loading spinners while users wait for an entire page to emerge or providing jokes and tips to entertain them during the loading interlude. These approaches can help pass the time for customers and keep them around longer.


Performance Monitoring and Google’s Core Web Vitals

Google’s Core Web Vitals, a subset of its broader Web Vitals initiative, have become a significant measure of how well web pages deliver quality user experiences, and Google also uses them as a signal in search ranking. The Core Web Vitals focus on three aspects of user experience: loading, interactivity and visual stability. Google updates the set over time as it refines how it measures real-world experience. The metrics are Largest Contentful Paint (LCP), which measures how long the largest image or text block visible in the viewport takes to render after the page starts loading; First Input Delay (FID), which measures the time between a user’s first interaction with a page and when the browser is able to respond to that interaction; and Cumulative Layout Shift (CLS), which measures visual stability by scoring unexpected shifts in page layout. In March 2024, Google replaced FID with Interaction to Next Paint (INP), which measures responsiveness across all of a user’s interactions with a page rather than only the first one.
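
As an illustration of how these metrics are typically scored, the minimal Python sketch below rates a set of field measurements the way Google’s guidance describes: take the 75th percentile across page loads and compare it with a "good" and a "poor" threshold. The threshold values reflect Google’s published guidance at the time of writing and may change; the function names are hypothetical, and the nearest-rank percentile is just one simple way to compute a percentile.

```python
import math

# (good_max, poor_min) per metric, based on Google's published guidance at the
# time of writing: values <= good_max are "good", values > poor_min are "poor",
# and anything in between "needs improvement".
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "INP": (200, 500),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
    "FID": (100, 300),   # milliseconds (replaced by INP in March 2024)
}


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def classify(metric, samples):
    """Return (p75 value, rating) for one metric's field samples."""
    good_max, poor_min = THRESHOLDS[metric]
    p75 = percentile(samples, 75)
    if p75 <= good_max:
        return p75, "good"
    if p75 <= poor_min:
        return p75, "needs improvement"
    return p75, "poor"


if __name__ == "__main__":
    lcp_seconds = [1.8, 2.1, 2.4, 3.9]
    print(classify("LCP", lcp_seconds))  # (2.4, 'good')
```

Because the rating is based on the 75th percentile, a single slow outlier (such as the 3.9-second load above) does not by itself push a page out of the "good" range.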

With website performance monitoring, organizations can run both proactive and reactive tests to measure how well their web properties meet Core Web Vitals criteria. Monitoring also covers basics such as availability: uptime monitoring, for example, measures the share of time a site is available, which directly affects user experience and satisfaction. If a website isn’t quite up to snuff, some solutions will even suggest relevant best practices and insights for improving user experience.

Benefits of Web Performance Monitoring

Website performance monitoring tools make administrators’ lives easier by gathering meaningful data on website performance, visualizing it on dashboards and sending notifications when a metric is not up to par. Key metrics include:

- Bounce rate: This is the percentage of visits in which a user views only a single page of a website before leaving. Website bounce rates reportedly range from 26 percent to 70 percent, and the worldwide average across all industries was around 30 percent in October 2021. Website performance monitoring tools can help you maintain fast response times to reduce bounce rates.

- Page load times: Slow page load times lead to higher bounce rates. According to Google, as page load time goes from one second to three seconds, the probability of a bounce increases by 32 percent; as it goes from one second to six seconds, the probability increases by 106 percent. Good tools identify when pages aren’t loading properly and help administrators analyze what might be causing the issue.

- Error rate: No site, regardless of its sophistication, is error-free. Error rate measures how often errors occur, typically as a share of requests, within a specified timeframe or under certain conditions. For example, an administrator might use website performance monitoring to determine whether higher visitor volumes during the holidays lead to more errors than lower-traffic periods such as summer. Such data can help administrators address and minimize errors in the future.

- Throughput/average load: This metric looks at the number of requests website servers receive each second. Application performance monitoring (APM) tools allow administrators to assess how much load a company’s web applications are under, so they can take steps to lighten it and avoid glitches and failures down the road.

- Interaction time: As website visitors, we’ve all clicked a link or typed into a search field and had nothing happen right away. We click again a few seconds later, and it suddenly works. Sometimes it’s an internet connectivity issue, but often the website itself is the culprit. By measuring interaction time as part of website performance monitoring, administrators can identify when problems exist, examine the data to find their root causes and then take action.

- Uptime: Uptime is exactly what it sounds like: How often is the website available to users who want to access it? Websites go down all the time, even for major companies like Amazon, Walmart and others. This can be catastrophic from both revenue and brand reputation standpoints. For example, it’s estimated some companies lose nearly $5 million for every hour of website outages. The ability to stay on top of uptime metrics, therefore, is considered one of the most critical aspects of website performance monitoring.
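
Several of these metrics reduce to simple ratios. The following minimal Python sketch makes that concrete under stated assumptions: a hypothetical request log in which every request is a page view tagged with a session ID, errors counted as HTTP 5xx responses, and uptime measured as the share of periodic availability checks that succeeded. Real tools define sessions, errors and availability in more nuanced ways, and all names here are illustrative.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Request:
    session_id: str  # hypothetical identifier tying page views to one visit
    status: int      # HTTP status code returned for the page view


def bounce_rate(requests):
    """Share of sessions that viewed exactly one page."""
    pages_per_session = Counter(r.session_id for r in requests)
    single_page = sum(1 for n in pages_per_session.values() if n == 1)
    return single_page / len(pages_per_session)


def error_rate(requests):
    """Share of requests answered with an HTTP 5xx server error."""
    errors = sum(1 for r in requests if 500 <= r.status < 600)
    return errors / len(requests)


def throughput(requests, window_seconds):
    """Average requests per second over the sampling window."""
    return len(requests) / window_seconds


def uptime_percent(check_results):
    """Percentage of periodic availability checks that succeeded (True)."""
    return 100 * sum(check_results) / len(check_results)


if __name__ == "__main__":
    log = [
        Request("a", 200), Request("a", 200),  # session "a" viewed two pages
        Request("b", 200),                     # session "b" bounced
        Request("c", 503),                     # session "c" bounced on an error
    ]
    print(bounce_rate(log))     # 2 of 3 sessions bounced
    print(error_rate(log))      # 0.25
    print(throughput(log, 10))  # 0.4 requests per second over 10 seconds
    # One failed check out of 288 five-minute checks in a day.
    print(uptime_percent([True] * 287 + [False]))
```

The last line shows why uptime targets are usually quoted with several decimal places: a single failed five-minute check in a day already drops uptime to about 99.65 percent.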

While a major advantage of website performance monitoring is that it allows organizations to head off problems, it also provides numerous business and operational benefits. These include:

- Knowing system status — everywhere: Most website performance monitoring tools allow administrators to perform real-time system checks no matter where their web servers reside in the world.

- Gaining end-to-end visibility: Website performance monitoring, especially when used in tandem with APM and observability tools, provides deep visibility into system and application performance across on-premises and cloud-based web servers. This is no small feat. Many organizations run a mix of on-premises and cloud-based web servers behind their online sites but lack unified visibility into them, which makes integration issues hard to spot and resolve. Website performance monitoring tools bridge that gap, letting administrators look across a complex landscape of infrastructure, applications and user interfaces and pinpoint the root cause of performance issues.

- Reducing costs: By regularly monitoring and continually improving website operations, administrators can head off many crises that would otherwise require them to work nights, weekends and holidays. That overtime drives up operating costs, which are often passed along to customers as higher prices.

- Reducing shopping cart abandonment: Most of us have left items that vaguely interested us sitting in an online shopping cart. Sometimes our attention is diverted elsewhere, but often poor website performance makes checking out so difficult that we simply walk away. That’s concerning for retailers, because checkout abandonment is reportedly a $4 trillion problem, with roughly 70 percent of online shopping carts abandoned before purchase. Website performance monitoring can’t stop shoppers from changing their minds, but it can remove technical problems as a cause of cart abandonment.

- Improving customer experience and brand loyalty: Having a snazzy website is great, but if customers can’t easily access or use it, whether because of navigation issues, a JavaScript error or faulty plugins, they’ll have a poor experience. What’s more, studies show a poor website can taint customers’ impressions of a brand, possibly enough that they share their negative views with other potential customers on social media. Website performance monitoring helps offset this risk by helping to optimize site functionality.

Common Challenges of Web Performance Monitoring

There are many technical and design issues creating challenges that some — but not all — website performance monitoring tools attempt to solve. Here are a few:

- Complex web architectures: Developers are restructuring traditional code stacks to take advantage of microservices and containers. These architectures are vastly different from traditional LAMP and MEAN development stacks and generate much more data that must be inspected and troubleshot. Some modern website performance monitoring tools are up to the task; many older ones are not.

- Lack of intelligent alerting: Alerting is central to most website performance monitoring tools, but that doesn’t mean every tool flags issues intelligently. In fact, alert fatigue is a common problem that can lead to missed alerts, slow incident response times and burnout. Most tools let you tune alert rules to cut down on this digital noise, but tuning takes time and doesn’t always limit alerts to the most critical performance issues.

- Uncertain root causes: To address performance issues, you must know what’s causing them. This isn’t easy, because root causes are tough to determine from surface symptoms, and any event, such as a website failing to load, could have multiple causes. It could be a request overload, a network bandwidth or CPU problem, a device’s inability to process images or a host of other culprits. Ascertaining the real root cause typically requires powerful website performance monitoring and analytical capabilities, along with transaction monitoring and potentially server monitoring.

- Common errors overshadowing newer ones: Common errors make up the majority of web log data and are often taken for granted. That’s why new and unique kinds of errors are easy to overlook when they appear. Some website performance monitoring tools excel at finding these anomalies, which can signal future performance issues.

- Web pages keep getting bigger: Web page sizes grow every year as companies add richer capabilities to them. Unfortunately, this added complexity can lead to performance problems, which older website performance monitoring tools may or may not catch.
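
Two of the challenges above, alert noise and new errors hiding among common ones, can be illustrated with simple heuristics. The minimal Python sketch below is hypothetical and not how any particular product works: it suppresses an alert until a check has failed several times in a row, and it flags error signatures that appear in recent logs but never appeared in a baseline period. The names, the default of three consecutive failures and the error-signature strings are all assumptions for illustration.

```python
from collections import Counter


def should_alert(results, consecutive=3):
    """Alert only when the most recent `consecutive` checks all failed.

    `results` is a chronological list of booleans (True = check passed).
    Requiring several consecutive failures filters out one-off blips.
    """
    if len(results) < consecutive:
        return False
    return not any(results[-consecutive:])


def new_error_types(baseline_errors, recent_errors):
    """Return error signatures absent from the baseline, most frequent first."""
    known = set(baseline_errors)
    counts = Counter(e for e in recent_errors if e not in known)
    return [error for error, _ in counts.most_common()]


if __name__ == "__main__":
    print(should_alert([True, False, True, False]))   # False: failures not consecutive
    print(should_alert([True, False, False, False]))  # True
    baseline = ["404 /old-page", "timeout /search"] * 50
    recent = ["404 /old-page"] * 40 + ["TypeError checkout.js"] * 3
    print(new_error_types(baseline, recent))  # ['TypeError checkout.js']
```

In the example, the three new checkout errors would be easy to miss next to 40 familiar 404s, but comparing against the baseline surfaces them immediately.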

Website performance monitoring has many business and operational advantages but can be challenging in light of complex architectures and lack of intelligent alerting.

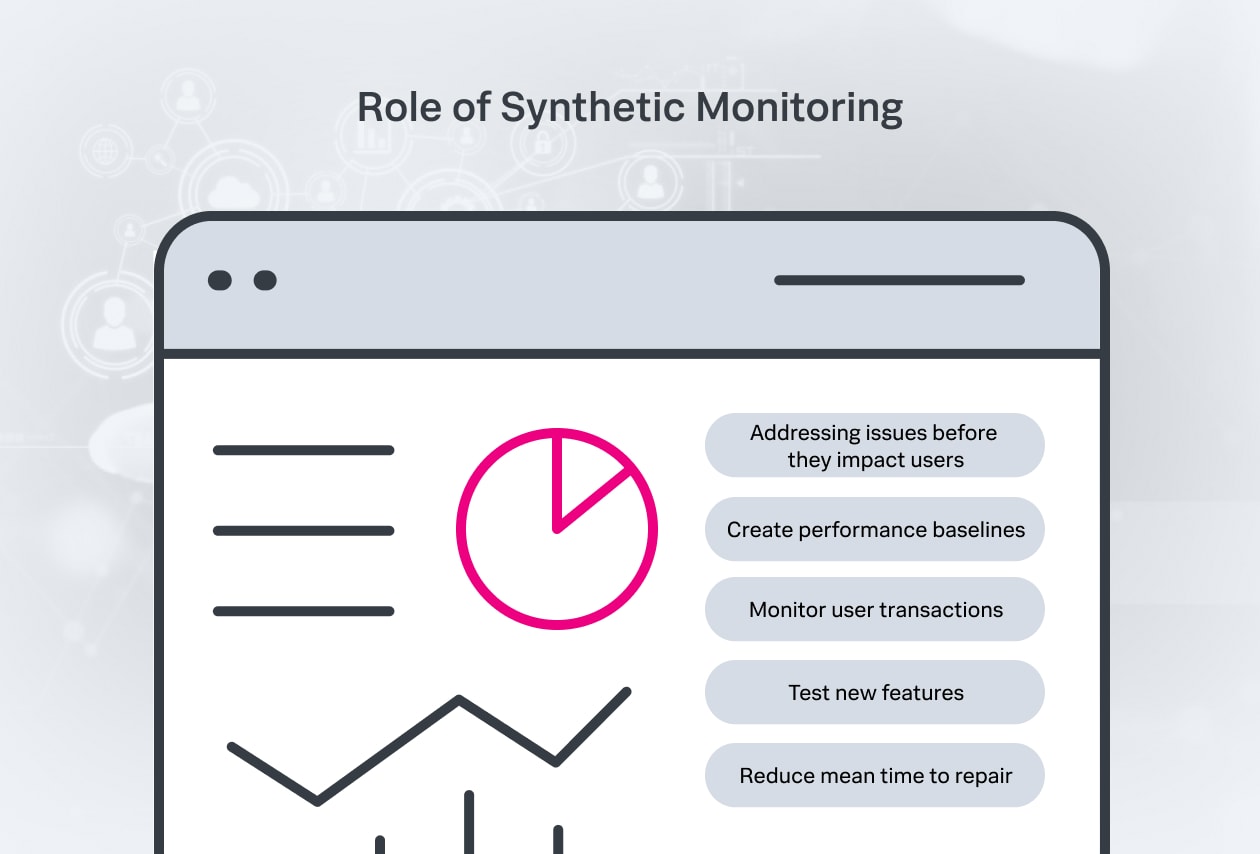

The Role of Synthetic Monitoring

Synthetic monitoring (also known as synthetic testing and active monitoring) simulates user transactions by relying on behavioral scripts that emulate flows and measure availability, functionality and performance for critical endpoints or across the entire user journey. Because this technique stages and directs an artificial user experience, it’s classified as active monitoring, whereas real user monitoring is considered passive monitoring. In practice, synthetic monitoring works like this: administrators (likely teams responsible for SLA uptime) define several checkpoints and select performance metrics. A robot client follows the predefined user journey through the app, simulating transactions that mimic human behavior, and sending information back on a page’s availability (did the URL respond?), functionality (did all the buttons work?) and performance (how fast were page resources loaded?). Typically, teams set up alerts to notify them of outages for critical service endpoints, which can trigger their incident response.
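
The check loop described above can be sketched in a few lines of Python. This is a minimal, HTTP-level sketch under stated assumptions: each step of the journey is a hypothetical (name, URL) pair, a step counts as available if it returns a 2xx or 3xx status, and a step is flagged as slow if it exceeds a time budget (3,000 ms by default, echoing the three-second target mentioned earlier). It covers availability and performance only; checking that buttons work requires a scripted browser, which is beyond this sketch. The `fetch` parameter exists so a different fetcher can be swapped in.

```python
import time
import urllib.request
from dataclasses import dataclass


@dataclass
class StepResult:
    name: str
    available: bool    # did the URL respond with a success status?
    elapsed_ms: float  # how long the request took
    slow: bool         # did it exceed the step's time budget?


def fetch_status(url, timeout=10):
    """Default fetcher: return the HTTP status code for a GET request."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.status


def run_journey(steps, budget_ms=3000, fetch=fetch_status):
    """Run each (name, url) step in order, stopping at the first failure."""
    results = []
    for name, url in steps:
        start = time.perf_counter()
        try:
            ok = 200 <= fetch(url) < 400
        except (OSError, ValueError):
            # Network errors, timeouts, HTTP errors and malformed URLs
            # all count as "unavailable".
            ok = False
        elapsed = (time.perf_counter() - start) * 1000
        results.append(StepResult(name, ok, elapsed, elapsed > budget_ms))
        if not ok:
            break  # later steps depend on earlier ones, as in a real user flow
    return results
```

For example, `run_journey([("home", "https://www.example.com/"), ("login", "https://www.example.com/login")])` would check a two-step flow; the URLs are placeholders, and in practice a scheduler would run the journey from several locations and route failures or slow steps into alerting.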

In a nutshell, synthetic monitoring allows organizations to proactively:

- Identify and address issues before they impact users: Unlike real user monitoring, which requires human user interaction, synthetic monitoring can run 24/7, including during low-traffic periods and in testing before code goes live. This proactive approach gives teams the time to recognize, troubleshoot and resolve issues before users are even aware of them.

- Create performance baselines: Synthetic monitoring can help benchmark and improve page performance by measuring critical elements of user experience, such as how fast a page loads content, interactivity, visual stability, error count and trends in performance relative to previous builds, releases or competitors.

- Monitor user transactions and business processes: With synthetic monitoring, teams can emulate and monitor the web performance of searches, form-fills, logins and other transactions from different geographies. Comparing performance data from these processes across geographies can indicate where and how to make improvements.

- Test new features: Teams can also test new app features before they go live to see how they respond under load and if any of the changes impact third-party application programming interfaces (APIs).

- Reduce mean time to repair (MTTR): MTTR represents the average time it takes to repair and restore service to functionality. The lower the MTTR for an affected service, the less likely it is to negatively impact the business. Because synthetic monitoring enables teams to get ahead of issues before they affect the user — and alerts from synthetic monitors often trigger larger incident response coordination — it’s an effective tool for reducing MTTR.
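
Two of the benefits above, tracking MTTR and comparing performance against a baseline, come down to simple arithmetic. In the minimal Python sketch below, MTTR is computed from hypothetical (detected, restored) timestamp pairs; measuring from detection is an assumption, since some teams measure from when the failure began. The 10 percent regression tolerance is likewise an illustrative choice, not a standard.

```python
from datetime import datetime, timedelta


def mean_time_to_repair(incidents):
    """Average of (restored - detected) across incidents, as a timedelta."""
    total = sum((restored - detected for detected, restored in incidents), timedelta())
    return total / len(incidents)


def regressed(baseline_ms, current_ms, tolerance=0.10):
    """True if the current measurement is more than `tolerance` slower than the baseline."""
    return current_ms > baseline_ms * (1 + tolerance)


if __name__ == "__main__":
    incidents = [
        (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 45)),    # 45 minutes
        (datetime(2024, 5, 7, 14, 10), datetime(2024, 5, 7, 14, 25)),  # 15 minutes
    ]
    print(mean_time_to_repair(incidents))  # 0:30:00
    print(regressed(1200, 1400))  # True: more than 10% slower than the baseline
```

Feeding synthetic results from each build into a check like `regressed` is one way to turn a performance baseline into a release gate.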

Synthetic monitoring tests critical transactions by simulating user flows, while measuring availability, functionality and performance.

The Role of Real User Monitoring (RUM)

Real user monitoring (RUM), also known as end user monitoring or end user experience monitoring, is a method used to measure the end user experience in application performance management. It provides visibility into user experience with websites or web apps by passively collecting and analyzing timing, errors and dimensional information on end users in real time. RUM helps developers understand how their code affects page performance and user experience.

RUM offers a comprehensive view of the customer experience, as opposed to simple uptime/downtime monitoring, which only measures availability. For example, an e-commerce website’s home page might be available but delivering content or images slowly. It might also be slow to process a user’s click or keystroke, leading to site or shopping cart abandonment. RUM captures the customer’s experience after the web server has sent the initial HTML document. This information is valuable because 80 percent to 90 percent of end user wait time is spent on the client side, in the web browser, rather than on the back end.
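
One way to check the front-end versus back-end split against your own RUM data is sketched below in Python. It assumes a hypothetical beacon format: each page view is reported as a dictionary whose keys mirror the browser’s `PerformanceNavigationTiming` attributes (`responseStart`, `loadEventEnd`), in milliseconds from the start of navigation, as a browser script might collect via `performance.getEntriesByType("navigation")`. Time to first byte is treated as back-end time and everything after it as front-end time, which is a common but simplified split.

```python
def split_wait_time(beacon):
    """Split one page view's load time into back-end and front-end portions.

    Keys mirror PerformanceNavigationTiming attributes, in milliseconds
    relative to the start of navigation.
    """
    backend = beacon["responseStart"]                            # time to first byte
    frontend = beacon["loadEventEnd"] - beacon["responseStart"]  # everything after
    return backend, frontend


def frontend_share(beacons):
    """Fraction of total wait time spent after the first byte arrived."""
    back_total = front_total = 0
    for beacon in beacons:
        back, front = split_wait_time(beacon)
        back_total += back
        front_total += front
    return front_total / (back_total + front_total)


if __name__ == "__main__":
    beacons = [
        {"responseStart": 300, "loadEventEnd": 2400},
        {"responseStart": 200, "loadEventEnd": 1800},
    ]
    # 3,700 ms of front-end time out of 4,200 ms total.
    print(round(frontend_share(beacons), 2))  # 0.88
```

With these sample beacons, about 88 percent of the wait happens in the browser, in line with the range cited above; real beacons would also carry a page URL and device type so the split can be broken down by segment.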

RUM is an increasingly important tool for understanding and optimizing user experience, as well as alerting administrators to issues and achieving business objectives.

Getting Started With Web Performance Monitoring

As with most technical disciplines, the best way to get started with website performance monitoring is to first determine your goals. What are you hoping to accomplish? What opportunities would you like to explore with your web properties? What performance challenges have you faced, and how have they been affecting customer experience? And what kind of budget do you have to upgrade your current situation?

Once you have solid answers to these questions, it’s time to evaluate the various website performance tools and shortlist those that meet your specific requirements. You’ll likely want to prioritize recently built tools over legacy products that might struggle to keep up with the complexity of modern web architectures. Similarly, look for solutions that integrate the latest real-time, intelligent alerting capabilities. It will also be important to evaluate offerings that have been designed or updated to help organizations meet or exceed Google Core Web Vitals standards. Finally, look for platforms that offer multiple web performance monitoring approaches, such as synthetic monitoring and RUM. Most organizations want to blend these capabilities, so you are better off choosing a platform that offers several of them.

The Bottom Line: Your website is your business; monitor it!

Customers today expect companies to do everything they can to deliver consistently superior experiences across every physical and digital touchpoint. That’s because so many businesses are online now that customers have the power to be more selective than ever about the brands they patronize. As part of that, if a website’s design or performance issues lead to unnecessarily unpleasant experiences, customers will most likely take their business elsewhere — quite possibly for good.

As such, organizations can never take website performance monitoring lightly. Website architectures and user expectations are evolving too quickly for that, and even one misstep can lead to significantly higher costs, shrinking revenues and lost customer trust. It is vital, therefore, to find a website performance monitoring solution that will not only meet your immediate needs but also be flexible enough to serve future requirements. Your business could truly depend on it.


This posting does not necessarily represent Splunk's position, strategies or opinion.
