Here are stats on some random hard disk from 1991, the Seagate ST-2106N:

Capacity: 106 MB

Average seek: 18 ms

Max full seek: 35 ms

And here are some numbers from a newer drive, the Seagate ST3500830NS:

Capacity: 500,000 MB

Random write seek: less than 10 ms

In the past 15 years, hard disk capacity has increased about 5000x. However, the ability to seek has only increased 3.5x.

In those same 15 years, DRAM capacity has also increased dramatically, probably more than 5000x. My friend just bought a computer with 32 GB of RAM -- that's 320x the capacity of the 1991 hard disk!

Here's another important stat:

1991: strlen("Paul Buchheit") = 13

2007: strlen("Paul Buchheit") = 13

Surprised? Probably not. My point? Our databases are still storing a lot of the same things they were in 1991 (or 1970).

The problem? Most databases are still storing my name using the same techniques that they did 15 or more years ago -- they seek the disk head to some specific location and then read or write my name to that location (this seek can be deferred with write ahead logging, but it will need to happen eventually). These databases rely on the one operation that DID NOT dramatically improve in the past 15 years! They still perform like it's 1991.

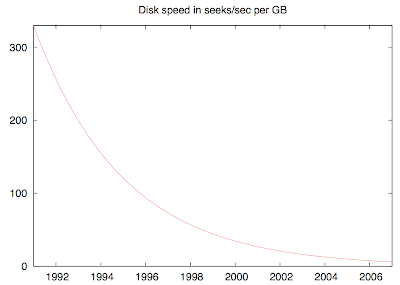

Here's another way of looking at what has happened:

Relative to storage capacity, seeks/sec is approaching zero. Disks should no longer be thought of as a "random access" devices.

Some people try to beat that exponential curve by simply buying more disks. Here are a few more stats to consider (actual measurements from my computer):

Disk - sequential read/write: 52 MB/sec

Disk - random seeks: 100/sec

DRAM - sequential read/write: 3237 MB/sec

DRAM - random read/write: 10,000,000/sec

For sequential access, DRAM is about 62x the speed of disk -- disk is way slower, but only by a few orders of magnitude.

However, for random access, DRAM is 100,000 times the speed of disk! Buying and maintaining 100,000 disks would certainly be a hassle -- I don't recommend it.

What can you do instead of buying 100,000 disks? Keep your data in memory, or at least all of the small items, such as names, tags, etc. Fortunately, there are some easy tools available for doing that, such as memcached. If your db is rarely updated, that may be enough. However, if you also have frequent updates, then your database will be back to thrashing the disk around updating its b-trees, and you will be back to 1991 performance. To fix that, you may need to switch to a different method of storing data, one which is log based (meaning that the db updates are all written to sequential locations instead of random locations). Maybe I'll address that in another post.

Finally, one more interesting stat: 8 GB of flash memory cost about $80

Flash has some weird performance characteristics, but those can be overcome with smarter controllers. I expect that flash will replace disk for all applications other than large object storage (such as video streams) and backup.

Update: I'm not suggesting that everyone should get rid of their databases and replace them with some kind of custom data storage. That would be a big mistake. If your database is working fine, then you shouldn't waste much time worrying about these issues. The intent of this post is to help you understand the performance challenges faced by databases that rely on disk. If your database is having performance problems, the correct solution will depend on your situation, and may be as simple as tuning the db configuration.

I also neglected to mention something very important, which is that most databases have a configurable buffer cache. Increasing the size of that cache may be one of the easiest and most effective ways to improve db performance, since it can reduce the number of disk reads.

6 comments:

1991: strlen("Paul Buchheit") = 13

i highly suspect this data point is manufactured. unless of course you have a time machine, one that doesn't involve little dinosaurs wearing hats, part of the 'un'

You really are right. I initially resisted it but the more I think about it the more it makes sense. That said, I still think it's ok to get something out the door and then later optimize the known bottlenecks rather than guessing in advance. There are certainly cases where it's obvious something will need special handling, but I've been surprised many times so far.

Like you said, the best storage location for data depends very much on its type and its access and usage patterns. I used to be in the "database first, then change if needed" mindset, but I'm coming around to a "RAM first, then change if needed" perspective.

The biggest blocker for me is still the lack of a well tested framework for doing this sort of thing (with backup, replication, and hot fail-over implemented already). Having to roll my own allowed me to dismiss the idea as too expensive in terms of development time, but I think I'm going to do it anyway now.

-Nick

Paul, I absolutely agree with this. But it seems to me that the right way to solve this, not for google-scale websites, but for everyone else, is for someone to make a database that caches its data in memory much more aggressively. It sounds like an opportunity, as surely it's silly for everyone to roll their own MySQL/Memcached combination solution.

Any thoughts on why this hasn't happened yet?

snowmaker, People have been using timesten (an in-memory database) for ~10 years. What's new is the cheap internet startup, where it's not worth paying for an expensive DB.

For these folks I agree there is a market need to be fulfilled :)

Hello. I'd like to cite and translate into czech language parts of your article. And then use them in a post on my blog at blog.red-pill.cz. I thought that it's a good idea to ask you for permission. I wanted to write this using more personal channel, like email or ICQ, but I failed to found any contact information, so I had to write it here. Hope it won't be considered comment spam :) My email is [email protected]. Or reply here, I will check it regulary. Thanks in advance. Tomas Fejfar

Avaranger,

That's fine with me.

Post a Comment