I will be running another iteration of my Linux/UNIX System Programming course in Munich, Germany, during the week of 21-25 October 2013. The course is intended for programmers developing system-level, embedded, or network applications for Linux and UNIX systems, or programmers porting such applications from other operating systems (e.g., Windows) to Linux or UNIX. Among the topics covered in the course are low-level file I/O, signals, timers, creating processes and executing programs, programming with POSIX threads, interprocess communication (pipes, FIFOs, message queues, semaphores, shared memory), and network programming using sockets. You can expect to work fairly hard (and also learn a lot) during the week.

For detailed information about the content of the course, prerequisites, and other details, see http://man7.org/training/.

In addition to course materials, all participants will receive a copy of my book, The Linux Programming Interface.

The course will be priced (lower than usual) at 2000 euro plus VAT, and the class size will be kept quite small (I've yet to determine if the maximum will be 6 or 8). If you are interested in the course, please email me at [email protected] and I'll send you information for course registration. Questions regarding the course can be sent to the same address.

2013-09-23

2013-07-17

Fourth print run (and request for bug reports)

The publisher will shortly be preparing a fourth print run of The Linux Programming Interface. That print run will incorporate all of the outstanding errata.

If you've been reading TLPI and noticed any errors or typos, now would be a good time to report them, so that fixes can be included in the fourth printing.

2013-05-14

Adding further man pages to the HTML renderings on man7.org

As noted in my last post, I've expanded the set of HTML man page renderings at man7.org/linux/man-pages/ to include some projects other than man-pages. Man pages from 37 projects are now rendered, with about 1750 pages in all. The projects that I have so far included have a bias that matches my interests: man-pages, projects related to low-level C and system programming (e.g., the ACL and extended attribute libraries), toolchain projects (e.g., gcc, gdb, Git, coreutils, binutils, util-linux), other relevant tools (kmod, strace, ltrace, procps, expect), and tools relevant to manual pages (e.g., groff, man-db).

Although there are some other sites around that have renderings of a much larger set of pages, I am (so far) resisting the temptation to take a kitchen-sink approach on man7.org. Nevertheless, I'm open to adding further projects to the set, if they seem relevant. If you think there is a project that should be added to the rendered set, drop a note to [email protected] with the following information:

- Name of the project.

- Project description.

- URL for the web site of the project.

- (If you know it:) URL of a web page that provides information on how to report bugs in the man pages (or email list address).

- Source URL for the man pages of the project. The project should provide pages by one of the following means:

    - Ideally: the URL of a Git repository for the project.

    - The URL of a Bazaar or Mercurial repository.

    - An HTTP or FTP address for the location that is updated with the latest release tarball on each release of the project.

    - If nothing else: the URL of a CVS or Subversion repository. Note: if there is a Git read-only mirror of the CVS or Subversion repository, that is preferred.

- Instructions on how to build the man pages for the project. These instructions should be minimal, in the sense that they require the minimum CPU effort to build just the man pages. In other words, if possible, I'd like to avoid building the entire project just to obtain the manual pages.

- Approximate number of manual pages in the project (actual pages, excluding links).

More man pages now rendered on man7.org

There are several web sites that provide renderings of a comprehensive set of Linux man pages.

However, those sites typically have a number of faults.

For example, the renderings are either for out-of-date versions of the man pages (on one site, the rendered pages are close to ten years old) or the pages provide no timestamp information indicating the age of the pages. In many other cases, sites provide no information about the origin of the rendered pages, give no information about the extraction date or the project version from which the manual pages were taken, and provide no information on how to report manual page bugs to the corresponding upstream projects. Providing that information was the main goal when I started adding the COLOPHON sections to the man-pages pages in December 2007 (man-pages-2.69).

Furthermore, the renderings on many sites are either unattractive (obviously, a subjective judgement) or simply broken (for example, it looks like none of the groff tables in the pages at http://linux.die.net/man/ are rendered, so that, for example, the table of system calls in the syscalls(2) man page does not appear, making the page essentially useless). Finally, most of the sites provide no obvious information on how to report bugs in the man page renderings.

One of the few sites that does a reasonable job on the above criteria is the http://manpages.courier-mta.org/ site maintained by Sam Varshavchik. It is probably no coincidence that Sam has also provided numerous bug reports on formatting issues in the man-pages page set over the last several years. However, Sam provides a rather less comprehensive set of pages than on the other sites, taking pages from just seven projects.

So, it seems there's room out there for a web site that does a better job on many of the above criteria by providing a comprehensive set of page renderings that: (a) are up to date; (b) carefully document the origin of the rendered pages; (c) describe where to report bugs in the man pages; and (d) explain where to report bugs in the renderings.

With those goals in mind, I've extended the set of pages that are rendered at http://man7.org/linux/man-pages/ to include pages in addition to those provided by the man-pages project. Each page includes a COLOPHON section that shows the name and URL of the project from which the page comes, the URL of the tarball or source code repository from which the page was extracted, the date when the page was extracted, and (where I know it) information on where to report bugs in the manual page. So far, I've added about 35 projects to the set, with the pages for each project being taken either from the latest release tarball or directly from the project's source code repository. This raises the number of rendered pages at man7.org from around 950 pages in man-pages to around 1750 with the addition of the other projects. (More projects may be added in the future; I'll say more on that later.) A full list of the projects and pages that are rendered can be found in the project page directory.

Sometimes different projects provide the same manual page. On all sites that I know of, where such conflicts occur, just one version of the page is rendered. I've dealt with such conflicts in a different way. One of the versions is treated as canonical (here, I've currently followed the lead of Fedora by choosing the page that it treats as canonical, though I may adjust that approach in the future), but I provide renderings of the other versions at different URLs, with cross-page links between the various versions. Thus, for example, three of the projects that I handle provide versions of the kill(1) man page, and the three versions are rendered at the following URLs:

- http://man7.org/linux/man-pages/man1/kill.1.html: the "canonical" version of the page, from the util-linux project.

- http://man7.org/linux/man-pages/man1/[email protected]: the version from the coreutils project.

- http://man7.org/linux/man-pages/man1/[email protected]: the version of the page from the procps project.

2013-03-12

Revisiting kernel capability usage statistics

Revisiting my earlier statistics on capability use in the Linux 3.2 kernel source, things are not getting better for CAP_SYS_ADMIN. The statistics below are for Linux 3.9-rc2. By comparison with Linux 3.2, total uses of CAP_* constants in the kernel sources have risen by 6.4% (1242 versus 1167) and total uses of CAP_SYS_ADMIN have risen by more: 11.3% (502 versus 451). This article remains relevant, and digging a bit deeper, overly broad range seems to be a problem that afflicts not just CAP_SYS_ADMIN and CAP_NET_ADMIN but also (at least) CAP_SYS_RAWIO, as the discussion in this thread on the proposed CAP_COMPROMISE_KERNEL capability shows.

| Capability | #uses | #files |
|---|---|---|
| CAP_AUDIT_CONTROL | 2 | 2 |
| CAP_AUDIT_WRITE | 1 | 1 |
| CAP_BLOCK_SUSPEND | 3 | 2 |
| CAP_CHOWN | 4 | 2 |
| CAP_DAC_OVERRIDE | 2 | 1 |
| CAP_DAC_READ_SEARCH | 5 | 3 |
| CAP_FOWNER | 11 | 8 |
| CAP_FSETID | 9 | 7 |
| CAP_IPC_LOCK | 14 | 9 |
| CAP_IPC_OWNER | 1 | 1 |
| CAP_KILL | 2 | 2 |
| CAP_LEASE | 1 | 1 |
| CAP_LINUX_IMMUTABLE | 14 | 14 |
| CAP_MAC_ADMIN | 28 | 5 |
| CAP_MAC_OVERRIDE | 5 | 2 |
| CAP_MKNOD | 3 | 3 |
| CAP_NET_ADMIN | 399 | 188 |
| CAP_NET_BIND_SERVICE | 15 | 12 |
| CAP_NET_BROADCAST | 0 | 0 |
| CAP_NET_RAW | 20 | 12 |
| CAP_SETFCAP | 3 | 2 |
| CAP_SETGID | 11 | 6 |
| CAP_SETPCAP | 2 | 2 |
| CAP_SETUID | 9 | 4 |
| CAP_SYS_ADMIN | 502 | 257 |
| CAP_SYS_BOOT | 2 | 2 |
| CAP_SYS_CHROOT | 2 | 2 |
| CAP_SYSLOG | 2 | 2 |
| CAP_SYS_MODULE | 5 | 3 |
| CAP_SYS_NICE | 14 | 8 |
| CAP_SYS_PACCT | 1 | 1 |
| CAP_SYS_PTRACE | 11 | 5 |
| CAP_SYS_RAWIO | 69 | 43 |
| CAP_SYS_RESOURCE | 38 | 25 |
| CAP_SYS_TIME | 19 | 11 |
| CAP_SYS_TTY_CONFIG | 11 | 5 |
| CAP_WAKE_ALARM | 2 | 1 |
| Total | 1242 | 654 |

2012-11-18

Japanese translation of TLPI will be published in December

The Japanese translation of TLPI (published by O'Reilly Japan) will come out next month. Japanese readers can read (the Japanese translation of) a short preface that I wrote for the translation.

Although I will never be able to read it, I have a couple of reasons to believe that the translation will be excellent. First, the translation was done by a kernel developer, Junjiro Okajima (developer of the aufs file system). Second, along the way Junjiro has been extremely attentive in reading TLPI and checking details. As a consequence, he contributed a large number of errata reports (making up more than 20% of all current errata reports for TLPI), ranging from typos to corrections and improvements to rather deep technical details presented in the English text.

2012-11-06

Nominated for NZ Open Source Award

To my pleasant surprise, I'm informed that I'm not merely nominated, but am also a finalist for a New Zealand Open Source Award, in the Open Source Contributor category, for "contributions to Linux documentation" (sounds about right!). But, being in Barcelona for LinuxCon Europe / ELCE, I won't be able to be present for the awards event. Old friend Dan Randow has kindly agreed to proxy for me at the award event tomorrow.

(Update, 2012-11-15: Congratulations to Grant McLean, who won the award.)

2012-10-05

How much do __builtin_expect(), likely(), and unlikely() improve performance?

I got curious about how much __builtin_expect() helps performance and when its use is justified, so I wrote a small test program to study its effects.

As the gcc documentation says, you can use this compiler built-in function to give the optimizer a clue about the likely result of an integer (or Boolean) expression. In the context of an if statement, this enables the optimizer to reorder the code in a way that gives best performance, by ensuring that the code that is most likely to execute after the conditional immediately follows the conditional when the instruction stream is fed to the CPU pipeline.

The __builtin_expect() function takes two arguments: a value to be tested, and the expected result. Both of these are integral values. The interface is a little clumsy for most uses, since the common case is that we want to test for "true" (non-zero) or "false" (zero). Thus, the Linux kernel defines two simpler interfaces: likely() and unlikely() (in include/linux/compiler.h):

    #define likely(x)      __builtin_expect(!!(x), 1)
    #define unlikely(x)    __builtin_expect(!!(x), 0)

In other words, likely(x) means "I expect x is true", and unlikely(x) means "I expect x is false".

Here's my test program. The comments should be enough to help you understand some of the more obscure pieces. Below, I'll just skip to looking at the test results.

    /* builtin_expect_test.c */

    #include <stdio.h>
    #include <unistd.h>
    #include <stdlib.h>
    #include <string.h>

    #define BSIZE 1000000

    #ifndef BINCR
    #define BINCR 1
    #endif

    #if defined(EXPECT_RESULT) && defined(DONT_EXPECT)
    #error "Specifying both EXPECT_RESULT and DONT_EXPECT makes no sense"
    #endif

    /* We add some seemingly unneeded complexity to the code, simply to
       make the optimizer's task tricky enough that it won't optimize away
       the effect of __builtin_expect(). In this particular program, all
       of the following are needed:

            * Calling a *non-inline* function inside the loop in main().
            * Looping over an array in main() (rather than checking a
              single variable).
            * Dynamically allocating the array with calloc(), rather than
              declaring an array and initializing with memset().
            * Acting on two different variables (m1, m2) in each branch
              of the 'if' statement in main() (if the two branches after
              the 'if' execute the same code, gcc is clever enough to
              recognize this and optimize the 'if' away).
            * Printing the resulting values of the variables modified in
              the loop (otherwise gcc may optimize away the entire loop
              inside main()).

       Compile with at least -O2 (on x86) to see a difference in
       performance due to __builtin_expect().
    */

    static __attribute__ ((noinline)) int
    f(int a)
    {
        return a;
    }

    int
    main(int argc, char *argv[])
    {
        int *p;
        int j, k, m1, m2, nloops;

        if (argc != 2 || strcmp(argv[1], "--help") == 0) {
            fprintf(stderr, "Usage: %s num-loops\n", argv[0]);
            exit(EXIT_FAILURE);
        }

        m1 = m2 = 0;
        nloops = atoi(argv[1]);

        /* calloc() allocates an array and zeros its contents */

        p = calloc(BSIZE, sizeof(int));
        if (p == NULL) {
            perror("calloc");
            exit(EXIT_FAILURE);
        }

    #if defined(BREAK_STEP) && BREAK_STEP > 0

        /* This provides us with a way to inject some values into the
           array that differ from our expected test value, in order
           to get an idea of how much the __builtin_expect()
           optimization is negatively affected by unexpected values. */

        for (k = 0, j = 0; j < BSIZE; j += BREAK_STEP) {
            p[j] += BINCR;
            k++;
        }

        printf("Adjusted %d items by %d\n", k, BINCR);

    #endif

        for (j = 0; j < nloops; j++) {
            for (k = 0; k < BSIZE; k++) {
    #ifdef DONT_EXPECT
                if (p[k]) {
    #else
                if (__builtin_expect(p[k], EXPECT_RESULT)) {
    #endif
                    m1 = f(++m1);
                } else {
                    m2 = f(++m2);
                }
            }
        }

        printf("%d, %d\n", m1, m2);

        exit(EXIT_SUCCESS);
    }

(You can download the test code here.)

The program essentially repeatedly scans a one-million-element integer array whose contents are zero (in the default case). Using the program, we can time the results of the checks that are performed either with or without using __builtin_expect().

For example, here we scan the array without __builtin_expect():

    $ cc -DDONT_EXPECT -O3 builtin_expect_test.c -o bn
    $ /usr/bin/time -f "%E real, %U user, %S sys" ./bn 1000
    0, 1000000000
    0:02.68 real, 2.67 user, 0.00 sys

In this case, the program looped one thousand times through the array, to perform a total of one billion checks, and the real time for execution was 2.68 seconds. (The test machine is an Intel Core Duo 2.53GHz, and the gcc version is 4.6.3.)

Here's what happens if we employ __builtin_expect(), telling the compiler that the expected result of the test is 0.

    $ cc -DEXPECT_RESULT=0 -O3 builtin_expect_test.c -o b0
    $ /usr/bin/time -f "%E real, %U user, %S sys" ./b0 1000
    0, 1000000000
    0:02.28 real, 2.28 user, 0.00 sys

The execution time fell to 2.28 seconds. In other words (for this particular CPU, compiler version, and program), __builtin_expect() improved the execution time of each check by 0.4 nanoseconds (0.4 seconds for one billion checks).

Well and good. What if we tell __builtin_expect() to expect the wrong value?

    $ cc -DEXPECT_RESULT=1 -O3 builtin_expect_test.c -o b1
    $ /usr/bin/time -f "%E real, %U user, %S sys" ./b1 1000
    0, 1000000000
    0:04.19 real, 4.18 user, 0.00 sys

In this case, unsurprisingly, we made each check run slower, by about 1.5 (i.e., 4.19 - 2.68) nanoseconds.

So, should you use __builtin_expect()?

You should only use __builtin_expect() (or the Linux kernel's likely() and unlikely()) if it's "very likely" that your code will follow the predicted branch. How much is "very likely"? If you're looking for actual numbers, the answer will depend on your compiler version, CPU, and code. But to illustrate that you should generally avoid these optimizations unless your code is very likely to follow one branch, here are some further tests using the above code.

In this test, the program first injects some nonzero values into the array before doing tests for zero using __builtin_expect(). Nonzero values are placed at every tenth element in the array:

    $ cc -DEXPECT_RESULT=0 -DBREAK_STEP=10 -O3 builtin_expect_test.c -o b0
    $ /usr/bin/time -f "%E real, %U user, %S sys" ./b0 1000
    100000000, 900000000
    0:02.79 real, 2.76 user, 0.01 sys

Note what happened. Even though most array elements contained the expected zero value, execution speed was actually worse (2.79 seconds versus 2.68 seconds) than not using __builtin_expect() at all! In fact, even when only one in ten thousand values is nonzero, we're still at only roughly the break-even point:

    $ cc -DEXPECT_RESULT=0 -DBREAK_STEP=10000 -O3 builtin_expect_test.c -o b0
    $ /usr/bin/time -f "%E real, %U user, %S sys" ./b0 1000
    100000, 999900000
    0:02.66 real, 2.64 user, 0.00 sys

The point where using these optimizations becomes worthwhile will depend on the factors mentioned above, but you should really only use them when your predicted path is very likely. If your predicted path is not very likely, then you're better off avoiding them, as you'll actually slow your code down a little.

Compiler-assisted run-time profiling

The gcc documentation contains the following advice regarding the use of __builtin_expect():

"In general, you should prefer to use actual profile feedback for this (-fprofile-arcs), as programmers are notoriously bad at predicting how their programs actually perform. However, there are applications in which this data is hard to collect."

That's good concise advice. To put things another way, the only time you should use __builtin_expect() is when you can't use compiler-assisted runtime optimization (perhaps because your program has no easily repeatable pattern of execution; the Linux kernel is an obvious example) and you are certain that your predicted code path is very (very) likely to be the one that will be taken.

The example program above does have a very predictable, repeatable flow of execution. Let's see what happens when we use compiler-assisted optimization. Building the program now involves two steps: a profiling phase and an optimized compile. In the profiling phase, we build and run an instrumented version of the executable. We build as follows:

    $ cc -O3 -DDONT_EXPECT -fprofile-generate builtin_expect_test.c -o bn.prof

(The -fprofile-generate option implies -fprofile-arcs, as well as one or two other profiling options.)

We then run the executable, which generates profiling information that is stored in a file (with the extension .gcda).

    $ /usr/bin/time -f "%E real, %U user, %S sys" ./bn.prof 1000
    0, 1000000000
    0:05.39 real, 5.37 user, 0.00 sys

Note that, because of the instrumentation code, the profiled version runs rather slower than the normally compiled code. Running this code created a file containing the profiling results:

    $ ls *.gcda
    builtin_expect_test.gcda

We then employ the -fprofile-use compiler option, which (implicitly) uses the profiling results to create an optimized executable.

    $ cc -O3 -DDONT_EXPECT -fprofile-use builtin_expect_test.c -o bn.opt

And then we run the optimized program:

    $ /usr/bin/time -f "%E real, %U user, %S sys" ./bn.opt 1000
    0, 1000000000
    0:01.95 real, 1.94 user, 0.00 sys

This optimized version runs significantly faster (1.95 versus 2.28 seconds) than our version that used __builtin_expect(). This is because, in addition to the branching in the if statement, the branching in the for loops was also optimized.

It's left as an exercise for the reader to show that employing __builtin_expect() (to expect 0) in conjunction with compiler-assisted optimization doesn't improve things: the compiler already optimizes the if branching as well as the programmer-directed optimization. One other interesting exercise is, of course, to compare the assembler (cc -S) code generated for each of the above cases.

2012-07-14

The Korean translation of TLPI is out

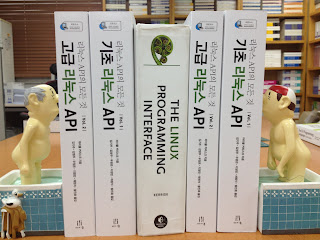

The Korean translation of The Linux Programming Interface came out on 10 July. You can find further information about the translation in my earlier blog post and on the translations page of the TLPI web site.

The publisher kindly sent me a few photographs of the finished work:

(The table on the left corresponds to page 194 in the English original, and the figure on the right corresponds to page 902.)

(You have to love the bookends...)

2012-06-25

Some licensing changes to the source code

When originally published, the code from TLPI was licensed under the GNU Affero General Public License, version 3.

One reader commented that they are using one of my library functions as part of their everyday "toolbox" of handy pieces of code. As things currently stand, that would mean that if they redistributed any code that linked against that function or ran that code as part of a larger network application, then the complete source code of their application would also need to be licensed under the Affero GPL (i.e., all source code linked to my library functions would also need to be distributed).

That scenario wasn't really my intention (I'd overlooked the case of library functions in my code). So, I've now relicensed the library functions in TLPI under the GNU Lesser General Public License, version 3. The relicensing applies to all files in the lib/ directory of the source code distribution, and the changed licenses are already present in the latest source code tarball and online versions of the programs that went up in an update of the web site a couple of weeks ago.

Aside from the licensing changes, it's worth mentioning that since publication of TLPI, I've made a number of small fixes to various example programs. The fixes are listed in the CHANGES file that is distributed as part of the source code tarball.

2012-06-22

Korean translation of TLPI available soon

The Korean publisher Acorn is completing the final pieces in the production of the Korean translation of TLPI, which should be published next month.

The translation will be published in two volumes that together run to nearly 2000 pages. As well as splitting the book into two volumes, the chapters are reorganized somewhat. Chapters 29 to 33 (POSIX threads) of the English original move to a later position in the translation, so that they form the start of the second volume. (In a two-volume version, this reordering seemed a little better both to the publisher and to me.) The two volumes are thus:

- Volume 1 (Files, memory, processes, signals, timers), which corresponds to Chapters 1 to 28 and 34 to 42 of the English original. (1112 pages)

- Volume 2 (Threads, IPC, sockets, advanced I/O), which corresponds to Chapters 29 to 33 and 43 to 64 of the English original. (848 pages)

Considering that translation work began just 18 months ago, the speed of publication is impressive. One of the things that assisted was that the translation was the work of a team of translators.

It is of course an honor and a pleasure to see my book translated, for which I thank both the publisher and translators. Among the translators, a special thanks to Kiju Kim (nontechnical private blog), who kept me up to date on progress and also submitted a number of error reports on the English original while translating. And though I'll never be able to read them, I look forward to being able to hold copies of the Korean translation in some weeks.

2012-04-09

TLPI third print run now available

I'm happy to announce that the third print run of TLPI is now printed, and should be available for sale shortly. The third print run incorporates the 131 errata shown on the errata page. (If that seems like a lot of errata, take a look at this FAQ.)
