Are you looking to get your hands dirty with Auto-GPT? Look no further! In this article, we'll guide you through the straightforward installation process, enabling you to effortlessly set up Auto-GPT and unlock its powerful capabilities. Say goodbye to complex setups and hello to enhanced language generation in just a few simple steps.

To use Auto-GPT, you need Python installed on your computer and an OpenAI API key. The key lets Auto-GPT call the GPT-4 and GPT-3.5 APIs; Auto-GPT can also draw on other resources such as internet search engines and popular websites. Once it is configured, you interact with Auto-GPT through natural-language commands, and the AI agent carries out the requested task automatically.

This article walks through setting up and running Auto-GPT with Docker. Other popular installation methods are covered towards the end.

Benefits of using Docker for running Auto-GPT

Docker is a containerization technology that allows developers to create, deploy, and run applications in a consistent and isolated environment. It enables the packaging of an application and all its dependencies into a single container, which can be easily distributed and run on any machine that has Docker installed.

Using Docker to run Auto-GPT provides several benefits:

- It allows you to run Auto-GPT in an isolated and reproducible environment, which ensures that the dependencies and configurations required to run Auto-GPT are consistent across different machines. This can be especially useful when collaborating on a project or when deploying Auto-GPT to a production environment.

- Docker provides a secure sandboxed environment, which can help prevent any potential harm to your computer from continuous mode malfunctions or accidental damage from commands.

- Docker simplifies the installation and configuration of Auto-GPT by packaging it in a container that includes all the necessary dependencies and libraries. You don't have to install and configure these dependencies manually, which can be time-consuming and error-prone.

Overall, using Docker to run Auto-GPT provides a convenient and secure solution for developing and deploying Auto-GPT in a consistent and reproducible manner.

Software Requirements

Getting an API key

Fig 1. Creating API key

Setting up Auto-GPT with Docker

First, here is a step-by-step guide to setting up Auto-GPT using Docker.

1. Make sure Python and Docker are installed on your system and that the Docker daemon is running (see Software Requirements)

Fig 2. Command Prompt

2. Open CMD and pull the latest image from Docker Hub using the following command:

docker pull significantgravitas/auto-gpt

Fig 3. Pulling image from Docker Hub

Note that this command fails with an error if the Docker daemon is not running.

Fig 4. Docker Image

Once the pull completes, the significantgravitas/auto-gpt image appears in your local Docker images.

3. Create a folder for Auto-GPT

4. In the folder, create a file named docker-compose.yml with the following contents:

version: "3.9"
services:
  auto-gpt:
    image: significantgravitas/auto-gpt
    depends_on:
      - redis
    env_file:
      - .env
    environment:
      MEMORY_BACKEND: ${MEMORY_BACKEND:-redis}
      REDIS_HOST: ${REDIS_HOST:-redis}
    profiles: ["exclude-from-up"]
    volumes:
      - ./auto_gpt_workspace:/app/auto_gpt_workspace
      - ./data:/app/data
      ## allow auto-gpt to write logs to disk
      - ./logs:/app/logs
      ## uncomment following lines if you have / want to make use of these files
      #- ./azure.yaml:/app/azure.yaml
      #- ./ai_settings.yaml:/app/ai_settings.yaml
  redis:
    image: "redis/redis-stack-server:latest"

5. Download Source code (zip) from the latest stable release

6. Extract the zip-file into a folder.

Fig 5. Source folder
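Steps 1 and 2 above assume the Docker CLI is installed and its daemon is running. The following is a minimal sketch of a preflight check you could run before pulling the image. The function name docker_ready is hypothetical, and the check relies on the assumption that `docker info` exits with a non-zero status when the daemon cannot be reached.

```python
import shutil
import subprocess


def docker_ready() -> bool:
    """Return True if the docker CLI is on PATH and the daemon responds."""
    if shutil.which("docker") is None:
        return False  # Docker CLI is not installed or not on PATH
    try:
        # Assumption: `docker info` returns a non-zero exit code
        # when the daemon is unreachable.
        result = subprocess.run(
            ["docker", "info"],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


if __name__ == "__main__":
    if docker_ready():
        print("Docker is installed and the daemon is running.")
    else:
        print("Docker is missing or its daemon is not running.")
```

If the check reports that Docker is not ready, start Docker Desktop (or the Docker service) before retrying the pull command.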

Configuration using Docker

1. After downloading and unzipping the folder, find the file named .env.template in the main Auto-GPT folder. This file may be hidden by default in some operating systems due to the dot prefix. To reveal hidden files, follow the instructions for your specific operating system: Windows, macOS

2. Create a copy of .env.template and call it .env; if you're already in a command prompt/terminal window: use cp .env.template .env

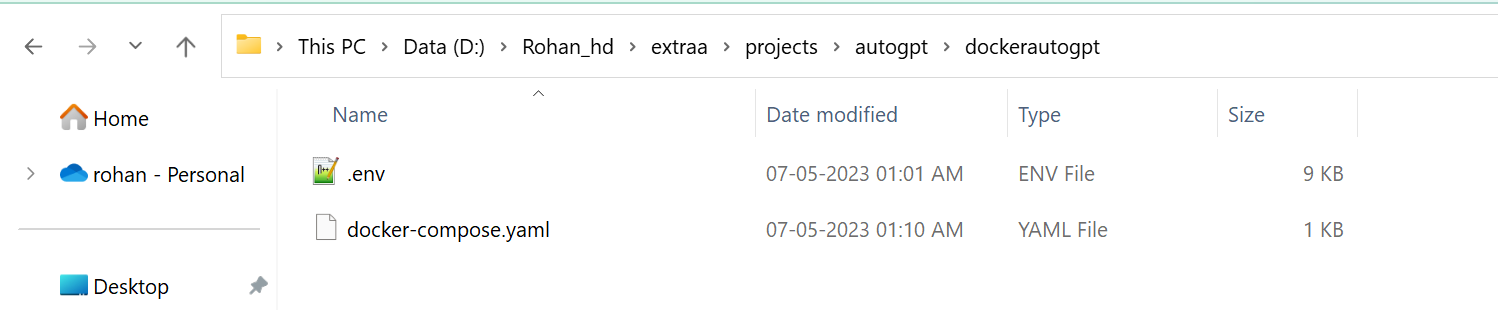

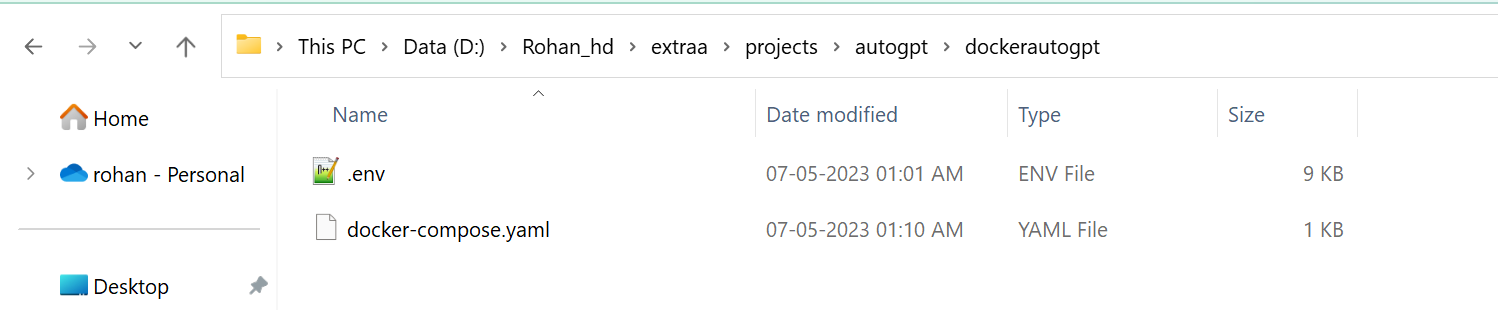

3. Now you should have only two files in your folder – docker-compose.yml and .env

Fig 6. Docker-compose and .env files

4. Open the .env file in a text editor

5. Find the line that says OPENAI_API_KEY=

6. After the =, enter your unique OpenAI API Key without any quotes or spaces.

7. If you do not have a key yet, see the "Getting an API key" section above.

8. Save and close .env file
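The configuration steps above can also be scripted. The sketch below copies .env.template to .env and fills in the OPENAI_API_KEY line; the helper names set_env_value and create_env_file are hypothetical and not part of Auto-GPT, and the script assumes the template uses plain KEY=value lines.

```python
from pathlib import Path


def set_env_value(text: str, key: str, value: str) -> str:
    """Return text with the line starting `key=` set to `key=value`.

    The pair is appended if no such line exists. Commented-out
    lines (starting with '#') are left untouched.
    """
    lines = text.splitlines()
    replaced = False
    for i, line in enumerate(lines):
        if line.startswith(f"{key}="):
            lines[i] = f"{key}={value}"
            replaced = True
    if not replaced:
        lines.append(f"{key}={value}")
    return "\n".join(lines) + "\n"


def create_env_file(folder: Path, api_key: str) -> Path:
    """Copy .env.template to .env inside folder and set OPENAI_API_KEY."""
    template = folder / ".env.template"
    env_file = folder / ".env"
    content = template.read_text(encoding="utf-8")
    # strip() removes stray spaces; the key is written without quotes
    updated = set_env_value(content, "OPENAI_API_KEY", api_key.strip())
    env_file.write_text(updated, encoding="utf-8")
    return env_file
```

For example, calling create_env_file(Path("."), "your-key-here") from the Auto-GPT folder would produce the .env file described in steps 2 to 8. Avoid committing the resulting file to version control, since it contains your secret key.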

Running Auto-GPT with Docker

The easiest way is to use docker-compose. Run the commands below in your Auto-GPT folder.

1. Build the image. If you have pulled the image from Docker Hub, skip this step

docker-compose build auto-gpt

2. Run Auto-GPT

docker-compose run --rm auto-gpt

3. By default, this will also start and attach a Redis memory backend. If you do not want this, comment out or remove the depends_on: - redis and redis: sections from docker-compose.yml

4. You can pass extra arguments, e.g., running with --gpt3only and --continuous:

docker-compose run --rm auto-gpt --gpt3only --continuous

Fig 7. Auto-GPT Installed
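If you launch Auto-GPT often with different flags, the run command above can be wrapped in a small script. This is only a sketch: build_run_command and run_auto_gpt are hypothetical names, and the script simply forwards whatever extra arguments you give it (such as --gpt3only or --continuous) to the same docker-compose command shown in the steps above.

```python
import subprocess
import sys
from typing import List, Sequence


def build_run_command(extra_args: Sequence[str] = ()) -> List[str]:
    """Build the docker-compose invocation, appending any extra arguments."""
    return ["docker-compose", "run", "--rm", "auto-gpt", *extra_args]


def run_auto_gpt(extra_args: Sequence[str] = ()) -> int:
    """Run Auto-GPT from the current folder and return its exit code."""
    # Must be executed in the folder containing docker-compose.yml and .env
    return subprocess.run(build_run_command(extra_args)).returncode


if __name__ == "__main__":
    # Forward command-line arguments, e.g.:
    #   python run_autogpt.py --gpt3only --continuous
    sys.exit(run_auto_gpt(sys.argv[1:]))
```

Passing the arguments as a list rather than a single string avoids shell quoting problems and makes it harder to mistype a flag prefix.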

Other methods without Docker

Setting up Auto-GPT with Git

1. Make sure you have Git installed for your OS

2. To execute the given commands, open a CMD, Bash, or PowerShell window. On Windows: press Win+X and select Terminal, or Win+R and enter cmd

3. First clone the repository using the following command:

git clone -b stable https://github.com/Significant-Gravitas/Auto-GPT.git

4. Navigate to the directory where you downloaded the repository

cd Auto-GPT

Manual Setup

1. Download Source code (zip) from the latest stable release

2. Extract the zip-file into a folder

Configuration

1. Find the file named .env.template in the main Auto-GPT folder. This file may be hidden by default in some operating systems due to the dot prefix. To reveal hidden files, follow the instructions for your specific operating system: Windows, macOS

2. Create a copy of .env.template and call it .env; if you're already in a command prompt/terminal window: cp .env.template .env

3. Open the .env file in a text editor

4. Find the line that says OPENAI_API_KEY=

5. After the =, enter your unique OpenAI API Key without any quotes or spaces

6. Save and close the .env file

Run Auto-GPT without Docker

Simply run the startup script in your terminal. This will install any necessary Python packages and launch Auto-GPT. Note that the script throws an error if the configuration above is not set up properly, which is why Docker remains the recommended and easiest way to run Auto-GPT.

On Linux or macOS:

./run.sh

On Windows:

.\run.bat

If this gives errors, make sure you have a compatible Python version installed.
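A quick way to rule out a version mismatch is to compare the running interpreter against the minimum your Auto-GPT release requires. The sketch below assumes a minimum of Python 3.10; that value and the name is_compatible are assumptions for illustration, so check the requirements of the release you downloaded and adjust MIN_PYTHON accordingly.

```python
import sys

# Assumption: minimum supported version; confirm against the
# requirements of the Auto-GPT release you downloaded.
MIN_PYTHON = (3, 10)


def is_compatible(version, minimum=MIN_PYTHON) -> bool:
    """Return True if the (major, minor, ...) version meets the minimum."""
    return tuple(version[:2]) >= tuple(minimum)


if __name__ == "__main__":
    current = sys.version_info
    if is_compatible(current):
        print(f"Python {current.major}.{current.minor} meets the minimum.")
    else:
        print(
            f"Python {current.major}.{current.minor} is older than "
            f"{MIN_PYTHON[0]}.{MIN_PYTHON[1]}; install a newer version."
        )
```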

Conclusion

In conclusion, if you're looking for a hassle-free way to install Auto-GPT, Docker is the recommended choice. By following our comprehensive guide, you can effortlessly set up Auto-GPT using Docker, ensuring a streamlined installation process, consistent environment configuration, and seamless deployment on different platforms. With Docker, bid farewell to compatibility concerns and embrace a straightforward and efficient Auto-GPT installation experience. Empower your language generation capabilities today with the power of Docker and Auto-GPT.

Author Bio

Rohan is an accomplished AI Architect professional with a post-graduate in Machine Learning and Artificial Intelligence. With almost a decade of experience, he has successfully developed deep learning and machine learning models for various business applications. Rohan's expertise spans multiple domains, and he excels in programming languages such as R and Python, as well as analytics techniques like regression analysis and data mining. In addition to his technical prowess, he is an effective communicator, mentor, and team leader. Rohan's passion lies in machine learning, deep learning, and computer vision.

You can follow Rohan on LinkedIn