Threat Actors are Interested in Generative AI, but Use Remains Limited

Mandiant

Written by: Michelle Cantos, Sam Riddell, Alice Revelli

Since at least 2019, Mandiant has tracked threat actor interest in, and use of, AI capabilities to facilitate a variety of malicious activity. Based on our own observations and open source accounts, adoption of AI in intrusion operations remains limited and primarily related to social engineering.

In contrast, information operations actors of diverse motivations and capabilities have increasingly leveraged AI-generated content, particularly imagery and video, in their campaigns, likely due at least in part to the readily apparent applications of such fabrications in disinformation. Additionally, the release of multiple generative AI tools in the last year has led to a renewed interest in the impact of these capabilities.

We anticipate that generative AI tools will accelerate threat actor incorporation of AI into information operations and intrusion activity. Mandiant judges that such technologies have the potential to significantly augment malicious operations in the future, enabling threat actors with limited resources and capabilities, similar to the advantages provided by exploit frameworks including Metasploit or Cobalt Strike. And while adversaries are already experimenting, and we expect to see more use of AI tools over time, effective operational use remains limited.

Augmenting Unreality: Leveraging Generative AI in Information Operations

Mandiant judges that generative AI technologies have the potential to significantly augment information operations actors’ capabilities in two key aspects: the efficient scaling of activity beyond the actors’ inherent means; and their ability to produce realistic fabricated content toward deceptive ends.

Generative AI will enable information operations actors with limited resources and capabilities to produce higher quality content at scale

- Models could be used to create content like articles or political cartoons in line with a specific narrative or to produce benign filler content to backstop inauthentic personas.

- Outputs from conversational AI chatbots based on large language models (LLMs) may also reduce linguistic barriers previously faced by operators targeting audiences in a foreign language.

Hyper-realistic AI-generated content may have a stronger persuasive effect on target audiences than content previously fabricated without the benefit of AI technology

- We have previously observed threat actors post fabricated content to support desired narratives; for example, the Russian “hacktivist” group CyberBerkut has claimed to leak phone call recordings revealing malevolent activity by various organizations. AI models trained on real individuals' voices could allow for the creation of audio fabrications in a similar vein, including inflammatory content or fake public service announcements.

Information Operations Actors’ Adoption of Generative AI Technologies

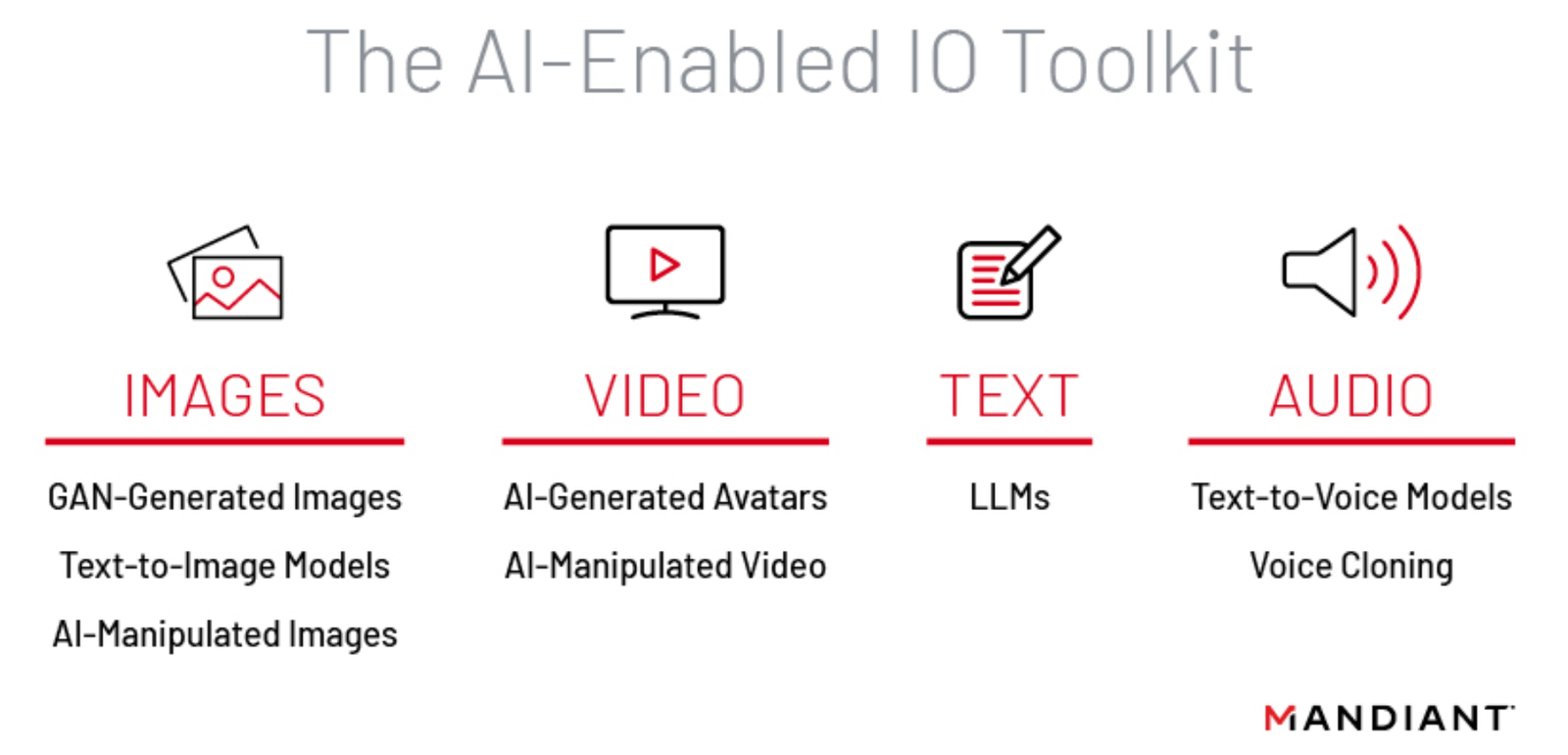

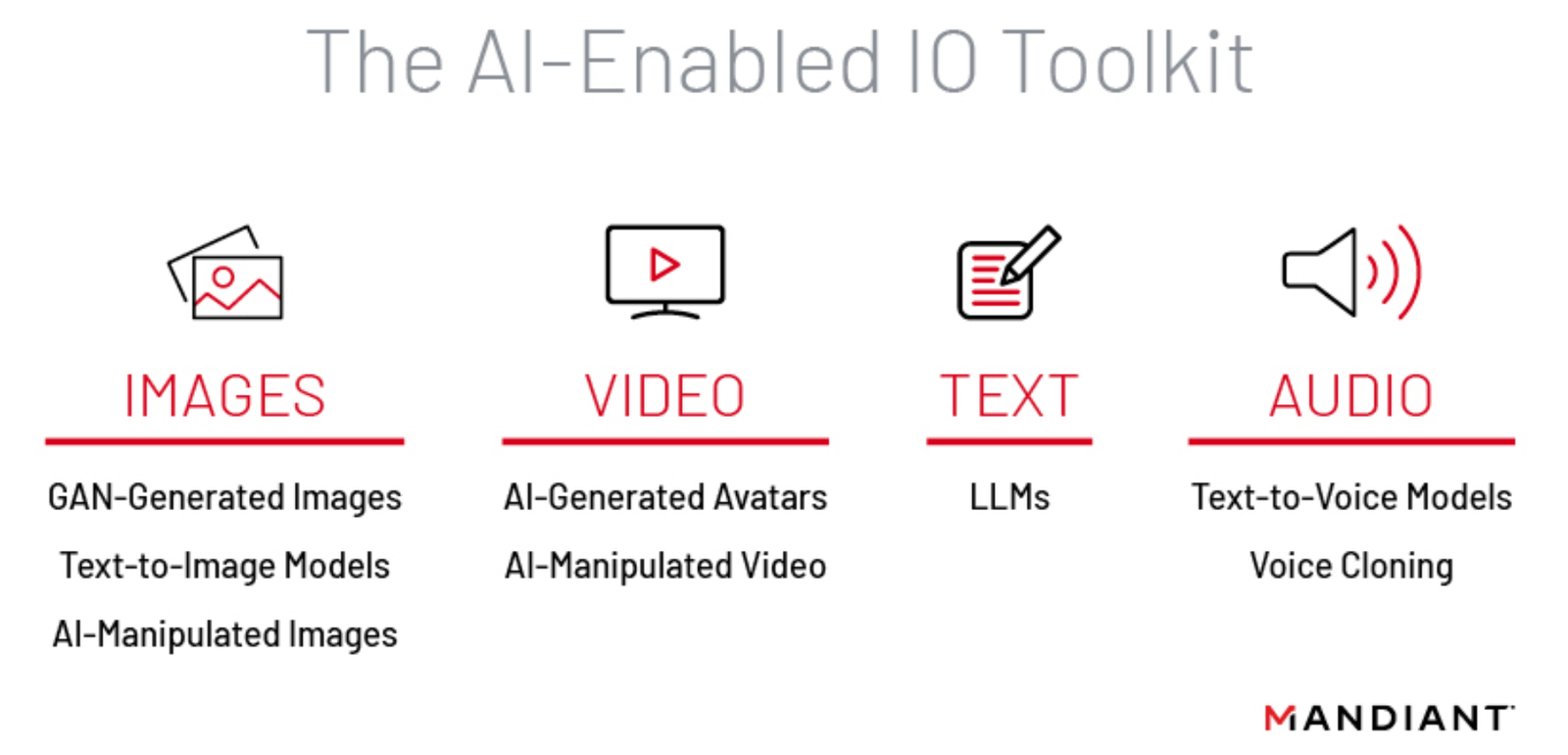

Mandiant anticipates that information operations actors' adoption of generative AI will vary by media form – text, images, audio, and video – due to factors including the availability and capabilities of publicly available tools and the effectiveness of each media form in evoking an emotional response (Figure 1). We believe that AI-generated images and videos are most likely to be employed in the near term, and while we have not yet observed operations using LLMs, we anticipate that their potential applications could lead to their rapid adoption.

Figure 1: AI-enabled IO toolkit leveraging various media forms

AI-Generated Imagery

Current image generation capabilities can broadly be broken into two categories, generative adversarial networks (GANs) and generative text-to-image models:

- GANs produce realistic headshots of human subjects.

- Generative text-to-image models create customized images based on text prompts.

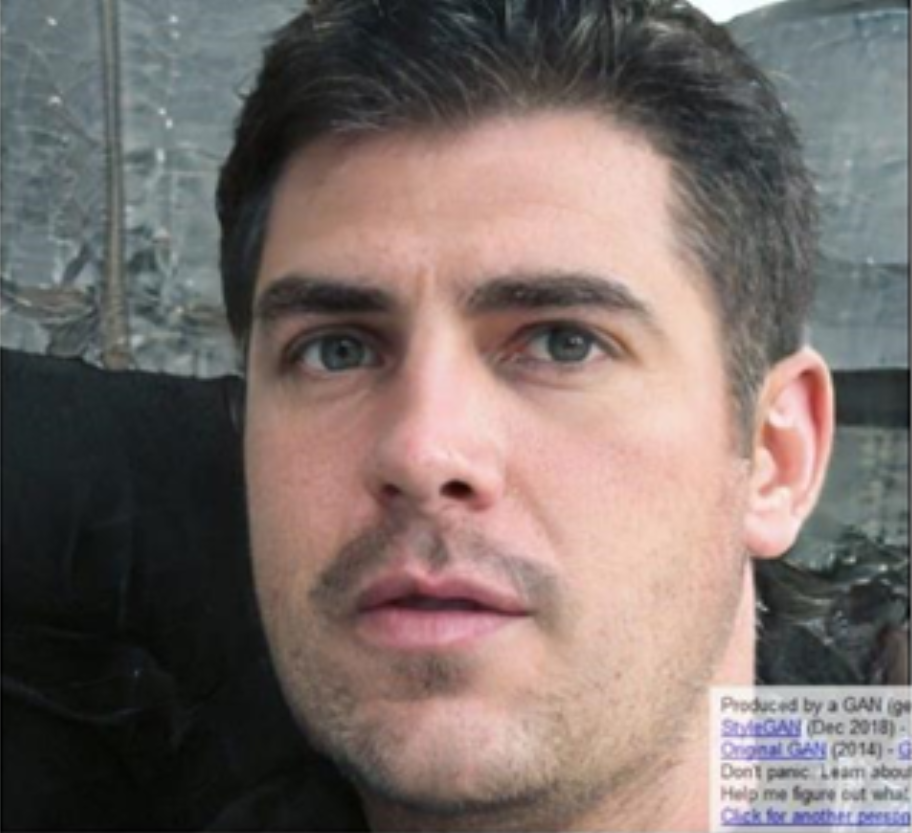

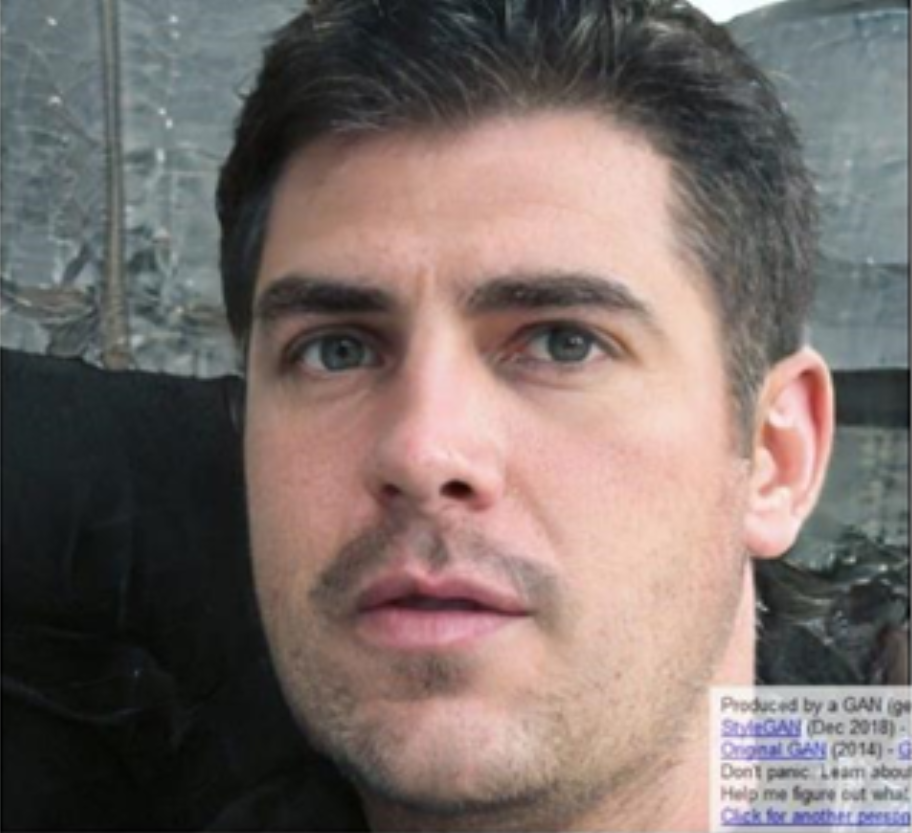

Since 2019, Mandiant has identified numerous instances of information operations leveraging GANs, typically for use in profile photos of inauthentic personas, including by actors aligned with nation-states including Russia, the People's Republic of China (PRC), Iran, Ethiopia, Indonesia, Cuba, Argentina, Mexico, Ecuador, and El Salvador, along with non-state actors such as individuals on the 4chan forum. We judge that the publicly available nature of GAN-generated image tools such as the website thispersondoesnotexist.com has likely contributed to their frequent usage in information operations (Figure 2). Actors have also taken steps to obfuscate the AI-generated origin of their profile photos through tactics like adding filters or retouching facial features.

Figure 2: An AI-generated image used as a profile photo by a persona in a pro-Cuban government network displayed a text box showing that the image was generated using the website thispersondoesnotexist.com

While we have not observed the same volume of activity leveraging text-to-image models, we anticipate that their use will increase over time due to the recent release of publicly available tools and improvements in the technology.

Text-to-image models likely also pose a more significant deceptive threat than GANs:

- Text-to-image models may be applied to a wider range of use cases. While we have observed GANs used primarily to assist in persona building, text-to-image models may be used more broadly to support various narratives and fabrications.

- Content generated by text-to-image models may be harder to detect than content generated by GANs, both by individuals using manual techniques and by AI detection models.

We have observed disinformation spread via text-to-image models in various instances, including by the pro-PRC DRAGONBRIDGE influence campaign.

- In March 2023, DRAGONBRIDGE leveraged several AI-generated images in order to support narratives negatively portraying U.S. leaders (Figure 3). One such image used by DRAGONBRIDGE was originally produced by the journalist Eliot Higgins, who stated in a tweet that he used Midjourney to generate the images, suggesting that he did so to demonstrate the tool’s potential uses.

- As with typical DRAGONBRIDGE activity, we did not observe these messages to garner significant engagement.

- In May 2023, U.S. stock market prices briefly dropped after Twitter accounts, including the Russian state media outlet, RT, and the verified account, @BloombergFeed, which posed as an account associated with the Bloomberg Media Group, shared an AI-generated image depicting an alleged explosion near the Pentagon.

Figure 3: Suspected DRAGONBRIDGE tweet (left) containing AI-generated image created by Eliot Higgins (right) using Midjourney

AI-Generated and Manipulated Video

Publicly available AI-generated and AI-manipulated video technology currently consists primarily of the following formats:

- Video templates featuring customizable AI-generated human avatars reciting text-to-voice speech, often used to support presentation-format media such as news broadcasts;

- AI-enabled video manipulation tools such as face swap tools, which superimpose the faces of individuals onto those in existing videos.

Mandiant has observed information operations leveraging such technologies to promote desired narratives since 2021, including those detailed here. As other forms of AI-generated video technology improve, such as those involving the impersonation of individuals, we expect to see them increasingly leveraged.

- In May 2023, Mandiant reported to customers on DRAGONBRIDGE’s use of an AI-generated "news presenter" in a short news segment-style video. We assessed that this video was likely created using a self-service platform offered by the AI company, D-ID (Figure 4).

- In 2022, the social media analytics firm Graphika separately reported that DRAGONBRIDGE used AI-generated presenters produced with a different video generation service, Synthesia, suggesting the campaign has used at least two AI video generation services.

- In March 2022, following the Russian invasion of Ukraine, an information operation promoted a fabricated message alleging Ukraine's capitulation to Russia through various means, including via a deepfake video of Ukrainian President Volodymyr Zelensky (Figure 5).

- In June 2021, Telegram assets tied to the Belarus-linked Ghostwriter campaign published AI-manipulated video clips in which politicians' images were superimposed onto scenes from movies or manipulated to look as though they were singing songs. At least one of those posts had a watermark indicating it was "made with Reface App."

- In April 2021, Mandiant identified an information operation in which social media accounts promoted a video appearing to leverage AI technology to insert a Mexican gubernatorial candidate's face into a movie scene to portray him as using drugs.

Figure 4: Screenshots from video containing AI-generated "news presenter" promoted by DRAGONBRIDGE, likely created using a platform offered by D-ID

Figure 5: A screenshot from a deepfake video showing Ukrainian President Zelensky announcing the country's capitulation to Russia in March 2022

AI-Generated Text

While Mandiant has thus far observed only a single instance of information operations actors referencing AI-generated text or LLMs, we anticipate that the recent emergence of publicly accessible tools and their ease of use could lead to their rapid adoption.

- In February 2023, DRAGONBRIDGE accounts accused ChatGPT, a conversational chatbot developed by OpenAI, of applying double standards to China and the U.S., citing a purported exchange in which the chatbot stated that China would be prohibited from shooting down civilian aircraft, including U.S. civilian-use balloons, over its territory.

AI-Generated Audio

Mandiant has observed little to no use of AI-generated audio in concerted information operations to date, although we have observed users of such technologies, such as those on 4chan, create audio tracks of public figures seemingly making violent, racist, and transphobic statements. AI-generated audio tools have existed for years and include capabilities such as text-to-voice generation, voice cloning, and impersonation. Text-to-voice generation is also a key feature of AI-generated video software.

Improving Social Engineering

Reconnaissance

Mandiant has previously highlighted ways in which threat actors can leverage AI-based tools to support their operations, including using these applications to process open source information and previously stolen data for reconnaissance and target selection.

- State-sponsored intelligence services can apply machine learning and data science tools to massive quantities of stolen and open-source data to improve data processing and analysis, allowing espionage actors to operationalize collected information with greater speed and efficiency. These tools can also identify patterns that improve tradecraft, such as identifying foreign individuals for intelligence recruitment in traditional espionage operations and crafting effective social engineering campaigns.

- At Black Hat USA 2016, researchers presented an AI tool called the Social Network Automated Phishing with Reconnaissance system, or SNAP_R, which suggests high-value targets and analyzes users' past Twitter activity to generate personalized phishing material based on a user's old tweets (see Figure 6).

- Mandiant has previously demonstrated how threat actors can leverage neural networks to generate inauthentic content for information operations.

- We identified indications of interest in LLMs by the North Korean cyber espionage actor APT43. Specifically, Mandiant observed evidence suggesting the group has logged on to widely available LLM tools. The group may potentially leverage LLMs to enable its operations; however, the intended purpose is unclear.

- In 2023, security researchers at Black Hat USA highlighted how prompt injection attacks integrated into LLMs could potentially support various stages of the attack lifecycle.

Figure 6: AI-generated tweet in 2016 that created lure content based on target's own previous tweets (source)

Lure Material

Threat actors can also use LLMs to generate more compelling material tailored for a target audience, regardless of the threat actor’s ability to understand the target’s language. LLMs can help malicious operators create text output that reflects natural human speech patterns, making phishing material more effective and initial compromises more likely to succeed.

- Open source reports suggest threat actors could use generative AI to create effective lure material to increase the likelihood of successful compromises, and that they may be using LLMs to enhance the complexity of the language used for their preexisting operations.

- Mandiant has noted evidence of financially motivated actors using manipulated video and voice content in business email compromise (BEC) scams, North Korean cyber espionage actors using manipulated images to defeat know your customer (KYC) requirements, and voice changing technology used in social engineering targeting Israeli soldiers.

- Media reports indicate financially motivated actors have developed a generative AI tool, WormGPT, which allows malicious operators to create more persuasive BEC messages. Additionally, this tool can allow threat actors to write customizable malware code.

- In March 2023, multiple media outlets reported that a Canadian couple was scammed out of $21,000 when someone using an AI-generated voice impersonated their son, as well as an attorney purportedly representing him over allegedly killing a diplomat in a car accident.

- Additionally, we have observed financially motivated actors advertising AI capabilities, including deepfake technology services, in underground forums. These capabilities could increase the effectiveness of cybercriminal operations such as social engineering, fraud, and extortion by making them seem more personal in nature.

Developing Malware

Mandiant anticipates that threat actors will increase their use of LLMs to support malware development. LLMs can help threat actors write new malware and improve existing malware, regardless of an attacker's technical proficiency or language fluency. While media reports suggest LLMs possess shortcomings in their malware generation that may require human intervention for correction, the ability of these tools to significantly assist in malware creation can still augment proficient malware developers, and enable those who might lack technical sophistication.

- Open source reports suggest financially motivated actors are advertising services in underground forums related to bypassing restrictions on LLMs that are designed to prevent LLMs from being used for malware development and propagation, as well as the generation of lure materials.

- Mandiant observed threat actors in underground forums advertising LLM services, sales, and API access as well as LLM-generated code between January and March 2023.

- A user uploaded a video claiming to bypass an LLM's safety features to get it to write malware that was able to circumvent one particular endpoint detection and response (EDR) solution’s defenses. The user then received payment after submitting this to a bug bounty program in late February 2023.

Outlook and Implications

Threat actors regularly evolve their tactics, leveraging and responding to new technologies as part of the constantly changing cyber threat landscape. Mandiant anticipates that threat actors of diverse origins and motivations will increasingly leverage generative AI as awareness and capabilities surrounding such technologies develop. For instance, we expect malicious actors will continue to capitalize on the general public’s inability to differentiate between what is authentic and what is counterfeit. Users and enterprises alike should be cautious about the information they ingest, as generative AI has led to a more pliable reality. However, while there is certainly threat actor interest in this technology, adoption has been limited thus far and may remain so in the near term.

NOTE: While threat actor use of generative AI remains nascent, the security community has an opportunity to outpace threat actors with defensive advancements, including generative AI adoption, to benefit practitioners and users. See here for more on how Mandiant defenders are using generative AI in their day-to-day work to identify threats faster, eliminate toil, and better solve for talent-related tasks that increase the speed and skill across these workflows.

Google has adopted policies across our products and services that address mis- and disinformation in the context of AI. For further reading on securing AI systems, check out Google SAIF, a conceptual framework to help collaboratively secure AI technology as it continues to grow. Inspired by security best practices Google has applied to software development, SAIF offers practitioners guidance on detection and response, adapting controls, automating defenses and more, specific to AI systems.