Today’s the day! We are excited to announce the mentor organizations accepted for this year’s Google Summer of Code (GSoC). Every year we receive more applications than we can accept and 2017 was no exception. After carefully reviewing almost 400 applications, we have chosen 201 open source projects and organizations, 18% of which are new to the program. Please see the program website for a complete list of the accepted organizations.

Interested in participating as a student? We will begin accepting student applications on Monday, March 20, 2017 at 16:00 UTC and the deadline is Monday, April 3, 2017 at 16:00 UTC.
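Since both times are given in UTC, students elsewhere may want to convert the deadline to local time. The following is a minimal sketch using only Python's standard library; the UTC+05:30 offset is an illustrative assumption, not something stated in the announcement.

```python
from datetime import datetime, timedelta, timezone

# Application window as stated in the announcement (both times are UTC).
opens = datetime(2017, 3, 20, 16, 0, tzinfo=timezone.utc)
closes = datetime(2017, 4, 3, 16, 0, tzinfo=timezone.utc)

# Hypothetical local zone for illustration: a fixed UTC+05:30 offset.
local_zone = timezone(timedelta(hours=5, minutes=30))

window = closes - opens
local_deadline = closes.astimezone(local_zone)

print("Window length:", window.days, "days")
print("Deadline in local time:", local_deadline.strftime("%Y-%m-%d %H:%M"))
```

Swap in your own offset to see when the deadline falls for you.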

Over the next three weeks, students who’d like to participate in Google Summer of Code should research the organizations and their Ideas Lists to explore which organizations are a good fit for their interests and skills and learn how they might contribute. Some of the most successful proposals have been completely new ideas submitted by students, so if you don’t see a project that appeals to you, don’t hesitate to suggest a new idea to the organization! There are contacts listed for each organization on their Ideas List — students should contact the organization directly to discuss their ideas. We also strongly encourage all interested students to reach out to and become familiar with the organization before applying.

You can find more information on our website, including a full timeline of important dates and program milestones. We also highly recommend all interested students read the Student Manual, FAQ and the Program Rules.

Congratulations to all of our mentor organizations! We look forward to working with all of you during Google Summer of Code 2017.

By Josh Simmons, Open Source Programs Office

Posts from February 2017

Google Code-in 2016: even more young developers

Thursday, February 23, 2017

Google Code-in (GCI), our contest introducing 13-17 year olds to open source software development, wrapped up last month with our largest contest to date: 1,340 students from 62 countries completed an impressive 6,379 tasks! Working with 17 open source organizations, students wrote code, created and edited documentation, designed UI elements and logos, conducted research, developed screencasts and videos teaching others about open source software, and helped find (and fix!) hundreds of bugs.

In June we will welcome all 34 grand prize winners (along with a mentor from each participating organization) for a fun-filled trip to the Bay Area. The trip will include meeting with Google engineers to hear about new and exciting projects, tours of the Google campuses and a fun day exploring San Francisco.

Keep an eye on the Google Open Source Blog in coming weeks for more stats on Google Code-in 2016, plus posts from the mentoring organizations describing some of their experiences with the contests and the work done by “their” students.

We are thrilled that Google Code-in was so popular this year. We hope to continue to grow and expand this contest in the future to introduce even more teenagers to the world of open source software development.

By Stephanie Taylor, Google Code-in Program Manager

General statistics

- 56.4% of students completed three or more tasks (earning themselves a fun Google Code-in 2016 t-shirt)

- 21% of students were female

- 30% of the participants from the USA were female

- This was the first Google Code-in for 1,143 students (85.3%)
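The percentages above can be cross-checked against the headline totals (1,340 students, 6,379 tasks). A small sketch of that arithmetic follows; the rounded head counts it derives are approximations computed from the published percentages, not figures reported by the program.

```python
# Totals quoted in the post.
students = 1340
tasks = 6379
first_timers = 1143

# Share of first-time participants, to compare with the quoted 85.3%.
first_time_share = round(first_timers / students * 100, 1)

# Average number of completed tasks per student.
tasks_per_student = round(tasks / students, 2)

# Approximate head counts implied by the quoted percentages (derived, not reported).
three_or_more = round(students * 0.564)
female = round(students * 0.21)

print(first_time_share, tasks_per_student, three_or_more, female)
```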

Student age

Participating schools

Students from 550 schools competed in this year’s contest. While Google Code-in is a program for individuals, every year some schools emerge as hot spots of participation. This year, these five schools had the most students taking part:

| School Name | Country | Number of Participants |
| --- | --- | --- |
| Dunman High School | Singapore | 185 |
| Sacred Heart Convent Senior Secondary School | India | 29 |
| Jayshree Periwal International School | India | 26 |
| Colegiul National Aurel Vlaicu | Romania | 23 |
| Ly Tu Trong Specialized High Schools | Vietnam | 14 |

Countries

We are pleased to have a new country participating in GCI this year: Mauritius! The chart below displays the ten countries with the most students completing at least 1 task.

Announcing TensorFlow 1.0

Wednesday, February 15, 2017

Originally posted on the Google Developer Blog

In just its first year, TensorFlow has helped researchers, engineers, artists, students, and many others make progress with everything from language translation to early detection of skin cancer and preventing blindness in diabetics. We're excited to see people using TensorFlow in over 6000 open source repositories online.

Today, as part of the first annual TensorFlow Developer Summit, hosted in Mountain View and livestreamed around the world, we're announcing TensorFlow 1.0:

It's faster: TensorFlow 1.0 is incredibly fast! XLA lays the groundwork for even more performance improvements in the future, and tensorflow.org now includes tips & tricks for tuning your models to achieve maximum speed. We'll soon publish updated implementations of several popular models to show how to take full advantage of TensorFlow 1.0 - including a 7.3x speedup on 8 GPUs for Inception v3 and 58x speedup for distributed Inception v3 training on 64 GPUs!

It's more flexible: TensorFlow 1.0 introduces a high-level API for TensorFlow, with tf.layers, tf.metrics, and tf.losses modules. We've also announced the inclusion of a new tf.keras module that provides full compatibility with Keras, another popular high-level neural networks library.

It's more production-ready than ever: TensorFlow 1.0 promises Python API stability (details here), making it easier to pick up new features without worrying about breaking your existing code.
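To put the speedup figures quoted above in perspective, they can be expressed as scaling efficiency: speedup divided by the number of GPUs. The sketch below assumes the speedups are measured relative to a single GPU, which the post does not state explicitly.

```python
def scaling_efficiency(speedup, num_gpus):
    """Fraction of ideal linear scaling achieved (1.0 means perfectly linear)."""
    return speedup / num_gpus

# Figures quoted in the announcement for Inception v3.
single_machine = scaling_efficiency(7.3, 8)   # 8 GPUs
distributed = scaling_efficiency(58, 64)      # 64 GPUs

print(f"8 GPUs:  {single_machine:.1%} of linear scaling")
print(f"64 GPUs: {distributed:.1%} of linear scaling")
```

Under that assumption, both configurations retain roughly 90% of ideal linear scaling.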

Other highlights from TensorFlow 1.0:


- Python APIs have been changed to resemble NumPy more closely. For this and other backwards-incompatible changes made to support API stability going forward, please use our handy migration guide and conversion script.

- Experimental APIs for Java and Go

- Higher-level API modules tf.layers, tf.metrics, and tf.losses - brought over from tf.contrib.learn after incorporating skflow and TF Slim

- Experimental release of XLA, a domain-specific compiler for TensorFlow graphs, that targets CPUs and GPUs. XLA is rapidly evolving - expect to see more progress in upcoming releases.

- Introduction of the TensorFlow Debugger (tfdbg), a command-line interface and API for debugging live TensorFlow programs.

- New Android demos for object detection and localization, and camera-based image stylization.

- Installation improvements: Python 3 docker images have been added, and TensorFlow's pip packages are now PyPI compliant. This means TensorFlow can now be installed with a simple invocation of pip install tensorflow.

We're thrilled to see the pace of development in the TensorFlow community around the world. To hear more about TensorFlow 1.0 and how it's being used, you can watch the TensorFlow Developer Summit talks on YouTube, covering recent updates from higher-level APIs to TensorFlow on mobile to our new XLA compiler, as well as the exciting ways that TensorFlow is being used:

The TensorFlow ecosystem continues to grow with new techniques like Fold for dynamic batching and tools like the Embedding Projector along with updates to our existing tools like TensorFlow Serving. We're incredibly grateful to the community of contributors, educators, and researchers who have made advances in deep learning available to everyone. We look forward to working with you on forums like GitHub issues, Stack Overflow, @TensorFlow, the [email protected] group, and at future events.

| Click here for a link to the livestream and video playlist (individual talks will be posted online later in the day). |

By Amy McDonald Sandjideh, Technical Program Manager, TensorFlow

Google Summer of Code 2016 wrap-up: LabLua

Wednesday, February 8, 2017

This is the final guest post from the students, mentors and organization administrators who participated in Google Summer of Code (GSoC) 2016. We’ve seen recaps of student work and lessons learned, and you can check out the rest of the series as we gear up for this year’s program.

LabLua is a lab at PUC-Rio dedicated to research on programming languages, with emphasis on the Lua language. Lua is a powerful, fast, lightweight, embeddable scripting language that is used in many industrial applications, and on many embedded systems and games.

We were very happy to participate in Google Summer of Code (GSoC) for the third time, and to mentor eight fine students that all completed their projects successfully. We thank them, and Google, for this extraordinary contribution to our research and development work.

Here is a brief summary of this year's projects:

Next Generation of the LuaRocks test suite - Robert Karasek

LuaRocks is the package manager for Lua modules. Its test suite was implemented as a big shell script that performed only black-box testing and ran only on Linux. The goal for this project was to port the test suite to Lua, improving its portability and allowing more types of tests so we could improve test coverage.

Robert ported the test suite to Lua using Busted. His new test suite, now merged into LuaRocks, runs on Linux and Mac OS X, accessible via Travis CI, as well as Windows, accessible via AppVeyor.

This was a welcome addition, bringing greater confidence to developers. Robert improved the checks in existing tests and wrote many new ones, including a new mock-server for testing a client API for uploading packages to the repository.

Typed Lua Typechecker - Tomasz Dyczek

Typed Lua provides static type checking for the Lua language. Typed Lua extends the syntax of Lua 5.3 to introduce type annotations, and performs local type inference for more precise detection of unannotated expressions.

Tomasz implemented the core of Typed Lua in Haskell. Tomasz's implementation parses code written in a syntax close to the abstract syntax of Typed Lua, then type checks the generated AST. Besides providing support for testing and reasoning about new features, Tomasz's typechecker can also be used to validate tests to be included in Typed Lua's test suite.

Classes and Generics for Typed Lua - Kevin Clancy

Kevin worked on the implementation of a class system for Typed Lua. He also added parametric polymorphism (generics) for classes and existing Typed Lua types, such as functions and tables.

Kevin's work currently lives in its own branch, but will be merged into the main branch soon. Meanwhile, Kevin has written a detailed post explaining all the features he implemented.

Improving Error Reporting in PEG Parsers - Matthew Allen Go

LPegLabel is an extension of LPeg, a pattern matching tool for Lua, based on Parsing Expression Grammars (PEGs). LPegLabel supports labeled failures, a facility that improves error reporting and recovery for PEG-based parsers.

The goal of this project was to use LPegLabel to write parsers with good error reporting. These parsers could then be used by the Lua community and also serve as a guide for LPegLabel users. Because LPegLabel is a young tool, another important contribution was to improve the tool's usability.

Matthew achieved both goals. He developed a parser for Lua 5.3, which has been incorporated into the new release of lua-parser (1.0.0), and improved LPegLabel’s usability with work on its API and documentation.
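The idea behind labeled failures can be sketched outside of Lua. The Python example below is not LPegLabel's API; it is a hypothetical, hand-written recognizer for sums such as (1+2), in which an ordinary failure is a None return that an ordered choice may backtrack over, while a labeled failure is an exception that propagates past the choice and names what was expected. The label names (ErrExpr, ErrOperand, ErrClose, ErrTrailing) are invented for this illustration.

```python
class LabeledFailure(Exception):
    """A failure that carries a label and is not backtracked over."""
    def __init__(self, label, pos):
        super().__init__(f"{label} at position {pos}")
        self.label = label
        self.pos = pos

def expect(result, label, pos):
    """Turn an ordinary failure (None) into a labeled failure."""
    if result is None:
        raise LabeledFailure(label, pos)
    return result

def literal(s, i, ch):
    """Match a single character; return the new position or None."""
    return i + 1 if i < len(s) and s[i] == ch else None

def number(s, i):
    """Match one or more digits; return the new position or None."""
    j = i
    while j < len(s) and s[j].isdigit():
        j += 1
    return j if j > i else None

def atom(s, i):
    # Ordered choice: a number, or a parenthesized expression.
    j = number(s, i)
    if j is not None:
        return j
    j = literal(s, i, "(")
    if j is None:
        return None  # ordinary failure: the caller may try something else
    # After '(' the parser is committed, so problems become labeled failures.
    j = expect(expr(s, j), "ErrExpr", j)
    return expect(literal(s, j, ")"), "ErrClose", j)

def expr(s, i):
    # An atom followed by zero or more '+' atom pairs.
    j = atom(s, i)
    if j is None:
        return None
    while True:
        k = literal(s, j, "+")
        if k is None:
            return j
        j = expect(atom(s, k), "ErrOperand", k)

def parse(s):
    """Return 'ok', or 'Label@position' for the first labeled failure."""
    try:
        end = expect(expr(s, 0), "ErrExpr", 0)
        expect(end if end == len(s) else None, "ErrTrailing", end)
        return "ok"
    except LabeledFailure as failure:
        return f"{failure.label}@{failure.pos}"

print(parse("(1+2)"))
print(parse("(1+)"))
print(parse("(1+2"))
```

Without labels, all three inputs that fail would simply report "no match"; with them, the parser can say which construct was incomplete and where.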

Improving elasticsearch-lua tests and build - Dhaval Kapil

Elasticsearch is a distributed and scalable search engine written in Java that offers a REST API accessed through JSON. During GSoC 2015, Dhaval implemented elasticsearch-lua, a client for the Lua language following a model similar to clients written in Python and PHP.

During GSoC 2016, Dhaval worked on improving elasticsearch-lua. He added a test suite, documented the entire codebase, and updated the current client to work with the newest version of Elasticsearch.

Dhaval went above and beyond, creating a new library called luaver. This work was motivated by having to frequently switch between different versions of Lua while developing the test suite. A full blog post about his project can be found here.

Admin Center and Elasticsearch integration for Sailor - Nikhil Ramesh

Sailor is a web framework with a model-view-controller (MVC) architecture. Like other web frameworks, such as Ruby on Rails and Django, it is designed to make development faster by making some assumptions and conventions and encouraging principles like Don’t Repeat Yourself (DRY).

Nikhil focused on extending Sailor. The first feature he worked on was an Admin Center, which is a web interface for configuring an application. He also integrated Sailor and elasticsearch-lua, allowing Elasticsearch indexes to be stored as Sailor Models. His work is currently pending as a pull request and will soon be merged.

Extending the online tutorial of Céu with Emscripten and SDL - Margarit Vicentiu

Céu is a language for developing reactive applications such as video games and embedded systems. Its compiler generates output in plain C to integrate easily with the underlying platform (e.g. Arduino, SDL). For this project, we wanted to integrate Céu with Emscripten in order to run applications in a web browser.

Vicentiu started with Céu’s online tutorial, which is a server-side application: the user types code in a text area and hits the send button; the server receives the code, executes it, and sends the output back to the user. During the summer, Vicentiu made most of the examples compile with Emscripten and run in real-time on the user’s screen.

Our next goal is to make the graphical examples with user interactions also work in the browser, and Vicentiu plans to continue contributing to the project to achieve this goal.

An automatic generator of WSDL documents for LuaSOAP - Victor Dias

LuaSOAP is a library for working with the Simple Object Access Protocol (SOAP). WSDL is an XML format for describing network services; it is used to describe operations, messages and types offered by Web Services.

This summer Victor extended LuaSOAP's WSDL support by building a software layer for the automatic generation of WSDL documents. This new layer eases the description of most WSDL "bureaucracy" -- types, operations, ports, messages -- which have no counterparts in Lua. He also improved the test suite and the documentation. Victor's work will be integrated into the next version of LuaSOAP.

By Ana Lúcia de Moura, Organization Administrator for LabLua

Google Summer of Code 2016 wrap-up: CloudCV

Monday, February 6, 2017

This guest post is part of our ongoing series of posts from the students, mentors and organization administrators who participated in Google Summer of Code (GSoC), a program which gets university students contributing to open source software.

Google Summer of Code 2016 was a memorable one for CloudCV. Despite being a relatively “young” organization (this is just our second year as a mentor organization), there were many excellent applicants who put a tremendous amount of effort into their proposals and ramp-up tasks. It was difficult to choose!

CloudCV began in the summer of 2014 as a research project within the Machine Learning and Perception Lab at Virginia Tech, with the ambitious goal of democratizing computer vision and machine learning. We’re run exclusively by students and are working to enable developers, researchers, and fellow students to leverage artificial intelligence technology as a service and to share state of the art algorithms with the research community.

In line with this goal, we decided to build two tools that cater to computer vision researchers and hobbyists alike: CloudCV-fy your code and CloudCV-IDE. Though building two new platforms from the ground up was going to be challenging, our students’ motivation was overwhelming and their performance surpassed all expectations. We even demonstrated their work at CVPR 2016, a top-tier computer vision conference!

CloudCV-fy

A recurring use case for computer vision researchers, and many others, is to build a web-based demo and REST API to demonstrate the capabilities of their creations to the world. But web development involves writing hundreds of lines of additional code across multiple languages (HTML, CSS, JavaScript, etc), which takes time away from research.

Our first student, Ashish Chaudhary, took on this problem by building CloudCV-fy. Over many iterations of design and development, Ashish delivered a tool that allows a user to simply write lightweight wrappers around their machine learning model/library and be done. CloudCV-fy automatically builds web-based interactive demos for them -- no need to tinker with HTML, CSS or JavaScript. Code to demo. Done.

The demo can be hosted on our servers, the user’s own server or any third party cloud service. As a result of this, researchers can focus on what they do best: designing and training models. CloudCV handles the rest. You can learn more in the write-up Ashish did on his blog.

CloudCV-IDE

There has been an explosion in the number of deep learning frameworks and it is difficult for researchers to keep up with all the latest tools. CloudCV-IDE, built by student Gaurav Gupta, addresses this by allowing a user to build a deep learning network with a drag-and-drop interface, then export to the deep learning framework of their choice (Caffe, TensorFlow, etc).

Gaurav also added support to import model configuration files in order to visualize any architecture. This is one of the first attempts to do this.

By the end of the summer, Gaurav delivered a great UI to visualize models with robust support for Caffe and TensorFlow back-ends. This was a successful start that we plan to build on by supporting more frameworks and facilitating collaborative building of deep learning models.

Overall, this was a highly productive GSoC for CloudCV. Our tools are under active development and we welcome contributions and ideas for new features.

We will definitely apply for GSoC 2017. If you are a student interested in participating we encourage you to get involved early! Feel free to reach out to us on our Gitter channel or on our mailing list.

By Viraj Prabhu, Organization Administrator for CloudCV


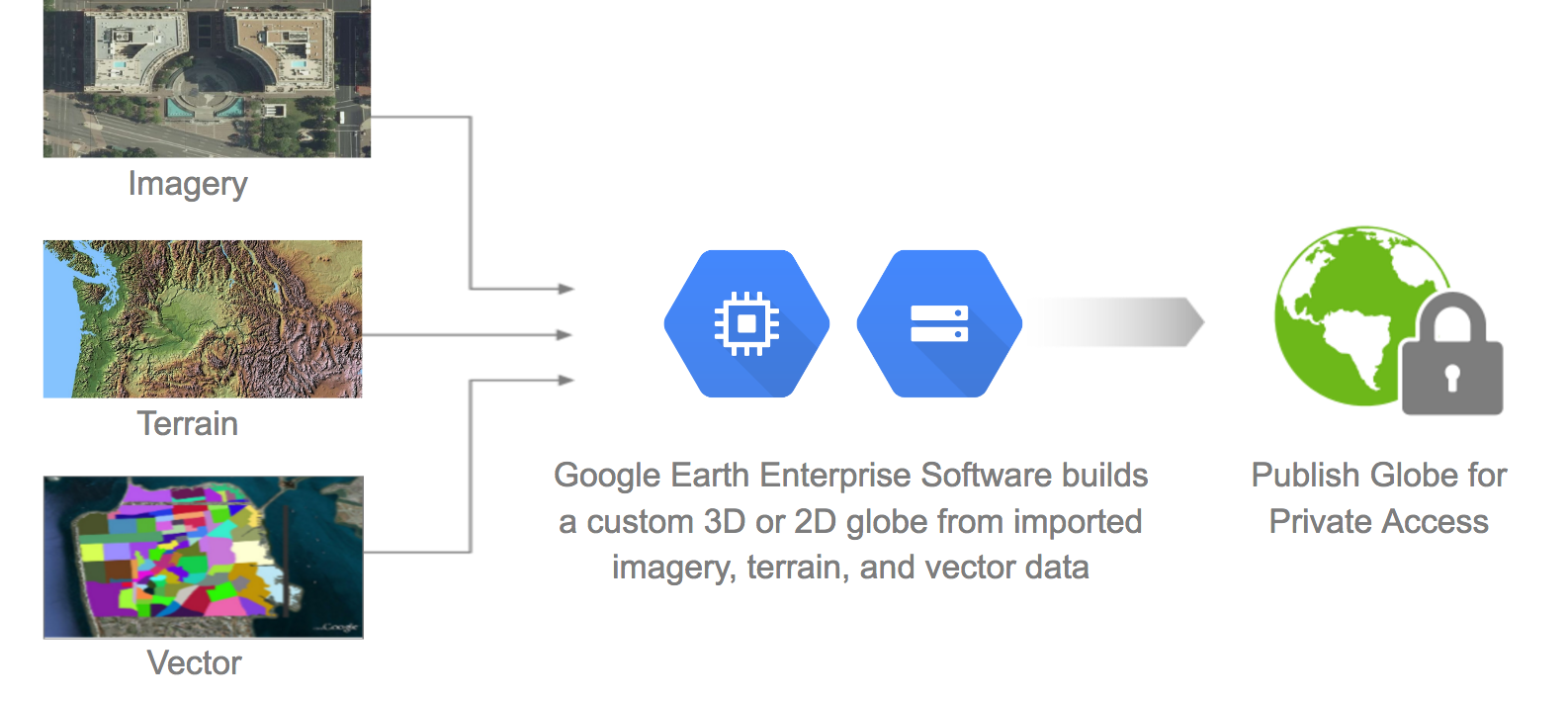

Open-sourcing Google Earth Enterprise

Wednesday, February 1, 2017

(originally posted on the Geo Developers blog)

We are excited to announce that we are open-sourcing Google Earth Enterprise (GEE), the enterprise product that allows developers to build and host their own private maps and 3D globes. With this release, GEE Fusion, GEE Server, and GEE Portable Server source code (all 470,000+ lines!) will be published on GitHub under the Apache2 license in March.

Originally launched in 2006, Google Earth Enterprise provides customers the ability to build and host private, on-premise versions of Google Earth and Google Maps. In March 2015, we announced the deprecation of the product and the end of all sales. To provide ample time for customers to transition, we have provided a two-year maintenance period ending on March 22, 2017. During this maintenance period, product updates have been regularly shipped and technical support has been available to licensed customers.

Feedback is important to us and we’ve heard from our customers that GEE remains in use in mission-critical applications. Many customers have not transitioned to other technologies. Open-sourcing GEE allows our customer community to continue to improve and evolve the project in perpetuity. Note that the implementations for Google Earth Enterprise Client, Google Maps JavaScript® API V3 and Google Earth API will not be open sourced. The Enterprise Client will continue to be made available and updated. However, since GEE Fusion and GEE Server are being open-sourced, the imagery and terrain quadtree implementations used in these products will allow third-party developers to build viewers that can consume GEE Server Databases.

We’re thankful for the help of our GEE partners in preparing the codebase to be migrated to GitHub. It’s a lot of work and we cannot do it without them. It is our hope that their passion for GEE and GEE customers will serve to lead the project into its next chapter.

Looking forward, GEE customers can use Google Cloud Platform (GCP) instead of legacy on-premises enterprise servers to run their GEE instances. For many customers, GCP provides a scalable and affordable infrastructure as a service where they can securely run GEE. Other GEE customers will be able to continue to operate the software in disconnected environments. However, we believe that the advantages of incorporating even some of the workloads on GCP will become apparent (such as processing large imagery or terrain assets on GCP that can be downloaded and brought to internal networks, or standing up user-facing Portable Globe Factories).

Moreover, GCP is increasingly used as a source for geospatial data. Google’s Earth Engine has made available over a petabyte of raster datasets which are readily accessible and available to the public on Google Cloud Storage. Additionally, Google uses Cloud Storage to provide data to customers who purchase Google Imagery today. Having access to massive amounts of geospatial data, on the same platform as your flexible compute and storage, makes generating high quality Google Earth Enterprise Databases and Portables easier and faster than ever.

We will be sharing a series of white papers and other technical resources to make it as frictionless as possible to get open source GEE up and running on Google Cloud Platform. We are excited about the possibilities that open-sourcing enables, and we trust this is good news for our community. We will be sharing more information when we launch the code in March on GitHub. For general product information, visit the Google Earth Enterprise Help Center. Review the essential and advanced training for how to use Google Earth Enterprise, or learn more about the benefits of Google Cloud Platform.

Posted by Avnish Bhatnagar, Senior Technical Solutions Engineer, Google Cloud


Googlers on the road: FOSDEM 2017

The new year is off to an excellent start as we wrap up the 7th year of Google Code-in, ramp up for the 13th year of Google Summer of Code, and return from connecting with our compatriots in the open source community down under at Linux.conf.au. Next up? We’re headed to FOSDEM, Europe’s famed non-commercial and volunteer-organized open source conference.

FOSDEM is hosted in Brussels on the Université libre de Bruxelles campus and runs the weekend of February 4-5. It’s a unique event in the spirit of free and open source software and is free to the public. This year they are expecting 8,000+ attendees.

We’re looking forward to talking face-to-face with some of the thousands of former students, mentors and organization administrators who have participated in our student programs. A few of them will even be giving talks about their recent Google Summer of Code experience.

If you’d like to say hello or chat about our programs, you’ll be sure to find a Googler or two at our table. You’ll also find a number of Googlers in the program schedule:

Saturday, February 4th

2:00pm Bazel: How to build at Google scale by Klaus Aehlig

3:25pm Copyleft in Commerce: How GPLv3 keeps Samba relevant in the marketplace by Jeremy Allison

Sunday, February 5th

10:40am gRPC 101: Building fast and efficient microservices by Ray Tsang

10:50am Is the GPL a copyright license or a contract under U.S. law? by Max Sills

12:45pm The state of Go: What to expect in Go 1.8 by Francesc Campoy

1:00pm Analyze terabytes of OS code with one query by Felipe Hoffa (more info)

2:50pm Like the ants: Turn individuals into a large contributing community by Dan Franc

See you there!

By Josh Simmons, Open Source Programs Office

| FOSDEM logo licensed under CC BY. |
