-

Notifications

You must be signed in to change notification settings - Fork 951

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

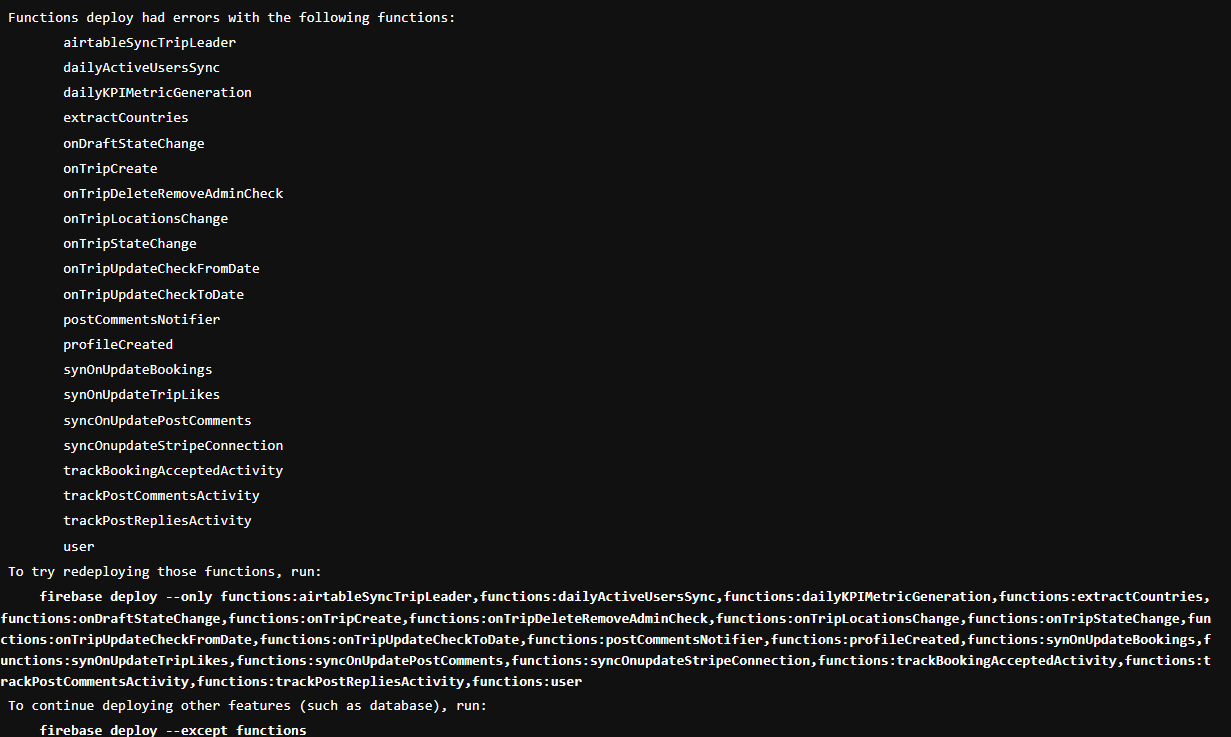

Request: Automatically retry failed function deploys that fail due to "You have exceeded your deployment quota" #2606

Comments

@joehan is there something we could do in deploy to rate limit or retry in these cases?

I have 122 functions that I deploy, and after updating firebase-tools to v8.10.0, it no longer tells me which functions failed to deploy because of rate limiting. Having something that retries the failed functions would be fantastic.

Agree, nice idea.

It would definitely be awesome to have this. We have the same problem (74 functions) and have to redeploy them manually. It's a big pain and stops us from doing automatic deployments of Firebase functions.

I have the same problem with approximately 90 functions. Not being able to rely on CI deploys is very limiting.

@joehan friendly ping, did you get a chance to take a look yet? I'm happy to take a stab at this in a PR if it has a chance of being accepted.

Hey @kamshak, thanks for the reminder, this one slipped past me! With the recent switch to using Cloud Build, I don't see any reason we shouldn't add retries here. I would be more than happy to review a PR for this.

Not sure if this is a bug or not, but has anyone else noticed that the CLI sometimes tells you which functions failed to deploy due to rate limiting, and other times it doesn't (even though some failed due to rate limiting)?

@KyleFoleyImajion how many functions do you have in your project currently? I've started looking into this, and there are 2 cases when you can run into rate limits:

The solution is therefore a bit more complicated than I originally thought. The code right now is also written in a way that makes it a bit difficult to add retries. I've run into two problems:

@joehan I'm not sure to what extent you are OK with larger changes to the codebase here. Currently I think the best solution would be to refactor it a little. My idea right now would be:

Currently at 119 functions, and I deploy using the … Thanks for taking a look at this issue!

@kamshak I'm open to larger changes in this part of the codebase; it's one of our older and less healthy flows at this point. Fair warning: I am planning to make some large refactors to this path later this year that might make these suggested changes obsolete, so I totally understand if that changes your appetite for refactoring this. To your specific suggestions:

Agreed that these, along with the heavy reliance on long promise chains, are the main problems with this code.

I'm not sure you'll be able to see when the Cloud Build itself starts from the CRUDFunction and GetOperation calls. In the interest of being as clear as possible, I think the logging here should be more along the lines of "Function deploy started" as opposed to "cloud build started". We also still support Node 8 deploys, which don't use Cloud Build in the same way. The rest of the design sounds good to me at a high level, particularly reusing the Queue/Throttler code.
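To illustrate the throttling idea discussed above: a throttled deploy queue caps how many function deploys are in flight at once and collects failures instead of aborting on the first one. This is a minimal Python analogue under stated assumptions, not the firebase-tools Queue/Throttler API; `deploy_all` and the `deploy_one` callable are hypothetical stand-ins for whatever actually creates or updates a single function.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def deploy_all(function_names, deploy_one, max_concurrency=10):
    """Run deploy_one(name) for every function with at most max_concurrency in flight.

    deploy_one is a hypothetical callable that raises on failure.
    Returns {name: exception} for the functions that failed (empty dict if all succeeded).
    """
    failures = {}
    # The executor's worker count is the throttle: extra submissions wait in its queue.
    with ThreadPoolExecutor(max_workers=max_concurrency) as pool:
        futures = {pool.submit(deploy_one, name): name for name in function_names}
        for future in as_completed(futures):
            error = future.exception()
            if error is not None:
                failures[futures[future]] = error
    return failures
```

Returning the failures rather than raising on the first one lets the caller report exactly which functions failed and retry only those.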

We have the same problem with only ~30 functions. In our case we deploy several times per day, or even several times per hour when we are really moving. We also use Cloud Build for other things (Cloud Run, Google App Engine), which perhaps makes the problem worse.

We have been facing the same issue lately. With ~60 functions that we deploy automatically from a CI/CD pipeline, the deployment fails randomly for 1-3 functions. However, checking the limits in GCP clearly shows that the rate limit was never hit for any of the deployments, so I'm not sure what's going on here. We have 2 identical projects, one for staging and one for production, and so far this issue only seems to happen on the staging project; everything seems to work fine on the production setup. It's a bit cryptic to debug and resolve this issue without knowing where the underlying problem could be. We have had issues with Cloud Functions in the past, and most of the time it turned out to be an issue on the GCP side itself, which was confirmed by the Firebase Support Team. Not sure whether we should already reach out to the Firebase Support Team or what 🤷

Has anyone explored the idea of querying the Functions API for a checksum of each function? Using that hash, you could determine whether the local version has changed and only upload modified functions. If a hash isn't available, perhaps the Functions API has something similar? I know this wouldn't fix the main issue raised in this ticket, but for me a process that only publishes updated code would solve 90% of the quota issues I experience.
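A minimal sketch of the hash-based skipping idea, assuming the previous hashes are stored somewhere the deploy script can read. It does not query the Functions API, and whether that API exposes a usable checksum is not established here. `source_hash`, `changed_functions`, and the per-function file mapping are hypothetical; listing shared modules under each function is what makes a change to a utility library trigger a redeploy of every function that uses it.

```python
import hashlib
from pathlib import Path


def source_hash(paths):
    """SHA-256 over the paths and contents of one function's source files."""
    digest = hashlib.sha256()
    for path in sorted(Path(p) for p in paths):  # sort so argument order doesn't matter
        digest.update(str(path).encode("utf-8"))
        # Hash each file's bytes separately so every file contributes a fixed-size chunk.
        digest.update(hashlib.sha256(path.read_bytes()).digest())
    return digest.hexdigest()


def changed_functions(sources_by_function, previous_hashes):
    """Return (sorted names whose hash differs from previous_hashes, new {name: hash} map).

    sources_by_function is a hypothetical {function_name: [file paths]} mapping,
    including any shared modules the function imports.
    """
    current = {name: source_hash(paths) for name, paths in sources_by_function.items()}
    changed = sorted(name for name, h in current.items() if previous_hashes.get(name) != h)
    return changed, current
```

In practice the stored map would be updated only after a successful deploy, so a function that failed keeps its old hash and is picked up again on the next run.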

Despite using the new update, I'm still getting this issue on 40%-60% of deployments... :(

This is still happening to me @joehan

This just started happening to me again as well; it was working perfectly for a while.

I am having similar issues, and it doesn't seem to be retrying anymore.

This also has some weird side effects. Normally, when I deploy an onCall function for the first time, it has allow

I'm having the same problem. This was fixed, but now it's happening again.

I am having the same issue as @antonstefer. When deploying about 70 GCFs, some of them fail with functions: got "Quota Exceeded" error while trying to update and the permission principal

Still looks like an issue... Does the Google/Firebase team have any recommendations here? Never deploy all functions at the same time? Deploying 55 functions as part of our CI/CD, some functions randomly fail to deploy with

Running into the same issue with about 130 GCFs. For function groups, the official recommendation says not to deploy more than 10 at a time. Deploying in batches fixes CI deploys, but the pipeline takes forever.

This is what I do. I love shipping my software 10% at a time

We're running into this issue consistently, deploying 72 functions using

We also have this issue deploying ~90 functions, currently on 4.1.0 of firebase-functions. Would love a solution or recommendation.

This issue has been going on since 2020.

Here is a simple Python script to deploy all of your functions in batches of 10:

```python
import datetime
import os
import re
import subprocess

# Read the functions/index.js file
with open('./functions/index.js', 'r') as f:
    index_file = f.read()

# Scrape function names (only matches exports of the form `exports.name = functions.`)
function_names = re.findall(r'exports\.([^\s]+)\s*=\s*functions\.', index_file)

# Deploy Firebase functions in batches of 10
def deploy_functions():
    os.makedirs('deploy_logs', exist_ok=True)  # open() below fails if the folder is missing
    batches = [function_names[i:i + 10] for i in range(0, len(function_names), 10)]

    for n, batch in enumerate(batches, start=1):
        functions_str = ','.join(f'functions:{fn}' for fn in batch)
        command = f'firebase deploy --only "{functions_str}"'

        print(f'Deploying functions: {", ".join(batch)}...')
        print(command)

        # One log file per batch: the timestamp only has minute resolution,
        # so without the batch number consecutive batches could overwrite each other
        timestamp = datetime.datetime.now().strftime('%Y-%m-%d-%H-%M')
        log_file = f'deploy_logs/{timestamp}-batch{n}.log'

        try:
            # Execute the command and write its output to the log file
            with open(log_file, 'w') as f:
                subprocess.run(command, shell=True, check=True, stdout=f, stderr=subprocess.STDOUT)
            print(f'Functions: {", ".join(batch)} deployed successfully. Log file: {log_file}')
        except subprocess.CalledProcessError as error:
            print(f'Error deploying functions: {batch}. Log file: {log_file}')
            print(error)

deploy_functions()
```

It took 25 minutes to deploy 125 functions.

Having the same problem with 300 functions

Initially the 'retry' update fixed this for us, but the errors have recently resurfaced despite the retries. Deploying around 100 functions

We are also getting failures with the newest Firebase CLI 13.11.4

An ongoing Firebase deployment issue where 9 out of 10 times you need to redeploy several times: got "Quota Exceeded" error while trying to update. This really needs to be fixed.

I hope the "Quota Exceeded" errors will be retried properly in the future, but in the meantime I worked around it with retries. It works because the deployment skips functions that have no code changes:

```shell
for try in {1..3}; do
  echo "Deploying functions (try $try)"
  if firebase deploy --force --only functions; then
    break
  fi
done
```

Problem & Background

We have a deployment of ~70 functions and deploy them all at once as part of CI. Whenever we deploy, a random set of functions fails to deploy due to "You have exceeded your deployment quota". A few weeks ago this blocked us completely, when there was still a quota on build time. Since builds have moved to Cloud Build, as far as I can tell there are no more limits and you simply pay for your build time. Which is awesome, no more blocked builds due to quotas!

Unfortunately, there still seem to be some other quotas/rate limits that cause the deploy to fail. From what I can tell, these might simply be too many write requests against the Cloud Functions API (or perhaps Cloud Build?).

The error message suggests deploying with --only. Unfortunately, it is not easy for us to split our functions into separately deployed groups. It is also impossible when a dependency is updated or we change a data model or utility library that is used by several functions. Working out which functions changed and need to be redeployed is not possible for us automatically and has to be done manually. This brings new pain: automated deploys after code review become impossible.

Right now this means our pipelines fail regularly, and we manually retry them until every function has deployed successfully once.

Suggestion

Since retrying the failed functions right after always works, my suggestion would be to retry failed deploys that hit code 8 here:

firebase-tools/src/deploy/functions/release.js

Lines 97 to 105 in 3994bf7

I suppose this could be implemented here:

firebase-tools/src/deploy/functions/release.js

Line 526 in 3994bf7

If this would be a way forward I'm happy to create a PR for this.
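A minimal sketch of the suggested retry, written in Python for illustration rather than against release.js. It assumes a hypothetical `deploy_one` callable that raises a hypothetical `DeployError` carrying the operation's status code; code 8 is gRPC's RESOURCE_EXHAUSTED status, i.e. the quota/rate-limit case. Only that code is retried, with exponential backoff and full jitter so that dozens of failed functions do not all retry at the same instant.

```python
import random
import time

RESOURCE_EXHAUSTED = 8  # gRPC status code used for quota / rate-limit errors


class DeployError(Exception):
    """Hypothetical error type carrying the failed operation's status code."""

    def __init__(self, code, message=""):
        super().__init__(message)
        self.code = code


def deploy_with_retry(name, deploy_one, max_attempts=5, base_delay=1.0,
                      max_delay=60.0, sleep=time.sleep):
    """Call deploy_one(name), retrying only code-8 errors with capped exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return deploy_one(name)
        except DeployError as err:
            # Any other error code, or running out of attempts, is surfaced to the caller.
            if err.code != RESOURCE_EXHAUSTED or attempt == max_attempts:
                raise
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # full jitter: wait anywhere in [0, delay]
```

The `sleep` parameter exists so tests (or a caller with its own scheduler) can replace the real wait.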
