AI and data science workloads need infrastructure that is scalable, efficient, and flexible. Traditional infrastructure often struggles to meet these demands, which creates bottlenecks in development and deployment. For organizations that deploy AI models and data-intensive applications at scale, cloud-native technologies offer a way past those bottlenecks.

To help organizations with their AI application development process, NVIDIA has developed and validated NVIDIA Cloud Native Stack (CNS), an open-source reference architecture used by NVIDIA to test and certify all supported AI software.

CNS enables you to run and test containerized GPU-accelerated applications orchestrated by Kubernetes, with easy access to features such as Multi-Instance GPU (MIG) and GPUDirect RDMA on platforms that support these features. CNS is for development and testing purposes, but applications developed on CNS can subsequently be run in production on enterprise Kubernetes-based platforms.

This post explores the following key areas:

- The components and benefits of CNS

- How KServe on CNS enhances AI model evaluation and deployment

- Implementing NVIDIA NIM with these solutions in your AI infrastructure

CNS overview

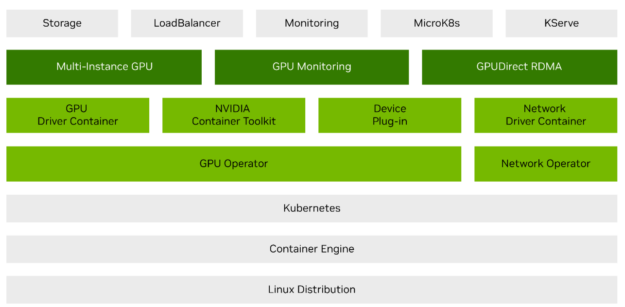

CNS provides a reference architecture that includes various versioned software components tested together to ensure optimal operation, including the following:

- Kubernetes

- Helm

- Containerd

- NVIDIA GPU Operator

- NVIDIA Network Operator

NVIDIA GPU Operator simplifies running AI workloads on cloud-native technologies and provides easy access to the latest NVIDIA features:

- Multi-Instance GPU (MIG)

- GPUDirect RDMA

- GPUDirect Storage

- GPU monitoring capabilities
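To make the GPU Operator's role concrete, here is a minimal sketch of how a workload asks Kubernetes for a GPU once the operator has advertised GPUs as an extended resource. The `nvidia.com/gpu` resource name is the one exposed by the NVIDIA device plugin; the pod name and container image below are hypothetical placeholders, and the MIG resource name shown in the usage note depends on the GPU model and the MIG strategy configured on your cluster.

```python
import json


def gpu_pod_manifest(name, image, gpu_resource="nvidia.com/gpu", count=1):
    """Build a Pod manifest (as a dict) that requests GPUs via an extended resource."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # nvidia-smi lists the GPUs visible inside the container.
                    "command": ["nvidia-smi"],
                    # Extended resources such as GPUs are requested under limits.
                    "resources": {"limits": {gpu_resource: count}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image; replace with a CUDA-enabled image you can pull.
    manifest = gpu_pod_manifest("gpu-smoke-test", "registry.example.com/cuda-base:latest")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the output can be piped to `kubectl apply -f -`. On a cluster where MIG is enabled with a strategy that exposes per-profile resources, passing something like `gpu_resource="nvidia.com/mig-1g.5gb"` would request a MIG slice instead of a full GPU; treat that name as an example rather than a value guaranteed to exist on your hardware.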

CNS also includes optional add-on tools:

- MicroK8s

- Storage

- LoadBalancer

- Monitoring

- KServe

CNS abstracts away much of the complexity involved in setting up and maintaining these environments, enabling you to focus on prototyping and testing AI applications, rather than assembling and managing the underlying software infrastructure.

Applications developed on CNS are assured to be compatible with deployments based on NVIDIA AI Enterprise, providing a smooth transition from development to production. In addition, Kubernetes platforms that adhere to the versions of components as defined in this stack are assured to run NVIDIA AI software in a supported manner.

CNS can be deployed on bare metal, cloud, or VM-based environments. It is available as Install Guides for manual installation and as Ansible Playbooks for automated installation. For more information, see Getting Started.

Add-on tools are disabled by default. For more information about enabling add-on tools, see NVIDIA Cloud Native Stack Installation.

Interested in a pre-provisioned CNS environment? NVIDIA LaunchPad provides pre-provisioned environments to get you started quickly.

Enhancing AI model evaluation

KServe is a powerful tool that enables organizations to serve machine learning models efficiently in a cloud-native environment. By using the scalability, resilience, and flexibility of Kubernetes, KServe simplifies the prototyping and development of sophisticated AI models and applications.

CNS with KServe enables the deployment of Kubernetes clusters that can handle the complex workflows associated with AI model training and inference.
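As an illustration of what serving a model with KServe looks like, the following sketch builds a generic `InferenceService` manifest using the `serving.kserve.io/v1beta1` API. It is not the NIM-specific setup; the service name, model format, and storage URI are hypothetical values chosen for the example, and the model format must match a serving runtime that is actually installed on your cluster.

```python
import json


def inference_service(name, model_format, storage_uri, gpus=1):
    """Build a KServe InferenceService manifest (as a dict) for a single predictor."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                "model": {
                    # KServe selects a serving runtime that supports this format.
                    "modelFormat": {"name": model_format},
                    # Location from which the model artifacts are pulled.
                    "storageUri": storage_uri,
                    # GPU request, served by the device plugin the GPU Operator deploys.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical name, format, and bucket; adjust to your own model.
    manifest = inference_service(
        "demo-model", "onnx", "s3://example-bucket/models/demo"
    )
    print(json.dumps(manifest, indent=2))
```

Applying the printed manifest with `kubectl apply -f -` asks KServe to create the service and its underlying deployment.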

Deploying NVIDIA NIM with KServe

Deploying NVIDIA NIM on CNS with KServe not only simplifies the development process but also ensures that your AI workflows are scalable, resilient, and easy to manage. By using Kubernetes and KServe, you can seamlessly integrate NVIDIA NIM with other microservices, creating a robust and efficient AI application development platform. For more information, see KServe Providers Dish Up NIMble Inference in Clouds and Data Centers.

Follow the instructions to install CNS with KServe. After KServe is deployed on the cluster, we recommend enabling the storage and monitoring options so that you can monitor the performance of the deployed model and scale the services as needed.

Then, follow the steps for Deploying NIM on KServe. For more information about different ways to deploy NIM, see NIM-Deploy, which contains examples of deploying NIM with both KServe and Helm charts.
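Once a NIM for large language models is running behind KServe, a client can call it over HTTP. The sketch below assumes the NIM exposes an OpenAI-compatible `/v1/chat/completions` route, as NIM LLM microservices do; the base URL and model name are hypothetical and depend on how your InferenceService and ingress are configured. Only the Python standard library is used.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, max_tokens=64):
    """Build (but do not send) a chat completion request for an OpenAI-compatible endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical endpoint and model name; substitute the values from your cluster.
    req = build_chat_request(
        "http://nim.example.local", "example-llm", "What is Kubernetes?"
    )
    print(req.full_url)
    print(req.data.decode("utf-8"))
    # Uncomment once the endpoint is reachable:
    # print(send(req))
```

Separating request construction from sending makes it easy to inspect the payload before pointing it at a live service.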

Conclusion

CNS is a reference architecture intended for development and testing. Because the components of the stack have been fully tested to work together, CNS simplifies the deployment and management of generative AI and data science workloads.

CNS, combined with KServe, provides a robust solution for simplifying AI model and application development. With this validated reference architecture, you can overcome the complexities of infrastructure management and focus on driving innovation in your AI initiatives. Its scalability, ease of use, and flexibility to run on bare metal, cloud, or VM-based environments make CNS a good fit for organizations of all sizes.

Whether you’re deploying NIM microservices, using KServe for model serving, or integrating advanced GPU features, CNS provides the tools and capabilities needed to accelerate AI innovation, along with a path to bring solutions to production with greater efficiency and ease.