Generative AI technologies are revolutionizing how games are conceived, produced, and played. Game developers are exploring how these technologies impact 2D and 3D content-creation pipelines during production. Part of the excitement comes from the ability to create gaming experiences at runtime that would have been impossible using earlier solutions.

The creation of non-playable characters (NPCs) has evolved as games have become more sophisticated. The number of pre-recorded lines has grown, the number of options a player has to interact with NPCs has increased, and facial animations have become more realistic.

Yet player interactions with NPCs still tend to be transactional, scripted, and short-lived: dialogue options are exhausted quickly and serve only to push the story forward. Now, generative AI can make NPCs more intelligent by improving their conversational skills, creating persistent personalities that evolve over time, and enabling dynamic responses that are unique to the player.

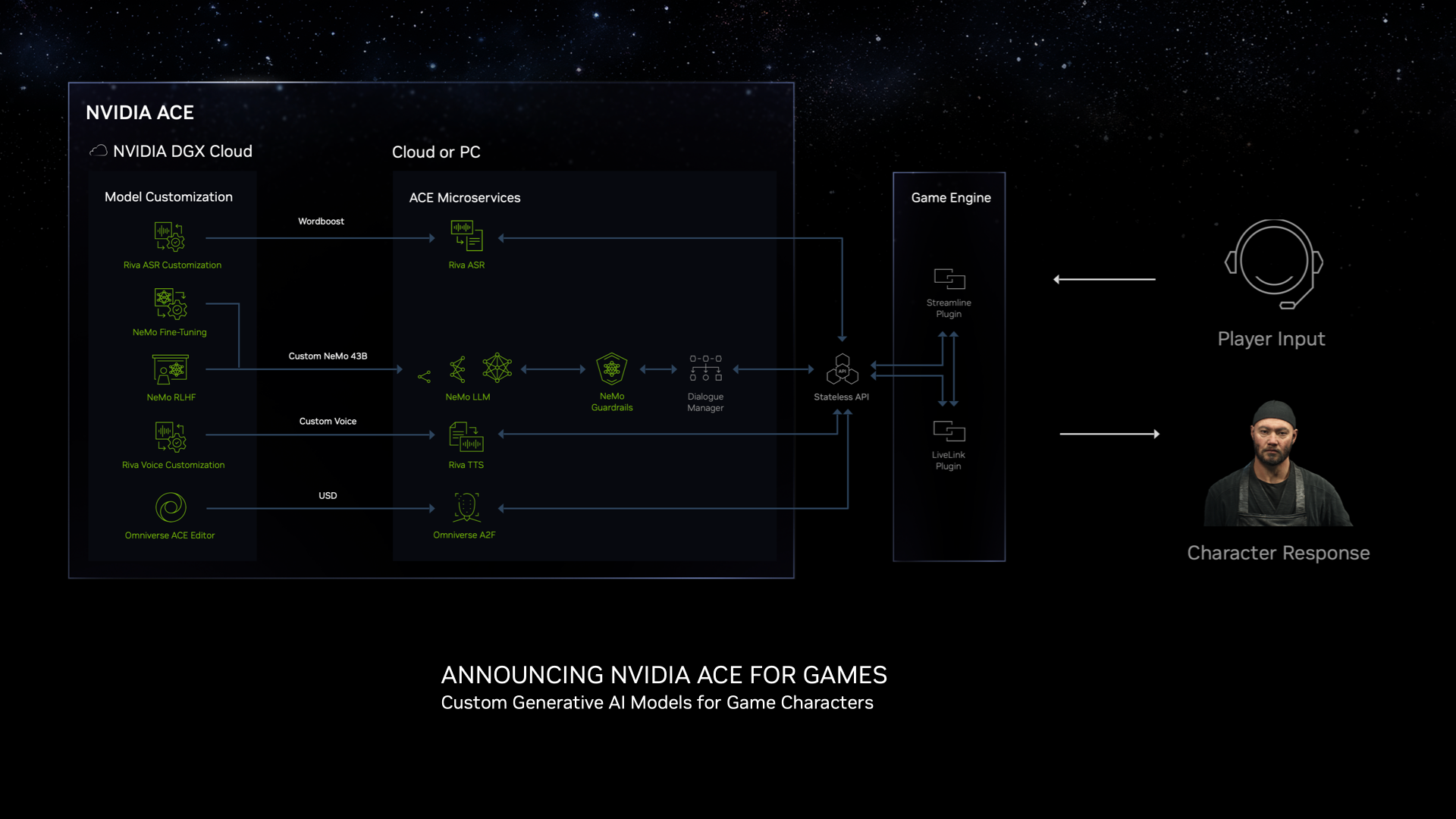

At COMPUTEX 2023, NVIDIA announced the future of NPCs with the NVIDIA Avatar Cloud Engine (ACE) for Games. NVIDIA ACE for Games is a custom AI model foundry service that aims to transform games by bringing intelligence to NPCs through AI-powered natural language interactions.

Developers of middleware, tools, and games can use NVIDIA ACE for Games to build and deploy customized speech, conversation, and animation AI models in software and games.

Generate NPCs with the latest breakthroughs in AI foundation models

The optimized AI foundation models, which can be integrated end-to-end or in any combination depending on need, include the following:

- NVIDIA NeMo: Provides foundation language models and model customization tools so you can further tune the models for game characters. These customizable large language models (LLMs) enable character-specific backstories and personalities that fit the game world.

- NVIDIA Riva: Provides automatic speech recognition (ASR) and text-to-speech (TTS) capabilities to enable live speech conversation with NVIDIA NeMo.

- NVIDIA Omniverse Audio2Face: Instantly creates expressive facial animation for game characters from just an audio source. Audio2Face features Omniverse connectors for Unreal Engine 5, so you can add facial animation directly to MetaHuman characters.
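To make the flow between these modules concrete, here is a minimal sketch of one conversational turn: player speech goes through ASR, the transcript drives an LLM reply, the reply is spoken by TTS, and the audio drives facial animation. Everything in it is hypothetical. `run_turn`, `NPCTurn`, and the `fake_*` stand-ins are illustrative names, not the Riva, NeMo, or Audio2Face APIs, and each stage is modeled as a plain callable so that real services could be adapted to it.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class NPCTurn:
    """Everything produced for one conversational turn with an NPC."""
    transcript: str          # ASR output: what the player said
    reply_text: str          # LLM output: the NPC's in-character reply
    reply_audio: bytes       # TTS output: synthesized speech
    face_frames: List[str]   # animation output: placeholder per-frame poses

# Hypothetical stage signatures; real ASR/LLM/TTS/animation services would be adapted to these.
ASR = Callable[[bytes], str]
LLM = Callable[[str], str]
TTS = Callable[[str], bytes]
FaceAnimator = Callable[[bytes], List[str]]

def run_turn(player_audio: bytes, asr: ASR, llm: LLM, tts: TTS, animate: FaceAnimator) -> NPCTurn:
    """Chain the stages: speech -> text -> reply -> speech -> facial animation."""
    transcript = asr(player_audio)
    reply_text = llm(transcript)
    reply_audio = tts(reply_text)
    face_frames = animate(reply_audio)
    return NPCTurn(transcript, reply_text, reply_audio, face_frames)

# --- Toy stand-ins so the sketch runs without any external service ---
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" bytes are already text

def fake_llm(prompt: str) -> str:
    return f"Welcome! You said: {prompt}"

def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")

def fake_animate(audio: bytes) -> List[str]:
    # One placeholder frame per 10 bytes of "audio".
    return ["frame"] * (len(audio) // 10 + 1)

if __name__ == "__main__":
    turn = run_turn(b"What's good here?", fake_asr, fake_llm, fake_tts, fake_animate)
    print(turn.reply_text)
    print(len(turn.face_frames), "animation frames")
```

Injecting each stage as a function mirrors the idea that the modules can be used end-to-end or in any combination: swapping one stand-in for a real service leaves the rest of the chain unchanged.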

You can bring NPCs to life through NeMo model alignment techniques. First, use behavior cloning to enable the base language model to perform role-playing tasks according to instructions. To further align the NPC’s behavior with expectations, you will be able to apply reinforcement learning from human feedback (RLHF) in the future, incorporating real-time feedback from designers during the development process.
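Behavior cloning for an LLM generally amounts to supervised fine-tuning on demonstrations of the desired behavior. The sketch below assumes designers write example dialogues for a character and turns each NPC line into a (prompt, response) training pair, with the persona and prior dialogue as context. The `Persona` type, the prompt layout, and the `prompt`/`response` field names are assumptions for illustration, not the NeMo data format.

```python
import json
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Persona:
    name: str
    backstory: str

def build_prompt(persona: Persona, history: List[Dict[str, str]], player_line: str) -> str:
    """Assemble a role-play prompt: persona instructions, prior turns, then the player's line."""
    lines = [f"You are {persona.name}. {persona.backstory}", "Stay in character."]
    for turn in history:
        lines.append(f"{turn['speaker']}: {turn['text']}")
    lines.append(f"Player: {player_line}")
    lines.append(f"{persona.name}:")  # the model is trained to continue from here
    return "\n".join(lines)

def demonstrations_to_examples(persona: Persona, dialogue: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Turn one designer-written dialogue into supervised (prompt, response) pairs.

    Every NPC line that directly answers a player line becomes a target;
    all turns before that player line become context.
    """
    examples = []
    history: List[Dict[str, str]] = []
    for i, turn in enumerate(dialogue):
        answers_player = i > 0 and dialogue[i - 1]["speaker"] == "Player"
        if turn["speaker"] == persona.name and answers_player:
            # history[:-1] drops the player line, which build_prompt appends itself.
            prompt = build_prompt(persona, history[:-1], dialogue[i - 1]["text"])
            examples.append({"prompt": prompt, "response": turn["text"]})
        history.append(turn)
    return examples

def to_jsonl(examples: List[Dict[str, str]]) -> str:
    """One JSON object per line, a common layout for fine-tuning datasets."""
    return "\n".join(json.dumps(e) for e in examples)
```

For a dialogue with two player questions each answered by the NPC, this yields two training examples, the second of which includes the first exchange as context.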

After the NPC is fully aligned, the final step is to apply NeMo Guardrails, which adds programmable rules to NPCs. This toolkit helps you build accurate, appropriate, on-topic, and secure game characters. NeMo Guardrails natively supports LangChain, a toolkit for developing LLM-powered applications.
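The idea of a programmable rule can be illustrated with a toy output check that runs on every NPC reply before the player sees it. This is not the NeMo Guardrails API (NeMo Guardrails defines rails in its own configuration language, Colang); `Rail`, `keyword_rail`, `max_length_rail`, `apply_rails`, and the fallback line are hypothetical names chosen for this sketch.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Rail:
    name: str
    violates: Callable[[str], bool]  # True if the reply breaks this rule

def keyword_rail(name: str, banned: List[str]) -> Rail:
    """Block replies that mention any banned term (whole words, case-insensitive)."""
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, banned)) + r")\b", re.IGNORECASE)
    return Rail(name, lambda text: bool(pattern.search(text)))

def max_length_rail(limit: int) -> Rail:
    """Block replies longer than `limit` characters to keep NPC lines short."""
    return Rail(f"max_length_{limit}", lambda text: len(text) > limit)

FALLBACK = "Sorry, I can't talk about that. Can I get you some ramen?"

def apply_rails(reply: str, rails: List[Rail], fallback: str = FALLBACK) -> Tuple[str, List[str]]:
    """Return the reply to show the player and the names of any rails that fired."""
    fired = [rail.name for rail in rails if rail.violates(reply)]
    return (fallback if fired else reply), fired
```

Returning the names of the rails that fired, rather than only a pass/fail flag, lets designers log which rule blocked a line while tuning the character.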

NVIDIA offers flexible deployment methods for middleware, tools, and game developers of all sizes. The neural networks enabling NVIDIA ACE for Games are optimized for different capabilities, with various size, performance, and quality trade-offs.

The ACE for Games foundry service will help you fine-tune models for your games and then deploy them through NVIDIA DGX Cloud, on GeForce RTX PCs, or on premises for real-time inference. You can also validate model quality in real time and test performance and latency to ensure that the models meet specific standards before deployment.
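A latency check of the kind described above can be as simple as timing a model call repeatedly and comparing a high percentile against a per-turn budget. The sketch below is generic Python, not part of the ACE for Games tooling; `measure_latency_ms`, `percentile`, `meets_budget`, and any budget value you pass in are illustrative assumptions.

```python
import math
import time
from typing import Callable, List

def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile, with p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]

def measure_latency_ms(fn: Callable[[], object], runs: int = 50, warmup: int = 3) -> List[float]:
    """Call fn repeatedly and return wall-clock latencies in milliseconds."""
    for _ in range(warmup):
        fn()  # warm-up calls are not recorded (caches, lazy initialization)
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples

def meets_budget(samples: List[float], budget_ms: float, p: float = 95.0) -> bool:
    """True if the p-th percentile latency is within the budget."""
    return percentile(samples, p) <= budget_ms
```

Checking a tail percentile rather than the mean reflects what players notice in conversation: an occasional long pause is more disruptive than a slightly higher average.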

Create end-to-end avatar solutions for games

To showcase how you can leverage ACE for Games to build NPCs, NVIDIA partnered with Convai, a startup building a platform for creating and deploying AI characters in games and virtual worlds, to help optimize and integrate ACE modules into its offering.

“With NVIDIA ACE for Games, Convai’s tools can achieve the latency and quality needed to make AI non-playable characters available to nearly every developer in a cost-efficient way,” said Purnendu Mukherjee, founder and CEO at Convai.

Convai used NVIDIA Riva for speech-to-text and text-to-speech capabilities, NVIDIA NeMo for the LLM that drives the conversation, and Audio2Face for AI-powered facial animation from voice inputs.

As shown in Video 1, these modules were integrated seamlessly into the Convai services platform and fed into Unreal Engine 5 and MetaHuman to bring the immersive NPC Jin to life. The ramen shop scene, created by the NVIDIA Lightspeed Studios art team, runs in the NVIDIA RTX Branch of Unreal Engine 5 (NvRTX 5.1). The scene is rendered using RTX Direct Illumination (RTXDI) for ray-traced lighting and shadows alongside NVIDIA DLSS 3 for maximum performance.

Game developers are already using NVIDIA generative AI technologies in game development:

- GSC Game World, one of Europe’s leading game developers, is adopting Audio2Face in its upcoming game, S.T.A.L.K.E.R. 2: Heart of Chornobyl.

- Fallen Leaf, an indie game developer, is also using Audio2Face for character facial animation in Fort Solis, a third-person sci-fi thriller game that takes place on Mars.

- Generative AI-focused companies such as Charisma.ai are leveraging Audio2Face to power the animation in their conversation engine.

Subscribe to learn more about NVIDIA ACE for Games, future developments, and early access programs. For more information about Convai’s features, use cases, and integrations, see Convai. For more information about integrating NVIDIA RTX and AI technologies into games, see NVIDIA Game Development Resources.