In AI-driven applications, re-ranking has become a key technique for improving the precision and relevance of enterprise search results. A re-ranking model refines the initial search output to better match user intent and context, which improves the effectiveness of semantic search. More accurate, contextually relevant results raise user satisfaction and can also lift conversion rates and engagement metrics.

Re-ranking also plays a crucial role in optimizing retrieval-augmented generation (RAG) pipelines, where it ensures that large language models (LLMs) work with the most pertinent, high-quality information. Because it improves both semantic search and RAG pipelines, re-ranking is a valuable tool for enterprises aiming to deliver better search experiences.

In this post, I use the NVIDIA NeMo Retriever reranking NIM. It’s a transformer encoder: a LoRA fine-tuned version of Mistral-7B that uses only the first 16 layers for higher throughput. The last embedding output by the decoder model is used as a pooling strategy, and a binary classification head is fine-tuned for the ranking task.

What is re-ranking?

Re-ranking is a sophisticated technique used to enhance the relevance of search results by using the advanced language understanding capabilities of LLMs.

Initially, a set of candidate documents or passages is retrieved using traditional information retrieval methods like BM25 or vector similarity search. These candidates are then fed into an LLM that analyzes the semantic relevance between the query and each document. The LLM assigns relevance scores, enabling the re-ordering of documents to prioritize the most pertinent ones.

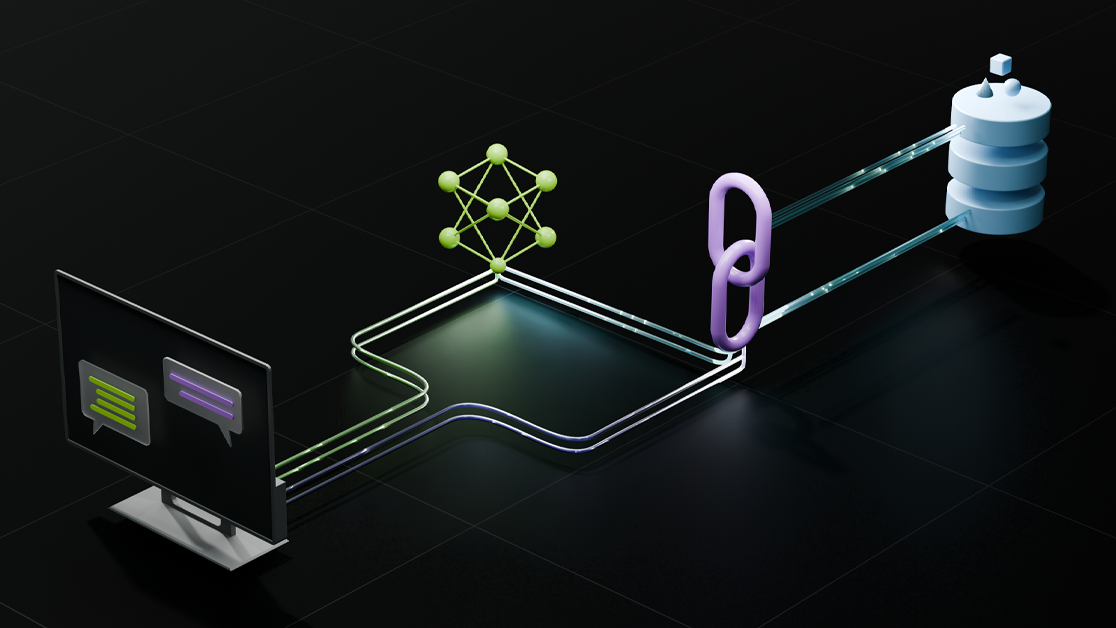

This process improves the quality of search results by going beyond keyword matching to account for the context and meaning of the query and documents. Re-ranking is typically used as a second stage after an initial fast retrieval step, ensuring that only the most relevant documents are presented to the user. It can also combine results from multiple data sources and be integrated into a RAG pipeline, so that the context passed to the LLM is tuned for the specific query.
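The two-stage idea can be sketched in a few lines of plain Python. This is only a toy illustration: the corpus is made up, `first_stage` stands in for BM25 or vector search, and `jaccard` is a hypothetical stand-in for the relevance score that a real reranking model would produce.

```python
from typing import Callable, List, Tuple


def first_stage(query: str, corpus: List[str], k: int) -> List[str]:
    """Fast, coarse retrieval: count how many passage tokens appear in the query."""
    query_terms = set(query.lower().split())

    def hits(passage: str) -> int:
        return sum(1 for token in passage.lower().split() if token in query_terms)

    return sorted(corpus, key=hits, reverse=True)[:k]


def jaccard(query: str, passage: str) -> float:
    """Hypothetical stand-in for a reranking model's relevance score."""
    q, p = set(query.lower().split()), set(passage.lower().split())
    return len(q & p) / len(q | p) if q | p else 0.0


def rerank(
    query: str,
    candidates: List[str],
    score_fn: Callable[[str, str], float],
    top_n: int,
) -> List[Tuple[float, str]]:
    """Slower, more careful second stage: score every candidate and keep the best."""
    scored = [(score_fn(query, passage), passage) for passage in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_n]


# Made-up corpus: the first passage repeats query words but is off-topic.
corpus = [
    "the gpu the gpu the gpu was mentioned in many unrelated places of the report",
    "training used a100 gpu nodes",
    "the weather was sunny",
]
query = "where is the a100 gpu used"

candidates = first_stage(query, corpus, k=2)            # coarse ordering
reranked = rerank(query, candidates, jaccard, top_n=1)  # refined ordering
print(candidates[0])
print(reranked[0][1])
```

In this toy run, the first stage prefers the passage that merely repeats query words, while the second stage moves the passage that actually answers the question to the top.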

To access the NVIDIA NeMo Retriever collection of world-class information retrieval microservices, see the NVIDIA API Catalog.

Tutorial prerequisites

To make the best use of this tutorial, you need a basic knowledge of LLM inference pipelines along with the following resources:

Set up

To get started, create a free account with the NVIDIA API Catalog and follow these steps:

- Select any model.

- Choose Python, Get API Key.

- Save the generated key as NVIDIA_API_KEY.

From there, you should have access to the endpoints.
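The LangChain NVIDIA classes used later in this post read the key from the NVIDIA_API_KEY environment variable. A small guard such as the following can fail early with a clear message when the variable is missing. The helper name `load_nvidia_api_key` is hypothetical and is not part of any NVIDIA or LangChain package.

```python
import os
from typing import Mapping, Optional


def load_nvidia_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, or raise with a helpful message.

    `env` defaults to os.environ; passing a dict makes the helper easy to test.
    """
    env = os.environ if env is None else env
    key = env.get("NVIDIA_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "NVIDIA_API_KEY is not set. Export the key generated in the "
            "API Catalog before running the examples."
        )
    return key


# Typical use at the top of a script or notebook:
# api_key = load_nvidia_api_key()
```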

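Now, install LangChain, the NVIDIA AI Endpoints integration, and FAISS, along with the langchain-community and pypdf packages that the document loader in this post depends on. If you don’t have a CUDA-capable GPU, faiss-cpu can be installed in place of faiss-gpu:

pip install langchain langchain-community

pip install langchain_nvidia_ai_endpoints

pip install faiss-gpu

pip install pypdf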

Load relevant documents

For this example, load the recent NVIDIA publication on multi-modal LLMs, VILA: On Pre-training for Visual Language Models. This single PDF is used for all the examples in the post, but the code can easily be extended to load multiple documents. The code assumes that the PDF has been saved to the working directory as 2312.07533v4.pdf.

from langchain_community.document_loaders import PyPDFLoader

document = PyPDFLoader("2312.07533v4.pdf").load()

Split into chunks

Next, split the documents into separate chunks.

Pay attention to the chunk_size parameter of the text splitter. Setting the right chunk size is critical for RAG performance, as much of a RAG pipeline’s success depends on the retrieval step finding the right context for generation. The retrieval step typically examines smaller chunks of the original text rather than whole documents.

The entire prompt (retrieved chunks plus the user query) must fit within the LLM’s context window. Avoid overly large chunks, and leave room for the estimated query size. Experiment with different chunk sizes; typical values are 100-600 tokens, depending on the LLM. Note that RecursiveCharacterTextSplitter measures chunk_size and chunk_overlap in characters by default, not tokens, so the 800-character chunks below are considerably smaller than 800 tokens.
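A rough budget check makes the context-window constraint concrete. Everything in this sketch is an assumption for illustration: the 4-characters-per-token ratio is only a rule of thumb for English text (a real tokenizer will differ), and the 4,096-token window, query size, and answer allowance are hypothetical values.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English; not a real tokenizer


def estimate_tokens(num_chars: int) -> int:
    """Approximate the token count of a text from its character length."""
    return math.ceil(num_chars / CHARS_PER_TOKEN)


def prompt_budget(context_window, chunk_chars, num_chunks, query_tokens, answer_tokens):
    """Return (tokens needed, whether they fit) for a prompt built from chunks."""
    needed = estimate_tokens(chunk_chars) * num_chunks + query_tokens + answer_tokens
    return needed, needed <= context_window


# Five 800-character chunks: 200 * 5 + 30 + 512 = 1542 tokens.
needed_small, fits_small = prompt_budget(4096, 800, 5, 30, 512)

# Forty-five 800-character chunks: 200 * 45 + 30 + 512 = 9542 tokens.
needed_large, fits_large = prompt_budget(4096, 800, 45, 30, 512)

print(needed_small, fits_small)
print(needed_large, fits_large)
```

Under these assumed numbers, a handful of chunks fits comfortably, while passing a large candidate set straight to the LLM does not. This is one reason to trim the candidates before generation.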

from langchain_text_splitters import RecursiveCharacterTextSplitter

text_splitter = RecursiveCharacterTextSplitter(chunk_size=800, chunk_overlap=200)

texts = text_splitter.split_documents(document)
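To see what chunk_size and chunk_overlap control, here is a simplified fixed-window splitter. It is not the algorithm that RecursiveCharacterTextSplitter uses (that splitter prefers to break on separators such as paragraphs and sentences). It only shows how consecutive chunks share characters when an overlap is set.

```python
from typing import List


def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Cut text into fixed-size character windows that overlap their neighbors."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# With chunk_size=4 and chunk_overlap=2, each chunk repeats the last
# two characters of the previous one.
print(split_with_overlap("abcdefghij", chunk_size=4, chunk_overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']
```

The overlap means that a sentence falling on a chunk boundary still appears whole in at least one chunk, at the cost of storing and embedding some text twice.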

Generate embeddings

Next, generate embeddings using NVIDIA AI Foundation endpoints and save the embeddings to an offline vector store in the /embed directory for future re-use.

For this task, use FAISS, a library for efficient similarity search and clustering of dense vectors. It contains algorithms that search in sets of vectors of any size, up to ones that possibly do not fit in RAM.

from langchain_nvidia_ai_endpoints import NVIDIAEmbeddings

from langchain_community.vectorstores import FAISS

embeddings = NVIDIAEmbeddings()

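db = FAISS.from_documents(texts, embeddings)

# Persist the index for re-use; the relative folder name "embed" is an assumed location.

db.save_local("embed")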
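Conceptually, a vector store answers the question "which stored vectors are closest to the query vector?" The brute-force sketch below uses cosine similarity as one common choice; FAISS indexes can use other metrics, such as L2 distance, and far more efficient search structures. The three-dimensional vectors and their labels are made up for illustration and are not real embeddings.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec: List[float], index: Dict[str, List[float]], k: int) -> List[str]:
    """Return the labels of the k stored vectors most similar to the query."""
    ranked = sorted(index.items(), key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [label for label, _ in ranked[:k]]


# Toy "embeddings" keyed by a chunk label.
index = {
    "training cost": [0.9, 0.1, 0.0],
    "dataset blend": [0.1, 0.9, 0.1],
    "image resolution": [0.2, 0.2, 0.9],
}
query_vec = [1.0, 0.2, 0.1]

print(top_k(query_vec, index, k=2))
# ['training cost', 'image resolution']
```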

Create a basic retriever

Now create a basic retriever based on the document and search for the most relevant chunks for your query. This code retrieves the 45 chunks most relevant to your query, based on a simple similarity search:

retriever = db.as_retriever(search_kwargs={"k": 45})

query = "Where is the A100 GPU used?"

docs = retriever.invoke(query)

Add a re-ranking step

Now add a re-ranking step using the NeMo Retriever reranking NIM. This GPU-accelerated model is optimized to output a probability score that a given passage contains the information needed to answer a question. It re-ranks the previously fetched chunks by their relevance to the same query.

Pass the NIM as the base compressor to the LangChain contextual compression retriever, which improves retrieval by compressing and filtering documents based on the query context before returning them.

from langchain_nvidia_ai_endpoints import NVIDIARerank

from langchain.retrievers.contextual_compression import ContextualCompressionRetriever

reranker = NVIDIARerank()

compression_retriever = ContextualCompressionRetriever(

base_compressor=reranker, base_retriever=retriever

)

reranked_chunks = compression_retriever.invoke(query)
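A binary classification head typically emits one raw score (a logit) per query-passage pair, and a sigmoid maps that score to a value between 0 and 1 that can be read as a probability. The sketch below assumes that setup. Whether the NIM returns logits or probabilities is not stated in this post, and the chunk IDs and logit values are made up.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(logit: float) -> float:
    """Numerically stable logistic function."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    exp_logit = math.exp(logit)
    return exp_logit / (1.0 + exp_logit)


def rank_by_probability(logits: Dict[str, float]) -> List[Tuple[str, float]]:
    """Convert per-passage logits to probabilities and sort, best first."""
    scored = [(passage_id, sigmoid(value)) for passage_id, value in logits.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


# Hypothetical logits for three retrieved chunks.
toy_logits = {"chunk-a": -2.0, "chunk-b": 3.0, "chunk-c": 0.0}
ranked = rank_by_probability(toy_logits)

for passage_id, probability in ranked:
    print(f"{passage_id}: {probability:.3f}")
```

Because the sigmoid is monotonic, sorting by probability gives the same order as sorting by logit. The conversion matters mainly when you want to apply a threshold, for example dropping chunks that score below 0.5.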

The reranking NIM recognizes the most relevant chunk as the paragraph related to training cost at the end of the paper, which specifies the A100 GPU:

Table 10. The SFT blend we used during the ablation study.

B. Training Cost

We perform training on 16 A100 GPU nodes, each node

has 8 GPUs. The training hours for each stage of the 7B

model are: projector initialization: 4 hours; visual language

pre-training: 30 hours; visual instruction-tuning: 6 hours.

The training corresponds to a total of 5.1k GPU hours. Most

of the computation is spent on the pre-training stage.

We have not performed training throughput optimizations

like sample packing [ 32] or sample length clustering. We

believe we can reduce at least 30% of the training time with

proper optimization. We also notice that the training time is

much longer as we used a high image resolution of 336 ×336

(corresponding to 576 tokens/image). We should be able to

Combining results from multiple data sources

In addition to enhancing accuracy for a single data source, you can use re-ranking to combine multiple data sources in a RAG pipeline.

Consider a pipeline with data from a semantic store, such as the previous example, as well as a BM25 store. Each store is queried independently and returns results that the individual store considers to be highly relevant. Figuring out the overall relevance of the results is where re-ranking comes into play.
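For readers unfamiliar with BM25, the following is a minimal sketch of one common variant of the scoring formula. It uses whitespace tokenization and the frequently used defaults k1=1.5 and b=0.75, all of which are assumptions here. A production BM25 store would add proper tokenization and an inverted index.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Score every document against the query with a basic BM25 formula."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    if n_docs == 0:
        return []

    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs
    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            tf = term_freq[term]
            # Length normalization: long documents need more matches to score well.
            denom = tf + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf * (k1 + 1) / denom
        scores.append(score)
    return scores


docs = [
    "training on a100 gpu nodes",
    "visual language pre-training data",
    "image resolution and tokens",
]
scores = bm25_scores("a100 gpu", docs)
print(scores)
```

Because BM25 rewards exact term matches, it can surface passages containing rare identifiers such as "a100" that an embedding-based search might rank lower, which is why the two stores complement each other.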

The following code example combines the previous semantic search results with BM25 results. Here, bm25_docs is assumed to hold the documents returned by a BM25 retriever for the same query. The reranking NIM then orders the results in combined_docs by their relevance to the query.

all_docs = docs + bm25_docs

reranker.top_n = 5

combined_docs = reranker.compress_documents(query=query, documents=all_docs)

For more information, including the setup of a BM25 store, see the full notebook in the /langchain-ai/langchain-nvidia GitHub repo.
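When two stores index the same documents, they can return the same chunk, so a simple concatenation may contain duplicates that are scored twice and can occupy more than one of the top_n slots. One possible safeguard is to deduplicate before re-ranking. The sketch below works on plain strings; the helper `merge_unique` is hypothetical, and with LangChain documents you would apply the same idea to each document's page_content.

```python
from typing import Iterable, List


def merge_unique(*result_lists: Iterable[str]) -> List[str]:
    """Concatenate result lists, keeping the first copy of each distinct chunk.

    Chunks are compared after lowercasing and collapsing whitespace, so
    trivially different copies of the same text count as duplicates.
    """
    seen = set()
    merged = []
    for results in result_lists:
        for text in results:
            key = " ".join(text.split()).lower()
            if key not in seen:
                seen.add(key)
                merged.append(text)
    return merged


semantic_hits = ["A100 nodes", "Image tokens"]
keyword_hits = ["a100  nodes", "SFT blend"]

print(merge_unique(semantic_hits, keyword_hits))
# ['A100 nodes', 'Image tokens', 'SFT blend']
```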

Connect to a RAG pipeline

In addition to using re-ranking independently, you can add it to a RAG pipeline to further enhance responses by ensuring that they use the most relevant chunks for augmenting the original query.

In this case, connect the compression_retriever object from the previous step to the RAG pipeline.

from langchain.chains import RetrievalQA

from langchain_nvidia_ai_endpoints import ChatNVIDIA

chain = RetrievalQA.from_chain_type(

llm=ChatNVIDIA(temperature=0), retriever=compression_retriever

)

result = chain.invoke({"query": query})

print(result.get("result"))
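Behind the scenes, this kind of chain places the retrieved chunks into a prompt together with the question and sends the result to the LLM. The following sketch shows the idea. The template wording and the `build_prompt` helper are hypothetical and are not the prompt that RetrievalQA actually uses.

```python
from typing import List


def build_prompt(query: str, chunks: List[str], max_chunks: int = 3) -> str:
    """Assemble a single prompt from the top-ranked chunks and the user query."""
    numbered = [
        f"[{position}] {chunk.strip()}"
        for position, chunk in enumerate(chunks[:max_chunks], start=1)
    ]
    context = "\n\n".join(numbered)
    return (
        "Use only the context below to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
        "Answer:"
    )


# Made-up chunks, already ordered by relevance.
ranked_chunks = [
    "We perform training on 16 A100 GPU nodes.",
    "The SFT blend we used during the ablation study.",
    "Image resolution of 336 x 336.",
    "An unrelated fourth chunk.",
]
prompt = build_prompt("Where is the A100 GPU used?", ranked_chunks)
print(prompt)
```

Because only the first few chunks reach the prompt, the order produced by the re-ranking step directly determines what the LLM sees.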

The RAG pipeline now uses the correct top-ranked chunk and summarizes the main insights:

The A100 GPU is used for training the 7B model in the supervised

fine-tuning/instruction tuning ablation study. The training is

performed on 16 A100 GPU nodes, with each node having 8 GPUs. The

training hours for each stage of the 7B model are: projector

initialization: 4 hours; visual language pre-training: 30 hours;

and visual instruction-tuning: 6 hours. The total training time

corresponds to 5.1k GPU hours, with most of the computation being

spent on the pre-training stage. The training time could potentially

be reduced by at least 30% with proper optimization. The high image

resolution of 336 ×336 used in the training corresponds to 576

tokens/image.

Conclusion

RAG has emerged as a powerful approach, combining the strengths of LLMs and dense vector representations. By using dense vector representations, RAG models can scale efficiently, making them well-suited for large-scale enterprise applications, such as multilingual customer service chatbots and code generation agents.

As LLMs continue to evolve, it is clear that RAG will play an increasingly important role in driving innovation and delivering high-quality, intelligent systems that can understand and generate human-like language.

When building your own RAG pipeline, it’s important to correctly split the vector store documents into chunks by optimizing the chunk size for your specific content and selecting an LLM with a suitable context length. In some cases, complex chains of multiple LLMs may be required. To optimize RAG performance and measure success, use a collection of robust evaluators and metrics.
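As a starting point for evaluation, two simple retrieval metrics are recall@k and reciprocal rank. The sketch below computes both for a single query. The chunk IDs, the orderings, and the relevance label are invented for illustration and are not measurements from this tutorial.

```python
from typing import Iterable, List


def recall_at_k(ranked_ids: List[str], relevant_ids: Iterable[str], k: int) -> float:
    """Fraction of the relevant chunks that appear in the top k results."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(set(ranked_ids[:k]) & relevant) / len(relevant)


def reciprocal_rank(ranked_ids: List[str], relevant_ids: Iterable[str]) -> float:
    """1 / position of the first relevant chunk, or 0.0 if none is found."""
    relevant = set(relevant_ids)
    for position, chunk_id in enumerate(ranked_ids, start=1):
        if chunk_id in relevant:
            return 1.0 / position
    return 0.0


# Hypothetical orderings for one query, before and after re-ranking.
relevant = {"c1"}
before = ["c3", "c7", "c1", "c9"]
after = ["c1", "c3", "c9", "c7"]

print(recall_at_k(before, relevant, k=2), reciprocal_rank(before, relevant))
print(recall_at_k(after, relevant, k=2), reciprocal_rank(after, relevant))
```

Averaging these values over a labeled set of queries gives a simple way to compare pipeline variants, such as retrieval with and without the re-ranking step.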

For more information about additional models and chains, see NVIDIA AI LangChain endpoints.