Toyota shifts into overdrive: Developing an AI platform for enhanced manufacturing efficiency

Kohdai Gotoh

AI Group, Production Digital Transformation Dept, Toyota Motor Corporation

The automotive industry is facing a profound transformation, driven by the rise of CASE: connected cars, autonomous and automated driving, shared mobility, and electrification. Simultaneously, manufacturers face the imperative to further increase efficiency, automate manufacturing, and improve quality. AI has emerged as a critical enabler of this evolution. In this dynamic landscape, Toyota turned to Google Cloud's AI Infrastructure to build an innovative AI Platform that empowers factory workers to develop and deploy machine learning models across key use cases.

The renowned Toyota Production System, rooted in the principles of "Jidoka" (automation with a human touch) and "Just-in-Time" inventory management, has long been the gold standard in manufacturing efficiency. However, certain parts of this system are resistant to conventional automation.

We started experimenting with using AI internally in 2018. However, a shortage of employees with the expertise required for AI development created a bottleneck in promoting its wider use. Seeking to overcome these limitations, Toyota's Production Digital Transformation Office embarked on a mission to democratize AI development within its factories in 2022.

Our goal was to build an AI Platform that enabled factory floor employees, regardless of their AI expertise, to create machine learning models with ease. This would facilitate the automation of manual, labor-intensive tasks, freeing up human workers to focus on higher-value activities such as process optimization, AI implementation in other production areas, and data-driven decision-making.

AI Platform is the collective term for the AI technologies we have developed, including web applications that enable easy creation of learning models on the manufacturing floor, compatible equipment on the manufacturing line, and the systems that support these technologies.

When we finished implementing the AI Platform earlier this year, we found it could save us as many as 10,000 hours of mundane work annually through manufacturing efficiency and process optimization.

For this company-wide initiative, we brought the development in-house to accumulate know-how. It was also important to stay up-to-date with the latest technologies so we could accelerate development and broaden opportunities to deploy AI. Finally, it was crucial to democratize our AI technology into a truly easy-to-use platform. We knew the project needed to be led by those working on the manufacturing floor if we wanted them to use AI more proactively. At the same time, we wanted to improve the development experience for our software engineers.

Hybrid Architecture Brings Us Numerous Advantages

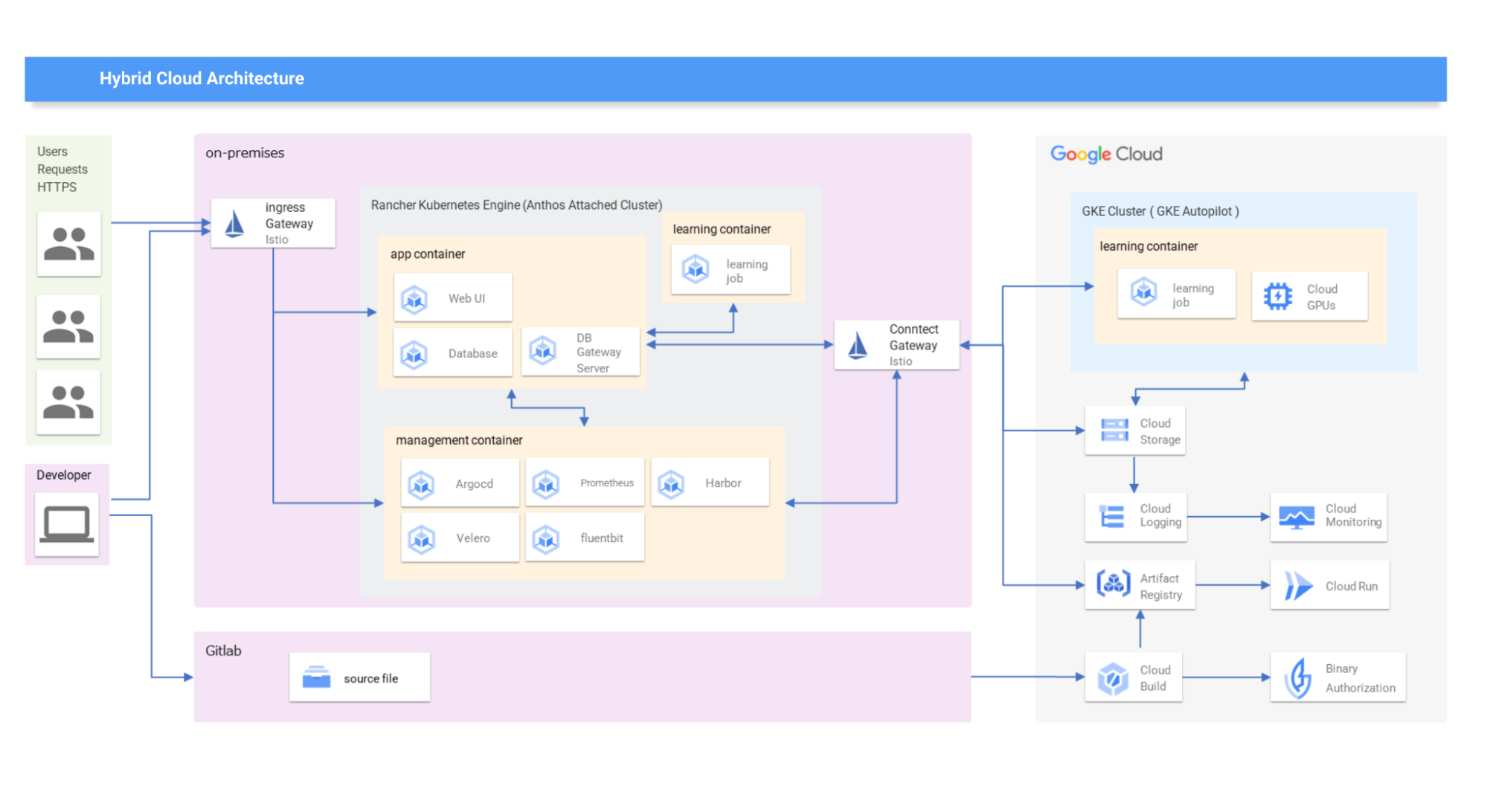

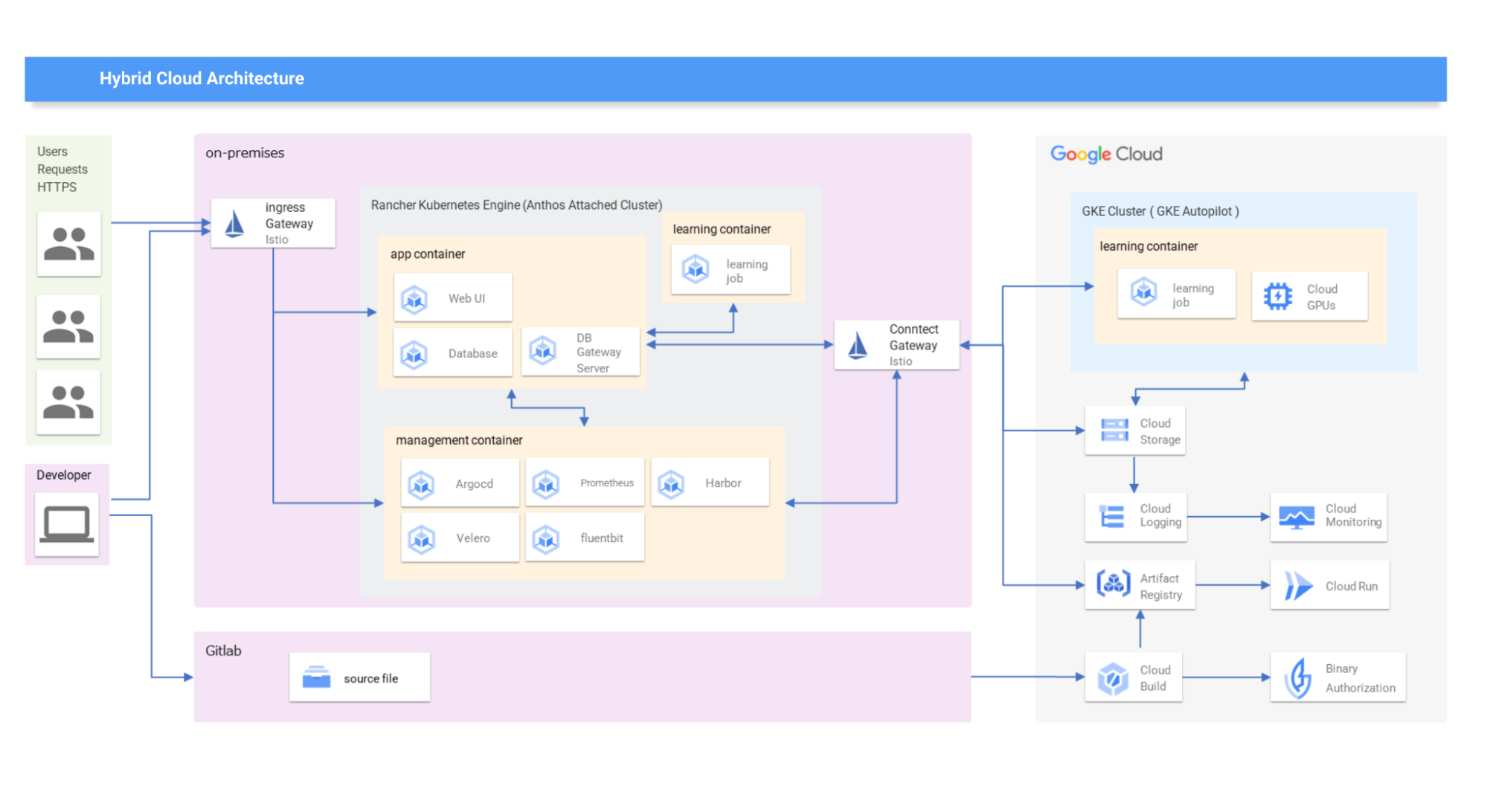

To power our AI Platform, we opted for a hybrid architecture that combines our on-premises infrastructure and cloud computing.

The first objective was to promote agile development. The hybrid cloud environment, coupled with a microservices-based architecture and agile development methodologies, allowed us to rapidly iterate and deploy new features while maintaining robust security. We moved to a microservices architecture because we needed to respond flexibly to changes in services and libraries. As part of this shift, our team also adopted Scrum, a development method in which we release features incrementally in short cycles of a few weeks, which ultimately streamlined our workflows.

If we had developed machine learning systems solely on-premises to ensure security, we would have needed to perform security checks on a large amount of middleware, including dependencies, whenever we added a new feature or library. With the hybrid cloud, on the other hand, we can quickly build complex, high-volume container images while maintaining a high level of security.

The second objective was to use resources effectively. The manufacturing floor, where AI models are created, now also faces strict cost efficiency requirements.

With a hybrid cloud approach, we can use on-premises resources during normal operations and scale to the cloud during peak demand, thus reducing GPU usage costs and optimizing performance. This allows us to flexibly adapt to an expected increase in the number of users of AI Platform in the future, as well.
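The "on-premises first, cloud at peak" policy described above can be sketched in a few lines. This is only an illustration of the idea, not Toyota's scheduler: the `GpuPool` class, the `place_job` function, and the pool capacities are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class GpuPool:
    """A pool of GPUs. Names and capacities are illustrative assumptions."""
    name: str
    capacity: int
    in_use: int = 0

    def free(self) -> int:
        return self.capacity - self.in_use


def place_job(gpus_needed: int, on_prem: GpuPool, cloud: GpuPool) -> str:
    """Prefer on-premises capacity; burst to the cloud only when it is full."""
    for pool in (on_prem, cloud):
        if pool.free() >= gpus_needed:
            pool.in_use += gpus_needed
            return pool.name
    raise RuntimeError("no GPU capacity available in either pool")


if __name__ == "__main__":
    on_prem = GpuPool("on-prem", capacity=2)
    cloud = GpuPool("cloud", capacity=8)
    # Three single-GPU training jobs arrive during a demand peak.
    placements = [place_job(1, on_prem, cloud) for _ in range(3)]
    print(placements)  # ['on-prem', 'on-prem', 'cloud']
```

The first two jobs fill the small on-premises pool, and only the third spills over to the cloud, which is the behavior that keeps cloud GPU spending tied to actual peaks.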

Furthermore, adopting a hybrid cloud helps us achieve cost savings on facility investments. By leveraging the cloud for scaling capacity, we minimized the need for extensive on-premises hardware investments. In a traditional on-premises environment, we would need to set up high-performance servers with GPUs in every factory. With a hybrid cloud, we can reduce the number of on-premises servers to one and use the cloud to cover the additional processing capacity whenever needed. The hybrid cloud’s concept of "using resources when and only as needed" aligns well with our "Just-in-Time" method.

The Reasons We Chose Google Cloud AI Hypercomputer

Several factors influenced our decision when choosing a cloud partner for the development of the Toyota AI Platform’s hybrid architecture. Ultimately, we chose Google Cloud.

The first is the flexibility of using GPUs. In addition to being able to use high-performance GPUs starting from a single unit, we could use A2 VMs with Google Cloud's unique features like multi-instance GPUs and time-sharing GPUs. This flexibility reduces idle compute resources and optimizes costs, increasing business value over a given time by allowing scarce GPUs to run more machine learning training jobs. In addition, Dynamic Workload Scheduler helps us manage and schedule GPU resources efficiently, which keeps running costs down.
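As a rough illustration of how a workload asks for a shared GPU on GKE, the sketch below builds a Kubernetes Pod manifest as a plain Python dictionary. The node selector keys are the labels GKE documents for GPU time-sharing and multi-instance GPUs. Everything else is an assumption made for the example and is not taken from Toyota's configuration: the function name, the pod name, the image path, the two-clients-per-GPU setting, and the `1g.5gb` partition size.

```python
import json


def training_pod_manifest(name: str, image: str,
                          sharing: str = "time-sharing",
                          max_clients: int = 2) -> dict:
    """Build a Pod manifest that requests one (shared) A100 GPU on GKE."""
    node_selector = {"cloud.google.com/gke-accelerator": "nvidia-tesla-a100"}
    if sharing == "time-sharing":
        # Several containers take turns on the same physical GPU.
        node_selector["cloud.google.com/gke-gpu-sharing-strategy"] = "time-sharing"
        node_selector["cloud.google.com/gke-max-shared-clients-per-gpu"] = str(max_clients)
    elif sharing == "mig":
        # Multi-instance GPU: the A100 is split into isolated partitions.
        node_selector["cloud.google.com/gke-gpu-partition-size"] = "1g.5gb"
    else:
        raise ValueError(f"unknown sharing mode: {sharing}")

    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "nodeSelector": node_selector,
            "restartPolicy": "Never",
            "containers": [{
                "name": "trainer",
                "image": image,  # hypothetical image path
                "resources": {"limits": {"nvidia.com/gpu": 1}},
            }],
        },
    }


if __name__ == "__main__":
    pod = training_pod_manifest("train-demo", "example.invalid/trainer:latest")
    print(json.dumps(pod, indent=2))
```

Because the pod still requests `nvidia.com/gpu: 1`, the sharing mode is decided entirely by which node it is scheduled onto, so the training code itself does not need to change.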

Next is ease of use. We anticipate that we will need to secure more GPU resources across multiple regions in the future. With Google Cloud, we can manage GPU resources through a single VPC, avoiding network complexity. When we evaluated which system to deploy, only Google Cloud offered this capability.

The speed of builds and processing was also a big appeal for us. In particular, Google Kubernetes Engine (GKE), with its Autopilot mode and Image Streaming, provides flexibility and speed, allowing us to improve cost-effectiveness by reducing our operational burden. During the system evaluation, we measured the communication speed of containerized workloads and found that Google Cloud scaled from zero four times faster than other existing services. The speed of communication and processing is extremely important, as we use up to 10,000 images when creating a learning model. When we first started developing AI technology in-house, we struggled with flexible system scaling and operations. In this regard, too, Google Cloud was the ideal choice.

Completed Large-scale Development in 1.5 Years with 6 Members

With Google Cloud's support, a small team of six developers achieved a remarkable feat by successfully building and deploying the AI Platform in about half the time it would take for a standard system development project at Toyota. This rapid development was facilitated by Google Cloud's user-friendly tools, collaborative approach, and alignment with Toyota's automation-focused culture.

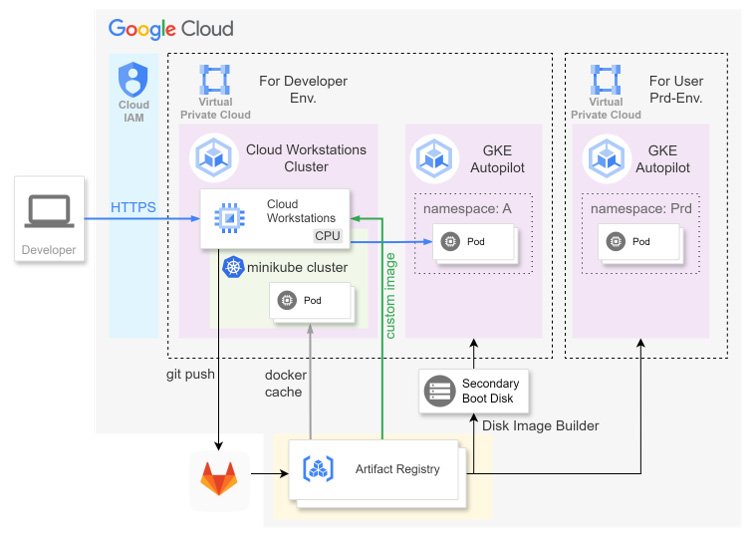

After choosing Google Cloud, we began discussing the architecture with the Google Cloud team. We then worked on modifying the web app architecture for the cloud lift, building the hybrid cloud, and developing human resources within the company, while learning skills for the "internalization of technology (acquisition and accumulation of new know-how)".

During the implementation process, we divided the workloads into on-premises and cloud architectures, and implemented best practices to monitor communications and resources. This process also involved migrating CI/CD pipelines and image data to the cloud. By performing builds in the cloud and caching images on-premises, we ensured quick start-up and flexible operations.

In addition to the ease of development with Google Cloud products, cultural factors also contributed greatly to the success of this project. Our objective of automating the manufacturing process as much as possible is in line with Google's concept of SRE (Site Reliability Engineering), so we shared the same sense of purpose.

Currently, in the hybrid cloud, we deploy a GKE Enterprise cluster on-premises and link it to the GKE cluster on Google Cloud. When we develop our AI Platform and web apps, we run Cloud Build with Git CI triggers, verify container image vulnerabilities with Artifact Registry and Container Analysis, and ensure a secure environment with Binary Authorization. On the manufacturing floor, structured data such as numerical data and unstructured data such as images are deployed on GKE via a web app, and learning models are created on N1 VMs with NVIDIA T4 GPUs and A2 VMs with NVIDIA A100 GPUs.
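The build, scan, and admit flow in this paragraph can be pictured with a small conceptual model. It is plain Python and does not call any Google Cloud API. The class and function names, the example digest, and in particular the rule that HIGH and CRITICAL findings block deployment are assumptions made for illustration, not the policy Toyota actually uses.

```python
from dataclasses import dataclass, field
from typing import List

# Assumed policy for this sketch: these severities block a deployment.
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


@dataclass
class ImageRecord:
    """A built container image plus the results attached to it by the pipeline."""
    digest: str
    finding_severities: List[str] = field(default_factory=list)
    attested: bool = False


def scan_passes(image: ImageRecord) -> bool:
    """Vulnerability-scan step: pass only if no blocking finding is present."""
    return not (BLOCKING_SEVERITIES & set(image.finding_severities))


def attest_if_clean(image: ImageRecord) -> None:
    """Pipeline step: sign off on the image only after a clean scan."""
    if scan_passes(image):
        image.attested = True


def admit(image: ImageRecord) -> bool:
    """Cluster-side check: only attested images may be deployed."""
    return image.attested


if __name__ == "__main__":
    clean = ImageRecord("sha256:aaa", ["LOW"])
    risky = ImageRecord("sha256:bbb", ["LOW", "CRITICAL"])
    for img in (clean, risky):
        attest_if_clean(img)
        print(img.digest, "admitted" if admit(img) else "rejected")
```

Separating the attestation step in the pipeline from the admission check in the cluster means an image that skipped the pipeline is rejected by default, even if nobody scanned it at all.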

Remarkable Results Achieved Through Operating AI Platform

We have achieved remarkable results with this operational structure.

Enhanced Developer Experience: First, with regard to the development experience, waiting time for tasks has been reduced, and operational and security burdens have been lifted, allowing us to focus even more on development.

Increased User Adoption: Additionally, the use of our AI Platform on the manufacturing floor is growing. Creating a learning model typically takes 10 to 15 minutes at the shortest and up to 10 hours at the longest. GKE's Image Streaming streamlined pod initialization and accelerated learning, resulting in a 20% reduction in learning model creation time. This improvement has enhanced the user experience (UX) on the manufacturing floor, leading to a surge in the number of users. Consequently, the number of models created in manufacturing has steadily increased, rising from 8,000 in 2023 to 10,000 in 2024. The widespread adoption of this technology has allowed for a substantial reduction of over 10,000 man-hours per year in the actual manufacturing process, improving both efficiency and productivity.
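To put these figures in perspective, the short calculation below works out the year-over-year growth in models and what a 20% reduction means for runs of different lengths. The model counts and the 20% figure come from the text above. Applying the 20% uniformly to a 10-minute, a 15-minute, and a 10-hour run is an assumption for illustration only, since the article does not say how the saving is distributed across job sizes.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100


def reduced_minutes(minutes: float, reduction_pct: float) -> float:
    """Duration after cutting `reduction_pct` percent from `minutes`."""
    return minutes * (100 - reduction_pct) / 100


if __name__ == "__main__":
    # Models created per year, as reported in the article.
    print(percent_change(8_000, 10_000))  # 25.0

    # Illustrative run lengths in minutes, each reduced by the reported 20%.
    for minutes in (10, 15, 600):
        print(minutes, "->", reduced_minutes(minutes, 20))
    # 10 -> 8.0
    # 15 -> 12.0
    # 600 -> 480.0
```

Under that assumption, the saving on a short run is a couple of minutes, while on the longest runs it amounts to about two hours per model.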

Expanding Impact: AI Platform is already in use at all of our car and unit manufacturing factories (10 factories in total), and its range of applications is expanding. At the Takaoka factory, the platform is used not only to inspect finished parts but also within the manufacturing process itself: to inspect the application of adhesives used to attach glass to back doors, and to detect abnormalities in injection molding machines used for manufacturing bumpers and other parts. Meanwhile, the number of active users in the company has increased to nearly 1,200, and more than 400 employees participate in in-house training programs each year.

Recently, there have been cases where people who were developing in other departments became interested in Google Cloud and joined our development team. Furthermore, this project has sparked an unprecedented shift within the company: resistance to cloud technology itself is diminishing, and other departments are beginning to consider adopting it.

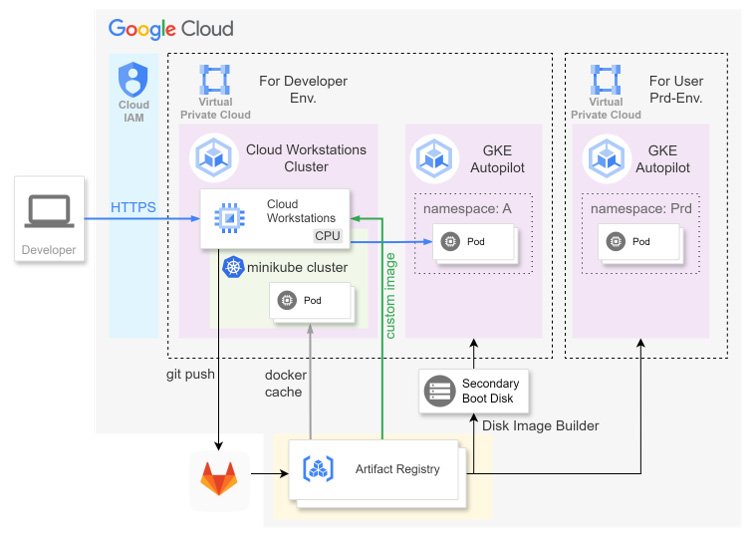

Utilizing Cloud Workstations for Further Productivity With an Eye on Generative AI

For the AI Platform, we plan to develop an AI model that can set more detailed criteria for detection, implement it in an automated picking process, and use it for maintenance and predictive management of the entire production line. We are also developing original infrastructure models based on the big data collected on the platform, and expect to use the AI Platform more proactively in the future.

Currently, the development team compiles work logs and feedback from the manufacturing floor, and we believe that the day will soon come when we will start utilizing generative AI. For example, the team is considering using AI to create images for testing machine learning during the production preparation stage, which has been challenging due to a lack of data. In addition, we are considering using Gemini Code Assist to improve the developer experience, or using Gemini with retrieval-augmented generation (RAG) over our accumulated knowledge to implement a recommendation feature.

In March 2024, we joined Google Cloud's Tech Acceleration Program (TAP) and implemented Cloud Workstations. This also serves the goals we have been pursuing: to improve efficiency, reduce workload, and create a more comfortable work environment by using managed services.

Through this project, led by the manufacturing floor, we have established a "new way of manufacturing" where anyone can easily create and utilize AI learning models, significantly increasing the business impact for our company. This was enabled by the cutting-edge technology and services provided by Google Cloud.

Like "Jidoka" (autonomation) on our production lines and the "Just-in-Time" method, the AI Platform has now become an indispensable part of our manufacturing operations. Leveraging Google Cloud, we will continue our efforts to make ever-better cars.