New Cloud Functions min instances reduces serverless cold starts

Vinod Ramachandran

Senior Product Manager

Kelsey Hightower

Principal Engineer

Cloud Functions, Google Cloud’s Function as a Service (FaaS) offering, is a lightweight compute platform for creating single-purpose, standalone functions that respond to events, without needing an administrator to manage a server or runtime environment.

Over the past year we have shipped many important new capabilities on Cloud Functions: new runtimes (Java, .NET, Ruby, PHP), new regions (now up to 22), an enhanced user and developer experience, fine-grained security, and cost and scaling controls. But even as we continue to expand the capabilities of Cloud Functions, the number-one friction point of FaaS remains the “startup tax,” a.k.a. cold starts: if your function has been scaled down to zero, it can take a few seconds for it to initialize and start serving requests.

Today, we’re excited to announce minimum (“min”) instances for Cloud Functions. By specifying a minimum number of instances of your application to keep online during periods of low demand, this new feature can dramatically improve performance for your serverless applications and workflows, minimizing your cold starts.
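To make the idea concrete, here is a minimal sketch in Python of how an instance floor changes the first request after an idle period. It is a toy model, not the platform's actual scheduler: the `FunctionPool` class, its method names, and the 50 ms initialization delay are all assumptions made for illustration.

```python
import time


class FunctionPool:
    """Toy model of one function's instance pool (hypothetical, for illustration)."""

    def __init__(self, min_instances=0, init_seconds=0.05):
        self.min_instances = min_instances
        self.init_seconds = init_seconds
        # Instances covered by the floor are initialized before any request arrives.
        self.warm = min_instances

    def scale_down(self):
        """Idle period: drop to the configured floor (zero when no floor is set)."""
        self.warm = self.min_instances

    def invoke(self):
        """Serve one request; return (latency in seconds, whether it was a cold start)."""
        start = time.perf_counter()
        cold = self.warm == 0
        if cold:
            time.sleep(self.init_seconds)  # simulated initialization cost
            self.warm = 1
        return time.perf_counter() - start, cold


if __name__ == "__main__":
    for floor in (0, 1):
        pool = FunctionPool(min_instances=floor)
        pool.scale_down()
        latency, cold = pool.invoke()
        print(f"min_instances={floor}: cold_start={cold}, latency={latency:.3f}s")
```

With a floor of zero, the first request after `scale_down()` pays the initialization delay; with a floor of one, it is served by an instance that is already warm.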

Min instances in action

Let’s take a deeper look at min instances with a popular, real-world use case: recording, transforming and serving a podcast. When you record a podcast, you need to get the audio in the right format (mp3, wav), and then publish it so that users can easily access, download and listen to it. It’s also important to make your podcast accessible to the widest audience possible, including those with trouble hearing and those who would prefer to read the transcript of the podcast.

In this post, we show a demo application that takes a recorded podcast, transcribes the audio, stores the text in Cloud Storage, and then emails an end user with a link to the transcribed file. We run the application twice, once without min instances and once with them, and compare the results.

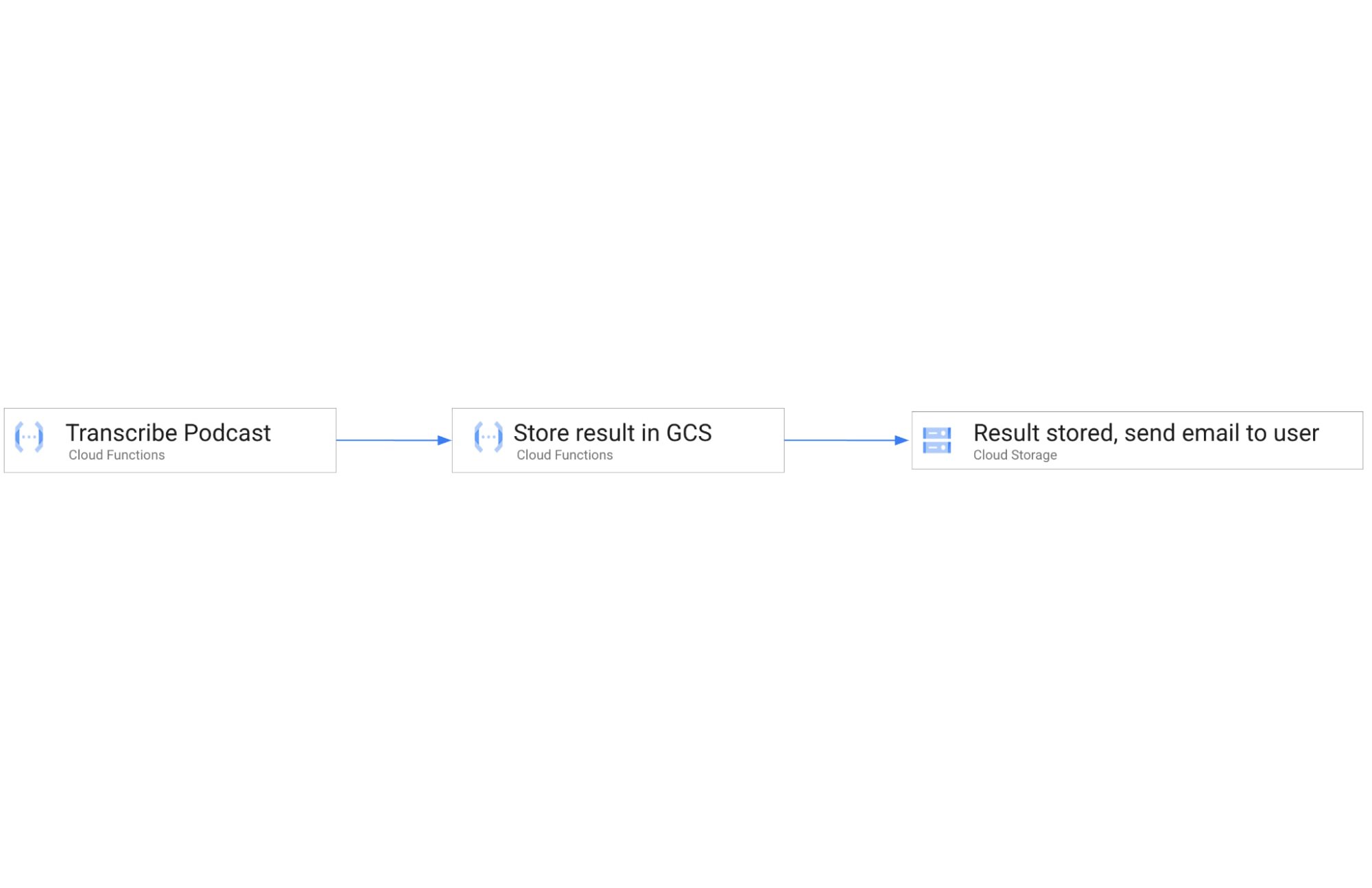

Approach 1: Building the application with Cloud Functions and Cloud Workflows

In this approach, we use Cloud Functions and Google Cloud Workflows to chain together three individual cloud functions. The first function (transcribe) transcribes the podcast, the second function (store-transcription) consumes the result of the first function in the workflow and stores it in Cloud Storage, and the third function (send-email) is triggered by Cloud Storage when the transcribed result is stored and sends an email to the user to inform them that the workflow is complete.
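The data flow described above can be sketched in plain Python. This is an in-memory stand-in, not the demo's actual code: `FakeBucket`, `make_send_email`, `run_workflow`, the placeholder transcript, and the example address are hypothetical, and a real `transcribe` function would call a speech-to-text service.

```python
class FakeBucket:
    """In-memory stand-in for a Cloud Storage bucket with an on-write trigger."""

    def __init__(self, on_finalize):
        self.objects = {}
        self.on_finalize = on_finalize

    def write(self, name, data):
        self.objects[name] = data
        self.on_finalize(name)  # mimics a storage-triggered function


def transcribe(audio_name):
    # Placeholder result; no real transcription happens in this sketch.
    return f"(transcript of {audio_name})"


def store_transcription(bucket, audio_name, text):
    object_name = f"{audio_name}.txt"
    bucket.write(object_name, text)
    return object_name


def make_send_email(outbox, recipient):
    def send_email(object_name):
        # Record the message instead of sending real mail.
        outbox.append((recipient, f"Transcript ready: {object_name}"))
    return send_email


def run_workflow(bucket, audio_name):
    """The two steps the workflow chains; send_email fires from the bucket write."""
    text = transcribe(audio_name)
    return store_transcription(bucket, audio_name, text)


if __name__ == "__main__":
    outbox = []
    bucket = FakeBucket(make_send_email(outbox, "listener@example.com"))
    run_workflow(bucket, "episode-1.mp3")
    print(outbox)
```

Note that only the first two functions are invoked by the workflow; the third runs as a side effect of the write, which mirrors the Cloud Storage trigger in the description.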

Cloud Workflows executes the functions in the right order and can be extended with more steps in the future. While the architecture in this approach is simple, extensible and easy to understand, the cold start problem remains, impacting end-to-end latency.

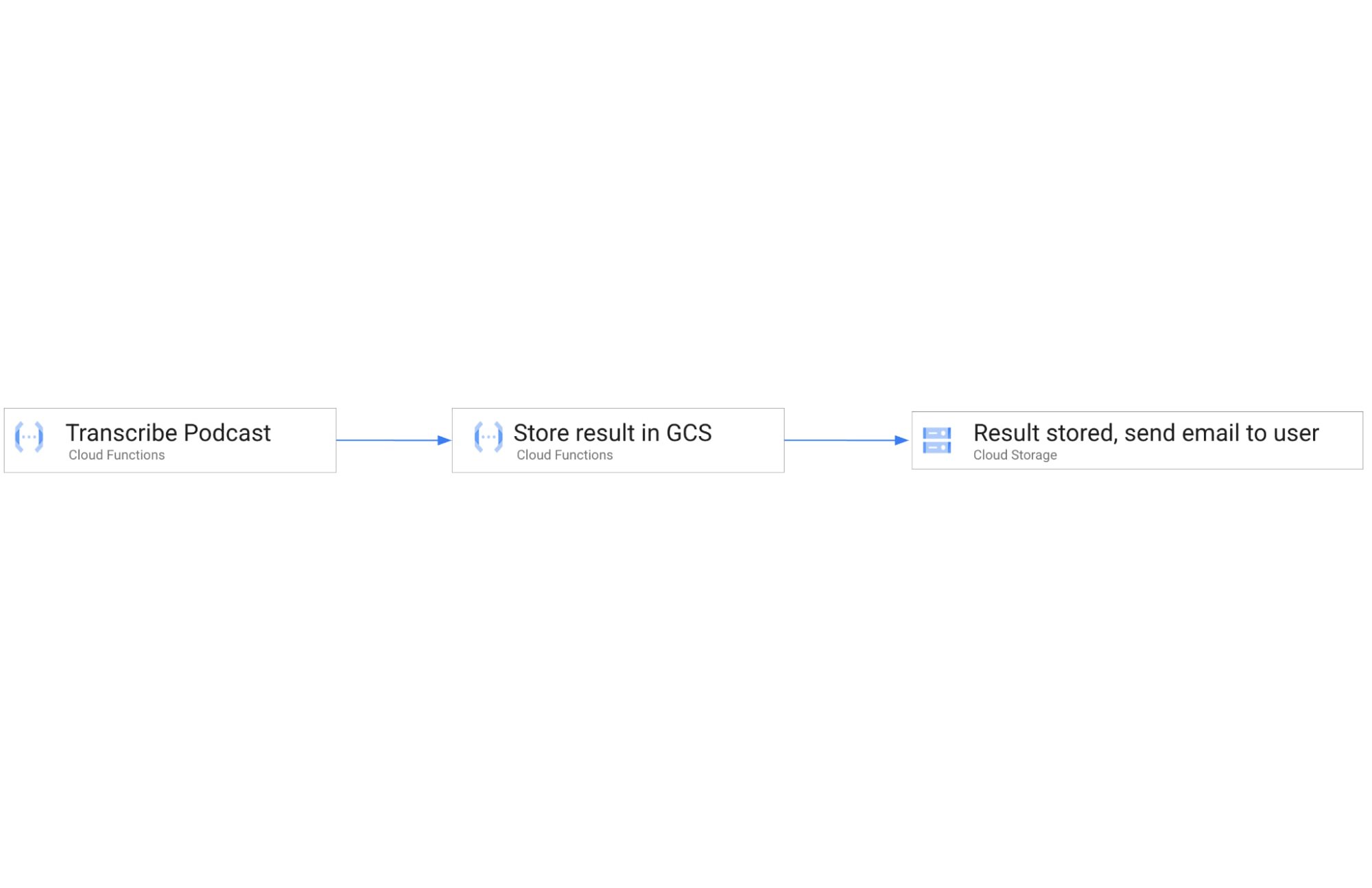

Approach 2: Building the application with Cloud Functions, Cloud Workflows and min instances

In this approach, we follow all the same steps as in Approach 1, with a slightly modified configuration that enables a set of min instances for each of the functions in the given workflow.
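As a rough sketch of what that configuration change could look like, the Python snippet below builds (but does not run) one `gcloud functions deploy` command per function with the `--min-instances` flag. The floor of 1 per function is an assumption, since the post does not state the values used, and the other flags a real deployment needs (runtime, trigger, region, source) are omitted here. Depending on your gcloud version, the exact command group may differ.

```python
# Assumed floor per function; the values used in the demo are not given in the post.
MIN_INSTANCES = {
    "transcribe": 1,
    "store-transcription": 1,
    "send-email": 1,
}


def deploy_command(function_name, min_instances):
    """Build (without executing) a gcloud invocation that sets the instance floor."""
    return [
        "gcloud", "functions", "deploy", function_name,
        "--min-instances", str(min_instances),
    ]


if __name__ == "__main__":
    for name, floor in MIN_INSTANCES.items():
        print(" ".join(deploy_command(name, floor)))
```

Keeping the floor in one mapping makes it easy to give a latency-critical function a higher value than the others.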

This approach presents the best of both worlds. It has the simplicity and elegance of wiring up the application architecture using Cloud Workflows and Cloud Functions. Further, each of the functions in this architecture uses a set of min instances to mitigate the cold-start problem and shorten the end-to-end time to transcribe the podcast.

Comparison of cold start performance

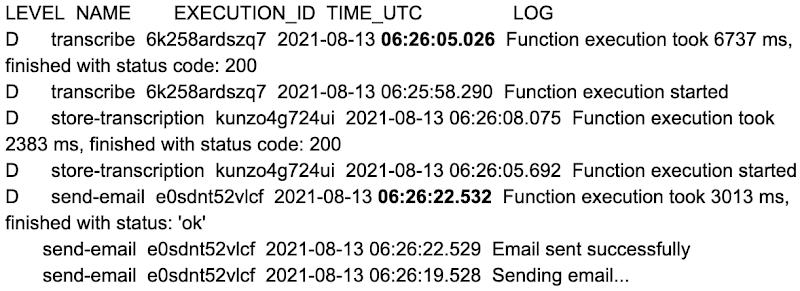

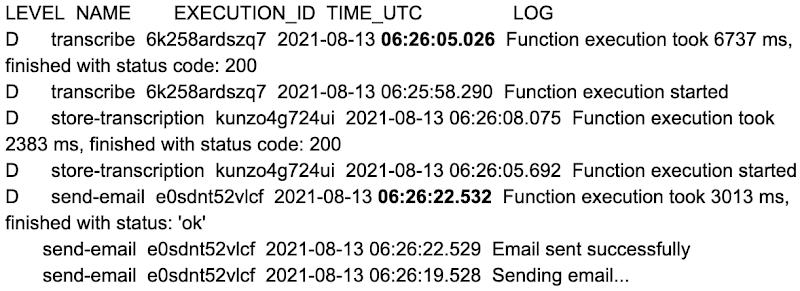

Now consider executing the podcast transcription workflow using Approach 1, where no min instances are set on the functions that make up the app. Here is an instance of this run with a snapshot of the log entries. The start and end timestamps are highlighted to show the execution of the run. You can see here that the total runtime in Approach 1 was 17 s.

Approach 1: Execution Time (without Min Instances)

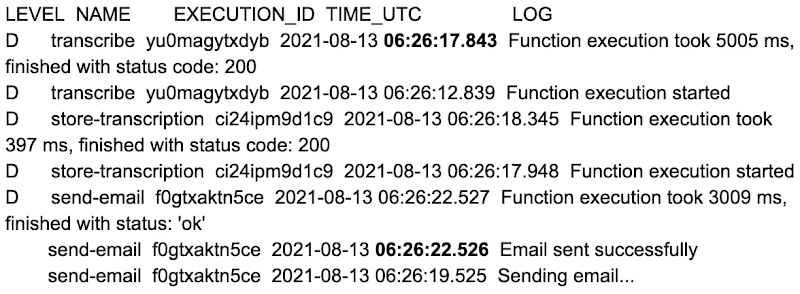

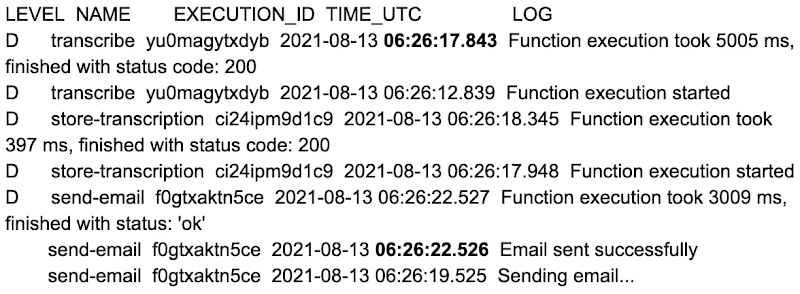

Now consider executing the podcast transcription workflow using Approach 2, where min instances are set on the functions. Here is an instance of this run with a snapshot of the log entries. The start and end timestamps are highlighted to show the execution of the run, for a total of 6 s.

Approach 2: Execution Time (with Min Instances)

That’s an 11-second difference between the two approaches. Each function in the example is hardcoded with a 2 to 3 second sleep during initialization, and when that is combined with average platform cold-start times, you can clearly see the cost of not using min instances.
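The numbers above allow a back-of-the-envelope breakdown of where the 11 seconds go. The sketch below assumes that all three functions cold-start in the Approach 1 run, that none do in the Approach 2 run, and that nothing else differs between the two runs. Each figure comes from a single run, so treat the result as a rough estimate only.

```python
WITHOUT_MIN_S = 17       # Approach 1 total, from the run above
WITH_MIN_S = 6           # Approach 2 total, from the run above
FUNCTIONS = 3            # transcribe, store-transcription, send-email
SLEEP_RANGE_S = (2, 3)   # hardcoded initialization sleep per function

saved = WITHOUT_MIN_S - WITH_MIN_S

# Total time spent in the hardcoded sleeps across all three functions.
sleep_low, sleep_high = (FUNCTIONS * s for s in SLEEP_RANGE_S)

# Whatever remains is attributed to platform cold-start time (an assumption).
platform_low = saved - sleep_high
platform_high = saved - sleep_low

if __name__ == "__main__":
    print(f"saved: {saved} s")
    print(f"hardcoded sleeps: {sleep_low} to {sleep_high} s")
    print(f"platform cold starts: {platform_low} to {platform_high} s in total")
    print(
        "per function: "
        f"{platform_low / FUNCTIONS:.1f} to {platform_high / FUNCTIONS:.1f} s"
    )
```

Under these assumptions, the hardcoded sleeps account for 6 to 9 of the 11 seconds, leaving roughly 2 to 5 seconds for platform cold starts across the three functions.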

You can reproduce the above experiment in your own environment using the tutorial here.

Check out min instances on Cloud Functions

We are super excited to ship min instances on Cloud Functions, which will allow you to run more latency-sensitive applications such as podcast transcription workflows in the serverless model. You can also learn more about Cloud Functions and Cloud Workflows in the following Quickstarts: Cloud Functions, Cloud Workflows.