How Deutsche Bank built a new retail data platform on Google Cloud

Vladimir Elvov

Lead Customer Engineer, Data & Analytics

Lars Fockele

Lead Data Engineer, Deutsche Bank AG

Getting insights into customers’ preferences and needs is crucial for any modern business, and that’s especially true for a retail bank. Insights from customer data help deliver improved customer experiences through tailored products, better services, and higher levels of automation. But to gain these insights, your input data needs to be in a common data platform. At the same time, data volumes keep growing and new real-time input sources keep cropping up, so whatever solution you pick has to be scalable.

With data on more than 20 million private, commercial and corporate partners and customers, Deutsche Bank decided to build such a data platform on Google Cloud, which it calls the Private Bank Data Platform (PBDP). It's a transformative addition to Deutsche Bank’s IT capabilities:

“In collaboration with Google we established one central and holistic data platform for Deutsche Bank with flexible data modeling options, an analytical workplace for exploration, prototyping and analysis and easy data ingestion into Google Cloud using real-time events and daily batch processes. Our new Data Platform serves as a cornerstone of our Bank’s strategic approach to consolidate data using a modern, cloud-based tech stack and is a fundamental enabler of multiple initiatives in the Bank. Having one place where you can find all your relevant data for your data consumer needs will enable multiple different future Data Driven operational and analytical use cases.” - Jan Struewing, Director, Domain Lead Private Bank Data Platform, Deutsche Bank

To inspire organizations that are also building a data platform on Google Cloud, this blog post takes a deeper look at Deutsche Bank’s PBDP: its requirements, its architecture, the services it runs, and Deutsche Bank’s approach to management and operations.

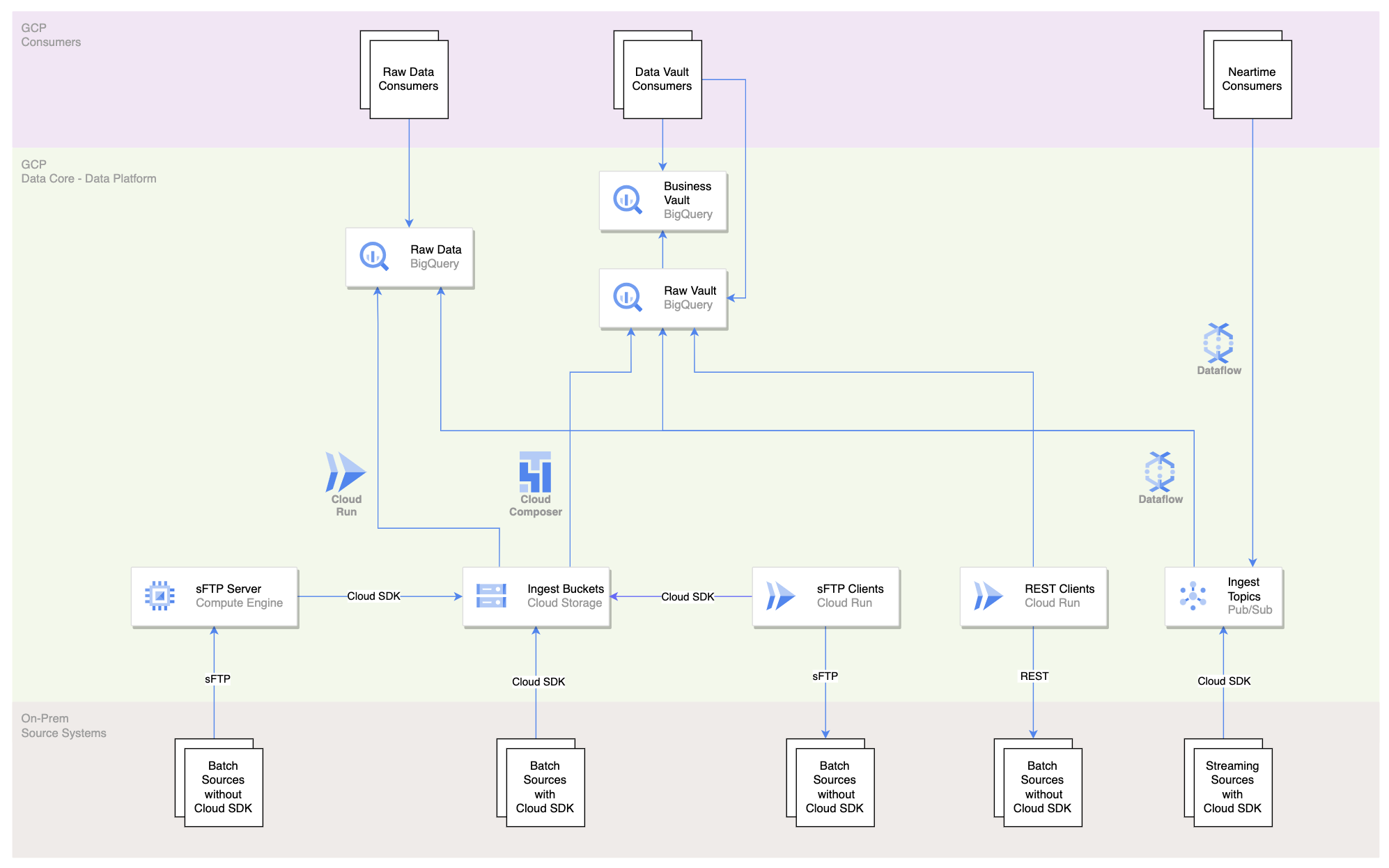

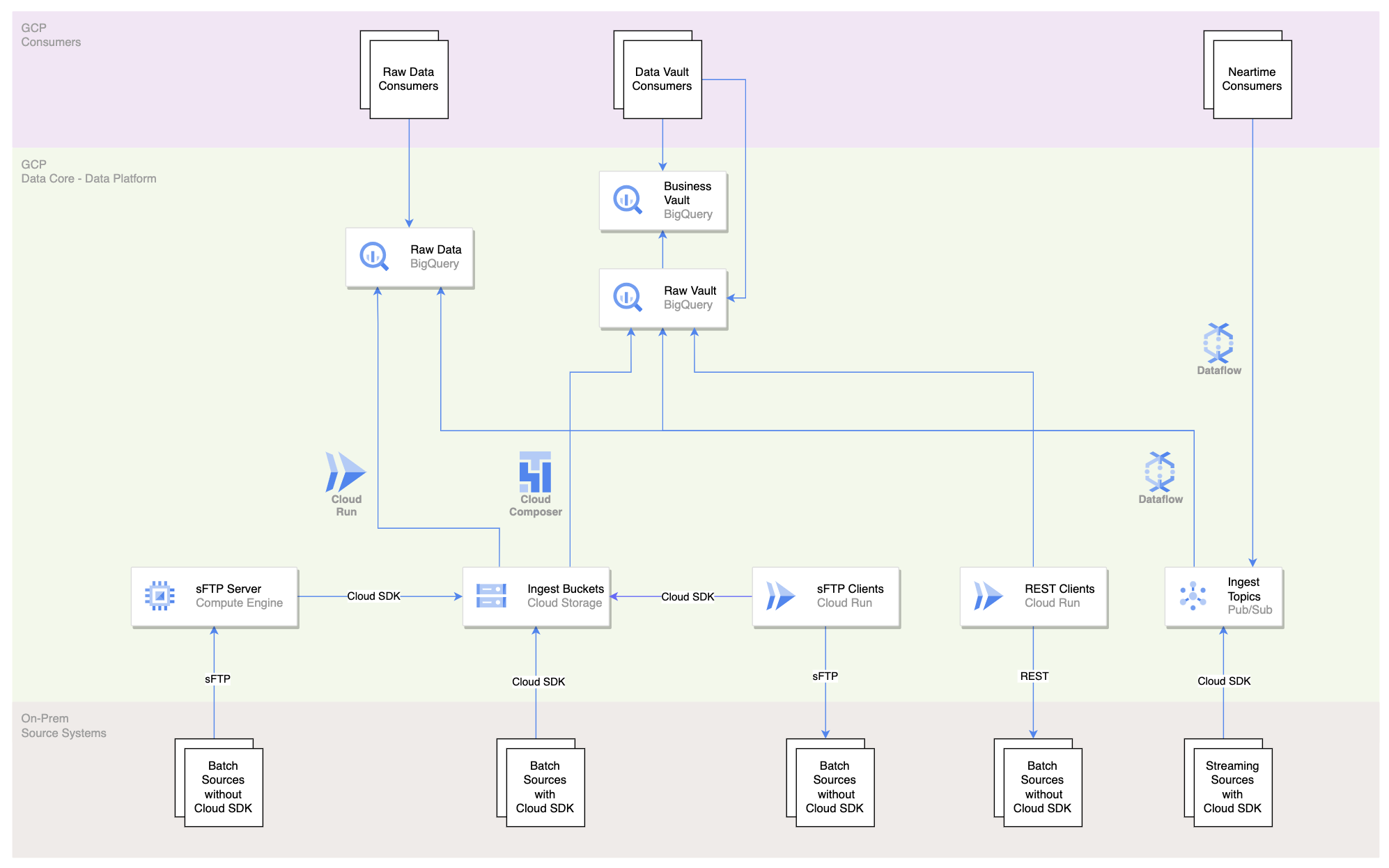

The capabilities of a modern data platform

The foundation of Deutsche Bank’s PBDP is a layer, referred to as the Data Core, that provisions data centrally and continuously in a well-governed, easy-to-consume way. Data arrives from various systems at different speeds, so the PBDP needs to pick up both files and events from data producers. It does this through batch and real-time ingestion mechanisms that eventually land the data in BigQuery or, in the case of event data, in Pub/Sub for real-time consumption. Data access is governed by consumers’ rights and permissions and is reviewed by the CDO Residency and Access team to ensure that it complies with the applicable regulations and policies. In addition, the PBDP provides metadata on top of the input data to make data discovery and access simple for consumers.
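To make the two ingestion paths more concrete, here is a minimal Python sketch that routes inbound data by arrival mode. It is purely illustrative: `Inbound`, `Sink`, `MemorySink` and `ingest` are hypothetical names, not PBDP components. In a real pipeline the batch sink would be something like a BigQuery load job and the stream sink a Pub/Sub publisher; in-memory stand-ins are used here so the sketch runs on its own.

```python
from dataclasses import dataclass, field
from typing import List, Literal, Protocol, Tuple


@dataclass(frozen=True)
class Inbound:
    """One unit of inbound data (hypothetical envelope, not a PBDP type)."""
    kind: Literal["file", "event"]  # daily batch file or real-time event
    dataset: str                    # hypothetical logical target, e.g. "current_accounts"
    payload: bytes


class Sink(Protocol):
    """Anything that can accept a payload for a logical data set."""
    def write(self, dataset: str, payload: bytes) -> None: ...


@dataclass
class MemorySink:
    """In-memory stand-in for a real target such as BigQuery or Pub/Sub."""
    writes: List[Tuple[str, bytes]] = field(default_factory=list)

    def write(self, dataset: str, payload: bytes) -> None:
        self.writes.append((dataset, payload))


def ingest(item: Inbound, batch_sink: Sink, stream_sink: Sink) -> str:
    """Send files down the batch path and events down the real-time path."""
    if item.kind == "file":
        batch_sink.write(item.dataset, item.payload)
        return "batch"
    if item.kind == "event":
        stream_sink.write(item.dataset, item.payload)
        return "stream"
    raise ValueError(f"unknown inbound kind: {item.kind!r}")


if __name__ == "__main__":
    batch, stream = MemorySink(), MemorySink()
    ingest(Inbound("file", "current_accounts", b"id,balance\n1,100\n"), batch, stream)
    ingest(Inbound("event", "card_transactions", b'{"id": 7}'), batch, stream)
    print(len(batch.writes), len(stream.writes))  # 1 1
```

Injecting the sinks keeps the routing decision separate from any particular client library, which also makes it easy to test.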

The Data Core is the cornerstone of the PBDP because it allows for simple, central data retrieval. Data consumers don’t have to source the same data multiple times through parallel pipelines; instead, they can use a central data store. This improves data quality and reliability, and makes data sharing much more cost-efficient than point-to-point connections between producers and consumers. Organizationally, it also speeds up access to data, because consumers are onboarded through centralized, standardized processes rather than through custom processes and point-to-point integrations.
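The cost argument can be made concrete with a simple count, under the simplifying assumption that every consumer needs data from every producer. With $P$ producers and $C$ consumers, point-to-point integration needs one pipeline per producer-consumer pair, whereas a central store needs one ingestion per producer plus one onboarding per consumer. The numbers in the example are illustrative, not PBDP figures.

```latex
N_{\text{p2p}} = P \cdot C, \qquad N_{\text{hub}} = P + C
% Illustrative example with P = 10 producers and C = 8 consumers:
N_{\text{p2p}} = 10 \cdot 8 = 80, \qquad N_{\text{hub}} = 10 + 8 = 18
```

The gap widens as the platform grows: each new consumer adds up to $P$ pipelines in the point-to-point model, but only one onboarding in the central model.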

PBDP data can be consumed from BigQuery in two ways: either in the “raw” format, as it comes directly from the source systems, or through a data model. This data model follows the Data Vault 2.0 paradigm: data in the business vault layer conforms to a single common model that is agnostic to the source systems’ data models.
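A common Data Vault 2.0 technique for making the model source-agnostic is to identify hub rows by a hash of the normalized business key, and to detect changes in satellites with a "hashdiff" over descriptive attributes. The Python sketch below assumes MD5 and a `||` delimiter, which are widespread DV2.0 conventions; the source does not say which hashing scheme the PBDP uses, and the function names are hypothetical.

```python
import hashlib
from typing import Mapping, Sequence

DELIMITER = "||"  # assumed separator for composite keys and attribute lists


def _normalize_key(value: object) -> str:
    """Business-key normalization: None -> "", trim whitespace, upper-case."""
    return "" if value is None else str(value).strip().upper()


def hub_hash_key(business_key: Sequence[object]) -> str:
    """Hub hash key: MD5 over the normalized, delimited business-key parts."""
    joined = DELIMITER.join(_normalize_key(part) for part in business_key)
    return hashlib.md5(joined.encode("utf-8")).hexdigest()


def hash_diff(attributes: Mapping[str, object]) -> str:
    """Satellite hashdiff: MD5 over descriptive attributes in sorted-name order.

    Values are trimmed but not upper-cased, so case-only changes still count
    as changes. Sorting by attribute name makes the hash independent of the
    column order delivered by the source system.
    """
    joined = DELIMITER.join(
        "" if attributes[name] is None else str(attributes[name]).strip()
        for name in sorted(attributes)
    )
    return hashlib.md5(joined.encode("utf-8")).hexdigest()
```

For example, `hub_hash_key([" cust-0042 "])` and `hub_hash_key(["CUST-0042"])` return the same key, so a customer number that arrives with different formatting from two source systems maps to the same hub row. A changed address, by contrast, yields a different `hash_diff`, signaling that a new satellite row is needed.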

There are two types of consumers on top of the PBDP: data-driven products and the analytical workplace.

- A data-driven product is an application containing business logic specific to the insights it offers its users, based on the data it processes. It is owned by a team in the bank and sources its input data from the PBDP Data Core, while handling its own product-specific data transformations. Input data can be retrieved either from BigQuery or directly from Pub/Sub for real-time data processing. To implement these transformations, data-driven products can use the PBDP’s out-of-the-box tooling and templates.

- The analytical workplace allows interactive exploration and analysis of the data in the PBDP Data Core so that new insights can be derived and new algorithms developed. It is commonly used by data scientists and analysts, who leverage standardized PBDP solutions such as Vertex AI notebooks. Artifacts produced in the analytical workplace can eventually be productionized as a new data-driven product.
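Because a data-driven product can read the same kind of data from BigQuery in batch or from Pub/Sub in real time, one way to keep its transformations independent of the arrival path is to map both into a common record type and run the same business logic on either. The Python sketch below assumes JSON event payloads and hypothetical field names (`account_id`, `amount`); it is not PBDP tooling or one of its templates, and the Pub/Sub subscription and BigQuery query code is deliberately left out.

```python
import json
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable, Mapping


@dataclass(frozen=True)
class Booking:
    """Hypothetical product-level view of one account booking."""
    account_id: str
    amount: Decimal


def from_event(payload: bytes) -> Booking:
    """Decode one real-time event body, assumed to be UTF-8 JSON (e.g. Pub/Sub message data)."""
    data = json.loads(payload.decode("utf-8"))
    return Booking(account_id=str(data["account_id"]), amount=Decimal(str(data["amount"])))


def from_row(row: Mapping[str, object]) -> Booking:
    """Map one batch row (a dict-like record, e.g. from a BigQuery query) to a Booking."""
    return Booking(account_id=str(row["account_id"]), amount=Decimal(str(row["amount"])))


def net_amount_per_account(bookings: Iterable[Booking]) -> Dict[str, Decimal]:
    """Product-specific business logic: sum booked amounts per account."""
    totals: Dict[str, Decimal] = {}
    for booking in bookings:
        totals[booking.account_id] = totals.get(booking.account_id, Decimal("0")) + booking.amount
    return totals
```

The same `net_amount_per_account` call works on `map(from_row, rows)` for a daily batch and on `map(from_event, payloads)` for a stream. `Decimal` is used instead of `float` so that monetary sums do not accumulate binary rounding errors.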

PBDP architectural overview

The following diagram shows the high-level architecture of Deutsche Bank’s PBDP. It focuses on the interfaces between the on-premises estate, located inside the Deutsche Bank network, and the PBDP running on Google Cloud. With this architecture, Deutsche Bank was able to perform an initial load of billions of records during the Postbank-Deutsche Bank migration and to process millions of records per day for its consumers.

A central data hub for a private bank

PBDP’s input data sources are broad and include the main information needed by data-driven products such as the new online banking platform, which was built with cloud-native services on Google Cloud. The data for the online banking platform covers core customer information, current accounts (a.k.a. checking accounts), credit cards, savings accounts, partners, loans, and securities/brokerage accounts. It is sourced from SAP, mainframes, relational databases and other systems of record.

In addition to serving as a central data platform for the private bank, PBDP functions as a key integration layer between the bank’s on-prem core banking systems and its new online banking platform. This matters because, when migrating from an on-prem estate to the cloud, not all applications can move at once. An integration layer, or middleware capability, between on-prem and cloud-based systems “de-risks” the migration by enabling a phased approach, and it integrates the two worlds in a scalable way.

Besides the online banking platform, PBDP consumers include accounts reporting, the client sales warehouse, financial reporting, and analytical tooling for commercial clients in Germany, among others, and new consumers are constantly being onboarded.

Key roadmap activities

The team has identified several areas of improvement for the PBDP. One is the introduction of a staging area, which will make it possible to add further housekeeping and data management tasks. Another is adding row-level security tags to data rows before the data is written to the final tables and made available to consumers. The team is also working on detailed implementation patterns linked to the Enterprise Architecture Data Principles and Data Standards, extending its use of Enterprise Data Capabilities, and aligning with Deutsche Bank’s group-wide data strategy. This includes further alignment on work packages such as:

- Use of a single group-wide data registry

- Aligned data standards for data interfaces (pathways), common data modeling techniques, and data harmonization

- Closer alignment with the concepts of Data Products and Data Mesh to further streamline data consumption
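The planned row-level security tags can be illustrated with a minimal Python sketch: each row receives a set of tags before it is written, and a consumer sees only rows whose tags are all covered by its entitlements. The tagging rule and tag format here are hypothetical. BigQuery also offers native row-level security through row access policies (`CREATE ROW ACCESS POLICY`); the source does not say which mechanism the PBDP will use.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, Iterable, Iterator, List, Set

Row = Dict[str, object]


@dataclass
class TaggedRow:
    """A data row plus the security tags a consumer must hold to see it."""
    data: Row
    tags: FrozenSet[str]


def tag_rows(rows: Iterable[Row], rule: Callable[[Row], Set[str]]) -> Iterator[TaggedRow]:
    """Attach tags to each row before it is written to a final table."""
    for row in rows:
        yield TaggedRow(data=row, tags=frozenset(rule(row)))


def visible_to(rows: Iterable[TaggedRow], entitlements: FrozenSet[str]) -> List[Row]:
    """Keep only rows whose every tag is covered by the consumer's entitlements."""
    return [r.data for r in rows if r.tags <= entitlements]


def by_booking_country(row: Row) -> Set[str]:
    """Hypothetical tagging rule: tag each row with its booking country."""
    return {f"country:{row['country']}"}
```

Note that a row with an empty tag set is visible to every consumer, because the empty set is a subset of any entitlement set; a production design might prefer to deny untagged rows by default.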

Embracing a DevOps way of working

A modern data platform requires a modern approach to development and operations. In addition to adopting DevOps principles for their data platform, the development team has also embraced automation to streamline infrastructure provisioning and CI/CD processes.

The team manages its Google Cloud infrastructure across all environments, from development through testing to production, with Terraform. Defining the infrastructure in code ensures consistency and reduces the risk of manual errors. This way, developers have automated the provisioning and management of the data platform’s infrastructure, including virtual machines, network configurations, storage resources, security configurations and much more.

The team stores its Terraform scripts in GitHub so they can version control their infrastructure and easily track changes. This enables them to roll back changes if necessary and maintain a consistent state across different environments. At the same time, a series of GitHub Actions workflows perform the typical CI/CD steps. Automating infrastructure provisioning and CI/CD processes brings numerous benefits to the team, including reduced manual effort, improved consistency, faster delivery and enhanced reliability.

Finally, Deutsche Bank monitors all important operations on the data platform with native Google Cloud monitoring services. Any system malfunction triggers alerts that are automatically sent to a support mailbox and a dedicated team chat. As a result, the developers can build, deploy and operate the complete PBDP as a single DevOps team.

These capabilities and architectural patterns allow Deutsche Bank’s PBDP to scale further and onboard more analytical use cases seamlessly, something many organizations could benefit from. If you want to learn more about how to get started, reach out to the Google Cloud team.