Dive deeper into Gemini with BigQuery and Vertex AI

Xi Cheng

Engineering Manager

Firat Tekiner

Product Management, Google

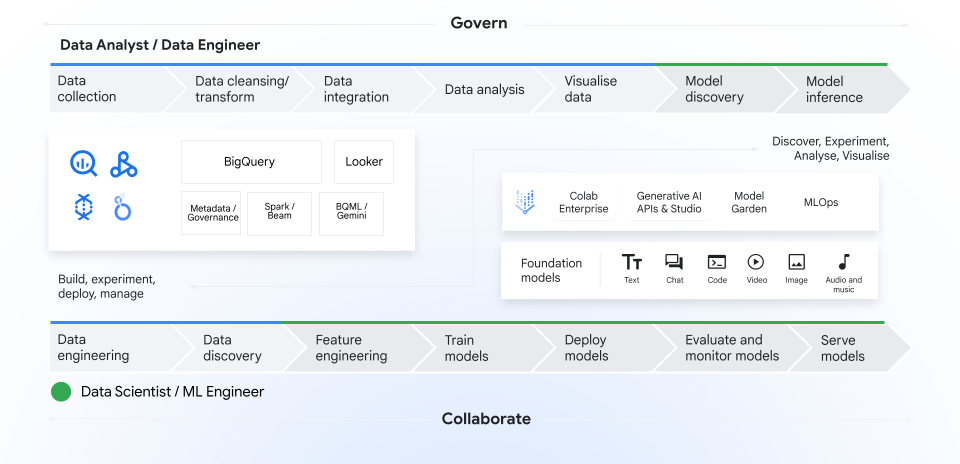

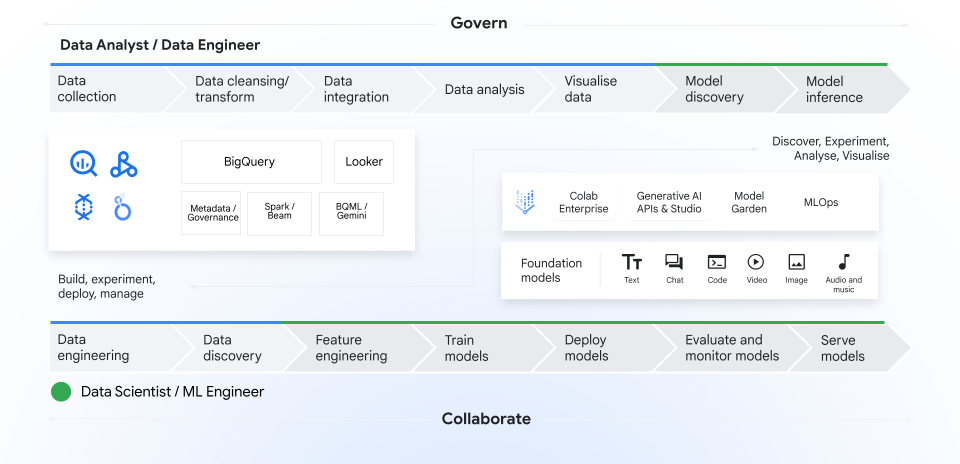

Traditional barriers between data and AI teams can hinder innovation. Often, these disciplines operate separately and use disparate tools, leading to data silos, redundant data copies, data governance overhead and cost challenges. From an AI implementation perspective, this increases security risks and leads to failed ML deployments and a lower rate of ML models reaching production.

To derive the maximum value from data and AI investments, especially around generative AI, it helps to have a single platform that breaks down these barriers and accelerates data-to-AI workflows, from data ingestion and preparation through analysis, exploration and visualization, all the way to ML training and inference.

To help you accomplish this, we recently announced innovations that further connect data and AI using BigQuery and Vertex AI. In this blog post, we dive deeper into some of these innovations and show you how to use Gemini 1.0 Pro in BigQuery.

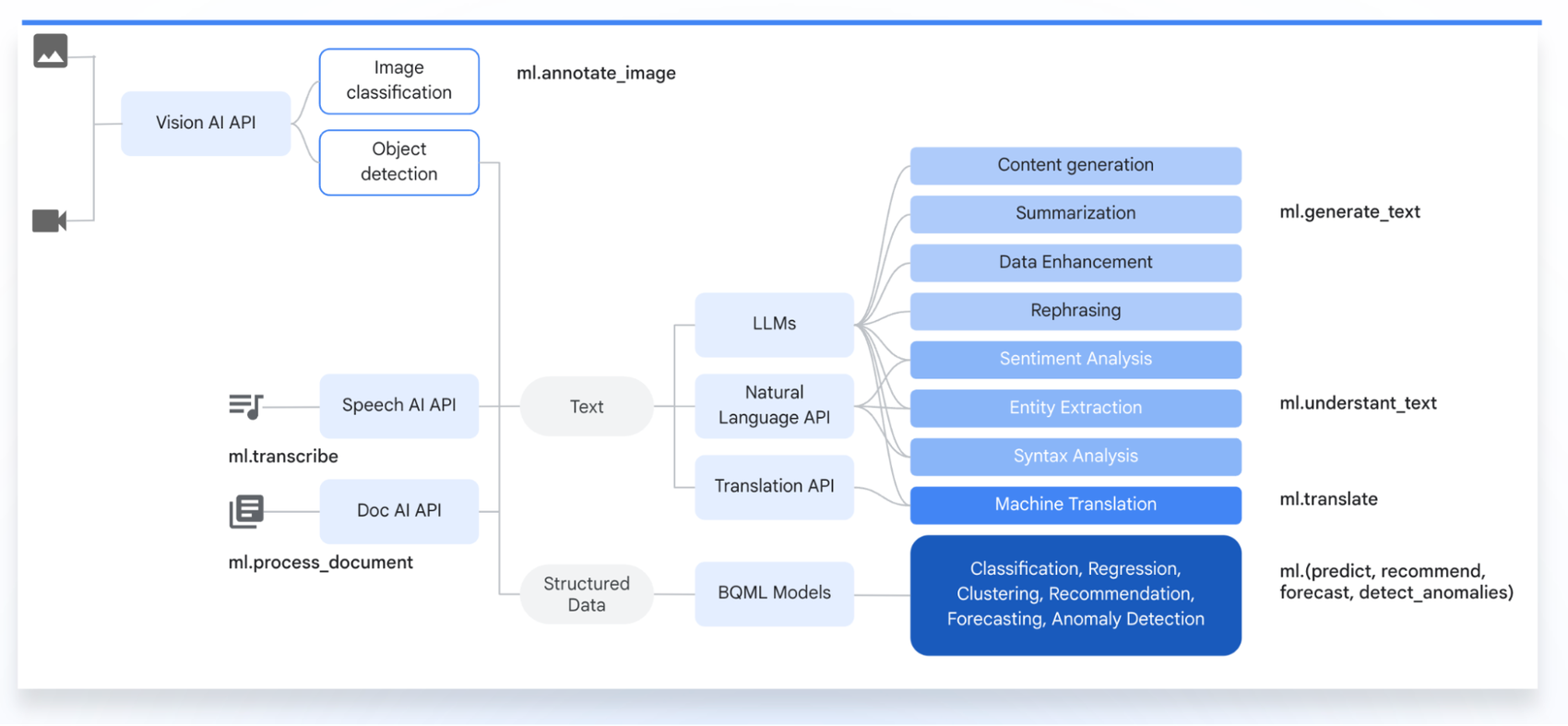

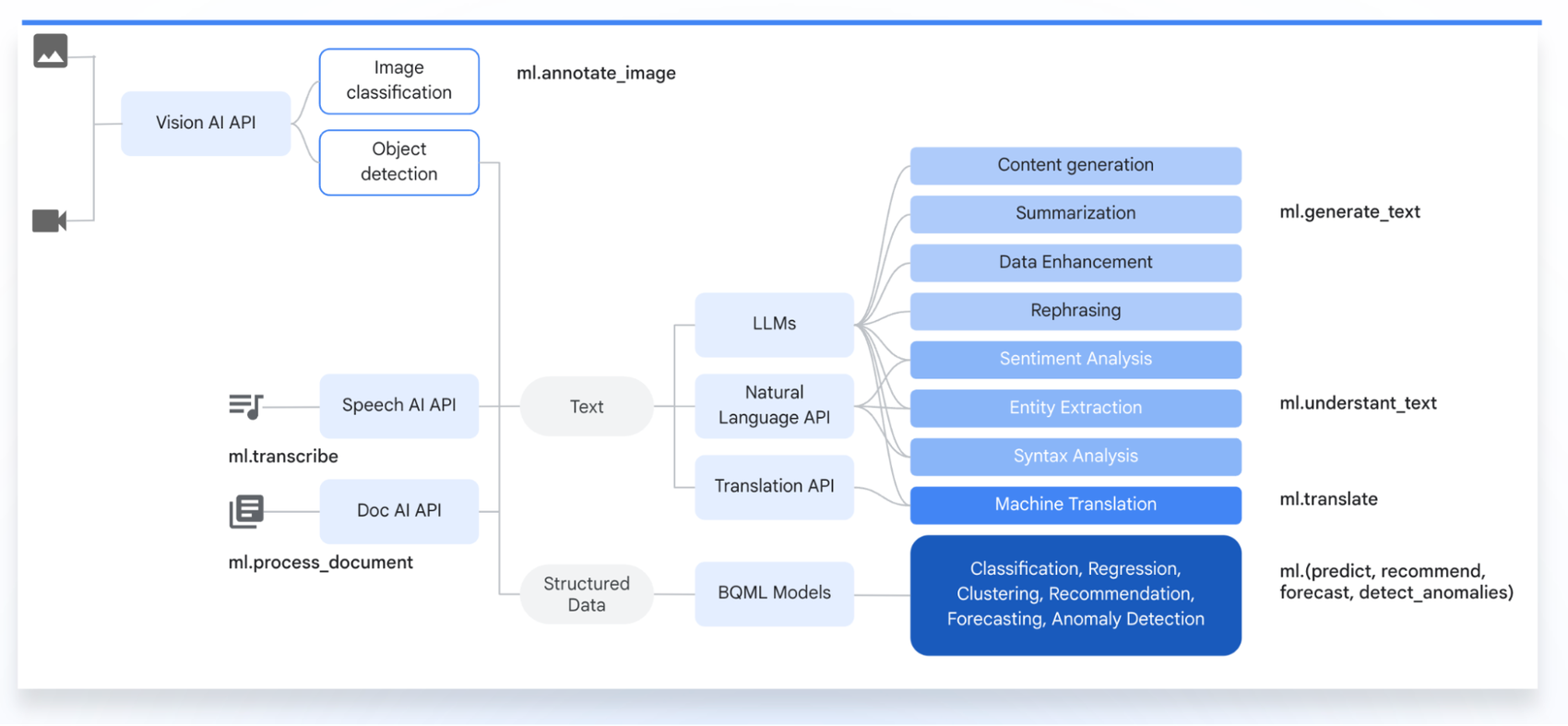

Bring AI to your data using BigQuery ML

BigQuery ML lets data analysts and engineers create, train and execute machine learning models directly in BigQuery using familiar SQL, helping them move beyond traditional roles. It offers built-in support for linear regression, logistic regression and deep neural networks, for models hosted on Vertex AI such as PaLM 2 or Gemini 1.0 Pro, and for imported custom models based on TensorFlow, TensorFlow Lite and XGBoost. Additionally, ML engineers and data scientists can share their trained models through BigQuery, ensuring that data is used in a governed manner and that datasets are easily discoverable.
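As a rough illustration of training a built-in model with SQL, the sketch below assembles a `CREATE MODEL` statement for logistic regression. The SQL is held in a Python string only to keep the example self-contained; the statement itself can be run directly in BigQuery. The dataset, table and column names (`mydataset.churn_model`, `mydataset.customers`, `churned`) are hypothetical placeholders.

```python
def build_create_model_sql(model: str, label: str, source_table: str) -> str:
    """Return a BigQuery ML statement that trains a logistic regression model.

    All identifiers passed in are illustrative; substitute your own
    dataset, table and label column.
    """
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"OPTIONS (model_type = 'LOGISTIC_REG', input_label_cols = ['{label}']) AS\n"
        f"SELECT * FROM `{source_table}`"
    )


# Hypothetical names, for illustration only.
create_model_sql = build_create_model_sql(
    "mydataset.churn_model", "churned", "mydataset.customers"
)
print(create_model_sql)
```

Every column returned by the `SELECT` other than the label is treated as a feature, so in practice the query would usually list the feature columns explicitly.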

Each component in the data pipeline might use different tools and technologies. This complexity slows down development and experimentation, and puts a greater burden on specialized teams. BigQuery ML lets users build and deploy machine learning models directly within BigQuery using familiar SQL syntax. To simplify generative AI even more, we went one step further and integrated Gemini 1.0 Pro into BigQuery via Vertex AI. The Gemini 1.0 Pro model is designed for higher input/output scale and better result quality across a wide range of tasks like text summarization and sentiment analysis.

BigQuery ML enables you to scale and streamline generative models by directly embedding them in your data workflow. This eliminates data movement bottlenecks, fostering seamless collaboration between teams while enhancing security and governance. You'll benefit from BigQuery's proven infrastructure for greater scale and efficiency.

Bringing generative AI directly to your data has numerous benefits:

- Helps eliminate the need to build and manage data pipelines between BigQuery and generative AI model APIs

- Streamlines governance and helps reduce the risk of data loss by avoiding data movement

- Helps reduce the need to write and manage custom Python code to call AI models

- Enables you to analyze data at petabyte-scale without compromising on performance

- Can lower your total cost of ownership with a simplified architecture

Faraday, a leading customer prediction platform, previously had to build data pipelines and join multiple datasets to perform sentiment analysis on their data. By bringing LLMs directly to their data, they simplified the process, joining the data with additional customer first-party data, and feeding it back into the model to generate hyper-personalized content — all within BigQuery. Watch this demo video to learn more.

BigQuery ML and Gemini 1.0 Pro

To use Gemini 1.0 Pro in BigQuery, first create a remote model that represents a hosted Vertex AI large language model. This step usually takes just a few seconds. Once the model is created, you can use it to generate text directly from the data in your BigQuery tables.
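A minimal sketch of the remote model step, assuming a BigQuery connection to Vertex AI already exists. The model name, the connection identifier (in `project.region.connection` form) and the `gemini-pro` endpoint value are assumptions for illustration and should be checked against the current documentation. The SQL is wrapped in Python only so the example is self-contained.

```python
def build_remote_model_sql(
    model: str, connection: str, endpoint: str = "gemini-pro"
) -> str:
    """Return a CREATE MODEL statement for a remote Vertex AI model.

    `connection` is a hypothetical BigQuery connection ID; `endpoint`
    names the hosted model and is an assumed value.
    """
    return (
        f"CREATE OR REPLACE MODEL `{model}`\n"
        f"REMOTE WITH CONNECTION `{connection}`\n"
        f"OPTIONS (ENDPOINT = '{endpoint}')"
    )


# Placeholder identifiers; replace with your own project resources.
remote_model_sql = build_remote_model_sql(
    "mydataset.gemini_model", "myproject.us.my_connection"
)
print(remote_model_sql)
```

The resulting statement stores no model weights in BigQuery; it registers a reference to the hosted model that later queries can call by name.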

Then, use the ML.GENERATE_TEXT function to access Gemini 1.0 Pro via Vertex AI and perform text-generation tasks. CONCAT joins your prompt text with the value from each table row. The temperature parameter controls the randomness of the response: lower values produce more deterministic, focused output. The flatten_json_output parameter is a boolean; when set to TRUE, the function returns the generated text as a plain, readable column extracted from the JSON response.
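The pieces described above can be sketched as one query. This is an illustrative example, not a definitive implementation: the model, table and column names are hypothetical, and the temperature of 0.2 is an arbitrary choice. With `flatten_json_output` set to TRUE, the generated text is expected in the `ml_generate_text_llm_result` column.

```python
def build_generate_text_sql(
    model: str,
    table: str,
    text_column: str,
    instruction: str,
    temperature: float = 0.2,
) -> str:
    """Return an ML.GENERATE_TEXT query that prompts the model once per row."""
    # Escape backslashes and single quotes so the instruction is a valid
    # SQL string literal.
    escaped = instruction.replace("\\", "\\\\").replace("'", "\\'")
    return f"""SELECT {text_column}, ml_generate_text_llm_result AS generated_text
FROM ML.GENERATE_TEXT(
  MODEL `{model}`,
  (
    SELECT CONCAT('{escaped}', {text_column}) AS prompt, {text_column}
    FROM `{table}`
  ),
  STRUCT({temperature} AS temperature, TRUE AS flatten_json_output)
)"""


# Hypothetical model, table and column names.
generate_text_sql = build_generate_text_sql(
    model="mydataset.gemini_model",
    table="mydataset.reviews",
    text_column="review_text",
    instruction="Summarize this customer review in one sentence: ",
)
print(generate_text_sql)
```

The inner `SELECT` must expose a column named `prompt`; any other columns it returns, such as `review_text` here, are passed through so each generated result can be matched to its source row.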

What generative AI can do for your data

We believe that the world is just beginning to understand what AI technology can do for your business data. With generative AI, the role of data analysts is expanding beyond merely collecting, processing, and performing analysis of large datasets to proactively driving data-driven business impact.

For example, data analysts can use generative models to summarize historical email marketing data (open rates, click-through rates, conversion rates, etc.) to understand which types of subject lines consistently lead to higher open rates, and whether personalized offers perform better than general promotions. Using these insights, analysts can prompt the model to create a list of compelling subject line options tailored to the identified preferences. They can further utilize the generative AI model to draft engaging email content, all within one platform.

Early users have expressed tremendous interest in solving various use cases across industries. For instance, using ML.GENERATE_TEXT can simplify advanced data processing tasks including:

- Content generation: Analyze customer feedback and generate personalized email content right inside BigQuery without the need for complex tools. Prompt example: “Create a customized marketing email based on customer sentiment stored in [table name]”

- Summarization: Summarize text stored in BigQuery columns such as online reviews or chat transcripts. Prompt example: “Summarize customer reviews in [table name]”

- Data enhancement: Enrich records with related attributes, such as a country name for a given city or a city name for a given zip code. Prompt example: “For every zip code in column X, give me city name in column Y”

- Rephrasing: Correct spelling and grammar in textual content such as voice-to-text transcriptions. Prompt example: “Rephrase column X and add results to column Y”

- Feature extraction: Extract key information or words from large bodies of text such as online reviews and call transcripts. Prompt example: “Extract city names from column X”

- Sentiment analysis: Understand human sentiment about specific subjects in a text. Prompt example: “Extract sentiment from column X and add results to column Y”

- Retrieval-augmented generation (RAG): Retrieve data relevant to a question or task using BigQuery vector search and provide it as context to a model. For example, use a support ticket to find 10 closely related previous cases, and pass them to a model as context to summarize and suggest a resolution.
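The RAG use case in the list above could look roughly like the following sketch, which combines `VECTOR_SEARCH` with `ML.GENERATE_TEXT`. It rests on several assumptions: a hypothetical `past_cases` table with `description` and precomputed `embedding` columns, a hypothetical single-row `new_ticket` table with an `embedding` column produced by the same embedding model, and a remote model that has already been created. The SQL is wrapped in Python only so the example is self-contained.

```python
def build_rag_sql(
    model: str, cases_table: str, ticket_table: str, top_k: int = 10
) -> str:
    """Return a query that retrieves similar cases and asks the model to
    summarize them and suggest a resolution.

    Assumes `ticket_table` holds exactly one row; with more rows, the
    neighbours of all tickets would be aggregated into a single prompt.
    """
    return f"""SELECT ml_generate_text_llm_result AS suggested_resolution
FROM ML.GENERATE_TEXT(
  MODEL `{model}`,
  (
    SELECT CONCAT(
      'Summarize these past support cases and suggest a resolution: ',
      STRING_AGG(base.description, ' | ')
    ) AS prompt
    FROM VECTOR_SEARCH(
      TABLE `{cases_table}`, 'embedding',
      (SELECT embedding FROM `{ticket_table}`),
      top_k => {top_k}
    )
  ),
  STRUCT(0.2 AS temperature, TRUE AS flatten_json_output)
)"""


# Hypothetical table and model names.
rag_sql = build_rag_sql(
    "mydataset.gemini_model", "mydataset.past_cases", "mydataset.new_ticket"
)
print(rag_sql)
```

`VECTOR_SEARCH` returns each match as a `base` struct alongside the query row and a distance, which is why the retrieved text is referenced as `base.description` before being concatenated into a single prompt.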

By expanding support for state-of-the-art foundation models such as Gemini 1.0 Pro in Vertex AI, BigQuery helps make it simple and cost-effective to integrate unstructured data within your Data Cloud.

Join us for the future of data and generative AI

To learn more about these new features, check out the documentation. Use this tutorial to apply Google's best-in-class AI models to your data, deploy models and operationalize ML workflows without moving data from BigQuery. You can also watch a demonstration of how to build an end-to-end data analytics and AI application directly from BigQuery with advanced models like Gemini, along with a behind-the-scenes look at how it was made. Watch our recent product innovation webcast to learn more about the latest innovations and how you can use BigQuery ML to create and use models using simple SQL.

Googlers Mike Henderson, Tianxiang Gao and Manoj Gunti contributed to this blog post. Many Googlers contributed to make these features a reality.