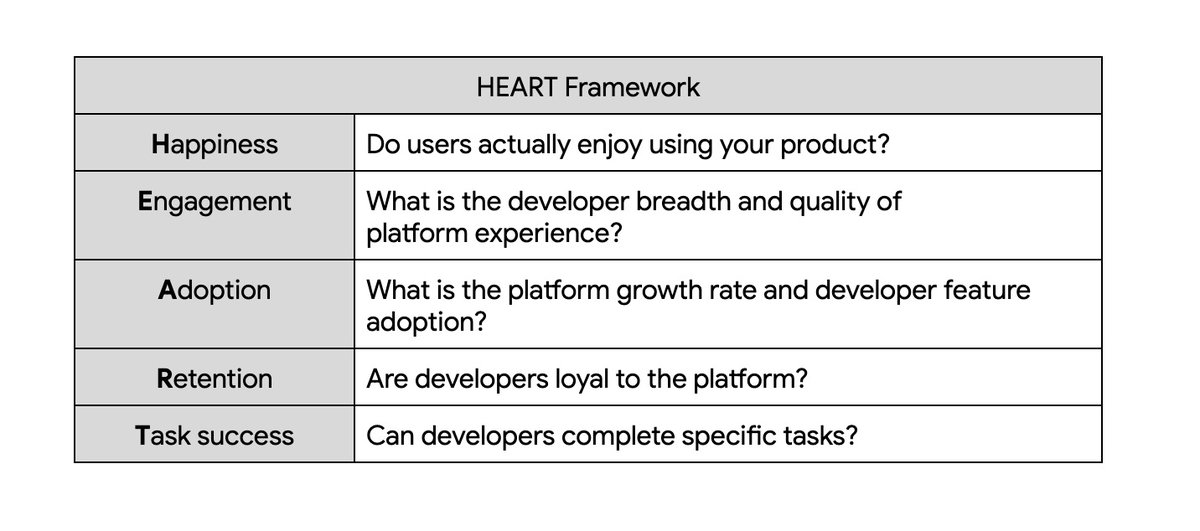

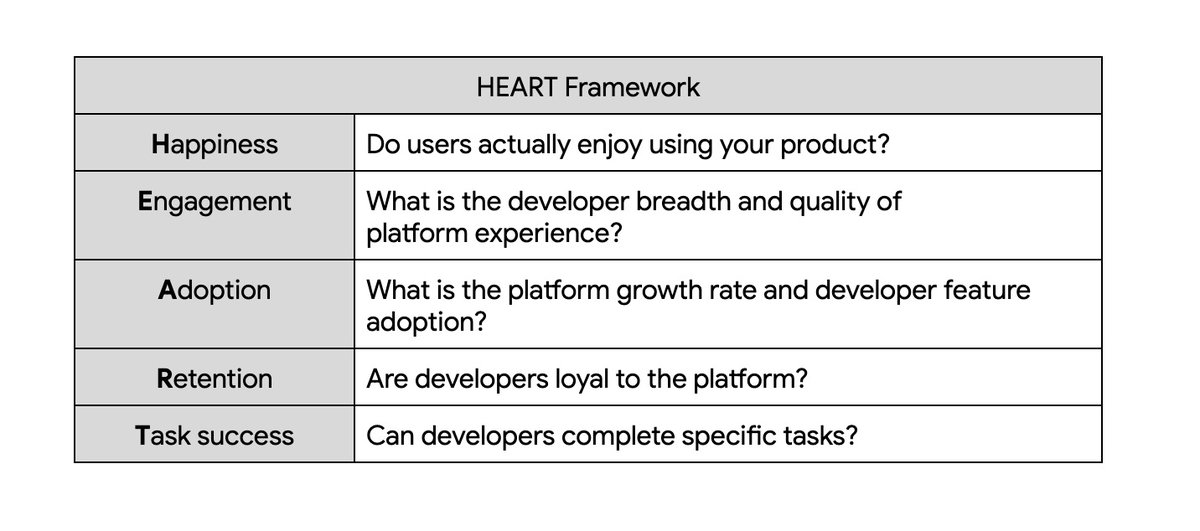

Measuring developer experience with the HEART Framework: A guide for platform engineers

Darren Evans

EMEA Practice Solutions Lead, Application Platform

At the end of the day, developers build, test, deploy and maintain software. But as with many things, it’s about the journey, not the destination.

Among platform engineers, we sometimes refer to that journey as the developer experience (DX), which encompasses how developers feel about and interact with the tools and services they use throughout the software build, test, deployment and maintenance process.

Prioritizing DX is essential: Frustrated developers lead to inefficiency and talent loss as well as to shadow IT. Conversely, a positive DX drives innovation, community, and productivity. And if you want to provide a positive DX, you need to start measuring how you’re doing.

At PlatformCon 2024, I gave a talk entitled "Improving your developers' platform experience by applying Google frameworks and methods," in which I spoke about Google’s HEART Framework, which provides a holistic view of your organization's developers’ experience through actionable data.

In this article, I will share ideas on how you can apply the HEART framework to your Platform Engineering practice, to gain a more comprehensive view of your organization’s developer experience. But before I do that, let me explain what the HEART Framework is.

The HEART Framework: an introduction

In a nutshell, HEART measures developers' behaviors and attitudes based on their experience of your platform. By defining specific metrics to track progress towards goals, it gives you insight into what’s going on behind the numbers. This is beneficial because continuous improvement through feedback is a vital part of a platform engineering journey, helping both platform and application product teams make decisions that are data-driven and user-centered.

However, HEART is not a data collection tool in and of itself; rather, it’s a user-sentiment framework for selecting the right metrics to focus on based on product or platform objectives. It balances quantitative or empirical data, e.g., number of active portal users, with qualitative or subjective insights such as "My users feel the portal navigation is confusing." In other words, consider HEART as a framework or methodology for assessing user experience, rather than a specific tool or assessment. It helps you decide what to measure, not how to measure it.

HEART stands for happiness, engagement, adoption, retention, and task success. Let’s take a look at each of these categories in more detail.

Happiness: Do users actually enjoy using your product?

Highlight: Gathering and analyzing developer feedback

Subjective metrics:

- Surveys: Conduct regular surveys to gather feedback about overall satisfaction, ease of use, and pain points. Toil negatively affects developer satisfaction and morale. Repetitive, manual work can lead to frustration, burnout, and decreased happiness with the platform.
- Feedback mechanisms: Establish easy ways for developers to provide direct feedback on specific features or areas of the platform, such as Net Promoter Score (NPS) or Customer Satisfaction (CSAT) surveys.
- Collect open-ended feedback from developers through interviews and user groups.
- Sentiment analysis: Analyze developer sentiment expressed in feedback channels, support tickets and online communities.

System metrics:

- Feature requests: Track the number and types of feature requests submitted by developers. This provides insights into their needs and desires and can help you prioritize improvements that will enhance happiness.

Watch out for: While platforms can boost developer productivity, they might not necessarily contribute to developer job satisfaction. This warrants further investigation, especially if your research suggests that your developers are unhappy.
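As a rough illustration of how the NPS and CSAT survey results mentioned above are commonly scored, here is a minimal Python sketch. It assumes the usual conventions (NPS on a 0-10 scale with promoters at 9-10 and detractors at 0-6; CSAT on a 1-5 scale with 4-5 counted as satisfied). The function names and sample ratings are hypothetical, not part of any specific survey tool.

```python
def net_promoter_score(ratings):
    """Return NPS (-100 to 100) from 0-10 'would you recommend' ratings.

    Assumes the common convention: 9-10 = promoter, 0-6 = detractor.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


def csat_percent(scores, satisfied_threshold=4):
    """Return the percentage of 1-5 responses at or above the threshold.

    The threshold of 4 is an assumption; adjust it to your survey design.
    """
    if not scores:
        raise ValueError("at least one score is required")
    satisfied = sum(1 for s in scores if s >= satisfied_threshold)
    return 100.0 * satisfied / len(scores)


# Hypothetical survey responses from platform users.
nps = net_promoter_score([10, 9, 8, 7, 6, 3, 10, 9])
csat = csat_percent([5, 4, 3, 2, 5])
print(f"NPS: {nps:.0f}, CSAT: {csat:.0f}%")
```

A single score says little on its own; tracking it across survey rounds, alongside open-ended feedback, is what makes it useful.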

Engagement: What is the breadth and quality of developers' platform experience?

Highlight: Frequency and quality of interaction between platform engineers and developers, including the intensity of interaction with the platform, participation in chat channels, training, dual ownership of golden paths, joint troubleshooting, engagement in architectural design discussions, and the breadth of interaction by everyone from new hires through to senior developers.

Subjective metrics:

- Survey for quality of interaction: Focus on the depth and type of interaction, whether through chat channels, training, dual ownership of golden paths, joint troubleshooting, or architectural design discussions.
- High toil can reduce developer engagement with the platform. When developers spend excessive amounts of time on tedious tasks, they are less likely to explore new features, experiment, and contribute to the platform's evolution.

System metrics:

- Active users: Track daily, weekly, and monthly active developers and how long they spend on tasks.
- Usage patterns: Analyze the most used platform features, tools, and portal resources.
- Frequency of interaction between platform engineers and developers.
- Breadth of user engagement: Track onboarding time for new hires to reach proficiency, and measure the percentage of senior developers actively contributing to golden paths or portal functionality.

Watch out for: Don’t confuse engagement with satisfaction. Developers may rate the platform highly in surveys, but usage data might reveal low frequency of interaction with core features or a limited subset of teams actively using the platform. Ask them "How has the platform changed your daily workflow?" rather than "Are you satisfied with the platform?"
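The daily, weekly, and monthly active developer counts listed above can be derived from a simple usage log. The sketch below assumes a hypothetical log of `(developer_id, day)` pairs, one per day on which a developer touched the platform; the event shape and the 1/7/30-day windows are illustrative assumptions rather than a prescribed format.

```python
from datetime import date, timedelta


def active_users(events, as_of, window_days):
    """Count distinct developers with at least one event in the window.

    The window covers `window_days` calendar days ending on `as_of`, inclusive.
    """
    start = as_of - timedelta(days=window_days - 1)
    return len({dev for dev, day in events if start <= day <= as_of})


# Hypothetical usage log: (developer_id, day of activity).
events = [
    ("ana", date(2024, 6, 30)),
    ("ben", date(2024, 6, 30)),
    ("ana", date(2024, 6, 25)),
    ("cy", date(2024, 6, 10)),
    ("dee", date(2024, 5, 1)),
]

as_of = date(2024, 6, 30)
dau = active_users(events, as_of, 1)
wau = active_users(events, as_of, 7)
mau = active_users(events, as_of, 30)
print(f"DAU={dau} WAU={wau} MAU={mau}")
```

Counting distinct developers, rather than raw events, keeps one very active team from masking low engagement elsewhere.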

Adoption: What is the platform growth rate and developer feature adoption?

Highlight: Overall acceptance and integration of the platform into the development workflow.

System metrics:

- New user registrations: Monitor the growth rate of new developers using the platform.
- Track the time between registration and first use of the platform, i.e., executing golden paths or using tooling and portal functionality.
- Number of active users per week / month / quarter / half-year / year who authenticate via the portal and/or use golden paths, tooling and portal functionality.
- Feature adoption: Track how quickly and widely new features or updates are used.
- Percentage of developers using CI/CD through the platform.
- Number of deployments per user or team, over a period of your choosing (day, week, or month).
- Training: Evaluate changes in adoption after delivering training.

Watch out for: Overlooking the "long tail" of adoption. A platform might see a burst of early adoption, but then plateau or even decline if it fails to continuously evolve and meet changing developer needs. Don't just measure initial adoption; monitor how usage evolves over weeks, months, and years.
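Two of the adoption metrics above, the time between registration and first use and the share of registered developers who have used the platform at all, could be computed along these lines. This is a sketch only: the two dictionaries stand in for whatever your portal or identity system actually records, and all names are hypothetical.

```python
from datetime import date
from statistics import median


def days_to_first_use(registered, first_used):
    """Map each developer to the days between registration and first use.

    Developers who registered but never used the platform are skipped.
    """
    return {
        dev: (first_used[dev] - reg_day).days
        for dev, reg_day in registered.items()
        if dev in first_used
    }


def adoption_rate(registered, first_used):
    """Percentage of registered developers who have used the platform."""
    if not registered:
        raise ValueError("no registered developers")
    adopters = set(registered) & set(first_used)
    return 100.0 * len(adopters) / len(registered)


# Hypothetical registration and first-use dates (e.g. first golden path run).
registered = {
    "ana": date(2024, 1, 1),
    "ben": date(2024, 1, 10),
    "cy": date(2024, 2, 1),
    "dee": date(2024, 2, 15),
}
first_used = {
    "ana": date(2024, 1, 3),
    "ben": date(2024, 1, 24),
    "cy": date(2024, 2, 5),
}

delays = days_to_first_use(registered, first_used)
print("median days to first use:", median(delays.values()))
print("adoption rate:", adoption_rate(registered, first_used))
```

The median is used here instead of the mean so that a few developers who waited months before their first run do not dominate the figure.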

Retention: Are developers loyal to the platform?

Highlight: Long-term engagement and reducing churn.

Subjective metrics:

- Use an exit survey if a user is dormant for 12 or more months.

System metrics:

- Churn rate: Track the percentage of developers who stop logging in to the portal and no longer use it.
- Dormant users: Identify developers who become inactive after 6 months and investigate why.
- Track services that are less frequently used.

Watch out for: Misinterpreting the reasons for churn. When developers stop using your platform (churn), it's crucial to understand why. Incorrectly identifying the cause can lead to wasted effort and missed opportunities for improvement. Consider factors outside the platform — churn could be caused by changes in project requirements, team structures or industry trends.
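To make the churn rate and dormant-user metrics above concrete, here is a minimal sketch. It assumes churn is measured as the share of developers active in one period who are absent in the next, and it treats roughly six months (180 days) without activity as dormant; both definitions, along with the names and sample data, are assumptions to adapt to your own platform.

```python
from datetime import date, timedelta


def churn_rate(active_previous, active_current):
    """Percentage of previously active developers who are no longer active."""
    if not active_previous:
        return 0.0
    churned = active_previous - active_current
    return 100.0 * len(churned) / len(active_previous)


def dormant_users(last_seen, as_of, inactive_days=180):
    """Return developers whose last activity is older than the cutoff."""
    cutoff = as_of - timedelta(days=inactive_days)
    return sorted(dev for dev, seen in last_seen.items() if seen < cutoff)


# Hypothetical sets of developers active in two consecutive quarters.
q1_active = {"ana", "ben", "cy", "dee"}
q2_active = {"ana", "cy", "eve"}
print("churn:", churn_rate(q1_active, q2_active))

# Hypothetical last-activity dates per developer.
last_seen = {
    "ana": date(2024, 6, 28),
    "ben": date(2023, 11, 1),
    "cy": date(2024, 1, 15),
}
print("dormant:", dormant_users(last_seen, as_of=date(2024, 6, 30)))
```

The list of dormant developers is a starting point for the follow-up conversations that reveal why they left, which the numbers alone cannot tell you.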

Task success: Can developers complete specific tasks?

Highlight: Efficiency and effectiveness of the platform in supporting specific developer activities.

Subjective metrics:

- Survey to assess the ongoing presence of toil and its negative effect on developer productivity, which hinders efficiency and increases task completion times.

System metrics:

- Completion rates: Measure the percentage of golden paths and tools successfully run on the platform without errors.
- Time to complete tasks using golden paths, portal, or tooling.
- Error rates: Track common errors and failures that developers encounter in golden paths, the portal, or tooling, using log files or monitoring dashboards.
- Mean Time to Resolution (MTTR): When errors do occur, how long does it take to resolve them? A lower MTTR indicates a more resilient platform and faster recovery from failures.
- Developer platform and portal uptime: Measure the percentage of time that the developer platform and portal are available and operational. Higher uptime ensures developers can consistently access the platform and complete their tasks.

Watch out for: Don't confuse task success with task completion. Simply measuring whether developers can complete tasks on the platform doesn't necessarily indicate true success. Developers might find workarounds or complete tasks inefficiently, even if they technically achieve the end goal. It may be worth manually observing developers in their natural environment to identify pain points and areas of friction in their workflows.

Also, be careful not to misalign task success with business goals. Task completion might overlook the broader impact on business objectives. A platform might enable developers to complete tasks efficiently, but if those tasks don't contribute to overall business goals, the platform's true value is questionable.
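The completion rate, MTTR, and uptime metrics above reduce to simple arithmetic once the raw data is available. The following sketch assumes a list of pass/fail golden path runs, a list of `(opened, resolved)` incident timestamps, and a total downtime figure for a reporting period; these inputs and function names are hypothetical stand-ins for your own logs and monitoring data.

```python
from datetime import datetime, timedelta


def completion_rate(run_results):
    """Percentage of runs that finished without errors (True = success)."""
    if not run_results:
        raise ValueError("no runs recorded")
    return 100.0 * sum(run_results) / len(run_results)


def mean_time_to_resolution(incidents):
    """Average of (resolved - opened) across incidents, as a timedelta."""
    if not incidents:
        raise ValueError("no incidents recorded")
    total = sum((resolved - opened for opened, resolved in incidents), timedelta())
    return total / len(incidents)


def uptime_percent(period, downtime):
    """Percentage of the reporting period during which the platform was up."""
    return 100.0 * (1 - downtime / period)


# Hypothetical golden path runs and incident records.
runs = [True, True, False, True]
incidents = [
    (datetime(2024, 6, 1, 10, 0), datetime(2024, 6, 1, 10, 30)),
    (datetime(2024, 6, 8, 12, 0), datetime(2024, 6, 8, 13, 30)),
]

print("completion rate:", completion_rate(runs))
print("MTTR:", mean_time_to_resolution(incidents))
print("uptime:", round(uptime_percent(timedelta(days=30), timedelta(hours=3)), 2))
```

As the caveat above notes, a high completion rate does not rule out workarounds or slow paths, so these figures are best read next to time-to-complete data and direct observation.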

Applying the HEART framework to platform engineering

It’s not necessary to use all of the categories each time. The number of categories to consider really depends on the specific goals and context of the assessment; you can include everything or trim it down to better match your objective. Here are some examples:

- Improving onboarding for new developers: Focus on adoption, task success and happiness.
- Launching a new feature: Concentrate on adoption and happiness.
- Increasing platform usage: Track engagement, retention and task success.

Keep in mind that relying on just one category will likely provide an incomplete picture.
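If you keep your assessment plan in code or configuration, the goal-to-category examples above could be captured in a small lookup. This is only one possible representation: the goal keys and the `categories_for` helper are hypothetical, and the check for at least two categories simply encodes the caution about relying on a single one.

```python
from enum import Enum


class Heart(Enum):
    HAPPINESS = "happiness"
    ENGAGEMENT = "engagement"
    ADOPTION = "adoption"
    RETENTION = "retention"
    TASK_SUCCESS = "task success"


# Goal names are illustrative; the category sets follow the examples above.
GOAL_CATEGORIES = {
    "improve_onboarding": {Heart.ADOPTION, Heart.TASK_SUCCESS, Heart.HAPPINESS},
    "launch_feature": {Heart.ADOPTION, Heart.HAPPINESS},
    "increase_usage": {Heart.ENGAGEMENT, Heart.RETENTION, Heart.TASK_SUCCESS},
}


def categories_for(goal):
    """Return the HEART categories to assess for a goal, sorted by name."""
    categories = GOAL_CATEGORIES[goal]
    if len(categories) < 2:
        # A single category is likely to give an incomplete picture.
        raise ValueError(f"goal {goal!r} should cover more than one category")
    return sorted(c.value for c in categories)


print(categories_for("launch_feature"))
```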

When should you use the framework?

In a perfect world, you would use the HEART framework to establish a baseline assessment a few months after launching your platform, which will provide you with valuable insight into early developer experience. As your platform evolves, this initial data becomes a benchmark for measuring progress and identifying trends. Early measurement allows you to proactively address UX issues, guide design decisions with data, and iterate quickly for optimal functionality and developer satisfaction. If you're starting with an MVP, conduct the baseline assessment once the core functionality is in place and you have a small group of early users to provide feedback.

After 12 or more months of usage, you can also add metrics to reflect a newer or more mature platform. This can help you gather deeper insights into your developers’ experience by understanding how they are using the platform, measuring the impact of changes you’ve made to the platform, and identifying areas for improvement so you can prioritize future development efforts. If you've added new golden paths, tooling, or enhanced functionality, then you'll need to track metrics that measure their success and impact on developer behavior.

The frequency with which you assess HEART metrics depends on several factors, including:

- The maturity of your platform: Newer platforms benefit from more frequent reviews (e.g., monthly or quarterly) to track progress and address early issues. As the platform matures, you can reduce the frequency of your HEART assessments (e.g., bi-annually or annually).
- The rate of change: To ensure updates and changes have a positive impact, apply the HEART framework more frequently when your platform is undergoing a period of rapid evolution such as major platform updates, new portal features or new golden paths, or some change in user behavior. This allows you to closely monitor the effects of each change on key metrics.
- The size and complexity of your platform: Larger and more complex platforms may require more frequent assessments to capture nuances and potential issues.
- Your team's capacity: Running HEART assessments requires time and resources. Consider your team's bandwidth and adjust the frequency accordingly.

Schedule periodic deep dives (e.g. quarterly or bi-annually) using the HEART framework to gain a more in-depth understanding of your platform's performance and identify areas for improvement.

Taking more steps towards platform engineering

In this blog post, we’ve shown how the HEART framework can be applied to platform engineering to measure and improve the developer experience. We’ve explored the five key aspects of the framework (happiness, engagement, adoption, retention, and task success) and provided specific metrics for each and guidance on when to apply them.

By applying these insights, platform engineering teams can create a more positive and productive environment for their developers, leading to greater success in their software development efforts.

To learn more about platform engineering, check out some of our other articles: "5 myths about platform engineering: what it is and what it isn’t," "Another five myths about platform engineering," and "Laying the foundation for a career in platform engineering."

And finally, check out the DORA Report 2024, which now has a section on Platform Engineering.