USAA and Google Cloud: modernizing insurance operations with machine learning

Raiyaan Serang

Product Manager, Vertex AI

Mike Bernico

AI Engineer

Delivering an automated solution capable of going from images of vehicle damage to actual car part repair/replace predictions has been an elusive dream for many auto insurance carriers. Such a solution could not only streamline the repair process, but also increase auditability of repairs and improve overall cost efficiency. In keeping with its reputation for being an innovative leader in the insurance industry, USAA decided it was time for things to change and teamed up with Google to make the dream of touchless claims a reality.

Google and USAA previously worked together on an effort to identify damaged regions/parts of a vehicle from a picture. USAA’s vision went far beyond simple identification, however. USAA realized that by combining its data and superb customer service expertise with Google’s AI technology and industry expertise, it could create a service that maps a photo of a damaged vehicle to a list of parts and, in turn, identifies whether each part should be repaired or replaced. If a repair was needed, the service could also predict how long it would take, taking into account local labor rates.

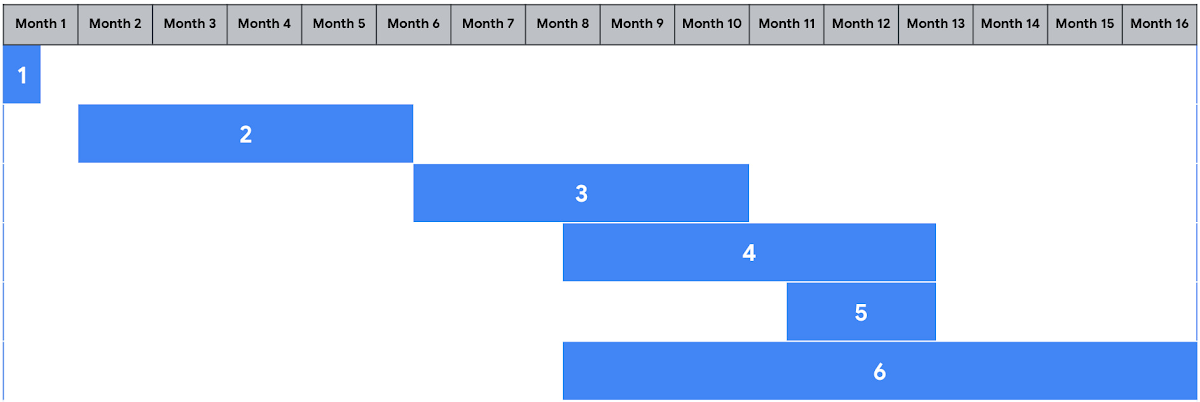

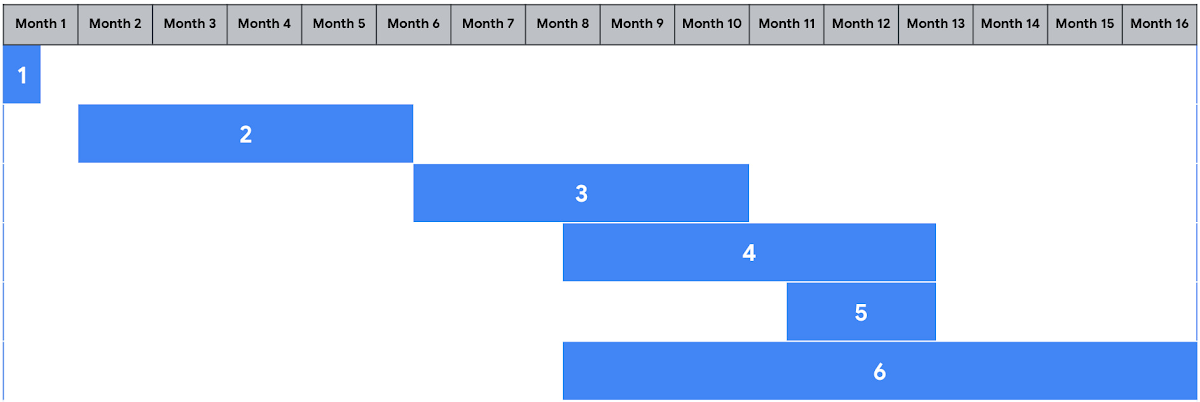

This provided an opportunity to streamline operations for USAA and improve the claims processing workflow, ultimately leading to a smoother customer and appraiser experience. Through our 16-month collaboration, we achieved a peak machine learning (ML) performance improvement of 28% compared to baseline models, created a modern ML operations infrastructure offering real-time and batch prediction capabilities, developed a complete retraining pipeline, and set USAA up for continued success.

To understand how this all came together, our delivery team will explore the approach, architecture design, and underlying AI methodologies that helped USAA once again demonstrate why they’re an industry leader.

Leading with AI Industry Expertise

Recognizing that a key piece of USAA’s vision was a customer-centric solution aligned with USAA’s service-first values, Google Cloud’s AI Industry Solutions Services team broke the problem down into several, often parallel, workstreams focused on both developing the solution and enabling its adoption:

1. Discover and Assess Available Data

2. Explore Different Modeling Approaches and Engineered Features

3. Create Model Serving Infrastructure

4. Implement a Sophisticated Model Retraining Pipeline

5. Support USAA’s Model Validation Process

6. Provide Engagement Oversight & Ongoing Advisory

“The partnership between Google and USAA has allowed us to learn through technology avenues to better support our members and continue to push forward the best experience possible,” said Jennifer Nance, lead experience owner at USAA.

For this work to be successful, it was critical to bring together a team of experts who could not only build a custom AI solution for USAA, but could also understand the business process and what would be required to pass regulatory scrutiny.

Building the Model

USAA and Google had previously developed a service, provided as a REST endpoint hosted on Google Cloud, that takes a photo of a vehicle and returns details about its damaged parts. This system needed to be extended to also provide repair/replace decisions and repair labor hour estimates.

Identifying Relevant Data

While the output of the computer vision service was very important, it wasn’t sufficient to make repair/replace decisions and labor hours estimates. Additional structured data about the vehicle and insurance claim, such as the vehicle model, year, primary point of impact, zip code, and more could be useful signals for prediction. As an example, some makes and models of vehicles contain parts that are not easy to acquire, making “repair” a better choice than “replace.” USAA and Google leveraged industry knowledge and familiarity with the problem to explore available data to arrive at a starting point for model development.

Cleaning and Preparing the Data

Once additional datasets were identified, both the structured and unstructured data had to be prepared for machine learning.

The unstructured data included millions of images, each of which needed to be scored by the existing USAA/Google computer vision API. Results of that process could then be stored in Google Cloud BigQuery to be used as features in the repair/replace and labor hours models. Of course, sending millions of images through a model scoring API is no small feat. We used Google Cloud Dataflow, a serverless Apache Beam runner, to process these images. Dataflow allowed us to score many images in parallel, while respecting the quota of the vision API.
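The post does not show how the Dataflow job stayed within the vision API quota. One common approach is a client-side token bucket that each worker consults before every request. The sketch below is a plain-Python illustration under that assumption; `score_fn` stands in for the real vision API call and is hypothetical, and the clock and sleep functions are injectable so the limiter can be tested without waiting.

```python
import time
from typing import Callable, Iterable, List


class TokenBucket:
    """Client-side rate limiter: at most `rate` calls per second on average,
    with bursts of up to `capacity` calls."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.sleep = sleep
        self.last = clock()

    def acquire(self) -> None:
        while True:
            now = self.clock()
            # Refill tokens for the time elapsed since the last check.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
            self.last = now
            if self.tokens >= 1:
                self.tokens -= 1
                return
            # Sleep just long enough for one token to accumulate.
            self.sleep((1 - self.tokens) / self.rate)


def score_images(image_uris: Iterable[str], score_fn: Callable[[str], dict],
                 limiter: TokenBucket) -> List[dict]:
    """Score each image (hypothetical `score_fn`), blocking when the quota is used up."""
    results = []
    for uri in image_uris:
        limiter.acquire()
        results.append({"image_uri": uri, **score_fn(uri)})
    return results
```

In an Apache Beam pipeline, a limiter like this could be created once per worker in a `DoFn`'s `setup` method. Because every worker holds its own bucket, the per-worker `rate` would need to be roughly the API quota divided by the maximum number of workers; that sizing rule is an assumption, not something the post describes.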

The structured data consisted of information about the collision reported by the customer (e.g., point of impact, drivability of the vehicle) as well as information about the vehicle itself (e.g., make, model, year, options). This data was all readily available in USAA’s BigQuery-based data lake. The team cleaned, regularized, and transformed this data into a single common table for model training.
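Conceptually, the single training table is a join of claim records, vehicle records, and vision API outputs at the granularity of one row per damaged part. In practice this would be a BigQuery query; the plain-Python sketch below only illustrates the shape of the join. All field names (`claim_id`, `vin`, `damage_score`, and so on) are hypothetical.

```python
from typing import Dict, List


def build_training_table(claims: List[dict], vehicles: Dict[str, dict],
                         vision_parts: List[dict]) -> List[dict]:
    """Flatten claim, vehicle, and vision-API outputs into one row per damaged part."""
    claims_by_id = {c["claim_id"]: c for c in claims}
    rows = []
    for part in vision_parts:
        claim = claims_by_id.get(part["claim_id"])
        if claim is None:
            continue  # drop vision results that have no matching claim record
        vehicle = vehicles.get(claim["vin"], {})
        rows.append({
            "claim_id": claim["claim_id"],
            "part": part["part"],
            "damage_score": part["damage_score"],
            "point_of_impact": claim["point_of_impact"],
            "drivable": claim["drivable"],
            "make": vehicle.get("make"),
            "model": vehicle.get("model"),
            "year": vehicle.get("year"),
        })
    return rows
```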

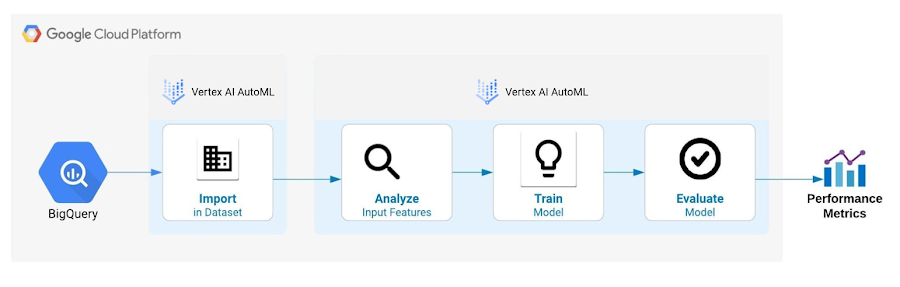

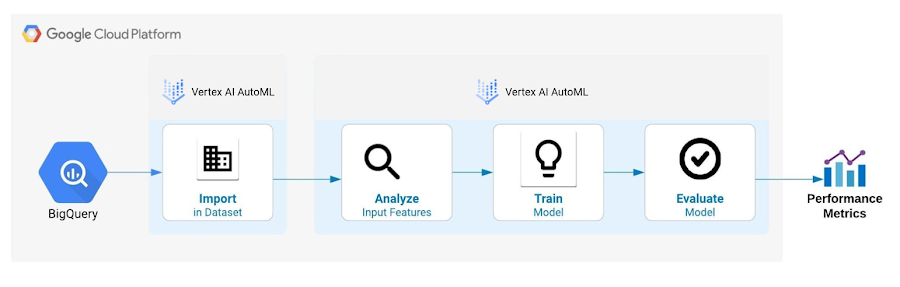

“Having a single table as the input for AutoML on Vertex AI is useful for multiple reasons: 1) you can reference the input data in one place instead of searching multiple databases for all the different data sources; 2) it becomes easy to compare metrics and distributions of the data when it comes time to retrain; and 3) it’s an easy snapshot of the data for regulatory purposes,” noted USAA Data Science Leader Lydia Chen.

Creating a Baseline

Once the relevant data sources were identified, cleaned, and assembled, initial models for both the repair/replace decision and the labor hours estimate were created with AutoML for structured data on Vertex AI to establish a baseline. Area under the receiver operating characteristic curve (AUC ROC) was selected as the metric for the repair/replace decision model since we cared equally about both classes, while root-mean-square error (RMSE) was selected for the repair labor hours estimation model since it is a well-understood number, expressed in the same units as the prediction (hours). More detail on evaluation metrics for AutoML models is available in the Vertex AI documentation.
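For readers less familiar with the two metrics, the sketch below computes them from scratch. AUC ROC is computed as the probability that a randomly chosen positive example is scored above a randomly chosen negative one (ties count as half), which is why it does not depend on any single decision threshold. Encoding “Replace” as the positive class (1) is an assumption for illustration; AutoML on Vertex AI reports both metrics itself.

```python
import math
from typing import Sequence


def auc_roc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUC as P(score of random positive > score of random negative), ties = 0.5.
    O(P * N) pairwise version, fine for illustration."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error, in the same units as the target (labor hours)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and the same length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))
```

For example, `auc_roc([1, 0, 1, 0], [0.9, 0.1, 0.4, 0.6])` is 0.75 because three of the four positive/negative pairs are ranked correctly.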

These models required a few iterations to identify the correct way to connect both the image data and the structured data into a single system. Ultimately, these steps proved that the solution to this problem was viable and could be improved with iteration.

Engineering Features

Feature engineering is always an iterative process where the existing data is transformed to create new model inputs through various techniques aimed at extracting as much predictive power as possible from the source dataset. We started with the existing data provided, created multiple hypotheses based on current model performance, built new features which supported those hypotheses, and tested to see if these new features improved the models.

This iteration required many models to be trained and for each model to produce global feature importances. As you can imagine, this process can take a significant amount of time, especially without the right set of tools. To rapidly and efficiently create models that could screen new features and meet USAA’s deadlines, we continued using AutoML for structured data on Vertex AI, which allowed us to quickly prototype new feature sets without modifying any code. This fast and easy iteration was key to our success.

Domain expertise was another important part of the feature engineering process. Google Cloud’s AI Industry Solutions Services team brought in multiple engineers and consultants with deep insurance expertise and machine learning experience. Combined with USAA’s firsthand knowledge, several ‘golden’ features were created. As a simple example, when asking a model if a part should be repaired or replaced, the model was also given information about other parts that were damaged on the same car in the same collision.
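The “other damaged parts” feature can be derived from the per-part table with a group-by on the claim. The sketch below assumes one row per (claim, part) with hypothetical `claim_id` and `part` fields and attaches both the list of co-damaged parts and its length. How the list would be encoded for a tabular model (for example, one indicator column per part) is an assumption left open here.

```python
from collections import defaultdict
from typing import List


def add_co_damage_features(rows: List[dict]) -> List[dict]:
    """For each (claim, part) row, attach the other parts damaged in the same claim."""
    parts_by_claim = defaultdict(set)
    for row in rows:
        parts_by_claim[row["claim_id"]].add(row["part"])
    enriched = []
    for row in rows:
        others = sorted(parts_by_claim[row["claim_id"]] - {row["part"]})
        enriched.append({**row,
                         "other_damaged_parts": others,
                         "num_other_damaged_parts": len(others)})
    return enriched
```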

Ultimately, the feature engineering process proved to be the best source for model performance improvement.

Creating Optimized Models

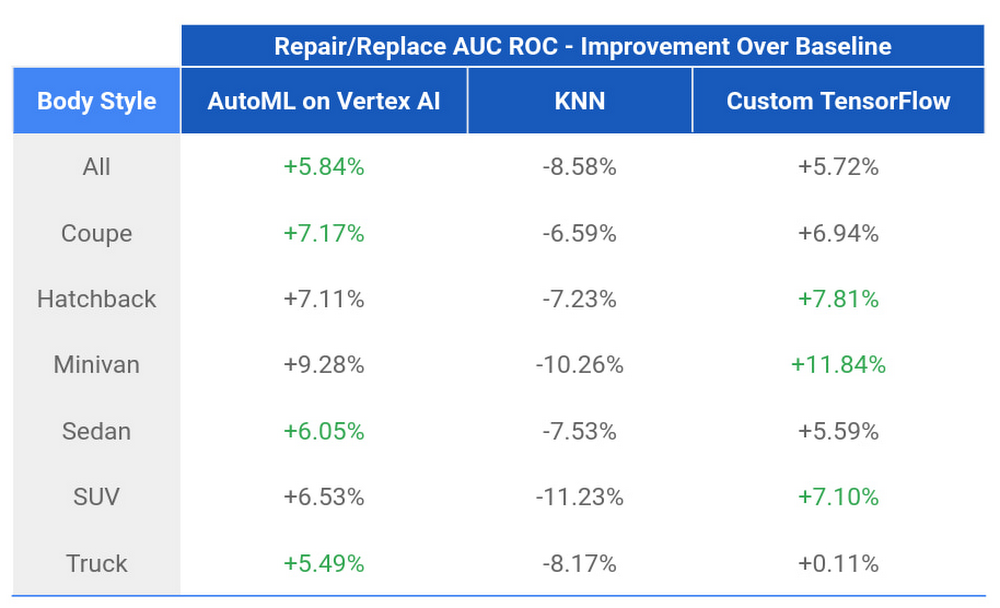

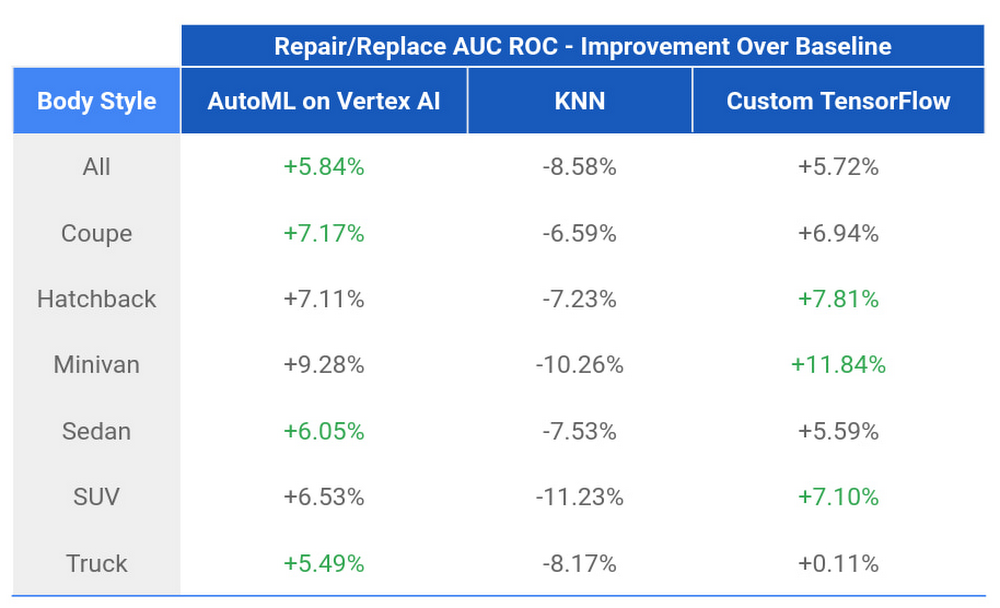

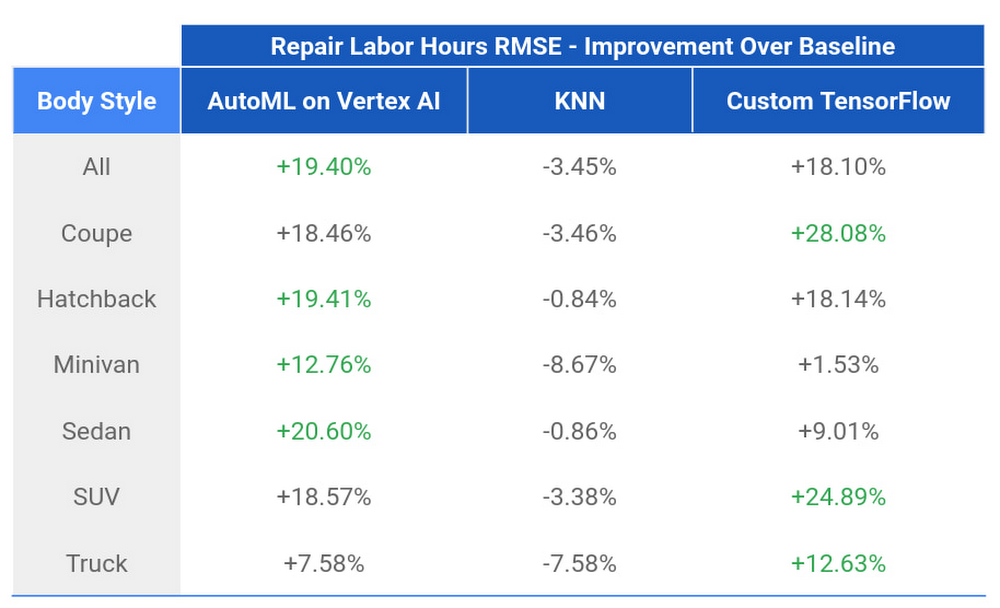

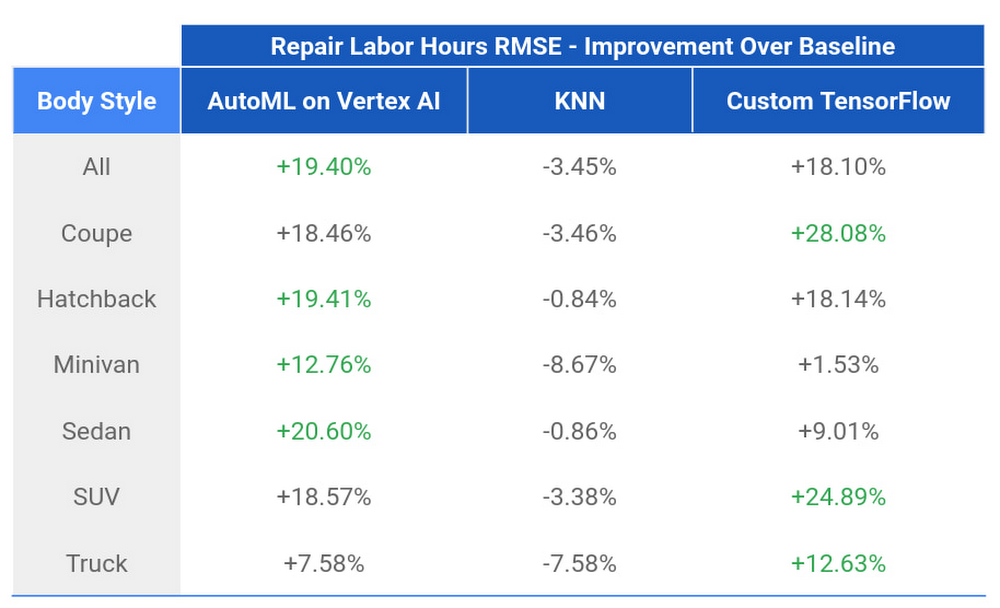

We experimented with three separate modeling approaches during this collaboration, with a focus on identifying the best models to productionize in later workstreams. These approaches included an AutoML model for structured data on Vertex AI, a K-Nearest Neighbors (K-NN) model, and a TensorFlow model created with TensorFlow Extended (TFX) for rapid experimentation. These models needed to cover six body styles (coupe, hatchback, minivan, sedan, SUV, and truck). Our selection criteria included model performance, improvement potential, maintenance, latency, and ease of productionization.

Since AutoML for structured data on Vertex AI uses automated architecture and parameter search to discover and train an optimal model with little to no code, and provides one-click deployment as a Vertex AI Prediction endpoint, productionization and maintenance of the model would be fairly straightforward. On average, AutoML models provided a 6.78% performance improvement over baseline models for the Repair/Replace decision and a 16.7% performance improvement over baseline models for Repair Labor Hours estimation.
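The post does not define exactly how “performance improvement over baseline” is computed. One common convention, shown below as an assumption, is the relative change in the chosen metric, with the sign flipped for metrics where lower is better (such as RMSE) so that a positive number always means “better.”

```python
def relative_improvement(baseline: float, candidate: float, higher_is_better: bool) -> float:
    """Percent improvement of `candidate` over `baseline`; positive means better."""
    if baseline == 0:
        raise ValueError("baseline metric must be non-zero")
    delta = candidate - baseline if higher_is_better else baseline - candidate
    return 100.0 * delta / abs(baseline)
```

With illustrative numbers (not results from this engagement), an AUC ROC rising from 0.80 to 0.88 is a 10% improvement, and an RMSE falling from 2.0 to 1.5 hours is a 25% improvement.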

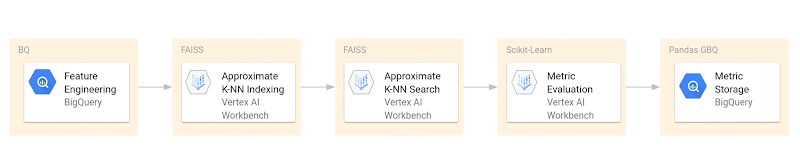

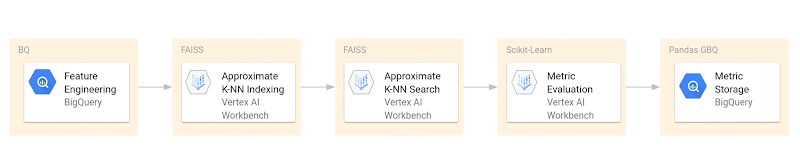

The team also built and tested a K-NN model to explore the idea of “Damage Like Yours.” Since USAA provided a rich dataset of similar auto claims, the hypothesis was that similar damage should take a similar amount of time to repair. Because K-NN models required longer inference times, we used approximate nearest neighbor search with a state-of-the-art library (FAISS) to strike a balance between latency and accuracy. On average, K-NN models showed an 8.51% performance decline relative to baseline models for the Repair/Replace decision and a 4.03% performance decline relative to baseline models for Repair Labor Hours estimation.
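The “Damage Like Yours” idea can be illustrated with an exact, brute-force nearest-neighbor regressor: find the k most similar past claims and average their labor hours. This is a simplified stand-in; the team used approximate search via FAISS rather than this exhaustive scan, and the feature vectors here are hypothetical.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def knn_predict_hours(query: Sequence[float],
                      history: List[Tuple[Sequence[float], float]],
                      k: int = 3) -> float:
    """Predict labor hours as the mean over the k most similar past claims
    (exact Euclidean search over every stored feature vector)."""
    if k <= 0 or k > len(history):
        raise ValueError("k must be between 1 and len(history)")
    nearest = heapq.nsmallest(k, history, key=lambda item: math.dist(query, item[0]))
    return sum(hours for _, hours in nearest) / k
```

Exact search scans every stored claim for each query, so inference cost grows linearly with the history. That cost is what motivates approximate indexes such as those in FAISS, which trade a little accuracy for much lower latency.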

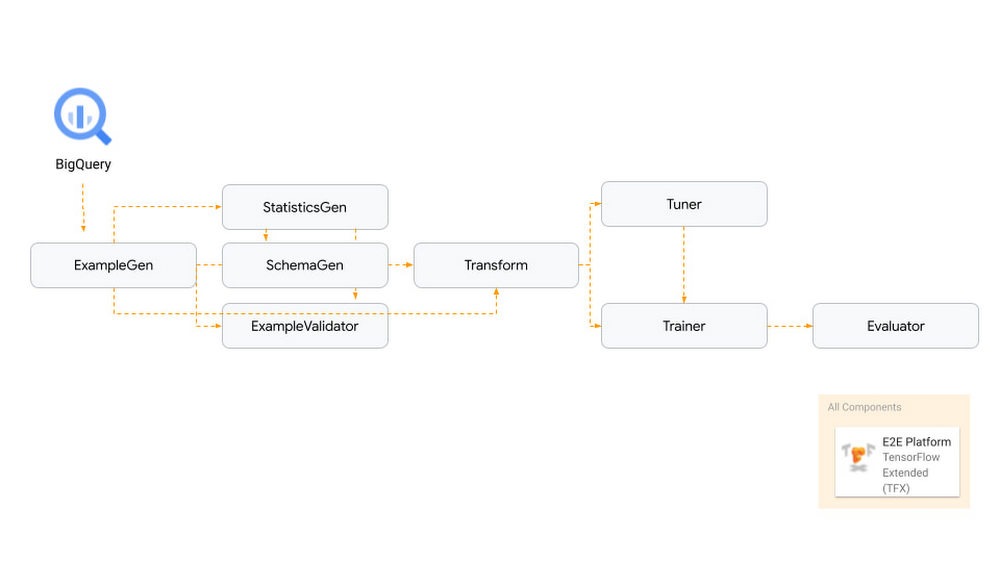

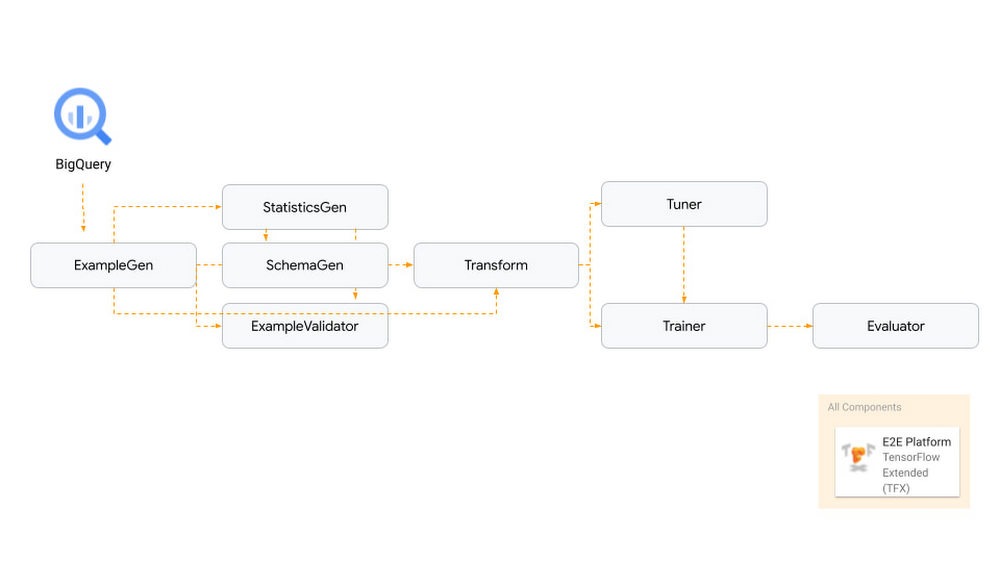

Finally, we explored using TensorFlow and TFX to build a single model across all body styles, giving the model builder complete control over the model architecture and hyperparameter tuning process. Vertex AI Vizier could be used for hyperparameter optimization, and the trained TensorFlow model could be deployed as a Vertex AI Prediction endpoint, so deployment would be relatively straightforward as well. On average, TensorFlow models provided a 6.45% performance improvement over baseline models for the Repair/Replace decision and a 16.1% performance improvement over baseline models for Repair Labor Hours estimation.

By the end of the workstream, we had processed 4 GB of data, written over 5,000 lines of code, engineered 20 new features, and created over 120 models in search of the best model to productionize. Both AutoML on Vertex AI and TFX modeling approaches performed very well and had surprisingly similar results, with each outperforming the other on different slices of the data. Ultimately, USAA chose to use AutoML on Vertex AI going forward because of its superior performance for the most common body styles, along with the low administrative burden of managing, maintaining, and improving an AutoML on Vertex AI based model over time. In addition to being a great way to create a baseline, AutoML on Vertex AI also provided the best models for productionization.

“The same features were used as input for the TFX, KNN, and AutoML on Vertex AI models evaluated for comparison. Specific model performance metrics were then used for optimization and evaluation of these models, which were based on USAA's business priorities. Ultimately, AutoML on Vertex AI was chosen based on its performance on those evaluation metrics, including AUC ROC and RMSE. AutoML on Vertex AI optimizes metrics by automatically iterating through different ML algorithms, model architectures, hyperparameters, ensembling techniques, and engineered features. Effectively, you are creating thousands of challenger models simultaneously. Because of AutoML on Vertex AI, you can focus more on feature engineering than manually building models one by one,” said Lydia Chen.

Creating a Production Infrastructure

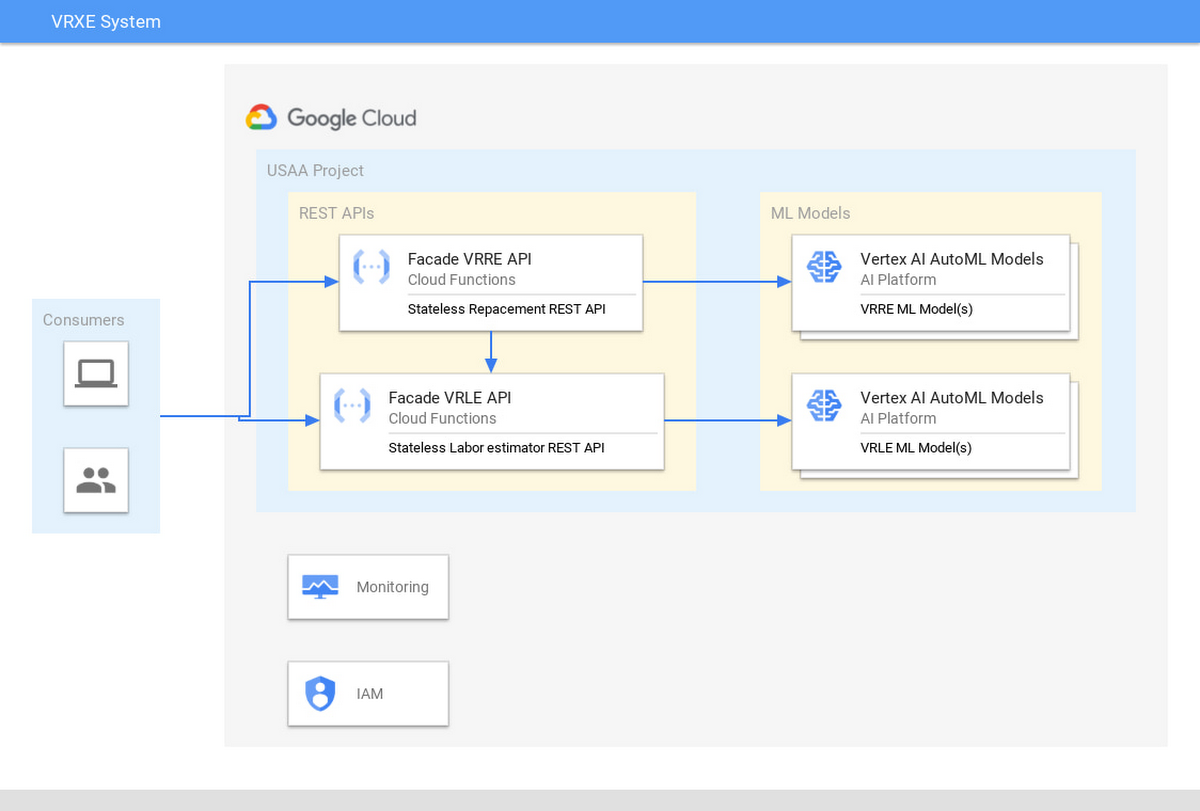

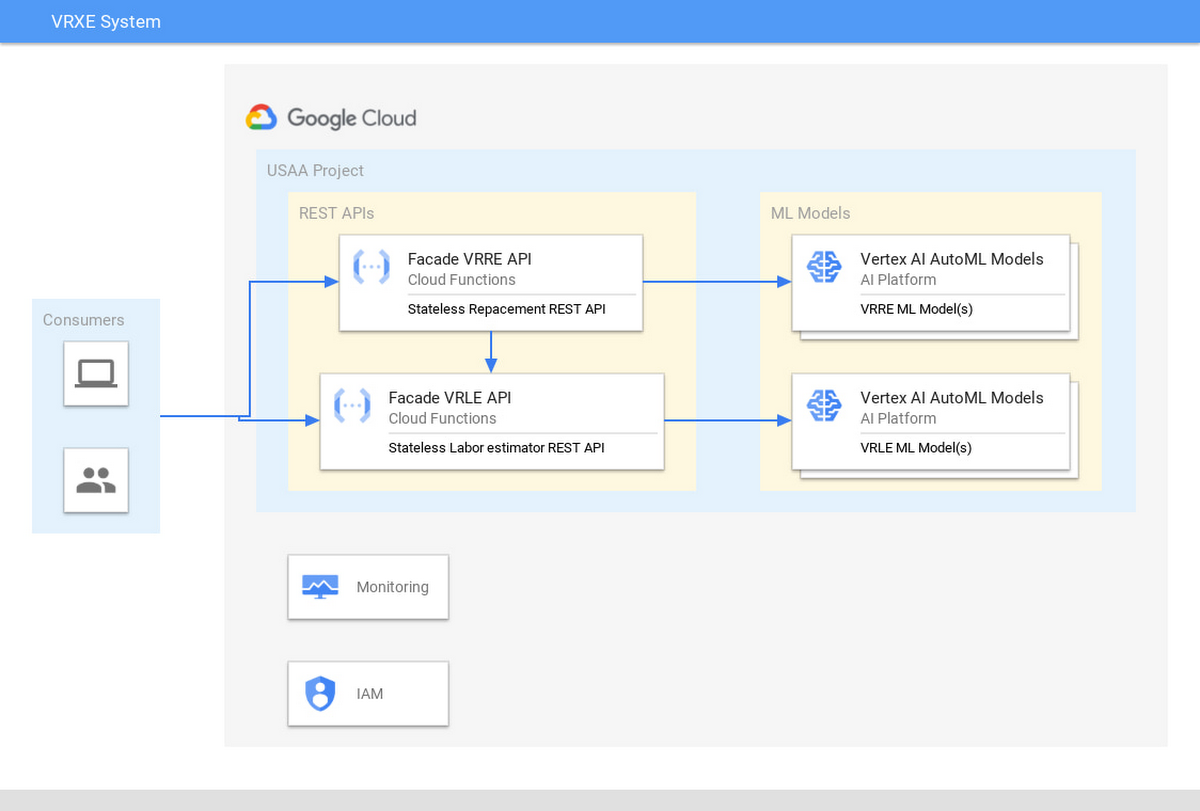

Model Serving

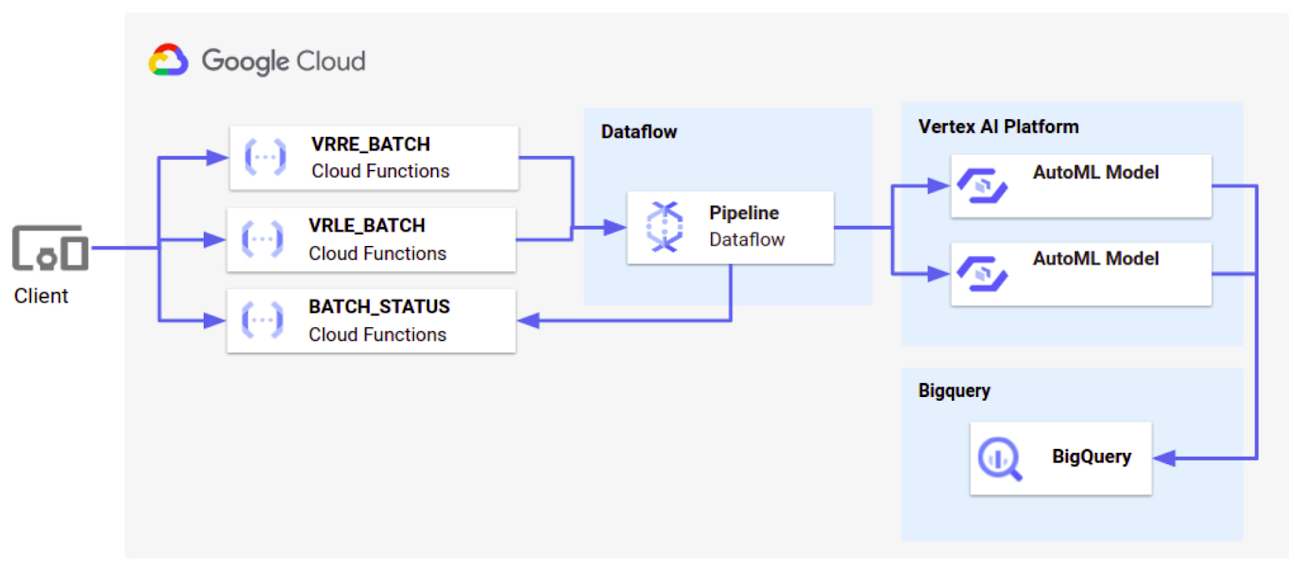

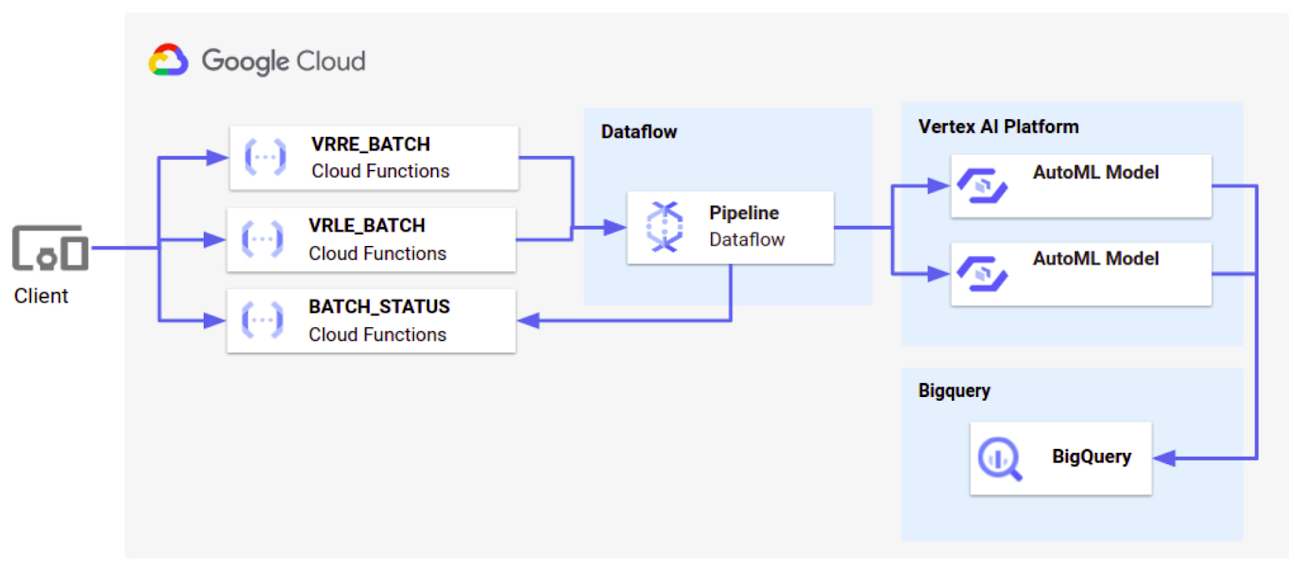

After selecting the AutoML on Vertex AI based approach, we created a production-capable model serving infrastructure on Vertex AI that could be incorporated into the retraining pipeline. This included defining API contracts and developing APIs that covered all vehicle types and enabled both online and batch classification of “Repair” vs. “Replace” at a configurable percentage threshold. If the classification decision was “Repair,” the API would also predict the number of Repair Labor Hours associated with the subparts at the claim estimate level. We incorporated explainability using the out-of-the-box feature importance capabilities of Vertex Explainable AI. After writing test cases and over 1,200 lines of code, we confirmed functionality and advised on the automated deployment of the APIs to Cloud Functions using Terraform modules.
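The decision flow described above (classify at a configurable threshold, then estimate labor hours only for “Repair”) can be sketched as a small function. The two model calls are passed in as plain callables standing in for the Vertex AI Prediction endpoints; the function name, the response fields, and the choice to threshold the probability of “Replace” are all hypothetical.

```python
from typing import Callable, Dict


def predict_part(features: Dict[str, object],
                 replace_prob_fn: Callable[[Dict[str, object]], float],
                 labor_hours_fn: Callable[[Dict[str, object]], float],
                 threshold: float = 0.5) -> Dict[str, object]:
    """Classify one part as Repair/Replace; add a labor-hours estimate only for Repair."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    p_replace = replace_prob_fn(features)
    decision = "Replace" if p_replace >= threshold else "Repair"
    response: Dict[str, object] = {"decision": decision, "replace_probability": p_replace}
    if decision == "Repair":
        # The regression model is only consulted when a repair is predicted.
        response["repair_labor_hours"] = labor_hours_fn(features)
    return response
```

Keeping the threshold as a parameter rather than baking it into the model lets the business tune the Repair/Replace trade-off without retraining.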

Model Retraining

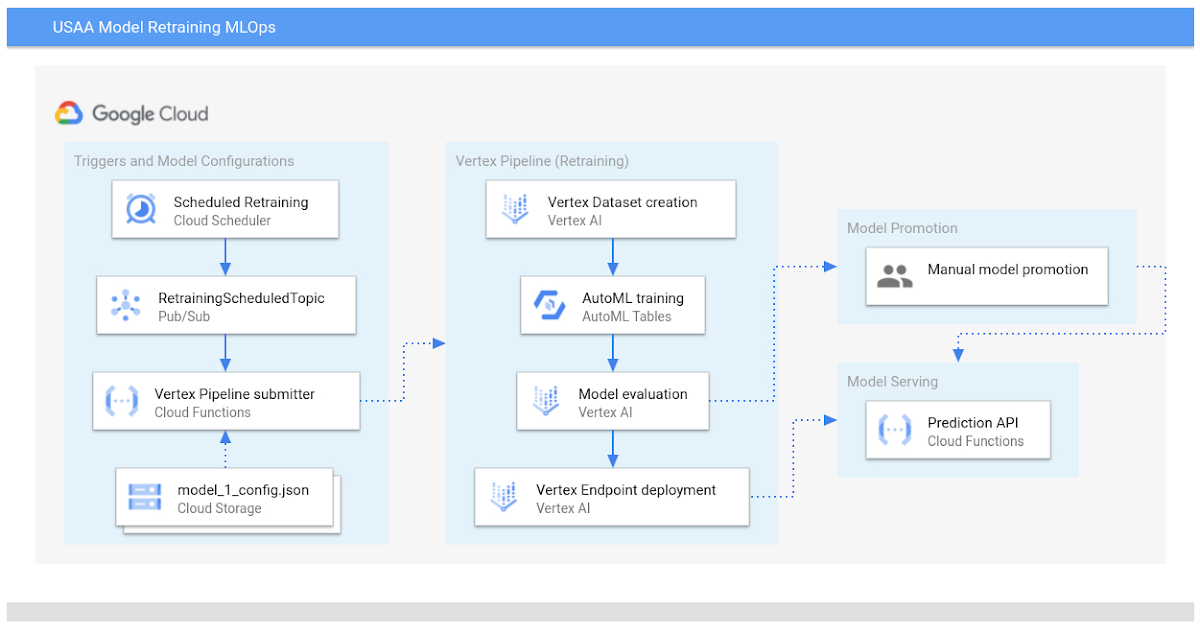

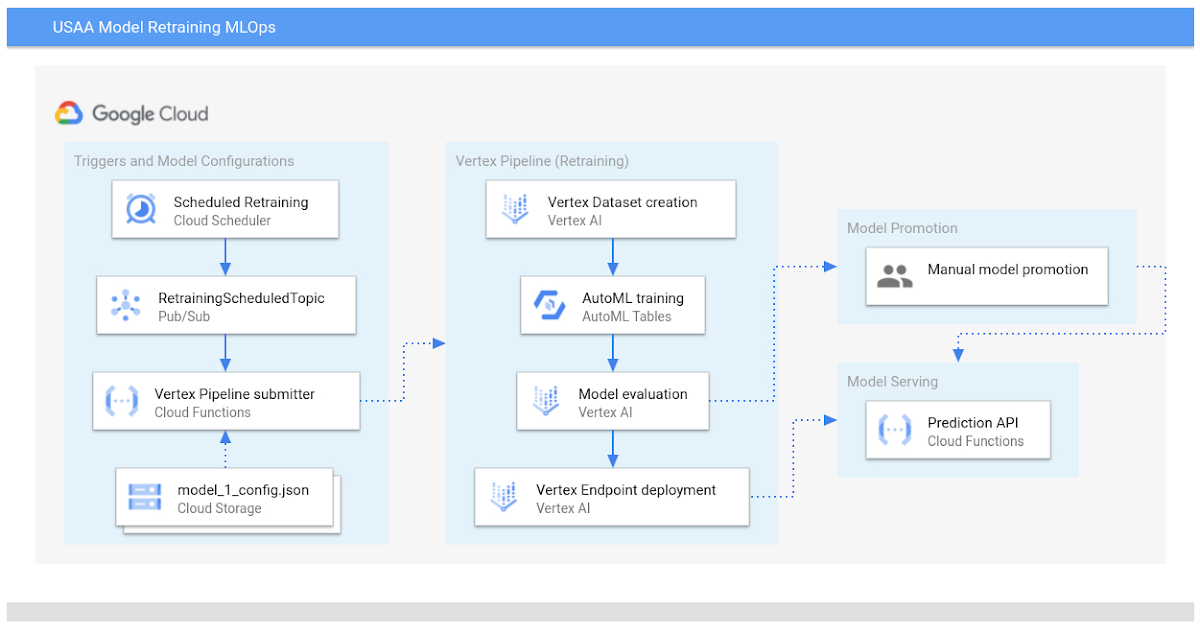

Once in production, it’s important to retrain your models on new data. Since USAA processes millions of new claims a year, new vehicles with new parts and new damage present a constant challenge to models like these, so it was important to learn from and adapt to this new data over time through a proper model retraining process. Model retraining and promotion, however, come with inherent change management risks. We chose to use Vertex AI Pipelines and Vertex ML Metadata to establish an automated and auditable process for retraining all models on newly incorporated data, tracking the lineage of all associated model artifacts (data, models, metrics, etc.), and promoting the models to the production serving environment.

We created two generic Vertex AI Pipeline definitions: one capable of retraining the six Repair/Replace classification models (one per body style), and the other capable of retraining the six Repair Labor Hours regression models (one per body style). Both pipeline definitions enforced MLOps best practices across the 12 models by orchestrating the following steps:

1. Vertex AI managed dataset creation

2. Vertex AI Training of AutoML models

3. Vertex AI Model Evaluation (classification- or regression-specific)

4. Model deployment to a Vertex AI Prediction endpoint

In addition to the two Vertex AI Pipeline definitions, we leveraged 12 “model configuration” JSON files to define model-specific details like the input training data location, the optimization function, the training budget, etc. Actual Vertex AI Pipeline executions would be created by combining a Vertex AI Pipeline definition and a model configuration at runtime.
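The pattern of one generic pipeline definition plus one configuration file per model can be sketched as a function that validates a config and turns it into the arguments for a pipeline run. The config keys, the bucket paths, and the naming scheme below are hypothetical. With the Vertex AI SDK, the resulting dictionary could plausibly be passed to `aiplatform.PipelineJob(display_name=..., template_path=..., parameter_values=...)`, but the post does not say how USAA's runs were launched.

```python
import json
from typing import Dict

# Hypothetical keys every model configuration file must provide.
REQUIRED_KEYS = {"body_style", "task", "training_data_uri",
                 "optimization_objective", "budget_milli_node_hours"}

# Hypothetical compiled pipeline definitions, one per task type.
PIPELINE_TEMPLATES = {
    "classification": "gs://example-bucket/pipelines/repair_replace.json",
    "regression": "gs://example-bucket/pipelines/labor_hours.json",
}


def build_pipeline_run(config_json: str) -> Dict[str, object]:
    """Combine a model configuration with the matching generic pipeline definition."""
    config = json.loads(config_json)
    missing = REQUIRED_KEYS - config.keys()
    if missing:
        raise ValueError(f"model config missing keys: {sorted(missing)}")
    return {
        "display_name": f"retrain-{config['task']}-{config['body_style']}",
        "template_path": PIPELINE_TEMPLATES[config["task"]],
        "parameter_values": {k: config[k] for k in sorted(REQUIRED_KEYS - {"task"})},
    }
```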

We deployed a combination of Cloud Scheduler, Cloud Pub/Sub, and Cloud Functions to trigger these retraining pipelines at the appropriate cadence. This architecture enables three triggering methods required by USAA: scheduled, event-driven, and manual. The scheduled trigger can be easily configured depending on USAA’s data velocity. The event-driven trigger enables upstream processes to trigger retraining, such as when valuable new data becomes available for training. Finally, the manual trigger allows for ad-hoc human intervention when needed.
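One way to support all three trigger types with a single entry point is to have Cloud Scheduler, upstream jobs, and humans all publish to the same Pub/Sub topic, with the message saying which kind of trigger it is. A Pub/Sub-triggered background Cloud Function receives the payload base64-encoded in the event's `data` field. The message schema and model naming scheme in this sketch are hypothetical.

```python
import base64
import json
from typing import Dict, List

# Hypothetical identifiers for the 12 models: 2 tasks x 6 body styles.
ALL_MODELS = [f"{task}-{style}"
              for task in ("repair_replace", "labor_hours")
              for style in ("coupe", "hatchback", "minivan", "sedan", "suv", "truck")]


def models_to_retrain(event: Dict[str, str]) -> List[str]:
    """Decode a Pub/Sub message and decide which model configs to retrain.
    Hypothetical message schema: {"trigger": "scheduled" | "event" | "manual",
    "models": [...]}."""
    message = json.loads(base64.b64decode(event["data"]).decode("utf-8"))
    trigger = message.get("trigger")
    if trigger == "scheduled":
        return list(ALL_MODELS)  # periodic refresh of every model
    if trigger in ("event", "manual"):
        requested = message.get("models", [])
        unknown = [m for m in requested if m not in ALL_MODELS]
        if unknown:
            raise ValueError(f"unknown models: {unknown}")
        return requested
    raise ValueError(f"unsupported trigger type: {trigger!r}")
```

Routing every trigger through one topic keeps the retraining logic in a single place, while the message content preserves an audit trail of why each run started.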

We also created a CI/CD pipeline to automate the testing, compilation, and deployment of both the Vertex AI Pipeline definitions and the triggering architecture described above.

In production, the result of a model-specific Vertex AI Pipeline execution is a newly-trained AutoML model on Vertex AI that is served by a newly-deployed Vertex AI Prediction endpoint. The newly-trained model performance metrics can then easily be compared to the existing “champion” model (currently being served) using the Vertex AI Pipelines user interface. In order to promote the new model to the production serving infrastructure, a USAA model manager must manually update a version-controlled config file within the Model Serving repository with the new Vertex AI model and endpoint IDs. This action triggers a CI/CD pipeline that finally promotes the new model to the user-facing API, as described in the previous section. The human-in-the-loop promotion process allows USAA to adhere to their model governance policies.
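The human-in-the-loop promotion step has two parts: comparing the challenger's metrics with the champion's, and editing the version-controlled serving config. The sketch below illustrates both as pure functions. The config layout, key names, and IDs are hypothetical, and the decision to promote remains with the model manager; the code only prepares the comparison and the edit that the reviewer would commit.

```python
from typing import Dict


def compare_to_champion(champion: Dict[str, float], challenger: Dict[str, float],
                        higher_is_better: Dict[str, bool]) -> Dict[str, bool]:
    """Per metric, report whether the challenger is at least as good as the champion."""
    return {
        metric: (challenger[metric] >= champion[metric]) if better_high
        else (challenger[metric] <= champion[metric])
        for metric, better_high in higher_is_better.items()
    }


def promote(serving_config: Dict[str, Dict[str, str]], model_key: str,
            model_id: str, endpoint_id: str) -> Dict[str, Dict[str, str]]:
    """Return a copy of the serving config pointing `model_key` at new IDs.
    Committing this change is what would kick off the CI/CD promotion."""
    if model_key not in serving_config:
        raise KeyError(f"unknown model key: {model_key}")
    updated = {k: dict(v) for k, v in serving_config.items()}
    updated[model_key] = {"model_id": model_id, "endpoint_id": endpoint_id}
    return updated
```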

“Working with the Google PSO team, we've developed a customized approach that works efficiently for us to productionize our models, integrate with the data source, and reproduce our training pipeline. To do this, we leveraged the Vertex AI platform, where we componentized each stage of our pipeline and as a result were able to quickly test and validate our operations. Once each stage of our operation was fine-tuned and working efficiently, it was straightforward for us to integrate implementation into our CI/CD system. As a financial services company, we are even more accountable to model governance and risk that might be introduced into the environment. Having a clear picture of the training pipeline helps demonstrate clarity to partners,” noted USAA Strategic Innovation Director Heather Hernandez.

Supporting Model Risk Management & Responsible Adoption

Historically, the use of algorithms in the insurance industry has required approval by state-level regulators. Since 2011, however, some in the industry have faced additional scrutiny at the federal level under SR 11-7 and are now required to understand the risk a machine learning model could pose to their business, along with available mitigations. While this adds a step to the process of developing and implementing machine learning models, it also puts the industry ahead of others in both understanding and managing the risk and impact that come from leveraging machine learning.

Google Cloud’s AI Industry Solutions Services team utilized its extensive experience in financial services model risk management to document and quantify the new system, while integrating with and conforming to USAA’s model risk management services. This use case also underwent ethical analysis early on in the partnership, as part of Google Cloud’s Responsible AI governance process, to assess potential risks, impacts and opportunities and drive alignment with Google’s AI Principles. Together, Google and USAA created nearly 300 pages of model risk documentation completely documenting and quantifying the acceptable use of the system.

Knowing that true success goes beyond solution development, the Google team provided an additional six months of office hours and support to answer any questions the USAA team had about the solution, to best support its use and adoption.

Working with Google Cloud AI Industry Solutions Services

Google Cloud AI Industry Solutions Services leads the transformation of enterprise customers and industries with cloud solutions. You can try Vertex AI for free, and to learn more about how we can help transform your business, get in touch with Google Cloud's sales team.

Acknowledgements

This post was written by the authors listed and the rest of the delivery team (Jeff Myers, Alex Ottenwess, Elvin Zhu, Rahul Gupta, and Rostam Dinyari). Each of them contributed significantly to this engagement’s success.

We would also like to thank our counterparts at USAA for their close collaboration throughout this effort (Heather Hernandez, Brian Katz, Lydia Chen, Jennifer Nance, Pietro Spitzer, Mark Prestriedge, Kyle Sterneckert, Kyle Talbott, Bertha Cortez, Jim Gage, Erik Graf, and Patrick Burnett). We would also like to recognize our account team members (Cindy Spess, Charles Lambert, Joe Timmons, Wil Rivera, Michael Peter, Siraj Mohammad), leadership (Andrey Evtimov, Marcello Pedersen), and our partner team members from Deloitte and Quantiphi who made this program possible.