We just released our “Empowering Defenders: How AI is shaping malware analysis” report, in which we share VirusTotal’s visibility to help researchers, security practitioners and the general public better understand the nature of malicious attacks. This time we focus on how AI complements traditional malware analysis tools by providing new functionality that leads to very significant time savings for analysts. Here are some of the main ideas presented:

AI offers a different angle on malware detection, from a binary verdict to a detailed explanation.

AI excels in identifying malicious scripts, particularly obfuscated ones, achieving up to 70% better detection rates compared to traditional methods alone.

AI proved to be a powerful tool for detection and analysis of malicious scripting tool sets traditionally overlooked by security products.

AI demonstrates enhanced detection and identification of scripts exploiting vulnerabilities, with an improvement on exploit identification of up to 300% over traditional tools alone.

We observed suspicious samples using AI APIs or leveraging enthusiasm for AI products for distribution. However, AI usage in APT-like attacks cannot be confirmed at this time.

For full details, you can download the report here.

The question most asked of VirusTotal since AI became more mainstream is “have you found any AI-generated malware?”. Detecting whether any malware was “AI-generated” is a challenging task. How does one trace where any source code comes from? Over the last 12 to 15 months we played with different ideas, trying to find unusual patterns in malware families and actors. Through all of our research, we didn’t see any strong indicators.

In this blog post we provide additional technical details for the AI-generated malware section of our report.

Impersonation Tactics in the Age of AI

As the popularity of certain applications and services grows, cybercriminals capitalize on this trend by impersonating them to infect unsuspecting victims. We observed different campaigns abusing ChatGPT and Google Bard iconography, file names, and metadata for distribution. Despite ChatGPT's official launch in November 2022 and Google Bard's in February 2023, it wasn't until early 2023 that distinct patterns and spikes in malware exploiting their reputations emerged, highlighting the evolving tactics of cybercriminals in leveraging popular trends.

Infostealers are the primary type of malware we've observed exploiting the reputations of ChatGPT and Google Bard. Families like Redline, Vidar, Raccoon, and Agent Tesla are among the most prevalent examples we've encountered. In addition, we found an extended list of Remote Access Trojan (RAT) families mimicking these applications, including DCRat, NjRAT, CreStealer, AsyncRAT, Lummac, RevengeRAT, Spymax, Aurora Stealer, Spynote, Warzone and OrcusRAT.

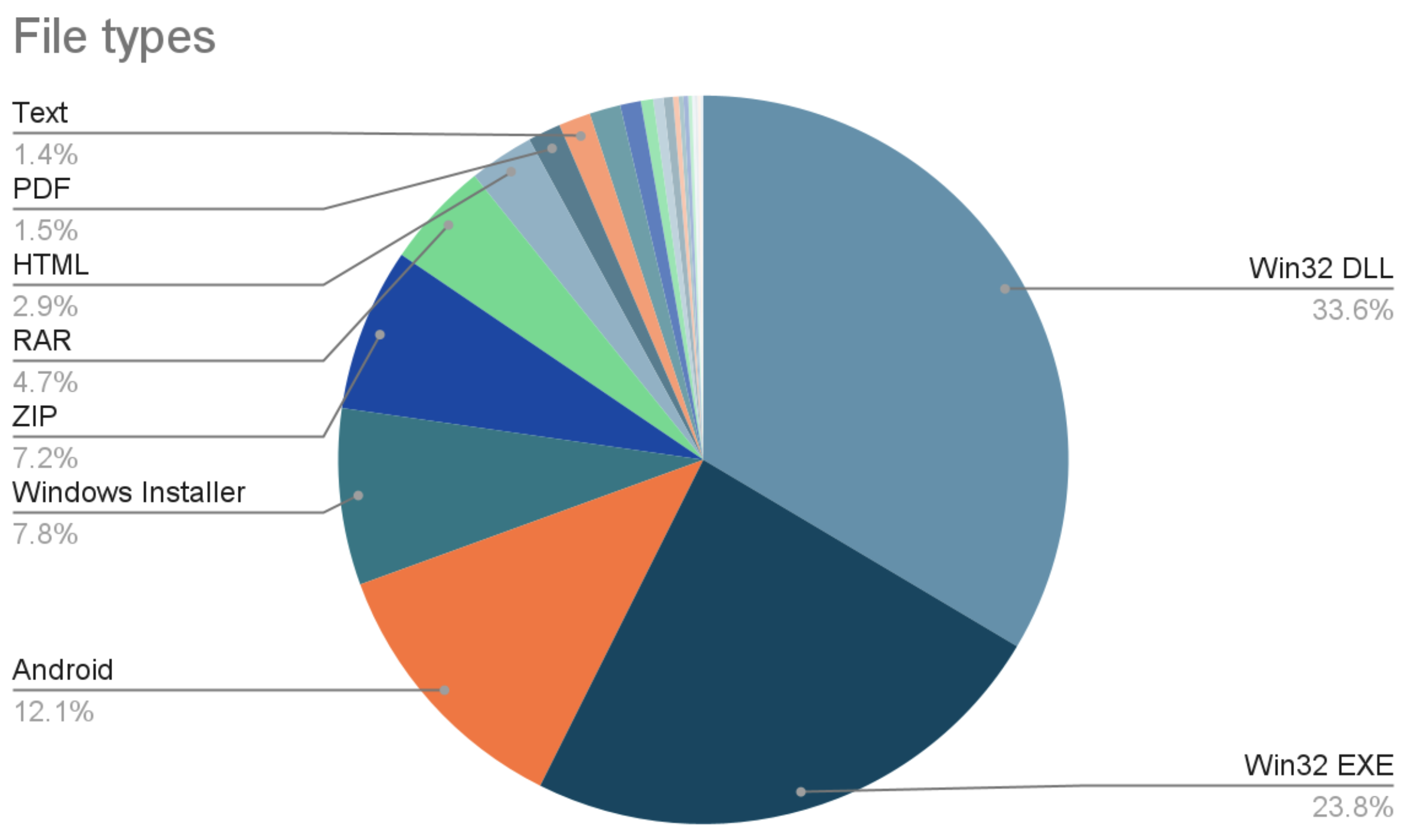

After Windows executables, Android is the second most popular sample type.

As an example of infection vector, we found Redline samples deployed through a .bat file distributed inside a .zip bundling a document called "GPT CHAT INSTALLATION INSTRUCTIONS.docx":

Another distribution vector is through the use of ISO images. We found a Vidar sample distributed through "ChatGPT For Dummies 2st Edition.iso".

Other interesting findings include a Bumblebee sample distributed as “ChatGPT_Setup.msi”, and a sample (“ChatGPT_0.12.0_windows_x86_64.exe”) that probably uses drivers to escalate privileges. “ChatGPT Complete Guide For Developers Students And Worrkers 2023.exe” also uses Process Explorer drivers to elevate privileges during execution.

Although the most popular infection vector for the samples analyzed is other samples (like droppers or compressed files), we found some of them distributed through legitimate websites, hosting services and web applications, and Discord. We believe the latter is on the verge of discontinuation, as Discord has recently announced a shift in its Content Delivery Network (CDN) approach.

Additionally, we searched for samples communicating with platforms hosting AI models, based on the findings of a Kaspersky blog post in which a sample downloads a potentially malicious model from huggingface.co. You can use the following query to find suspicious samples communicating with this domain.

Suspicious samples using OpenAI’s API

The number of suspicious samples interacting in some way with api.openai.com shows a slowly growing trend, with a peak in August 2023.

To search for files that contact or contain the OpenAI API endpoint you can use the following query:

entity:file p:5+ (embedded_domain:api.openai.com or behaviour_network:api.openai.com)

Another option could be searching for common patterns when using the OpenAI API:

entity:file p:5+ (content:code-davinci or content:text-davinci or content:api.openai.com)
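For samples already on disk, the same string patterns can be checked locally alongside a VirusTotal search. The following is a minimal sketch rather than part of the report's tooling: the function name `find_openai_indicators` and the sample bytes are made up for illustration, and a plain substring match will miss obfuscated or encoded strings.

```python
# Indicator strings taken from the content: search above.
OPENAI_INDICATORS = (b"code-davinci", b"text-davinci", b"api.openai.com")


def find_openai_indicators(data: bytes) -> list[str]:
    """Return the indicator strings that appear in the raw bytes of a file."""
    lowered = data.lower()
    return [ind.decode() for ind in OPENAI_INDICATORS if ind in lowered]


# Made-up sample content, for illustration only.
sample = b"POST https://api.openai.com/v1/completions model=text-davinci-003"
print(find_openai_indicators(sample))
```

In practice the bytes would come from reading a file in binary mode, and a hit is only a lead for further analysis, not a verdict.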

A third option is getting files related to the URL api.openai.com, which includes files that reference this domain and communicating files.

This can be automated to easily discriminate samples based on different criteria, such as detection rate, using VT-PY or VirusTotal’s API. Let’s see an example.

The following code uses the API to get a list of entities communicating with api.openai.com, including referring files:

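    import vt

    cli = vt.Client('<your API key>')  # placeholder, replace with your own key
    malware_AI_objects = []

    # Search for the URL and request its 'referrer_files' relationship.
    # A top-level 'async for' needs an async context, such as a notebook cell.
    async for mai in cli.iterator(
            '/intelligence/search',
            params={
                'query': 'https://api.openai.com',
                'attributes': '',
                'relationships': 'referrer_files'},
            limit=0):
        malware_AI_objects.append(mai.to_dict())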

We can iterate over the previous results to obtain the list of all related files. The 'malware_AI_objects' variable contains the URLs to get additional details on them:

    # Keep only the results that have at least one referrer file.
    [{'domain': mai['id'], **mai['relationships']}
     for mai in malware_AI_objects
     if mai['relationships']['referrer_files']['data']]

The following code walks the referrer files of one URL from the previous list, requesting the 'last_analysis_stats' field so that malicious files can be singled out afterwards.

    malware_AI_hashes = []
    async for mai in cli.iterator(
            '/urls/9b1b5eabf33c765585b7f7095d3cd726d73db49f3559376f426935bbd4a22d4b/referrer_files',
            params={'attributes': 'sha256,last_analysis_stats'},
            limit=0):
        malware_AI_hashes.append(mai.to_dict())
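The long hexadecimal value in the path above is a URL identifier. According to VirusTotal's API documentation, a URL can be addressed either by the SHA-256 of its canonicalized form or by its unpadded URL-safe base64 encoding, and vt-py offers the latter as `vt.url_id`. The sketch below reproduces the base64 variant with the standard library only, so it runs without an API key. The helper names are hypothetical, and the snippet does not check which exact URL string the hash above was derived from.

```python
import base64


def url_identifier(url: str) -> str:
    """Unpadded URL-safe base64 of the URL, usable in /urls/{id} paths."""
    return base64.urlsafe_b64encode(url.encode()).decode().rstrip("=")


def referrer_files_path(url: str) -> str:
    """Build the API path that lists files referring to the given URL."""
    return f"/urls/{url_identifier(url)}/referrer_files"


print(referrer_files_path("https://api.openai.com"))
```

The resulting path can be passed to the iterator call in place of the hard-coded one, which avoids copying identifiers by hand.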

Finally, we can filter the results based on the number of AV detections provided in the “malicious” field.

    # Keep the files flagged as malicious by more than one engine.
    [{'sha256': mai['id']}
     for mai in malware_AI_hashes
     if mai['attributes']['last_analysis_stats']['malicious'] > 1]
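The threshold of more than one detection is a judgment call. A small helper, sketched below with a hypothetical name and made-up records, turns the cutoff into a parameter and sorts the remaining files by detection count. It assumes each record has the shape produced by `to_dict()` in the code above.

```python
def filter_by_detections(objects, min_malicious=2):
    """Keep records flagged by at least `min_malicious` engines, most detected first."""
    def malicious(obj):
        # Missing fields are treated as zero detections.
        stats = obj.get("attributes", {}).get("last_analysis_stats", {})
        return stats.get("malicious", 0)

    kept = [obj for obj in objects if malicious(obj) >= min_malicious]
    return sorted(kept, key=malicious, reverse=True)


# Made-up records shaped like the to_dict() output used above.
records = [
    {"id": "aaa", "attributes": {"last_analysis_stats": {"malicious": 0}}},
    {"id": "bbb", "attributes": {"last_analysis_stats": {"malicious": 42}}},
    {"id": "ccc", "attributes": {"last_analysis_stats": {"malicious": 5}}},
    {"id": "ddd", "attributes": {}},
]
print([r["id"] for r in filter_by_detections(records)])
```

The default of 2 matches the "more than one detection" condition used above; raising it trades coverage for fewer false positives.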

We can easily modify this script to obtain the files ('Communicating Files') that interact with the 'api.openai.com' domain.
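One way to make that change, sketched here under stated assumptions: domains in the VirusTotal API expose a `communicating_files` relationship, so the path becomes `/domains/api.openai.com/communicating_files`. The function name is hypothetical, and the stand-in client below exists only so the example runs without an API key. With vt-py you would pass a real `vt.Client` instead.

```python
import asyncio


async def communicating_files(client, domain):
    """Collect the files that contacted `domain`, as dicts."""
    path = f"/domains/{domain}/communicating_files"
    params = {"attributes": "sha256,last_analysis_stats"}
    return [obj.to_dict()
            async for obj in client.iterator(path, params=params, limit=0)]


# Stand-ins that mimic the small part of vt-py used above (not the real library).
class FakeObject:
    def __init__(self, data):
        self._data = data

    def to_dict(self):
        return self._data


class FakeClient:
    def __init__(self):
        self.requested = []

    def iterator(self, path, params=None, limit=0):
        self.requested.append(path)

        async def generate():
            # Made-up record, for illustration only.
            yield FakeObject({"id": "made-up-hash", "attributes": {}})

        return generate()


fake = FakeClient()
files = asyncio.run(communicating_files(fake, "api.openai.com"))
print(fake.requested, files)
```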

RAT in the chat

As previously mentioned, we found several RAT samples mimicking AI applications (Google Bard, OpenAI Chat-GPT). Some DarkComet samples use 'https://api.openai.com/v1/completions', which, according to OpenAI’s documentation, can be used to prompt Chat-GPT. This endpoint requires an API key.

One of these samples, with 42 AV detections, included this URL, although it did not connect to openai.com during sandbox execution, so we took a deeper look.

The first disassembled instructions show the 'krnln.fnr' string and the registry entry "Software\FlySky\E\Install", which refer to EPL (Easy Programming Language). EPL provides functionality similar to Visual Basic. This blog post (@Hexacorn) provides more information on how to analyze these files.

If this framework is installed on the victim's computer, the sample opens a window, with a 'How are you?' message in Chinese, for the victim to interact with Chat-GPT.

Wrapping it up

The integration of AI engines into VirusTotal has provided a unique opportunity to evaluate their capabilities in real-world scenarios. While the field is still rapidly evolving, AI engines have demonstrated remarkable potential for automating and enhancing various analysis tasks, particularly those that are time-consuming and challenging, such as deobfuscation and interpreting suspicious behavior.

Pinpointing whether malware is AI-generated remains a complex task due to the difficulty of tracing the origins of source code. Instead, we've encountered malware families employing AI themes for distribution, exploiting the current trend of AI-based threats. This opportunistic behavior is unsurprising, given attackers' tendency to capitalize on trending topics. The majority of these disguised samples are trojans targeting Windows systems, followed by Android samples.

While the integration of OpenAI APIs into certain RATs has been observed, the specific purpose and effectiveness of this integration remain unclear. It appears that RAT operators may be utilizing OpenAI APIs as a distraction tactic rather than leveraging their full potential for advanced malicious activities. Nonetheless, it is imperative to maintain vigilance and closely monitor how the usage of OpenAI APIs in RATs might evolve in the future.

As always, we would like to hear from you.

Happy hunting!