How we built Immersive View for routes on Maps

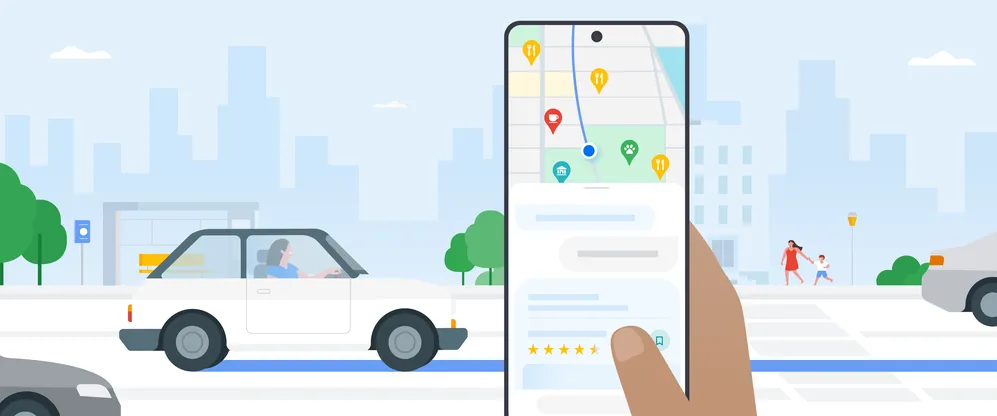

When navigating to a destination, it’s helpful to get a sense of your route so you can travel with confidence. Let’s say you’re biking to the park to meet friends: you might check the weather, see if there are road closures along the way, or scope out the bike parking situation on Street View. That’s why we introduced Immersive View for routes, a feature that gives you all the information you need about your journey, like weather and traffic, in a single, multidimensional view, whether you’re walking, biking or driving. This feature, which has started rolling out in 15 cities, builds on Immersive View for places, which lets you experience a place before you go.

Here’s a behind-the-scenes look at how AI and imagery bring Immersive View for routes to life in Google Maps.

Putting together a jigsaw puzzle of 2D imagery

To start, we collect billions of high-resolution images from planes, Street View cars and Street View Trekkers. The images are then stitched together in a process that feels a lot like putting together the world’s largest jigsaw puzzle.

Different map layers showing object labels, elevation, vegetation and color.

In theory, this sounds relatively straightforward, but several factors make the process quite complex. All of the imagery needs to align with existing Google Maps data so that every road, street and business name fits together. This is possible only because our advanced photogrammetry techniques can align imagery and data to within centimeters.

Visualization of our models aligning 3D aerial imagery to Street View data.
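The post doesn't say how this alignment is computed. Production photogrammetry typically solves a large 3D bundle adjustment. As a much smaller illustration of the core idea, the Python sketch below fits the rotation and translation that best map matched "tie points" from imagery onto their known positions in map data. It then reports the remaining error. The function names, the 2D setting and the assumption that tie points are already matched are all hypothetical simplifications.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation + translation mapping src points onto dst points.

    src, dst: equal-length lists of matched (x, y) tie points, in metres.
    Returns (theta, tx, ty): rotation angle in radians and translation.
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two matched point pairs")
    # Centroids of both point sets.
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    # For centred pairs, the optimal angle is atan2(sum of cross terms, sum of dot terms).
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the destination centroid.
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty

def apply_rigid(theta, tx, ty, p):
    """Rotate point p by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def rms_error(theta, tx, ty, src, dst):
    """Root-mean-square distance (metres) between transformed src and dst."""
    total = 0.0
    for p, q in zip(src, dst):
        x, y = apply_rigid(theta, tx, ty, p)
        total += (x - q[0]) ** 2 + (y - q[1]) ** 2
    return math.sqrt(total / len(src))

# Example: dst is src rotated by 90 degrees and shifted by (5, -2) metres.
src = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
dst = [(5.0, -2.0), (5.0, 8.0), (-5.0, -2.0)]
theta, tx, ty = fit_rigid_2d(src, dst)
print(math.degrees(theta), tx, ty, rms_error(theta, tx, ty, src, dst))
```

With these noise-free points, the fit recovers the 90-degree rotation and the shift almost exactly. With real, noisy matches, the RMS residual is the figure you would compare against a centimeter-level target.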

Using machine learning to extract and label helpful information

Once the images are aligned, we use AI and computer vision to understand what’s in them. Within seconds, our machine learning models can understand elements in a photo — like sidewalks, street signs, speed limit signs, road names, addresses, posted business hours and building entrances. These models are trained on millions of photos from around the world, so they’re able to learn and adapt to different regions. For example, they can recognize a “SLOW” sign in the U.S., which is a yellow or orange diamond, and in Japan, which is triangular and largely white and red.

Understanding these elements helps us show you the most helpful information in Immersive View for routes. For instance, knowing where a building’s entrances are lets us guide you right to the door rather than just the general vicinity, saving you a time-consuming trip around the block!

Our AI labels helpful object categories like sidewalks, street signs, speed limit signs, road names, addresses, posted business hours and building entrances.
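To make the entrance example concrete, here is a hypothetical Python sketch of how labeled detections could feed into routing. It keeps only confident building-entrance detections and routes to the one nearest the point you approach from. If no entrance was detected, it falls back to the building's center. The `Detection` type, label strings, confidence threshold and nearest-entrance rule are illustrative assumptions, not how Google Maps actually chooses entrances.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0

@dataclass
class Detection:
    label: str    # hypothetical label names, e.g. "building_entrance", "street_sign"
    lat: float
    lng: float
    score: float  # model confidence in [0, 1]

def haversine_m(lat1, lng1, lat2, lng2):
    """Great-circle distance in metres between two lat/lng points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lng2 - lng1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def pick_destination(detections, building_center, approach, min_score=0.5):
    """Return the (lat, lng) to route to.

    Uses the confident entrance closest to the approach point, or the
    building center if no entrance passes the confidence threshold.
    """
    entrances = [
        d for d in detections
        if d.label == "building_entrance" and d.score >= min_score
    ]
    if not entrances:
        return building_center
    best = min(entrances, key=lambda d: haversine_m(approach[0], approach[1], d.lat, d.lng))
    return (best.lat, best.lng)

# Example: two confident entrances on opposite sides, one low-confidence detection.
dets = [
    Detection("building_entrance", 0.0001, 0.0, 0.9),
    Detection("building_entrance", -0.0001, 0.0, 0.9),
    Detection("building_entrance", 0.0004, 0.0, 0.2),  # too uncertain, ignored
    Detection("street_sign", 0.0002, 0.0, 0.95),       # not an entrance
]
target = pick_destination(dets, building_center=(0.0, 0.0), approach=(0.0005, 0.0))
print(target)
```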

Transforming 2D imagery into 3D

With all the visuals and object labels in place, it’s time to reconstruct the world in 3D. One of the biggest challenges in building a 3D map is modeling the terrain under roads and the heights of buildings. To do this, we use imagery from our state-of-the-art aerial camera systems, which are much like the 3D cameras used to film Hollywood movies. These systems have clusters of cameras pointed in slightly different directions, allowing them to take pictures from multiple viewpoints and accurately understand depth. Once we have this set of imagery, our advanced photogrammetry techniques help us place it on top of our 2D model of the world, turning it into a 3D model.

A preview of the route overlaid on 3D terrain.
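Recovering depth from multiple viewpoints rests on stereo geometry. Take two rectified cameras, meaning their image planes are parallel and a known baseline B separates them. A feature that shifts by a disparity of d pixels between the two images lies at depth Z = f·B/d, where f is the focal length in pixels. The sketch below applies that textbook relation to estimate a building's height from roof and ground disparities. The rectified, downward-looking setup and the function names are assumptions for illustration. The article's camera clusters point in slightly different directions, so their images would need calibration and rectification first.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by two rectified cameras.

    focal_px: focal length in pixels
    baseline_m: distance between the camera centres, in metres
    disparity_px: horizontal shift of the same feature between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px

def building_height(roof_disparity_px, ground_disparity_px, focal_px, baseline_m):
    """Roof height above the ground for a downward-looking stereo pair.

    The roof is closer to the cameras than the ground, so it has the larger
    disparity and the smaller depth; the difference in depth is the height.
    """
    roof_depth = depth_from_disparity(focal_px, baseline_m, roof_disparity_px)
    ground_depth = depth_from_disparity(focal_px, baseline_m, ground_disparity_px)
    return ground_depth - roof_depth

# Example with made-up numbers: f = 1000 px, B = 2 m.
print(depth_from_disparity(1000.0, 2.0, 4.0))
print(building_height(5.0, 4.0, 1000.0, 2.0))
```

Because Z = f·B/d, a one-pixel disparity error shifts the depth by roughly Z²/(f·B). This is why long focal lengths and wide baselines matter for accurate building heights.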

Creating a realistic, helpful route

Once our model is in 3D, we need to actually show you how to navigate. This is the piece that differentiates Immersive View for routes from Immersive View for places. One of the biggest challenges of overlaying the route line in 3D is creating a realistic and helpful overview of your travel path, whether that’s a road, bike lane or sidewalk. We solve this using a couple of techniques.

We include many intricate camera zooms, pans and tilts to show you both a big-picture overview and street-level details about your route. We use a technique called occlusion to hide the blue route line when it is meant to go behind buildings, under bridges or around trees. To make sure all of this dynamic movement isn’t jumpy or jarring, we use a mathematical construct called a B-spline curve to create a smooth camera path that keeps a clear view of the route. By combining these techniques, our system can quickly compute what should be in view at each step and always generate a path that’s easy to follow and beautiful in real time.
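A B-spline blends neighboring control points using smooth polynomial weights. The resulting curve has continuous velocity and acceleration (C² continuity for a cubic) even when the control points are unevenly placed. The sketch below evaluates a uniform cubic B-spline guided by hypothetical camera keyframes given as (x, y, altitude) positions. The post doesn't describe how Maps chooses those keyframes or keeps the route in view, so the names and sampling scheme here are assumptions.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]

def cubic_bspline_point(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Evaluate one uniform cubic B-spline segment at t in [0, 1]."""
    t2, t3 = t * t, t * t * t
    # Uniform cubic B-spline basis functions; they sum to 1 for every t.
    b0 = (1 - t) ** 3 / 6
    b1 = (3 * t3 - 6 * t2 + 4) / 6
    b2 = (-3 * t3 + 3 * t2 + 3 * t + 1) / 6
    b3 = t3 / 6
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d for a, b, c, d in zip(p0, p1, p2, p3))

def smooth_camera_path(control: List[Point], samples_per_segment: int = 10) -> List[Point]:
    """Sample a smooth camera path from hypothetical keyframe positions."""
    if len(control) < 4:
        raise ValueError("a cubic B-spline needs at least four control points")
    path: List[Point] = []
    # Each window of four consecutive control points defines one segment.
    for i in range(len(control) - 3):
        p0, p1, p2, p3 = control[i:i + 4]
        for k in range(samples_per_segment):
            path.append(cubic_bspline_point(p0, p1, p2, p3, k / samples_per_segment))
    # Add the end point of the last segment.
    path.append(cubic_bspline_point(*control[-4:], 1.0))
    return path

# Example: keyframes at a constant altitude of 100 m along the x axis.
keyframes = [(0.0, 0.0, 100.0), (10.0, 0.0, 100.0), (20.0, 0.0, 100.0),
             (30.0, 0.0, 100.0), (40.0, 0.0, 100.0)]
path = smooth_camera_path(keyframes)
print(len(path), path[0], path[-1])
```

The curve approximates the control points rather than passing through them. That trade-off is what smooths out jitter between keyframes.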

Adding Google Maps’ trusted, real-world information

Finally, we layer on Google Maps’ trusted information — like weather, air quality and traffic — so that you can visualize what your route will look like as conditions change throughout the day and week.

To simulate traffic, both right now and at future times, we partner with Google Research to analyze historical, aggregated driving trends. So if a street is known to be congested at 5 p.m. on Thursdays because of heavy traffic from delivery trucks, you’ll see more trucks reflected in Immersive View. This gives you a sense of what you’re likely to encounter on the road.

Using aggregate traffic data, our models simulate what traffic might look like at any given time.
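The post doesn't describe the model itself. The simplest form of "historical, aggregated trends" is a time-bucketed average, and the hypothetical Python sketch below follows that approach. It averages past flow for one street segment by (weekday, hour), then scales the expected flow into a number of vehicles to draw. The capacity figure, the 20-vehicle cap and all function names are illustrative assumptions, and the sketch ignores vehicle types such as delivery trucks.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean

def build_profile(observations):
    """Average historical flow per (weekday, hour) for one street segment.

    observations: iterable of (timestamp: datetime, vehicles_per_hour: float).
    """
    buckets = defaultdict(list)
    for ts, count in observations:
        buckets[(ts.weekday(), ts.hour)].append(count)
    return {key: mean(values) for key, values in buckets.items()}

def vehicles_to_render(profile, when, capacity_per_hour, max_rendered=20):
    """Scale the expected flow at `when` to a number of vehicles to draw."""
    expected = profile.get((when.weekday(), when.hour), 0.0)
    ratio = min(expected / capacity_per_hour, 1.0)  # cap at a fully congested street
    return round(ratio * max_rendered)

# Example: two past Thursdays (1 and 8 June 2023) around 5 p.m.
history = [
    (datetime(2023, 6, 1, 17, 5), 800),
    (datetime(2023, 6, 8, 17, 40), 1000),
]
profile = build_profile(history)
print(vehicles_to_render(profile, datetime(2023, 6, 15, 17, 30), capacity_per_hour=1000))
```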

Bringing it all together

All of this comes together to show you your route on your phone, using both on-device and real-time cloud rendering, so you can visualize where you’re going from the palm of your hand. Immersive View for routes is starting to roll out in Amsterdam, Barcelona, Dublin, Florence, Las Vegas, London, Los Angeles, Miami, New York, Paris, San Francisco, San Jose, Seattle, Tokyo and Venice.

To learn more about the technology that powers your favorite features in Google Maps, check out our Maps 101 blog post series.