Sample language model responses to different varieties of English and native speaker reactions.

ChatGPT does amazingly well at communicating with people in English. But whose English?

Only 15% of ChatGPT users are from the US, where Standard American English is the default. But the model is also commonly used in countries and communities where people speak other varieties of English. Over 1 billion people around the world speak varieties such as Indian English, Nigerian English, Irish English, and African-American English.

Speakers of these non-“standard” varieties often face discrimination in the real world. They’ve been told that the way they speak is unprofessional or incorrect, discredited as witnesses, and denied housing, despite extensive research indicating that all language varieties are equally complex and legitimate. Discriminating against the way someone speaks is often a proxy for discriminating against their race, ethnicity, or nationality. What if ChatGPT exacerbates this discrimination?

To answer this question, our recent paper examines how ChatGPT’s behavior changes in response to text in different varieties of English. We found that ChatGPT responses exhibit consistent and pervasive biases against non-“standard” varieties, including increased stereotyping and demeaning content, poorer comprehension, and condescending responses.


Dillon Bowen, Scott Emmons, Alexandra Souly, Qingyuan Lu, Tu Trinh, Elvis Hsieh, Sana Pandey, Pieter Abbeel, Justin Svegliato, Olivia Watkins, Sam Toyer

Aug 28, 2024

When we began studying jailbreak evaluations, we found a fascinating paper claiming that you could jailbreak frontier LLMs simply by translating forbidden prompts into obscure languages. Excited by this result, we attempted to reproduce it and found something unexpected.


Humans excel at processing vast arrays of visual information, a skill that is crucial for achieving artificial general intelligence (AGI). Over the decades, AI researchers have developed Visual Question Answering (VQA) systems to interpret scenes within single images and answer related questions. While recent advancements in foundation models have significantly closed the gap between human and machine visual processing, conventional VQA has been restricted to reasoning about one image at a time rather than whole collections of visual data.

This limitation poses challenges in more complex scenarios. Take, for example, the challenges of discerning patterns in collections of medical images, monitoring deforestation through satellite imagery, mapping urban changes using autonomous navigation data, analyzing thematic elements across large art collections, or understanding consumer behavior from retail surveillance footage. Each of these scenarios entails not only visual processing across hundreds or thousands of images but also cross-image processing of the findings. To address this gap, this project focuses on the “Multi-Image Question Answering” (MIQA) task, which exceeds the reach of traditional VQA systems.

Visual Haystacks: the first "visual-centric" Needle-In-A-Haystack (NIAH) benchmark designed to rigorously evaluate Large Multimodal Models (LMMs) in processing long-context visual information.


The ability of LLMs to execute commands through plain language (e.g. English) has enabled agentic systems that can complete a user query by orchestrating the right set of tools (e.g. ToolFormer, Gorilla). This, along with recent multi-modal efforts such as the GPT-4o and Gemini-1.5 models, has expanded the realm of possibilities with AI agents. While this is quite exciting, the large size and computational requirements of these models often require their inference to be performed in the cloud. This creates several challenges for their widespread adoption. First and foremost, uploading data such as video, audio, or text documents to a third-party cloud vendor can raise privacy issues. Second, this requires cloud/Wi-Fi connectivity, which is not always available. For instance, a robot deployed in the real world may not always have a stable connection. Third, latency can be an issue: uploading large amounts of data to the cloud and waiting for the response slows down response time, resulting in unacceptable time-to-solution. These challenges could be solved by deploying LLMs locally at the edge.


As computer vision researchers, we believe that every pixel can tell a story. However, there seems to be a writer’s block settling into the field when it comes to dealing with large images. Large images are no longer rare—the cameras we carry in our pockets and those orbiting our planet snap pictures so big and detailed that they stretch our current best models and hardware to their breaking points when handling them. Generally, we face a quadratic increase in memory usage as a function of image size.
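To make the quadratic growth concrete, here is a back-of-the-envelope sketch. It assumes "image size" means the side length of a square image and counts only the memory for the raw input stored as a dense float32 tensor; the function name and defaults are ours, and real models add activations and parameters on top of this.

```python
def image_tensor_bytes(side_px: int, channels: int = 3, bytes_per_value: int = 4) -> int:
    """Bytes needed to hold one square image as a dense tensor (float32 by default)."""
    return side_px * side_px * channels * bytes_per_value


if __name__ == "__main__":
    # Each doubling of the side length multiplies the pixel count, and so the memory, by four.
    for side in (256, 512, 1024, 2048, 4096):
        mib = image_tensor_bytes(side) / 2**20
        print(f"{side:>5} px per side -> {mib:9.2f} MiB")
```

Intermediate feature maps in a vision backbone typically follow the same scaling, which is why memory runs out long before the input itself looks large.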

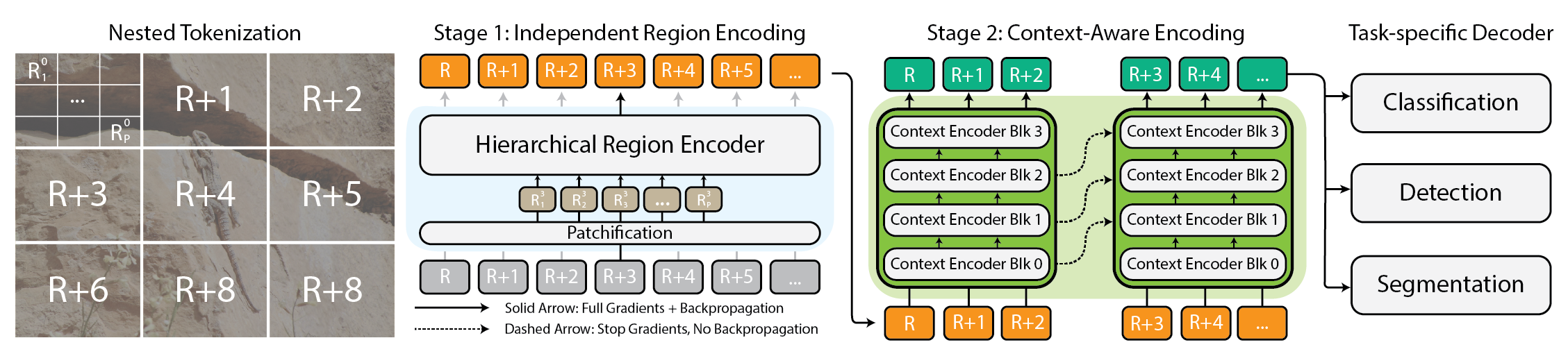

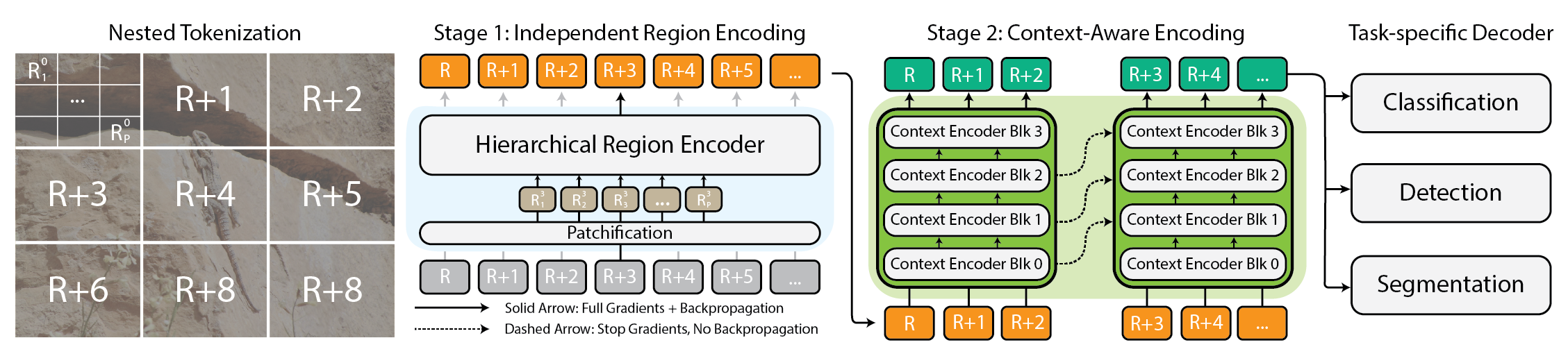

Today, we make one of two sub-optimal choices when handling large images: down-sampling or cropping. These two methods incur significant losses in the amount of information and context present in an image. We take another look at these approaches and introduce $x$T, a new framework to model large images end-to-end on contemporary GPUs while effectively aggregating global context with local details.

Architecture for the $x$T framework.


Berkeley AI Research Editors

Mar 11, 2024

Every year, the Berkeley Artificial Intelligence Research (BAIR) Lab graduates some of the most talented and innovative minds in artificial intelligence and machine learning. Our Ph.D. graduates have each expanded the frontiers of AI research and are now ready to embark on new adventures in academia, industry, and beyond.

These fantastic individuals bring with them a wealth of knowledge, fresh ideas, and a drive to continue contributing to the advancement of AI. Their work at BAIR, ranging from deep learning, robotics, and natural language processing to computer vision, security, and much more, has contributed significantly to their fields and has had transformative impacts on society.

This website is dedicated to showcasing our colleagues, making it easier for academic institutions, research organizations, and industry leaders to discover and recruit from the newest generation of AI pioneers. Here, you’ll find detailed profiles, research interests, and contact information for each of our graduates. We invite you to explore the potential collaborations and opportunities these graduates present as they seek to apply their expertise and insights in new environments.

Join us in celebrating the achievements of BAIR’s latest Ph.D. graduates. Their journey is just beginning, and the future they will help build is bright!


AI caught everyone’s attention in 2023 with Large Language Models (LLMs) that can be instructed to perform general tasks, such as translation or coding, just by prompting. This naturally led to an intense focus on models as the primary ingredient in AI application development, with everyone wondering what capabilities new LLMs will bring.

As more developers begin to build using LLMs, however, we believe that this focus is rapidly changing: state-of-the-art AI results are increasingly obtained by compound systems with multiple components, not just monolithic models.

For example, Google’s AlphaCode 2 set state-of-the-art results in programming through a carefully engineered system that uses LLMs to generate up to 1 million possible solutions for a task and then filter down the set. AlphaGeometry, likewise, combines an LLM with a traditional symbolic solver to tackle olympiad problems. In enterprises, our colleagues at Databricks found that 60% of LLM applications use some form of retrieval-augmented generation (RAG), and 30% use multi-step chains.

Even researchers working on traditional language model tasks, who used to report results from a single LLM call, are now reporting results from increasingly complex inference strategies: Microsoft wrote about a chaining strategy that exceeded GPT-4’s accuracy on medical exams by 9%, and Google’s Gemini launch post measured its MMLU benchmark results using a new CoT@32 inference strategy that calls the model 32 times, which raised questions about its comparison to just a single call to GPT-4. This shift to compound systems opens many interesting design questions, but it is also exciting, because it means leading AI results can be achieved through clever engineering, not just scaling up training.
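A minimal sketch of one such inference strategy: call the model several times and return the most common answer. This is plain majority voting, which is our simplification and not necessarily the exact CoT@32 procedure; `majority_vote` and the stub model below are hypothetical names, and the stub simply replays a fixed sequence of answers in place of a real LLM call.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Iterable


def majority_vote(sample_answer: Callable[[str], str], question: str, n_calls: int = 32) -> str:
    """Query the model n_calls times and return the most frequent answer."""
    answers = [sample_answer(question) for _ in range(n_calls)]
    return Counter(answers).most_common(1)[0][0]


def make_stub_model(answers: Iterable[str]) -> Callable[[str], str]:
    """Stand-in for an LLM: replays a fixed cycle of answers, ignoring the question."""
    stream = cycle(answers)
    return lambda question: next(stream)


if __name__ == "__main__":
    # The stub is right ("B") on 3 of every 5 calls, so a single call is unreliable,
    # but the vote over 32 calls settles on "B".
    model = make_stub_model(["B", "A", "B", "C", "B"])
    print(majority_vote(model, "Which option is correct?"))
```

The design trade-off is the one raised above: the compound system spends 32 model calls per question, so its score is not directly comparable to a single-call result.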

In this post, we analyze the trend toward compound AI systems and what it means for AI developers. Why are developers building compound systems? Is this paradigm here to stay as models improve? And what are the emerging tools for developing and optimizing such systems—an area that has received far less research than model training? We argue that compound AI systems will likely be the best way to maximize AI results in the future, and might be one of the most impactful trends in AI in 2024.


The structure of Ghostbuster, our new state-of-the-art method for detecting AI-generated text.

Large language models like ChatGPT write impressively well, so well, in fact, that they’ve become a problem. Students have begun using these models to ghostwrite assignments, leading some schools to ban ChatGPT. These models are also prone to producing text with factual errors, so wary readers may want to know if generative AI tools have been used to ghostwrite news articles or other sources before trusting them.

What can teachers and consumers do? Existing tools to detect AI-generated text sometimes do poorly on data that differs from what they were trained on. In addition, if these tools falsely classify real human writing as AI-generated, they can jeopardize students whose genuine work is called into question.

Our recent paper introduces Ghostbuster, a state-of-the-art method for detecting AI-generated text. Ghostbuster works by finding the probability of generating each token in a document under several weaker language models, then combining functions based on these probabilities as input to a final classifier. Ghostbuster doesn’t need to know what model was used to generate a document, nor the probability of generating the document under that specific model. This property makes Ghostbuster particularly useful for detecting text potentially generated by an unknown model or a black-box model, such as the popular commercial models ChatGPT and Claude, for which probabilities aren’t available. We’re particularly interested in ensuring that Ghostbuster generalizes well, so we evaluated across a range of ways that text could be generated, including different domains (using newly collected datasets of essays, news, and stories), language models, or prompts.
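The pipeline described above can be sketched roughly as follows. This is a toy illustration, not the Ghostbuster implementation: two tiny add-one-smoothed n-gram models stand in for the "weaker language models", and the summary statistics (mean, min, max, and a cross-model difference) are our own illustrative choice of features. All function names are hypothetical. The resulting vector is what a final classifier would be trained on.

```python
import math
from collections import Counter


def train_unigram(tokens):
    """Add-one smoothed unigram model; returns p(tok | prev), ignoring prev."""
    counts = Counter(tokens)
    total, vocab = len(tokens), len(counts) + 1  # +1 slot for unseen tokens
    return lambda prev, tok: (counts[tok] + 1) / (total + vocab)


def train_bigram(tokens):
    """Add-one smoothed bigram model; returns p(tok | prev)."""
    pair_counts = Counter(zip(tokens, tokens[1:]))
    prev_counts = Counter(tokens[:-1])
    vocab = len(set(tokens)) + 1
    return lambda prev, tok: (pair_counts[(prev, tok)] + 1) / (prev_counts[prev] + vocab)


def token_log_probs(model, tokens):
    """Log-probability of each token (after the first) under one model."""
    return [math.log(model(prev, tok)) for prev, tok in zip(tokens, tokens[1:])]


def featurize(tokens, models):
    """Combine per-token probabilities from two models into one feature vector."""
    per_model = [token_log_probs(m, tokens) for m in models]
    features = []
    for log_probs in per_model:
        features += [sum(log_probs) / len(log_probs), min(log_probs), max(log_probs)]
    # One cross-model feature: average gap between the two models' log-probabilities.
    gaps = [a - b for a, b in zip(per_model[0], per_model[1])]
    features.append(sum(gaps) / len(gaps))
    return features


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the dog sat on the rug".split()
    models = [train_unigram(corpus), train_bigram(corpus)]
    document = "the dog sat on the mat".split()
    print(featurize(document, models))  # input row for a downstream classifier
```

Note that nothing here requires knowing which model produced the document, or its probabilities under that model, which is the property highlighted above.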


Samuel Pfrommer

Nov 14, 2023

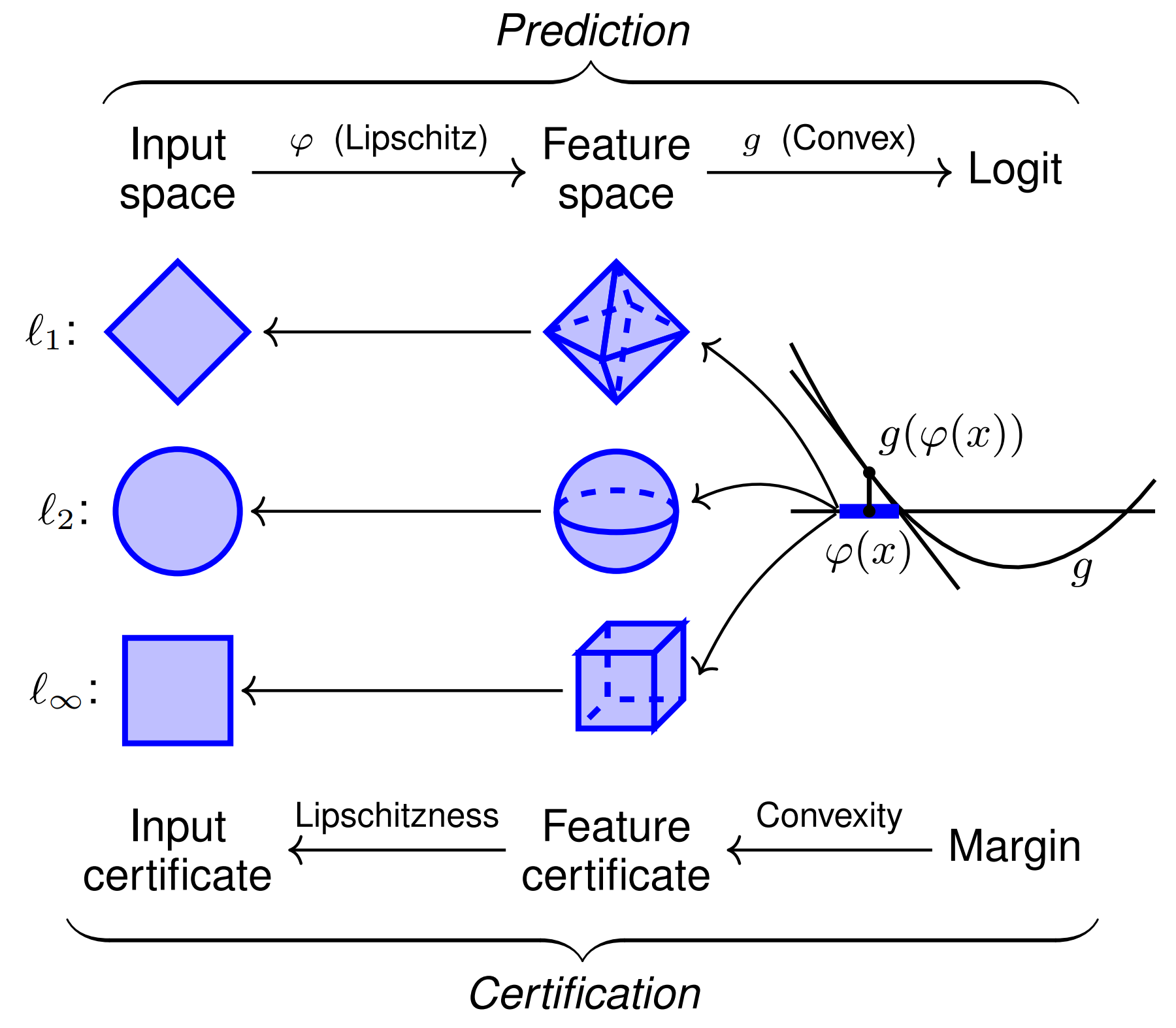

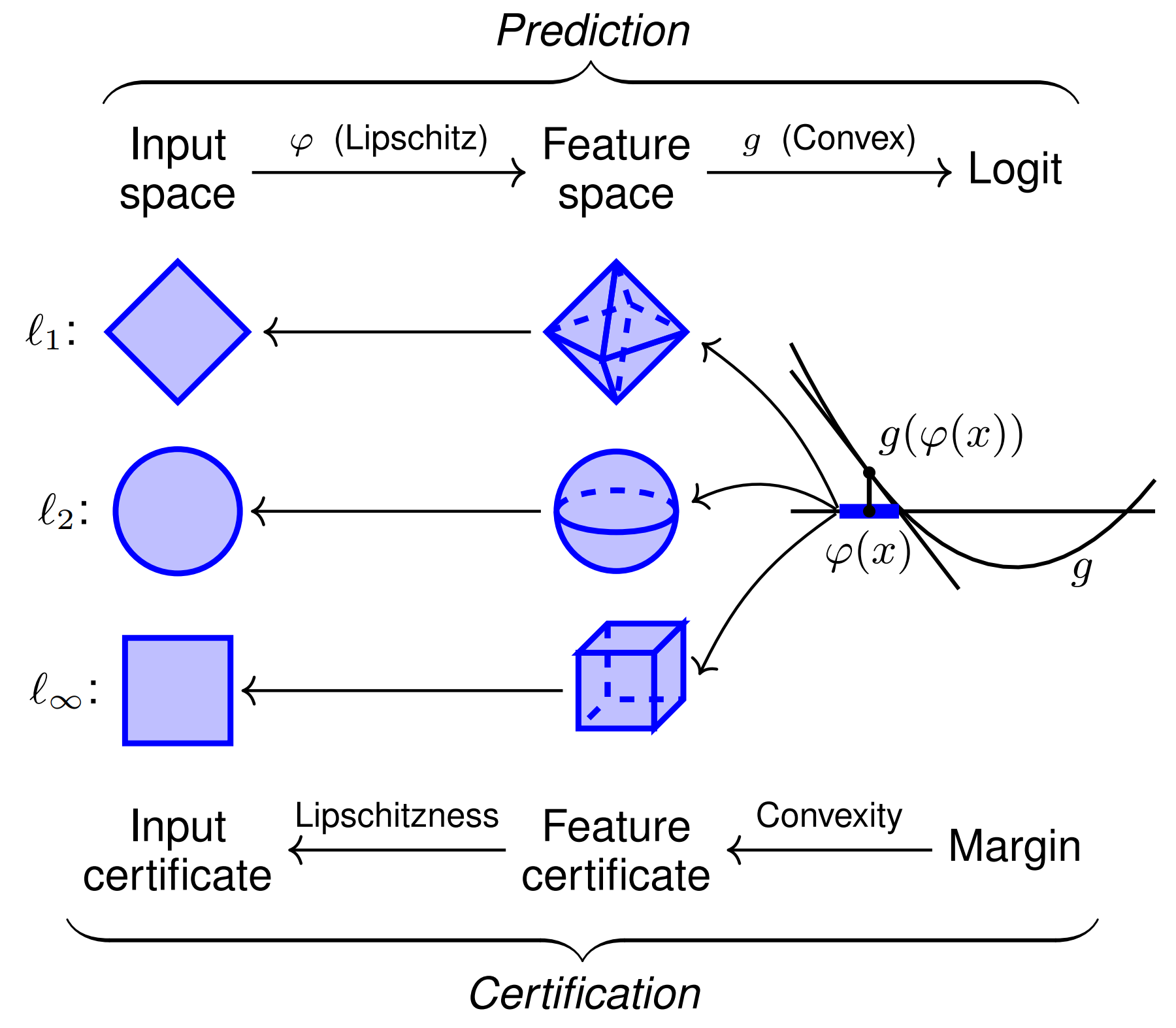

Asymmetric Certified Robustness via Feature-Convex Neural Networks

TLDR: We propose the asymmetric certified robustness problem, which requires certified robustness for only one class and reflects real-world adversarial scenarios. This focused setting allows us to introduce feature-convex classifiers, which produce closed-form and deterministic certified radii on the order of milliseconds.

Figure 1. Illustration of feature-convex classifiers and their certification for sensitive-class inputs. This architecture composes a Lipschitz-continuous feature map $\varphi$ with a learned convex function $g$. Since $g$ is convex, it is globally underapproximated by its tangent plane at $\varphi(x)$, yielding certified norm balls in the feature space. Lipschitzness of $\varphi$ then yields appropriately scaled certificates in the original input space.
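The tangent-plane argument in the caption can be worked through numerically. The sketch below is illustrative only: it assumes the sensitive class is predicted when $g(\varphi(x)) > 0$, uses the $\ell_2$ norm, takes $\varphi$ to be the identity map (Lipschitz constant 1), and replaces the learned convex function with a hand-picked convex quadratic. Because $g(y) \geq g(z) + \nabla g(z)^\top (y - z)$ for convex $g$, the output stays positive whenever the input moves by less than $g(z) / (L \, \lVert \nabla g(z) \rVert_2)$, where $z = \varphi(x)$ and $L$ is the Lipschitz constant of $\varphi$.

```python
import math


def g(z, a, w, b):
    """Convex quadratic g(z) = sum_i a_i z_i^2 + w.z + b, convex when every a_i >= 0."""
    return sum(ai * zi * zi + wi * zi for ai, wi, zi in zip(a, w, z)) + b


def grad_g(z, a, w):
    """Gradient of g at z."""
    return [2 * ai * zi + wi for ai, wi, zi in zip(a, w, z)]


def certified_radius_l2(x, phi, lipschitz, a, w, b):
    """Closed-form l2 radius within which g(phi(x)) provably stays positive."""
    z = phi(x)
    value = g(z, a, w, b)
    if value <= 0:
        return 0.0  # input is not assigned to the certified class
    grad_norm = math.sqrt(sum(gi * gi for gi in grad_g(z, a, w)))
    if grad_norm == 0:
        return math.inf  # z minimizes g and g(z) > 0, so g is positive everywhere
    return value / (lipschitz * grad_norm)


if __name__ == "__main__":
    a, w, b = [1.0, 0.5], [1.0, -1.0], 0.5  # hand-picked example parameters
    identity = lambda x: list(x)            # assumed feature map with Lipschitz constant 1
    x = [1.0, 0.0]
    print(certified_radius_l2(x, identity, 1.0, a, w, b))
```

The certificate is a single gradient evaluation and a division, which is consistent with the deterministic, closed-form radii described in the TLDR.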

Despite their widespread usage, deep learning classifiers are acutely vulnerable to adversarial examples: small, human-imperceptible image perturbations that fool machine learning models into misclassifying the modified input. This weakness severely undermines the reliability of safety-critical processes that incorporate machine learning. Many empirical defenses against adversarial perturbations have been proposed—often only to be later defeated by stronger attack strategies. We therefore focus on certifiably robust classifiers, which provide a mathematical guarantee that their prediction will remain constant for an $\ell_p$-norm ball around an input.

Conventional certified robustness methods incur a range of drawbacks, including nondeterminism, slow execution, poor scaling, and certification against only one attack norm. We argue that these issues can be addressed by refining the certified robustness problem to be more aligned with practical adversarial settings.


Andre He, Vivek Myers

Oct 17, 2023

Goal Representations for Instruction Following

A longstanding goal of the field of robot learning has been to create generalist agents that can perform tasks for humans. Natural language has the potential to be an easy-to-use interface for humans to specify arbitrary tasks, but it is difficult to train robots to follow language instructions. Approaches like language-conditioned behavioral cloning (LCBC) train policies to directly imitate expert actions conditioned on language, but require humans to annotate all training trajectories and generalize poorly across scenes and behaviors. Meanwhile, recent goal-conditioned approaches perform much better at general manipulation tasks, but do not enable easy task specification for human operators. How can we reconcile the ease of specifying tasks through LCBC-like approaches with the performance improvements of goal-conditioned learning?
